In mathematics and computer science, an algorithm is an unambiguous specification of how to solve a class of problems. Algorithms can perform calculation, data processing, automated reasoning, and other tasks.
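As a small illustration of what "unambiguous specification" means, Euclid's algorithm for the greatest common divisor can be written so that every step is fully determined by the inputs. The function name `gcd` and the choice to accept negative inputs are assumptions of this sketch.

```python
def gcd(a: int, b: int) -> int:
    """Greatest common divisor of a and b via Euclid's algorithm."""
    # Work with non-negative values so the result is never negative.
    a, b = abs(a), abs(b)
    # Repeatedly replace (a, b) with (b, a mod b) until the remainder is zero.
    while b != 0:
        a, b = b, a % b
    return a


if __name__ == "__main__":
    print(gcd(48, 18))
```

The same procedure solves every instance of the problem class (any pair of integers), which is what separates an algorithm from a one-off calculation.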

In the computer science subfield of algorithmic information theory, a Chaitin constant or halting probability is a real number that, informally speaking, represents the probability that a randomly constructed program will halt. These numbers are formed from a construction due to Gregory Chaitin.
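One common way to write the construction is shown below. The symbols are assumptions of this sketch rather than notation from the text: $F$ is a prefix-free universal computable function, $P_F$ is its domain (the set of programs on which $F$ halts), and $|p|$ is the length of program $p$ in bits.

$$
\Omega_F = \sum_{p \in P_F} 2^{-|p|}
$$

Because $P_F$ is prefix-free, the Kraft inequality guarantees that the sum is at most 1, so $\Omega_F$ can be read as the probability that a program whose bits are chosen by fair coin flips halts. Different choices of $F$ give different constants, which is why the text speaks of "these numbers" in the plural.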

Complexity characterises the behaviour of a system or model whose components interact in multiple ways and follow local rules, meaning there is no reasonable higher instruction to define the various possible interactions.

Computational complexity theory focuses on classifying computational problems according to their inherent difficulty, and relating these classes to each other. A computational problem is a task solved by a computer. A computational problem is solvable by mechanical application of mathematical steps, such as an algorithm.

The multiverse is a hypothetical group of multiple universes including the universe in which we live. Together, these universes comprise everything that exists: the entirety of space, time, matter, energy, and the physical laws and constants that describe them. The different universes within the multiverse are called "parallel universes", "other universes", or "alternate universes".

In the philosophy of mathematics, constructivism asserts that it is necessary to find (or "construct") a mathematical object to prove that it exists. In classical mathematics, one can prove the existence of a mathematical object without "finding" that object explicitly, by assuming its non-existence and then deriving a contradiction from that assumption. Such a proof by contradiction is not constructively valid. The constructive viewpoint involves a verificational interpretation of the existential quantifier, which is at odds with its classical interpretation.
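A standard illustration of a non-constructive existence proof is the claim that there are irrational numbers $a$ and $b$ with $a^b$ rational. This version relies on the law of excluded middle rather than a literal contradiction argument, but it shows the same gap between proving existence and exhibiting a witness.

$$
\text{Let } c = \sqrt{2}^{\sqrt{2}}. \quad
\begin{cases}
c \in \mathbb{Q}: & a = \sqrt{2},\ b = \sqrt{2},\ a^b = c \in \mathbb{Q}, \\
c \notin \mathbb{Q}: & a = c,\ b = \sqrt{2},\ a^b = \sqrt{2}^{\sqrt{2}\cdot\sqrt{2}} = \sqrt{2}^{2} = 2 \in \mathbb{Q}.
\end{cases}
$$

The argument establishes that a suitable pair exists but does not say which case holds, so it does not hand over a specific pair. A constructivist would not accept it as a proof of existence until one of the cases is actually decided.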

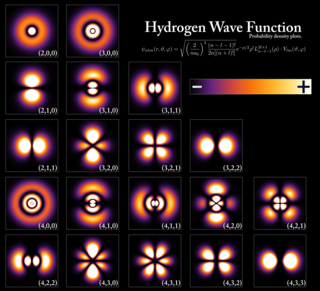

Quantum mechanics, including quantum field theory, is a fundamental theory in physics which describes nature at the smallest scales, including the energy levels of atoms and subatomic particles.

Computability theory, also known as recursion theory, is a branch of mathematical logic, of computer science, and of the theory of computation that originated in the 1930s with the study of computable functions and Turing degrees. The field has since expanded to include the study of generalized computability and definability. In these areas, recursion theory overlaps with proof theory and effective descriptive set theory.

Combinatorial game theory has several ways of measuring game complexity. This article describes five of them: state-space complexity, game tree size, decision complexity, game-tree complexity, and computational complexity.
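Two of these measures can be computed by brute force for a game as small as tic-tac-toe. The sketch below counts reachable positions (state-space complexity) and complete games (game tree size, taken here as the number of leaf nodes). The board encoding as a 9-character string and all function names are assumptions of this example.

```python
from functools import lru_cache

# The eight winning lines on a 3x3 board indexed 0..8, row by row.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board: str):
    """Return 'X' or 'O' if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != "." and board[a] == board[b] == board[c]:
            return board[a]
    return None


def successors(board: str):
    """All positions reachable in one move; empty once the game is over."""
    if winner(board) or "." not in board:
        return []
    player = "X" if board.count("X") == board.count("O") else "O"
    return [board[:i] + player + board[i + 1:]
            for i, cell in enumerate(board) if cell == "."]


def state_space_size(start: str) -> int:
    """Number of distinct positions reachable from the start position."""
    seen = {start}
    stack = [start]
    while stack:
        for nxt in successors(stack.pop()):
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return len(seen)


@lru_cache(maxsize=None)
def game_tree_leaves(board: str) -> int:
    """Number of distinct complete games that can follow this position."""
    nxt = successors(board)
    if not nxt:
        return 1
    return sum(game_tree_leaves(n) for n in nxt)


if __name__ == "__main__":
    empty = "." * 9
    print("reachable positions:", state_space_size(empty))
    print("complete games:", game_tree_leaves(empty))
```

The game tree is much larger than the state space because the same position can be reached through many different move orders. For larger games such as chess or Go neither count is feasible by enumeration, and the figures are estimated instead.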

Theoretical computer science (TCS) is a subset of general computer science and mathematics that focuses on more mathematical topics of computing and includes the theory of computation.

In algorithmic information theory, algorithmic probability, also known as Solomonoff probability, is a mathematical method of assigning a prior probability to a given observation. It was invented by Ray Solomonoff in the 1960s. It is used in inductive inference theory and in the analysis of algorithms. In his general theory of inductive inference, Solomonoff uses the prior obtained by this method in Bayes' rule for prediction.
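One common formulation of the prior, and of its use in Bayes' rule, is sketched below. The notation is an assumption of this sketch: $U$ is a universal monotone (prefix) machine, $|p|$ is the length of program $p$ in bits, and $U(p) = x*$ means that the output of $p$ begins with the string $x$.

$$
m(x) = \sum_{p \,:\, U(p) = x*} 2^{-|p|},
\qquad
P(x_{n+1} \mid x_1 \ldots x_n) = \frac{m(x_1 \ldots x_n x_{n+1})}{m(x_1 \ldots x_n)}
$$

Short programs contribute the most weight, so observations with short descriptions receive a high prior probability. The quantity $m$ is not computable and can only be approximated in practice.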

In physics and cosmology, digital physics is a collection of theoretical perspectives based on the premise that the universe is describable by information. It is a form of digital ontology about physical reality. According to this theory, the universe can be conceived of as the output of a deterministic or probabilistic computer program, as a vast digital computation device, or as mathematically isomorphic to such a device.

Ray Solomonoff's theory of universal inductive inference is a theory of prediction based on logical observations, such as predicting the next symbol based upon a given series of symbols. The only assumption that the theory makes is that the environment follows some unknown but computable probability distribution. It is a mathematical formalization of Occam's razor and the Principle of Multiple Explanations.
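The full theory is uncomputable, but its flavour can be shown with a toy analogue. The sketch below is not Solomonoff induction itself: it swaps the space of all programs for a small, hypothetical hypothesis class (binary patterns of bounded length that repeat forever) and weights each pattern by $2^{-\text{length}}$, so that shorter explanations count for more. All names and the `max_len` parameter are assumptions of this example.

```python
from fractions import Fraction
from itertools import product


def hypotheses(max_len: int):
    """Yield every binary pattern of length 1..max_len (each repeats forever)."""
    for n in range(1, max_len + 1):
        for bits in product("01", repeat=n):
            yield "".join(bits)


def predict_next(observed: str, max_len: int = 4) -> dict:
    """Posterior probability of the next symbol under the toy mixture.

    Every hypothesis consistent with the data is kept (multiple explanations),
    and each is weighted by 2**-len(pattern) (a simple Occam-style prior).
    """
    weights = {"0": Fraction(0), "1": Fraction(0)}
    for pattern in hypotheses(max_len):
        prior = Fraction(1, 2 ** len(pattern))
        # Unroll the pattern far enough to cover the data plus one more symbol.
        repeats = len(observed) // len(pattern) + 2
        generated = (pattern * repeats)[: len(observed) + 1]
        if generated.startswith(observed):
            weights[generated[-1]] += prior
    total = weights["0"] + weights["1"]
    if total == 0:
        raise ValueError("no hypothesis in the class explains the observation")
    return {symbol: w / total for symbol, w in weights.items()}


if __name__ == "__main__":
    print(predict_next("010101"))
    print(predict_next("0"))
```

With little data the prediction leans toward the simplest consistent pattern while still giving weight to longer ones. As more symbols arrive, inconsistent hypotheses drop out and the prediction sharpens.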

Neurophilosophy or philosophy of neuroscience is the interdisciplinary study of neuroscience and philosophy that explores the relevance of neuroscientific studies to the arguments traditionally categorized as philosophy of mind. The philosophy of neuroscience attempts to clarify neuroscientific methods and results using the conceptual rigor and methods of philosophy of science.

Algorithmic information theory is a subfield of information theory and computer science that concerns itself with the relationship between computation and information. According to Gregory Chaitin, it is "the result of putting Shannon's information theory and Turing's computability theory into a cocktail shaker and shaking vigorously."

The CBOE Volatility Index, known by its ticker symbol VIX, is a popular measure of the stock market's expectation of volatility implied by S&P 500 index options. It is calculated and disseminated on a real-time basis by the Chicago Board Options Exchange (CBOE), and is commonly referred to as the fear index or the fear gauge.

Quantitative computed tomography (QCT) is a medical technique that measures bone mineral density (BMD) using a standard X-ray computed tomography (CT) scanner together with a calibration standard that converts the Hounsfield units (HU) of the CT image to bone mineral density values. Quantitative CT scans are primarily used to evaluate bone mineral density at the lumbar spine and hip.
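The conversion step can be sketched as a straight-line fit, assuming the relationship between HU and density is approximately linear over the range of interest. The phantom readings below are made-up illustrative numbers, not measurements from any real scanner or calibration phantom, and the function names are hypothetical.

```python
def fit_calibration(phantom_hu, phantom_bmd):
    """Least-squares line bmd = slope * hu + intercept from phantom inserts.

    phantom_hu:  mean HU measured in each calibration insert
    phantom_bmd: known density of each insert (e.g. in mg/cm^3)
    """
    if len(phantom_hu) != len(phantom_bmd) or len(phantom_hu) < 2:
        raise ValueError("need at least two matching calibration points")
    n = len(phantom_hu)
    mean_hu = sum(phantom_hu) / n
    mean_bmd = sum(phantom_bmd) / n
    sxx = sum((h - mean_hu) ** 2 for h in phantom_hu)
    if sxx == 0:
        raise ValueError("calibration HU values must not all be equal")
    sxy = sum((h - mean_hu) * (b - mean_bmd)
              for h, b in zip(phantom_hu, phantom_bmd))
    slope = sxy / sxx
    intercept = mean_bmd - slope * mean_hu
    return slope, intercept


def hu_to_bmd(hu, slope, intercept):
    """Convert a measured HU value to a bone mineral density estimate."""
    return slope * hu + intercept


if __name__ == "__main__":
    # Hypothetical phantom readings, for illustration only.
    slope, intercept = fit_calibration([0.0, 100.0, 200.0], [0.0, 80.0, 160.0])
    print(hu_to_bmd(150.0, slope, intercept))
```

In practice the calibration would be derived from the inserts of the specific phantom used for the scan, and the fit would be repeated whenever the scanner or acquisition settings change.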

John Vivian Tucker is a British computer scientist and expert on computability theory, also known as recursion theory. Computability theory is about what can and cannot be computed by people and machines. His work has focused on generalising the classical theory to deal with all forms of discrete/digital and continuous/analogue data, on using the generalisations as formal methods for system design, and on the interface between algorithms and physical equipment.

George Jiří Klir was a Czech-American computer scientist and professor of systems sciences at Binghamton University in Binghamton, New York.

The Annual ACM Symposium on Theory of Computing (STOC) is an academic conference in the field of theoretical computer science. STOC has been organized annually since 1969, typically in May or June; the conference is sponsored by the Association for Computing Machinery special interest group SIGACT. The acceptance rate of STOC, averaged from 1970 to 2012, is 31%; the rate in 2012 was 29%.