This article cites its sources but does not provide page references .(January 2011) (Learn how and when to remove this template message) |

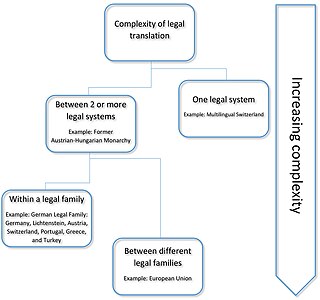

Frame-based terminology is a cognitive approach to terminology developed by Pamela Faber and colleagues at the University of Granada. One of its basic premises is that the conceptualization of any specialized domain is goal-oriented, and depends to a certain degree on the task to be accomplished. Since a major problem in modeling any domain is the fact that languages can reflect different conceptualizations and construals, texts as well as specialized knowledge resources are used to extract a set of domain concepts. Language structure is also analyzed to obtain an inventory of conceptual relations to structure these concepts.

Cognitive linguistics (CL) is an interdisciplinary branch of linguistics, combining knowledge and research from both psychology and linguistics. It describes how language interacts with cognition, how language forms our thoughts, and the evolution of language parallel with the change in the common mindset across time.

Terminology is the study of terms and their use. Terms are words and compound words or multi-word expressions that in specific contexts are given specific meanings—these may deviate from the meanings the same words have in other contexts and in everyday language. Terminology is a discipline that studies, among other things, the development of such terms and their interrelationships within a specialized domain. Terminology differs from lexicography, as it involves the study of concepts, conceptual systems and their labels (terms), whereas lexicography studies words and their meanings.

Pamela Faber Benítez is an American/Spanish linguist. She holds the Chair of Translation and Interpreting at the Department of Translation and Interpreting of the University of Granada since 2001. She received her Ph.D. from the University of Granada in 1986 and also holds degrees from the University of North Carolina at Chapel Hill and Paris-Sorbonne University.

As its name implies, frame-based terminology uses certain aspects of frame semantics to structure specialized domains and create non-language-specific representations. Such configurations are the conceptual meaning underlying specialized texts in different languages, and thus facilitate specialized knowledge acquisition.

Frame semantics is a theory of linguistic meaning developed by Charles J. Fillmore that extends his earlier case grammar. It relates linguistic semantics to encyclopedic knowledge. The basic idea is that one cannot understand the meaning of a single word without access to all the essential knowledge that relates to that word. For example, one would not be able to understand the word "sell" without knowing anything about the situation of commercial transfer, which also involves, among other things, a seller, a buyer, goods, money, the relation between the money and the goods, the relations between the seller and the goods and the money, the relation between the buyer and the goods and the money and so on.

Frame-based terminology focuses on:

- conceptual organization;

- the multidimensional nature of terminological units; and

- the extraction of semantic and syntactic information through the use of multilingual corpora.

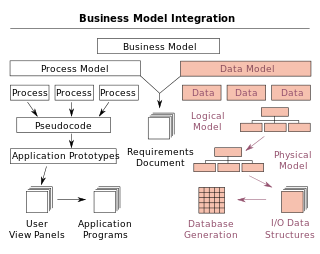

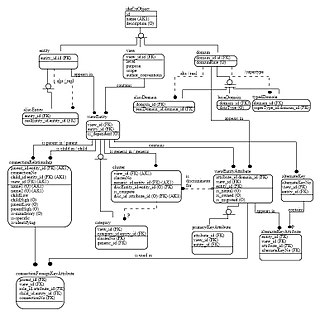

In frame-based terminology, conceptual networks are based on an underlying domain event, which generates templates for the actions and processes that take place in the specialized field as well as the entities that participate in them.

As a result, knowledge extraction is largely text-based. The terminological entries are composed of information from specialized texts as well as specialized language resources. Knowledge is configured and represented in a dynamic conceptual network that is capable of adapting to new contexts. At the most general level, generic roles of agent, patient, result, and instrument are activated by basic predicate meanings such as make, do, affect, use, become, etc. which structure the basic meanings in specialized texts. From a linguistic perspective, Aktionsart distinctions in texts are based on Van Valin's classification of predicate types. At the more specific levels of the network, the qualia structure of the generative lexicon is used as a basis for the systematic classification and relation of nominal entities.

The lexical aspect or aktionsart of a verb is a part of the way in which that verb is structured in relation to time. Any event, state, process, or action which a verb expresses—collectively, any eventuality—may also be said to have the same lexical aspect. Lexical aspect is distinguished from grammatical aspect: lexical aspect is an inherent property of a (semantic) eventuality, whereas grammatical aspect is a property of a realization. Lexical aspect is invariant, while grammatical aspect can be changed according to the whims of the speaker.

Generative Lexicon (GL) is a theory of linguistic semantics which focuses on the distributed nature of compositionality in natural language. The first major work outlining the framework is James Pustejovsky's "Generative Lexicon" (1991). Subsequent important developments are presented in Pustejovsky and Boguraev (1993), Bouillon (1997), and Busa (1996). The first unified treatment of GL was given in Pustejovsky (1995). Unlike purely verb-based approaches to compositionality, Generative Lexicon attempts to spread the semantic load across all constituents of the utterance. Central to the philosophical perspective of GL are two major lines of inquiry: (1) How is it that we are able to deploy a finite number of words in our language in an unbounded number of contexts? (2) Is lexical information and the representations used in composing meanings separable from our commonsense knowledge?

The methodology of frame-based terminology derives the conceptual system of the domain by means of an integrated top-down and bottom-up approach. The bottom-up approach consists of extracting information from a corpus of texts in various languages, specifically related to the domain. The top-down approach includes the information provided by specialized dictionaries and other reference material, complemented by the help of experts in the field.

In linguistics, a corpus or text corpus is a large and structured set of texts. In corpus linguistics, they are used to do statistical analysis and hypothesis testing, checking occurrences or validating linguistic rules within a specific language territory.

In a parallel way, the underlying conceptual framework of a knowledge-domain event is specified. The most generic or base-level categories of a domain are configured in a prototypical domain event or action-environment interface. This provides a template applicable to all levels of information structuring. In this way a structure is obtained which facilitates and enhances knowledge acquisition since the information in term entries is internally as well as externally coherent.