In computer engineering, a load/store architecture is an instruction set architecture that divides instructions into two categories: memory access (load and store between memory and registers), and ALU operations (which only occur between registers). [1] :9-12

Computer engineering is a branch of engineering that integrates several fields of computer science and electronic engineering required to develop computer hardware and software. Computer engineers usually have training in electronic engineering, software design, and hardware-software integration instead of only software engineering or electronic engineering. Computer engineers are involved in many hardware and software aspects of computing, from the design of individual microcontrollers, microprocessors, personal computers, and supercomputers, to circuit design. This field of engineering not only focuses on how computer systems themselves work but also how they integrate into the larger picture.

An instruction set architecture (ISA) is an abstract model of a computer. It is also referred to as architecture or computer architecture. A realization of an ISA is called an implementation. An ISA permits multiple implementations that may vary in performance, physical size, and monetary cost ; because the ISA serves as the interface between software and hardware. Software that has been written for an ISA can run on different implementations of the same ISA. This has enabled binary compatibility between different generations of computers to be easily achieved, and the development of computer families. Both of these developments have helped to lower the cost of computers and to increase their applicability. For these reasons, the ISA is one of the most important abstractions in computing today.

In computer architecture, a processor register is a quickly accessible location available to a computer's central processing unit (CPU). Registers usually consist of a small amount of fast storage, although some registers have specific hardware functions, and may be read-only or write-only. Registers are typically addressed by mechanisms other than main memory, but may in some cases be assigned a memory address e.g. DEC PDP-10, ICT 1900.

RISC instruction set architectures such as PowerPC, SPARC, RISC-V, ARM, and MIPS are load/store architectures. [1] :9-12

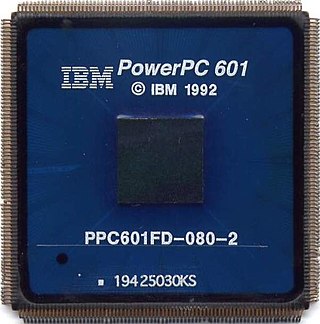

PowerPC is a reduced instruction set computing (RISC) instruction set architecture (ISA) created by the 1991 Apple–IBM–Motorola alliance, known as AIM. PowerPC, as an evolving instruction set, has since 2006 been named Power ISA, while the old name lives on as a trademark for some implementations of Power Architecture-based processors.

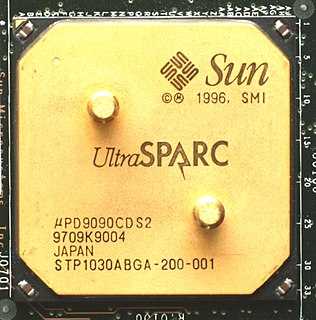

SPARC is a reduced instruction set computing (RISC) instruction set architecture (ISA) originally developed by Sun Microsystems. Its design was strongly influenced by the experimental Berkeley RISC system developed in the early 1980s. First released in 1987, SPARC was one of the most successful early commercial RISC systems, and its success led to the introduction of similar RISC designs from a number of vendors through the 1980s and 90s.

RISC-V is an open-source hardware instruction set architecture (ISA) based on established reduced instruction set computer (RISC) principles.

For instance, in a load/store approach both operands and destination for an ADD operation must be in registers. This differs from a register memory architecture (for example, a CISC instruction set architecture such as x86) in which one of the operands for the ADD operation may be in memory, while the other is in a register. [1] :9-12

In computer engineering, a register–memory architecture is an instruction set architecture that allows operations to be performed on memory, as well as registers. If the architecture allows all operands to be in memory or in registers, or in combinations, it is called a "register plus memory" architecture.

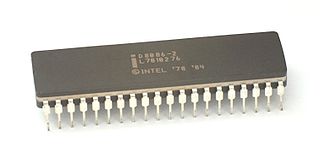

x86 is a family of instruction set architectures based on the Intel 8086 microprocessor and its 8088 variant. The 8086 was introduced in 1978 as a fully 16-bit extension of Intel's 8-bit 8080 microprocessor, with memory segmentation as a solution for addressing more memory than can be covered by a plain 16-bit address. The term "x86" came into being because the names of several successors to Intel's 8086 processor end in "86", including the 80186, 80286, 80386 and 80486 processors.

The earliest example of a load/store architecture was the CDC 6600. [1] :54-56 Almost all vector processors (including many GPUs [2] ) use the load/store approach. [3]

The CDC 6600 was the flagship of the 6000 series of mainframe computer systems manufactured by Control Data Corporation. Generally considered to be the first successful supercomputer, it outperformed the industry's prior recordholder, the IBM 7030 Stretch, by a factor of three. With performance of up to three megaFLOPS, the CDC 6600 was the world's fastest computer from 1964 to 1969, when it relinquished that status to its successor, the CDC 7600.

In computing, a vector processor or array processor is a central processing unit (CPU) that implements an instruction set containing instructions that operate on one-dimensional arrays of data called vectors, compared to the scalar processors, whose instructions operate on single data items. Vector processors can greatly improve performance on certain workloads, notably numerical simulation and similar tasks. Vector machines appeared in the early 1970s and dominated supercomputer design through the 1970s into the 1990s, notably the various Cray platforms. The rapid fall in the price-to-performance ratio of conventional microprocessor designs led to the vector supercomputer's demise in the later 1990s.