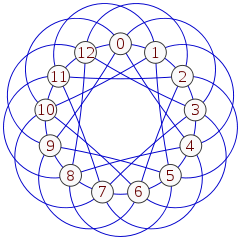

In graph theory, an expander graph is a sparse graph that has strong connectivity properties, quantified using vertex, edge or spectral expansion. Expander constructions have spawned research in pure and applied mathematics, with several applications to complexity theory, design of robust computer networks, and the theory of error-correcting codes.
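One of the expansion measures mentioned above, edge expansion, can be computed by brute force on very small graphs. The following is a minimal sketch, assuming the common definition h(G) = min |∂S| / |S| over nonempty vertex sets S with |S| ≤ n/2, where ∂S is the set of edges leaving S. The function name `edge_expansion` and the two example graphs are illustrative and not taken from the text.

```python
from itertools import combinations

def edge_expansion(adj):
    """Brute-force edge expansion of a small undirected graph.

    adj maps each vertex to the set of its neighbours. The search is
    exponential in the number of vertices, so it is only a toy check.
    """
    vertices = list(adj)
    best = float("inf")
    for size in range(1, len(vertices) // 2 + 1):
        for subset in combinations(vertices, size):
            s = set(subset)
            # Count edges with exactly one endpoint inside s.
            boundary = sum(1 for u in s for v in adj[u] if v not in s)
            best = min(best, boundary / size)
    return best

# Illustrative graphs: a 6-cycle (sparse, weak expansion) and the
# complete graph K4 (strong expansion, but not sparse).
cycle6 = {i: {(i - 1) % 6, (i + 1) % 6} for i in range(6)}
k4 = {i: {j for j in range(4) if j != i} for i in range(4)}

print(edge_expansion(cycle6))
print(edge_expansion(k4))
```

The cycle expands poorly because an arc of three consecutive vertices has only two outgoing edges, whereas every small set in the complete graph has many. Expander families aim for expansion bounded away from zero while keeping the degree constant.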

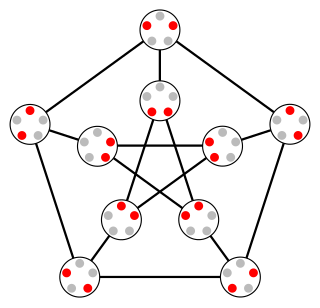

In graph theory, a regular graph is a graph where each vertex has the same number of neighbors; i.e. every vertex has the same degree or valency. A regular directed graph must also satisfy the stronger condition that the indegree and outdegree of each internal vertex are equal to each other. A regular graph with vertices of degree k is called a k‑regular graph or regular graph of degree k. Also, from the handshaking lemma, a regular graph contains an even number of vertices with odd degree.
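As a small illustration of the definition, the sketch below checks whether an undirected graph, stored as a dictionary of neighbour sets, is regular. The helper name `regular_degree` and the example graphs are hypothetical.

```python
def regular_degree(adj):
    """Return k if every vertex has degree k, otherwise None."""
    degrees = {len(neighbours) for neighbours in adj.values()}
    return degrees.pop() if len(degrees) == 1 else None

# Illustrative graphs: a 4-cycle (2-regular) and a 3-vertex path (not regular).
cycle4 = {0: {1, 3}, 1: {0, 2}, 2: {1, 3}, 3: {2, 0}}
path3 = {0: {1}, 1: {0, 2}, 2: {1}}

print(regular_degree(cycle4))
print(regular_degree(path3))
```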

In physical science and mathematics, the Legendre functions $P_\lambda$, $Q_\lambda$ and associated Legendre functions $P_\lambda^\mu$, $Q_\lambda^\mu$, and Legendre functions of the second kind, $Q_n$, are all solutions of Legendre's differential equation. The Legendre polynomials and the associated Legendre polynomials are also solutions of the differential equation in special cases, which, by virtue of being polynomials, have a large number of additional properties, mathematical structure, and applications. For these polynomial solutions, see the separate Wikipedia articles.

In graph theory and computer science, an adjacency matrix is a square matrix used to represent a finite graph. The elements of the matrix indicate whether pairs of vertices are adjacent or not in the graph.
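A minimal sketch of building such a matrix for a simple undirected graph, assuming vertices are numbered 0 to n-1 and the graph is given as an edge list. The function name and the example graph are illustrative.

```python
def adjacency_matrix(n, edges):
    """Build the n x n adjacency matrix of a simple undirected graph."""
    matrix = [[0] * n for _ in range(n)]
    for u, v in edges:
        matrix[u][v] = 1
        matrix[v][u] = 1  # undirected graph: the matrix is symmetric
    return matrix

# Illustrative graph: a triangle on 0, 1, 2 with an extra edge from 2 to 3.
edges = [(0, 1), (1, 2), (2, 0), (2, 3)]
A = adjacency_matrix(4, edges)
for row in A:
    print(row)
```

The sum of row i is the degree of vertex i, which is why regularity and related properties can be read off from the matrix.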

In mathematics, spectral graph theory is the study of the properties of a graph in relationship to the characteristic polynomial, eigenvalues, and eigenvectors of matrices associated with the graph, such as its adjacency matrix or Laplacian matrix.

In quantum field theory, a quartic interaction is a type of self-interaction in a scalar field. Other types of quartic interactions may be found under the topic of four-fermion interactions. A classical free scalar field satisfies the Klein–Gordon equation. If a scalar field is denoted $\varphi$, a quartic interaction is represented by adding a potential energy term $(\lambda/4!)\varphi^4$ to the Lagrangian density. The coupling constant $\lambda$ is dimensionless in 4-dimensional spacetime.

In mathematics, the discrete Laplace operator is an analog of the continuous Laplace operator, defined so that it has meaning on a graph or a discrete grid. For the case of a finite-dimensional graph, the discrete Laplace operator is more commonly called the Laplacian matrix.
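For a simple undirected graph, the Laplacian matrix is commonly defined as L = D - A, where A is the adjacency matrix and D is the diagonal matrix of vertex degrees. The sketch below assumes that convention (sign conventions vary between sources); the function name is illustrative.

```python
def laplacian_matrix(adjacency):
    """Return L = D - A for a simple undirected graph given by its adjacency matrix."""
    n = len(adjacency)
    laplacian = [[-adjacency[i][j] for j in range(n)] for i in range(n)]
    for i in range(n):
        laplacian[i][i] = sum(adjacency[i])  # degree of vertex i on the diagonal
    return laplacian

# Illustrative graph: a path on three vertices, 0 - 1 - 2.
path_adjacency = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
L = laplacian_matrix(path_adjacency)
for row in L:
    print(row)
```

Every row of L sums to zero, which mirrors the fact that the continuous Laplace operator annihilates constant functions.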

In statistical mechanics and mathematics, the Bethe lattice is an infinite connected cycle-free graph where all vertices have the same number of neighbors. The Bethe lattice was introduced into the physics literature by Hans Bethe in 1935. In such a graph, each node is connected to z neighbors; the number z is called either the coordination number or the degree, depending on the field.

In linear algebra, an eigenvector or characteristic vector of a linear transformation is a nonzero vector that changes at most by a constant factor when that linear transformation is applied to it. The corresponding eigenvalue, often represented by λ, is the multiplying factor.
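The defining relation Av = λv can be checked directly for a small matrix. The sketch below uses a hypothetical 2 × 2 symmetric matrix; the helper names are illustrative.

```python
def mat_vec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]

def is_eigenpair(matrix, vector, eigenvalue, tol=1e-9):
    """Check that vector is nonzero and matrix @ vector == eigenvalue * vector."""
    if all(abs(x) <= tol for x in vector):
        return False  # the zero vector is excluded by definition
    image = mat_vec(matrix, vector)
    return all(abs(y - eigenvalue * x) <= tol for y, x in zip(image, vector))

M = [[2, 1], [1, 2]]
print(is_eigenpair(M, [1, 1], 3))   # M scales (1, 1) by 3
print(is_eigenpair(M, [1, -1], 1))  # M leaves (1, -1) unchanged
print(is_eigenpair(M, [1, 0], 2))   # (1, 0) is not an eigenvector of M
```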

In probability theory and statistics, the noncentral chi-squared distribution is a noncentral generalization of the chi-squared distribution. It often arises in the power analysis of statistical tests in which the null distribution is a chi-squared distribution; important examples of such tests are the likelihood-ratio tests.

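In probability theory and statistics, the generalized inverse Gaussian distribution (GIG) is a three-parameter family of continuous probability distributions with probability density function

$$f(x) = \frac{(a/b)^{p/2}}{2K_p(\sqrt{ab})}\, x^{p-1} e^{-(ax + b/x)/2}, \qquad x > 0,$$

where $K_p$ is a modified Bessel function of the second kind, $a > 0$, $b > 0$, and $p$ is a real parameter.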

In graph theory, the Kneser graph $K(n, k)$ (alternatively $KG_{n,k}$) is the graph whose vertices correspond to the k-element subsets of a set of n elements, and where two vertices are adjacent if and only if the two corresponding sets are disjoint. Kneser graphs are named after Martin Kneser, who first investigated them in 1956.
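The definition translates almost directly into code. The following sketch builds the Kneser graph as a dictionary of neighbour sets; the function name `kneser_graph` is illustrative. The example uses n = 5 and k = 2, which gives the Petersen graph.

```python
from itertools import combinations

def kneser_graph(n, k):
    """Return the Kneser graph K(n, k) as a dict: vertex -> set of neighbours.

    Vertices are the k-element subsets of {0, ..., n-1}, stored as frozensets.
    """
    vertices = [frozenset(c) for c in combinations(range(n), k)]
    adj = {v: set() for v in vertices}
    for u, v in combinations(vertices, 2):
        if u.isdisjoint(v):  # adjacent exactly when the subsets are disjoint
            adj[u].add(v)
            adj[v].add(u)
    return adj

petersen = kneser_graph(5, 2)
num_edges = sum(len(nbrs) for nbrs in petersen.values()) // 2
print(len(petersen), num_edges)
```

Each vertex of K(5, 2) has exactly three neighbours, because a 2-element subset of a 5-element set is disjoint from the 2-element subsets of the remaining three elements.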

In probability theory, the inverse Gaussian distribution is a two-parameter family of continuous probability distributions with support on (0,∞).

In graph theory, a graph is said to be a pseudorandom graph if it obeys certain properties that random graphs obey with high probability. There is no concrete definition of graph pseudorandomness, but there are many reasonable characterizations of pseudorandomness one can consider.

The expander mixing lemma intuitively states that the edges of certain d-regular graphs are evenly distributed throughout the graph. In particular, the number of edges between two vertex subsets S and T is always close to the expected number of edges between them in a random d-regular graph on n vertices, namely d|S||T|/n.
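A numerical illustration may help. The sketch below assumes the standard quantitative form of the lemma, which the summary above does not spell out: |e(S, T) - d|S||T|/n| ≤ λ√(|S||T|), where e(S, T) counts ordered pairs (s, t) of adjacent vertices with s in S and t in T, and λ is the largest absolute value of a nontrivial adjacency eigenvalue. The Petersen graph (d = 3, n = 10, λ = 2) serves as the test graph; all function names are illustrative.

```python
import math
import random
from itertools import combinations

def petersen_graph():
    """Petersen graph: vertices are 2-subsets of {0..4}, adjacent when disjoint."""
    verts = list(combinations(range(5), 2))
    return {v: [u for u in verts if not set(u) & set(v)] for v in verts}

def edges_between(adj, s, t):
    """Count ordered pairs (x, y) with x in s, y in t and x adjacent to y."""
    return sum(1 for x in s for y in adj[x] if y in t)

def worst_mixing_ratio(adj, d, lam, trials=500, seed=0):
    """Largest observed |e(S,T) - d|S||T|/n| / (lam * sqrt(|S||T|)) over random S, T."""
    rng = random.Random(seed)
    verts = list(adj)
    n = len(verts)
    worst = 0.0
    for _ in range(trials):
        s = set(rng.sample(verts, rng.randint(1, n)))
        t = set(rng.sample(verts, rng.randint(1, n)))
        deviation = abs(edges_between(adj, s, t) - d * len(s) * len(t) / n)
        worst = max(worst, deviation / (lam * math.sqrt(len(s) * len(t))))
    return worst

adj = petersen_graph()
ratio = worst_mixing_ratio(adj, d=3, lam=2.0)
print(ratio <= 1.0)
```

A ratio of at most 1 for every sampled pair is what the lemma predicts. Random sampling only spot-checks the inequality and does not prove it.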

In the mathematical discipline of graph theory, the expander walk sampling theorem intuitively states that sampling vertices in an expander graph by taking a relatively short random walk can simulate sampling the vertices independently from a uniform distribution. The earliest version of this theorem is due to Ajtai, Komlós & Szemerédi (1987), and the more general version is typically attributed to Gillman (1998).
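The idea can be illustrated empirically. The sketch below runs a random walk on the Petersen graph, used here as a stand-in for a small expander, and measures the fraction of steps spent inside a fixed vertex subset. Independent uniform sampling would give a fraction close to the subset's density. The graph, subset, walk length, and function names are assumptions made for the illustration, and the simulation does not reproduce the theorem's quantitative bounds.

```python
import random
from itertools import combinations

def petersen_graph():
    """Petersen graph: vertices are 2-subsets of {0..4}, adjacent when disjoint."""
    verts = list(combinations(range(5), 2))
    return {v: [u for u in verts if not set(u) & set(v)] for v in verts}

def walk_fraction(adj, subset, steps, seed=0):
    """Fraction of random-walk steps that land in subset."""
    rng = random.Random(seed)
    current = rng.choice(list(adj))  # uniformly random starting vertex
    hits = 0
    for _ in range(steps):
        current = rng.choice(adj[current])  # move to a uniformly random neighbour
        hits += current in subset
    return hits / steps

adj = petersen_graph()
subset = set(list(adj)[:5])  # half of the ten vertices, so density 0.5
fraction = walk_fraction(adj, subset, steps=20000)
print(abs(fraction - 0.5) < 0.05)
```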

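In physics and mathematics, the solid harmonics are solutions of the Laplace equation in spherical polar coordinates, assumed to be (smooth) functions $\mathbb{R}^3 \to \mathbb{C}$. There are two kinds: the regular solid harmonics, which are well-defined at the origin, and the irregular solid harmonics, which are singular at the origin. Both sets of functions play an important role in potential theory, and are obtained by rescaling spherical harmonics appropriately:

$$R_\ell^m(\mathbf{r}) \equiv \sqrt{\frac{4\pi}{2\ell+1}}\; r^\ell\, Y_\ell^m(\theta,\varphi), \qquad I_\ell^m(\mathbf{r}) \equiv \sqrt{\frac{4\pi}{2\ell+1}}\; \frac{Y_\ell^m(\theta,\varphi)}{r^{\ell+1}}.$$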

In mathematics, the spectral theory of ordinary differential equations is the part of spectral theory concerned with the determination of the spectrum and eigenfunction expansion associated with a linear ordinary differential equation. In his dissertation, Hermann Weyl generalized the classical Sturm–Liouville theory on a finite closed interval to second order differential operators with singularities at the endpoints of the interval, possibly semi-infinite or infinite. Unlike the classical case, the spectrum may no longer consist of just a countable set of eigenvalues, but may also contain a continuous part. In this case the eigenfunction expansion involves an integral over the continuous part with respect to a spectral measure, given by the Titchmarsh–Kodaira formula. The theory was put in its final simplified form for singular differential equations of even degree by Kodaira and others, using von Neumann's spectral theorem. It has had important applications in quantum mechanics, operator theory and harmonic analysis on semisimple Lie groups.

In the mathematical theory of random matrices, the Marchenko–Pastur distribution, or Marchenko–Pastur law, describes the asymptotic behavior of singular values of large rectangular random matrices. The theorem is named after the Soviet mathematicians Vladimir Marchenko and Leonid Pastur, who proved this result in 1967.

In spectral graph theory, the Alon–Boppana bound provides a lower bound on the second-largest eigenvalue of the adjacency matrix of a d-regular graph, meaning a graph in which every vertex has degree d. The reason for the interest in the second-largest eigenvalue is that the largest eigenvalue is guaranteed to be d due to d-regularity, with the all-ones vector being the associated eigenvector. The graphs that come close to meeting this bound are Ramanujan graphs, which are examples of the best possible expander graphs.
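In its usual asymptotic form, which is not stated in the summary above, the bound reads $\lambda_2 \ge 2\sqrt{d-1} - o(1)$ as the number of vertices grows. A d-regular graph is called Ramanujan when every nontrivial eigenvalue has absolute value at most $2\sqrt{d-1}$. The sketch below checks that condition for the Petersen graph with a projected power iteration. It assumes a connected, non-bipartite regular graph whose largest nontrivial eigenvalue in absolute value occurs with a single sign; the function names are illustrative.

```python
import math
import random
from itertools import combinations

def petersen_graph():
    """Petersen graph: vertices are 2-subsets of {0..4}, adjacent when disjoint."""
    verts = list(combinations(range(5), 2))
    return {v: [u for u in verts if not set(u) & set(v)] for v in verts}

def max_nontrivial_eigenvalue(adj, iterations=200, seed=0):
    """Estimate max |eigenvalue| of the adjacency matrix off the all-ones direction."""
    rng = random.Random(seed)
    verts = list(adj)
    x = {v: rng.gauss(0.0, 1.0) for v in verts}
    estimate = 0.0
    for _ in range(iterations):
        # Project out the all-ones eigenvector (eigenvalue d), then normalise.
        mean = sum(x.values()) / len(verts)
        x = {v: c - mean for v, c in x.items()}
        norm = math.sqrt(sum(c * c for c in x.values()))
        x = {v: c / norm for v, c in x.items()}
        # Apply the adjacency matrix: y = A x.
        y = {v: sum(x[u] for u in adj[v]) for v in verts}
        # Rayleigh quotient x^T A x, valid because x has unit norm.
        estimate = sum(x[v] * y[v] for v in verts)
        x = y
    return abs(estimate)

d = 3
lam = max_nontrivial_eigenvalue(petersen_graph())
ramanujan_threshold = 2 * math.sqrt(d - 1)
print(lam <= ramanujan_threshold)
```

The nontrivial adjacency eigenvalues of the Petersen graph are 1 and -2, so the estimate converges to 2, which lies below the threshold $2\sqrt{2} \approx 2.83$.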