In mathematics, the Kantorovich inequality is a particular case of the Cauchy–Schwarz inequality, which is itself a generalization of the triangle inequality.

Mathematics includes the study of such topics as quantity, structure, space, and change.

In mathematics, the Cauchy–Schwarz inequality, also known as the Cauchy–Bunyakovsky–Schwarz inequality, is a useful inequality encountered in many different settings, such as linear algebra, analysis, probability theory, vector algebra and other areas. It is considered to be one of the most important inequalities in all of mathematics.

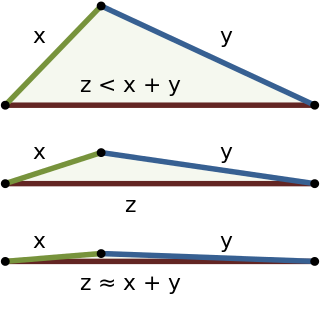

In mathematics, the triangle inequality states that for any triangle, the sum of the lengths of any two sides must be greater than or equal to the length of the remaining side. This statement permits the inclusion of degenerate triangles, but some authors, especially those writing about elementary geometry, exclude this possibility, thus leaving out the possibility of equality. If x, y, and z are the lengths of the sides of the triangle, with no side being greater than z, then the triangle inequality states that $z \leq x + y$, with equality only in the degenerate case of a triangle with zero area.

The triangle inequality states that the lengths of any two sides of a triangle, added together, are equal to or greater than the length of the third side. In simplest terms, the Kantorovich inequality translates the basic idea of the triangle inequality into the terms and notational conventions of linear programming. (See vector space, inner product, and normed vector space for other examples of how the basic ideas inherent in the triangle inequality, line segment and distance, can be generalized into a broader context.)

Linear programming is a method to achieve the best outcome in a mathematical model whose requirements are represented by linear relationships. Linear programming is a special case of mathematical programming.

A vector space is a collection of objects called vectors, which may be added together and multiplied ("scaled") by numbers, called scalars. Scalars are often taken to be real numbers, but there are also vector spaces with scalar multiplication by complex numbers, rational numbers, or generally any field. The operations of vector addition and scalar multiplication must satisfy certain requirements, called axioms.

In mathematics, a normed vector space is a vector space over the real or complex numbers, on which a norm is defined. A norm is the formalization and the generalization to real vector spaces of the intuitive notion of distance in the real world. A norm is a real-valued function defined on the vector space that has the following properties:

- The zero vector, 0, has zero length; every other vector has a positive length.

- Multiplying a vector by a positive number changes its length without changing its direction. Moreover, $\|\alpha x\| = |\alpha| \, \|x\|$ for any scalar $\alpha$.

- The triangle inequality holds. That is, taking norms as distances, the distance from point A through B to C is never shorter than going directly from A to C, or the shortest distance between any two points is a straight line.

More formally, the Kantorovich inequality can be expressed this way:

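- Let $p_i \geq 0$ and $0 < a \leq x_i \leq b$ for $i = 1, \dots, n$.

- Let $A_n = \{1, 2, \dots, n\}$.

- Then
  $$\left(\sum_{i=1}^{n} p_i x_i\right)\left(\sum_{i=1}^{n} \frac{p_i}{x_i}\right) \leq \frac{(a+b)^2}{4ab}\left(\sum_{i=1}^{n} p_i\right)^2 - \frac{(a-b)^2}{4ab} \cdot \min\left\{\left(\sum_{i \in X} p_i - \sum_{j \in Y} p_j\right)^2 : X \cup Y = A_n,\ X \cap Y = \varnothing\right\}.$$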
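As a quick numerical sanity check, the following Python sketch tests the commonly quoted form of the bound, $\left(\sum_i p_i x_i\right)\left(\sum_i p_i / x_i\right) \leq \frac{(a+b)^2}{4ab}\left(\sum_i p_i\right)^2$, for weights $p_i \geq 0$ and values $0 < a \leq x_i \leq b$. The function name `kantorovich_sides` and the sample data are illustrative assumptions, not part of any library.

```python
import random


def kantorovich_sides(p, x, a, b):
    """Return (lhs, rhs) of the classical Kantorovich bound.

    Assumes p[i] >= 0 and 0 < a <= x[i] <= b for every i.
    """
    if not (0 < a <= b):
        raise ValueError("need 0 < a <= b")
    if any(pi < 0 for pi in p) or any(not (a <= xi <= b) for xi in x):
        raise ValueError("need p_i >= 0 and a <= x_i <= b")
    weighted_sum = sum(pi * xi for pi, xi in zip(p, x))
    weighted_inverse_sum = sum(pi / xi for pi, xi in zip(p, x))
    lhs = weighted_sum * weighted_inverse_sum
    rhs = (a + b) ** 2 / (4 * a * b) * sum(p) ** 2
    return lhs, rhs


# Equal weights placed at the two endpoints a and b attain the bound.
lhs_tight, rhs_tight = kantorovich_sides([1.0, 1.0], [1.0, 2.0], a=1.0, b=2.0)

# Random trials on made-up data: count any violation of lhs <= rhs.
rng = random.Random(0)
violations = 0
for _ in range(1000):
    n = rng.randint(1, 8)
    p = [rng.random() for _ in range(n)]
    x = [rng.uniform(1.0, 2.0) for _ in range(n)]
    lhs, rhs = kantorovich_sides(p, x, a=1.0, b=2.0)
    if lhs > rhs * (1 + 1e-12):  # small slack for floating-point rounding
        violations += 1
```

With equal weights on the endpoints $x = (1, 2)$ and $a = 1$, $b = 2$, both sides evaluate to 4.5, which illustrates that the constant $\frac{(a+b)^2}{4ab}$ cannot be improved in general.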

The Kantorovich inequality is used in convergence analysis; it bounds the convergence rate of Cauchy's steepest descent.
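To make the convergence bound concrete: for the quadratic $f(x) = \tfrac{1}{2} x^\top A x$ with $A$ symmetric positive definite, steepest descent with exact line search satisfies $f(x_{k+1}) \leq \left(\frac{\kappa - 1}{\kappa + 1}\right)^2 f(x_k)$, where $\kappa = \lambda_{\max} / \lambda_{\min}$ is the condition number of $A$, and the Kantorovich inequality is the step that yields this factor. The sketch below checks the bound numerically. It assumes a diagonal $A$ purely to stay dependency-free, and the function names and starting point are hypothetical.

```python
def f(d, x):
    """Quadratic f(x) = 0.5 * x^T A x for A = diag(d)."""
    return 0.5 * sum(di * xi * xi for di, xi in zip(d, x))


def steepest_descent_step(d, x):
    """One exact-line-search steepest descent step for A = diag(d), d[i] > 0."""
    g = [di * xi for di, xi in zip(d, x)]              # gradient A x
    gg = sum(gi * gi for gi in g)                      # g^T g
    if gg == 0.0:
        return list(x)                                 # already at the minimizer
    gAg = sum(di * gi * gi for di, gi in zip(d, g))    # g^T A g
    alpha = gg / gAg                                   # exact minimizer along -g
    return [xi - alpha * gi for xi, gi in zip(x, g)]


d = [1.0, 3.0, 10.0]                   # eigenvalues of A, so kappa = 10
kappa = max(d) / min(d)
bound = ((kappa - 1) / (kappa + 1)) ** 2

x = [1.0, -2.0, 0.5]                   # arbitrary starting point
worst_ratio = 0.0
for _ in range(20):
    fx = f(d, x)
    if fx == 0.0:
        break                          # converged exactly; nothing left to compare
    x_next = steepest_descent_step(d, x)
    worst_ratio = max(worst_ratio, f(d, x_next) / fx)
    x = x_next
```

After the loop, `worst_ratio` holds the largest observed per-step reduction factor $f(x_{k+1}) / f(x_k)$, which should not exceed `bound`.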

Equivalents of the Kantorovich inequality have arisen in a number of different fields. For instance, the Cauchy–Schwarz–Bunyakovsky inequality and the Wielandt inequality are equivalent to the Kantorovich inequality and all of these are, in turn, special cases of the Hölder inequality.

The Kantorovich inequality is named after the Soviet economist and mathematician Leonid Kantorovich, a pioneer in the field of linear programming and winner of the Nobel Memorial Prize in Economic Sciences.

The Nobel Prize is a set of annual international awards bestowed in several categories by Swedish and Norwegian institutions in recognition of academic, cultural, or scientific advances.

Leonid Vitaliyevich Kantorovich was a Soviet mathematician and economist, known for his theory and development of techniques for the optimal allocation of resources. He is regarded as the founder of linear programming. He was the winner of the Stalin Prize in 1949 and the Nobel Memorial Prize in Economic Sciences in 1975.

There is also a matrix version of the Kantorovich inequality, due to Marshall and Olkin.