Derivation of the Wiener filter for system identification

Given a known input signal $s[n]$, the output of an unknown LTI system $x[n]$ can be expressed as:

$$x[n] = \sum_{k=0}^{\infty} h_k\, s[n-k] + w[n]$$

where $h_k$ are the unknown filter tap coefficients and $w[n]$ is noise.
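A minimal Python sketch of this signal model follows. It assumes a finite-length impulse response `h` in place of the infinite sum, zero input before $n = 0$, and white Gaussian noise for $w[n]$; the function name `simulate_unknown_system` and its parameters are illustrative, not taken from any library.

```python
import random


def simulate_unknown_system(s, h, noise_std=0.0, seed=0):
    """Return x[n] = sum_k h[k] * s[n-k] + w[n] for n = 0..len(s)-1.

    Assumptions: h is a finite-length stand-in for the unknown (possibly
    infinite) impulse response, s[n] = 0 for n < 0, and w[n] is white
    Gaussian noise with standard deviation noise_std.
    """
    rng = random.Random(seed)
    x = []
    for n in range(len(s)):
        # Convolution sum, skipping terms where s[n-k] would lie before the record
        acc = sum(h[k] * s[n - k] for k in range(len(h)) if n - k >= 0)
        x.append(acc + rng.gauss(0.0, noise_std))
    return x


# Noise-free check: an impulse input returns the impulse response
print(simulate_unknown_system([1.0, 0.0, 0.0, 0.0], [0.5, -0.25]))  # [0.5, -0.25, 0.0, 0.0]
```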

The model system $\hat{x}[n]$, using a Wiener filter solution of order $N$, can be expressed as:

$$\hat{x}[n] = \sum_{k=0}^{N-1} \hat{h}_k\, s[n-k]$$

where $\hat{h}_k$ are the filter tap coefficients to be determined.

The error between the model and the unknown system can be expressed as:

$$e[n] = x[n] - \hat{x}[n]$$

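The total squared error $E$ can be expressed as:

$$E = \sum_{n=-\infty}^{\infty} e[n]^2 = \sum_{n=-\infty}^{\infty} \left(x[n] - \hat{x}[n]\right)^2 = \sum_{n=-\infty}^{\infty} \left(x[n]^2 - 2x[n]\hat{x}[n] + \hat{x}[n]^2\right)$$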
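The model output and the total squared error can be sketched as follows. The infinite sum over $n$ is approximated by the finite record that is actually available, input samples before $n = 0$ are taken as zero, and `model_output` and `total_squared_error` are hypothetical helper names. The example data are made up so that `x` is produced exactly by the taps `[1.0, 0.25]`.

```python
def model_output(s, h_hat):
    """x_hat[n] = sum_{k=0}^{N-1} h_hat[k] * s[n-k], with s[n] = 0 for n < 0."""
    return [
        sum(h_hat[k] * s[n - k] for k in range(len(h_hat)) if n - k >= 0)
        for n in range(len(s))
    ]


def total_squared_error(x, s, h_hat):
    """E = sum_n (x[n] - x_hat[n])^2 over the finite record n = 0..len(x)-1."""
    x_hat = model_output(s, h_hat)
    return sum((xn - xh) ** 2 for xn, xh in zip(x, x_hat))


s = [1.0, 2.0, -1.0, 0.5]
x = [1.0, 2.25, -0.5, 0.25]  # illustrative data generated by taps [1.0, 0.25]
print(total_squared_error(x, s, [1.0, 0.25]))  # 0.0 (correct taps)
print(total_squared_error(x, s, [1.0]))        # 0.375 (missing second tap)
```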

Apply the minimum mean-square error criterion over all $n$ by setting the gradient of $E$ with respect to the coefficients to zero:

$$\nabla E = 0$$


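which is equivalent to

$$\frac{\partial E}{\partial \hat{h}_i} = 0 \quad \text{for all } i = 0, 1, 2, \ldots, N-1$$

where

$$\frac{\partial E}{\partial \hat{h}_i} = \frac{\partial}{\partial \hat{h}_i} \sum_{n=-\infty}^{\infty} \left[x[n]^2 - 2x[n]\hat{x}[n] + \hat{x}[n]^2\right]$$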

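Substitute the definition of $\hat{x}[n]$:

$$\frac{\partial E}{\partial \hat{h}_i} = \frac{\partial}{\partial \hat{h}_i} \sum_{n=-\infty}^{\infty} \left[x[n]^2 - 2x[n]\sum_{k=0}^{N-1} \hat{h}_k\, s[n-k] + \left(\sum_{k=0}^{N-1} \hat{h}_k\, s[n-k]\right)^2\right]$$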

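Distribute the partial derivative:

$$\frac{\partial E}{\partial \hat{h}_i} = \sum_{n=-\infty}^{\infty} \left[-2x[n]\, s[n-i] + 2\left(\sum_{k=0}^{N-1} \hat{h}_k\, s[n-k]\right) s[n-i]\right]$$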
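The distributed derivative, $\partial E/\partial \hat{h}_i = \sum_n \left[-2x[n]\,s[n-i] + 2\hat{x}[n]\,s[n-i]\right]$, can be checked numerically against central finite differences of $E$. This is a sketch on a finite record with $s[n] = 0$ for $n < 0$; the data values and the helper names (`analytic_gradient`, `numerical_gradient`) are arbitrary illustrations.

```python
def total_squared_error(x, s, h_hat):
    # E = sum_n (x[n] - x_hat[n])^2 over the finite record, s[n] = 0 for n < 0
    E = 0.0
    for n in range(len(x)):
        x_hat = sum(h_hat[k] * s[n - k] for k in range(len(h_hat)) if n - k >= 0)
        E += (x[n] - x_hat) ** 2
    return E


def analytic_gradient(x, s, h_hat):
    # dE/dh_i = sum_n [-2 x[n] s[n-i] + 2 x_hat[n] s[n-i]]
    grad = [0.0] * len(h_hat)
    for n in range(len(x)):
        x_hat = sum(h_hat[k] * s[n - k] for k in range(len(h_hat)) if n - k >= 0)
        for i in range(len(h_hat)):
            if n - i >= 0:
                grad[i] += -2.0 * x[n] * s[n - i] + 2.0 * x_hat * s[n - i]
    return grad


def numerical_gradient(x, s, h_hat, eps=1e-6):
    # Central finite differences; exact up to rounding because E is quadratic in h_hat
    grad = []
    for i in range(len(h_hat)):
        up = list(h_hat)
        down = list(h_hat)
        up[i] += eps
        down[i] -= eps
        diff = total_squared_error(x, s, up) - total_squared_error(x, s, down)
        grad.append(diff / (2.0 * eps))
    return grad


s = [1.0, 2.0, -1.0, 0.5, 3.0]
x = [0.3, 1.1, -0.4, 2.0, 0.7]
h_hat = [0.2, -0.5, 0.1]
print(analytic_gradient(x, s, h_hat))
print(numerical_gradient(x, s, h_hat))  # should agree to several decimal places
```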

Using the definition of discrete cross-correlation:

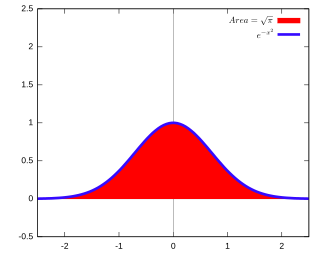
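A direct transcription of this cross-correlation definition for finite records, assuming samples outside each record are zero, might look like this (`cross_correlation` is a hypothetical name):

```python
def cross_correlation(x, y, i):
    """R_xy[i] = sum_n x[n] * y[n-i], treating samples outside 0..len-1 as zero."""
    return sum(x[n] * y[n - i] for n in range(len(x)) if 0 <= n - i < len(y))


s = [1.0, 2.0, 3.0]
print(cross_correlation(s, s, 0))   # 14.0, the energy of s
print(cross_correlation(s, s, 1))   # 8.0, from s[1]*s[0] + s[2]*s[1]
print(cross_correlation(s, s, -1))  # 8.0, autocorrelation is even in the lag
```

The zero-padding convention matters: with it, the identity $\sum_n s[n-k]\,s[n-i] = R_{ss}[i-k]$ holds exactly, which the normal equations rely on.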


Rearrange the terms:

$$R_{xs}[i] = \sum_{k=0}^{N-1} \hat{h}_k\, R_{ss}[i-k] \quad \text{for all } i = 0, 1, 2, \ldots, N-1$$

This is a system of $N$ linear equations in $N$ unknowns, which can be solved for the coefficients $\hat{h}_k$.

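The resulting coefficients of the Wiener filter can be determined by $\hat{\mathbf{h}} = \mathbf{R}_{ss}^{-1}\, \mathbf{p}_{xs}$, where $\mathbf{R}_{ss}$ is the $N \times N$ autocorrelation matrix of $s$ with entries $[\mathbf{R}_{ss}]_{ik} = R_{ss}[i-k]$, and $\mathbf{p}_{xs}$ is the cross-correlation vector between $x$ and $s$ with entries $R_{xs}[i]$.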
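The normal equations can be solved in a short sketch that builds $\mathbf{R}_{ss}$ and $\mathbf{p}_{xs}$ from the correlation definition and applies Gaussian elimination. Assumptions: the data are noise-free, and `x` is the full convolution of `s` with a hypothetical 3-tap system, so every correlation identity in the derivation holds exactly and the true taps are recovered up to rounding. The helper names are illustrative.

```python
def cross_correlation(x, y, i):
    # R_xy[i] = sum_n x[n] y[n-i], samples outside each record taken as zero
    return sum(x[n] * y[n - i] for n in range(len(x)) if 0 <= n - i < len(y))


def solve_linear(A, b):
    # Gaussian elimination with partial pivoting on the augmented matrix [A | b]
    n = len(b)
    M = [row[:] + [b_i] for row, b_i in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    sol = [0.0] * n
    for r in range(n - 1, -1, -1):
        acc = M[r][n] - sum(M[r][c] * sol[c] for c in range(r + 1, n))
        sol[r] = acc / M[r][r]
    return sol


def wiener_coefficients(x, s, N):
    # R_ss has entries R_ss[i-k]; p_xs has entries R_xs[i]; solve R_ss h = p_xs
    R = [[cross_correlation(s, s, i - k) for k in range(N)] for i in range(N)]
    p = [cross_correlation(x, s, i) for i in range(N)]
    return solve_linear(R, p)


def full_convolution(s, h):
    # x[n] = sum_k h[k] s[n-k] for every n where the result can be nonzero
    x = [0.0] * (len(s) + len(h) - 1)
    for n in range(len(s)):
        for k in range(len(h)):
            x[n + k] += h[k] * s[n]
    return x


s = [1.0, -0.5, 2.0, 0.3, -1.2, 0.8]
h_true = [0.7, -0.2, 0.1]  # hypothetical "unknown" system
x = full_convolution(s, h_true)
print(wiener_coefficients(x, s, N=3))  # approximately [0.7, -0.2, 0.1]
```

Because $\mathbf{R}_{ss}$ is symmetric Toeplitz, the Levinson-Durbin recursion can solve it in $O(N^2)$ operations; the generic $O(N^3)$ elimination is used here only because it is shorter to write.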

Derivation of the LMS algorithm

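By replacing the infinite sum of squared errors used for the Wiener filter with the squared error at time $n$ alone, the LMS algorithm can be derived. In this section the notation changes: $x[n]$ is the filter input, $d[n]$ is the desired signal (the output of the unknown system), $w_k$ are the adaptive filter weights, $y[n] = \sum_{k=0}^{N-1} w_k\, x[n-k]$ is the filter output, and $e[n] = d[n] - y[n]$ is the error.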

The squared error can be expressed as:

$$E = e[n]^2 = \left(d[n] - y[n]\right)^2$$

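Using the minimum mean-square error criterion, take the gradient with respect to the weights:

$$\frac{\partial E}{\partial \mathbf{w}} = \frac{\partial}{\partial \mathbf{w}} \left(d[n] - y[n]\right)^2$$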

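Apply the chain rule and substitute the definition of $y[n]$:

$$\frac{\partial E}{\partial w_i} = 2\left(d[n] - y[n]\right) \frac{\partial}{\partial w_i}\left(d[n] - \sum_{k=0}^{N-1} w_k\, x[n-k]\right) = -2\, e[n]\, x[n-i]$$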
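To make the relaxation concrete, here is a hedged sketch of the single-sample gradient $-2\,e[n]\,x[n-i]$ in the LMS notation, assuming $x[n] = 0$ for $n < 0$. Summing it over every $n$ of a finite record gives the gradient of $\sum_n e[n]^2$, the full-sum quantity the Wiener derivation sets to zero; LMS instead uses one term per step. The helper names are hypothetical.

```python
def filter_output(x, w, n):
    # y[n] = sum_k w[k] x[n-k], with x[n] = 0 for n < 0
    return sum(w[k] * x[n - k] for k in range(len(w)) if n - k >= 0)


def instantaneous_gradient(x, d, w, n):
    # dE/dw_i = -2 e[n] x[n-i] for the single-sample error E = e[n]^2
    e = d[n] - filter_output(x, w, n)
    return [-2.0 * e * (x[n - i] if n - i >= 0 else 0.0) for i in range(len(w))]


def summed_gradient(x, d, w):
    # Sum of per-sample gradients = gradient of sum_n e[n]^2 over the record
    total = [0.0] * len(w)
    for n in range(len(x)):
        g = instantaneous_gradient(x, d, w, n)
        total = [t + gi for t, gi in zip(total, g)]
    return total


x = [1.0, 2.0, -1.0]
d = [0.5, 1.0, 0.0]
w = [0.0, 0.0]
print(instantaneous_gradient(x, d, w, 1))  # [-4.0, -2.0]
print(summed_gradient(x, d, w))            # [-5.0, -2.0]
```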

Using gradient descent with a step size $\mu$:

$$\mathbf{w}[n+1] = \mathbf{w}[n] - \mu \frac{\partial E}{\partial \mathbf{w}}$$

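which becomes, for $i = 0, 1, \ldots, N-1$,

$$w_i[n+1] = w_i[n] + 2\mu\, e[n]\, x[n-i]$$

or, in vector form with the tap-input vector $\mathbf{x}[n] = [x[n], x[n-1], \ldots, x[n-N+1]]^T$,

$$\mathbf{w}[n+1] = \mathbf{w}[n] + 2\mu\, e[n]\, \mathbf{x}[n]$$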

This is the LMS update equation.
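A minimal sketch of the update equation used for system identification follows. Assumptions: a hypothetical 3-tap unknown system, unit-variance white Gaussian input, no measurement noise, and an arbitrarily chosen step size $\mu = 0.01$ (larger values converge faster but can make the iteration diverge). `lms_identify` is an illustrative name, not a library function.

```python
import random


def lms_identify(x, d, N, mu):
    """Apply w_i[n+1] = w_i[n] + 2*mu*e[n]*x[n-i] over the whole record.

    x: input samples, d: desired (unknown-system output) samples,
    N: number of taps, mu: step size. Returns final weights and error history.
    """
    w = [0.0] * N
    errors = []
    for n in range(len(x)):
        # Tap-input vector [x[n], x[n-1], ..., x[n-N+1]], zero before the record starts
        taps = [x[n - i] if n - i >= 0 else 0.0 for i in range(N)]
        y = sum(wi * xi for wi, xi in zip(w, taps))
        e = d[n] - y
        w = [wi + 2.0 * mu * e * xi for wi, xi in zip(w, taps)]
        errors.append(e)
    return w, errors


# Identify a hypothetical 3-tap system from white-noise input
rng = random.Random(0)
h_true = [0.7, -0.2, 0.1]
x = [rng.gauss(0.0, 1.0) for _ in range(5000)]
d = [sum(h_true[k] * x[n - k] for k in range(3) if n - k >= 0) for n in range(len(x))]
w, errors = lms_identify(x, d, N=3, mu=0.01)
print([round(wi, 3) for wi in w])  # close to [0.7, -0.2, 0.1]
```

Many texts absorb the factor of 2 into the step size and write the update as $\mathbf{w}[n+1] = \mathbf{w}[n] + \mu\, e[n]\, \mathbf{x}[n]$; the two forms differ only in how $\mu$ is scaled.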