Related Research Articles

The Transmission Control Protocol (TCP) is one of the main protocols of the Internet protocol suite. It originated in the initial network implementation in which it complemented the Internet Protocol (IP). Therefore, the entire suite is commonly referred to as TCP/IP. TCP provides reliable, ordered, and error-checked delivery of a stream of octets (bytes) between applications running on hosts communicating via an IP network. Major internet applications such as the World Wide Web, email, remote administration, and file transfer rely on TCP, which is part of the Transport Layer of the TCP/IP suite. SSL/TLS often runs on top of TCP.

Network congestion in data networking and queueing theory is the reduced quality of service that occurs when a network node or link is carrying more data than it can handle. Typical effects include queueing delay, packet loss or the blocking of new connections. A consequence of congestion is that an incremental increase in offered load leads either only to a small increase or even a decrease in network throughput.

FAST TCP is a TCP congestion avoidance algorithm especially targeted at long-distance, high latency links, developed at the Netlab, California Institute of Technology and now being commercialized by FastSoft. FastSoft was acquired by Akamai Technologies in 2012.

Transmission Control Protocol (TCP) uses a network congestion-avoidance algorithm that includes various aspects of an additive increase/multiplicative decrease (AIMD) scheme, along with other schemes including slow start and congestion window (CWND), to achieve congestion avoidance. The TCP congestion-avoidance algorithm is the primary basis for congestion control in the Internet. Per the end-to-end principle, congestion control is largely a function of internet hosts, not the network itself. There are several variations and versions of the algorithm implemented in protocol stacks of operating systems of computers that connect to the Internet.

Nagle's algorithm is a means of improving the efficiency of TCP/IP networks by reducing the number of packets that need to be sent over the network. It was defined by John Nagle while working for Ford Aerospace. It was published in 1984 as a Request for Comments (RFC) with title Congestion Control in IP/TCP Internetworks in RFC 896.

TCP tuning techniques adjust the network congestion avoidance parameters of Transmission Control Protocol (TCP) connections over high-bandwidth, high-latency networks. Well-tuned networks can perform up to 10 times faster in some cases. However, blindly following instructions without understanding their real consequences can hurt performance as well.

Packet loss occurs when one or more packets of data travelling across a computer network fail to reach their destination. Packet loss is either caused by errors in data transmission, typically across wireless networks, or network congestion. Packet loss is measured as a percentage of packets lost with respect to packets sent.

HighSpeed TCP (HSTCP) is a congestion control algorithm protocol defined in RFC 3649 for Transport Control Protocol (TCP). Standard TCP performs poorly in networks with a large bandwidth-delay product. It is unable to fully utilize available bandwidth. HSTCP makes minor modifications to standard TCP's congestion control mechanism to overcome this limitation.

UDP-based Data Transfer Protocol (UDT), is a high-performance data transfer protocol designed for transferring large volumetric datasets over high-speed wide area networks. Such settings are typically disadvantageous for the more common TCP protocol.

Bandwidth management is the process of measuring and controlling the communications on a network link, to avoid filling the link to capacity or overfilling the link, which would result in network congestion and poor performance of the network. Bandwidth is described by bit rate and measured in units of bits per second (bit/s) or bytes per second (B/s).

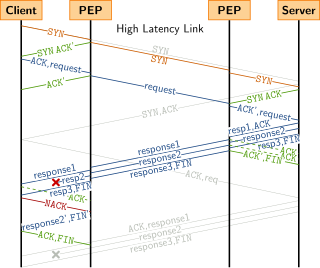

Performance-enhancing proxies (PEPs) are network agents designed to improve the end-to-end performance of some communication protocols. PEP standards are defined in RFC 3135 and RFC 3449.

TCP acceleration is the name of a series of techniques for achieving better throughput on a network connection than standard TCP achieves, without modifying the end applications. It is an alternative or a supplement to TCP tuning.

In computing, Microsoft's Windows Vista and Windows Server 2008 introduced in 2007/2008 a new networking stack named Next Generation TCP/IP stack, to improve on the previous stack in several ways. The stack includes native implementation of IPv6, as well as a complete overhaul of IPv4. The new TCP/IP stack uses a new method to store configuration settings that enables more dynamic control and does not require a computer restart after a change in settings. The new stack, implemented as a dual-stack model, depends on a strong host-model and features an infrastructure to enable more modular components that one can dynamically insert and remove.

A sliding window protocol is a feature of packet-based data transmission protocols. Sliding window protocols are used where reliable in-order delivery of packets is required, such as in the data link layer as well as in the Transmission Control Protocol (TCP). They are also used to improve efficiency when the channel may include high latency.

CUBIC is a network congestion avoidance algorithm for TCP which can achieve high bandwidth connections over networks more quickly and reliably in the face of high latency than earlier algorithms. It helps optimize long fat networks.

WTCP is a proxy-based modification of TCP that preserves the end-to-end semantics of TCP. As its name suggests, it is used in wireless networks to improve the performance of TCP.

Zeta-TCP refers to a set of proprietary Transmission Control Protocol (TCP) algorithms targeted to improve the end-to-end performance of TCP, regardless of whether the peer is Zeta-TCP or any other TCP protocol stack, in other words, to be compatible with the existing TCP algorithms. It was designed and implemented by AppEx Networks Corporation.

DECbit is a TCP congestion control technique implemented in routers to avoid congestion. Its utility is to predict possible congestion and prevent it.

NACK-Oriented Reliable Multicast (NORM) is a transport layer Internet protocol designed to provide reliable transport in multicast groups in data networks. It is formally defined by the Internet Engineering Task Force (IETF) in Request for Comments (RFC) 5740, which was published in November 2009.

Saverio Mascolo is an Italian information engineer, academic and researcher. He is the former Head of the Department of Electrical Engineering and Information Science and the professor of Automatic Control at Department of Ingegneria Elettrica e dell'Informazione (DEI) at Politecnico di Bari, Italy.

References

- ↑ Saverio Mascolo; Claudio Casetti; Mario Gerla; M. Y. Sanadidi; Ren Wang (July 2001), "TCP Westwood: Bandwidth Estimation for Enhanced Transport over Wireless Links", Proc. of the ACM Mobicom 2001, Rome, Italy, July 16-21 2001

- ↑ L. A. Grieco; S. Mascolo (April 2004), "Performance evaluation and comparison of Westwood+, New Reno and Vegas TCP congestion control", ACM Computer Communication Review, vol. 34, no. 2

- ↑ S. Mascolo; G. Racanelli (February 2004), "Testing TCP Westwood+ over Transatlantic Links at 10 Gigabit/second rate", Third International Workshop on Protocols for Fast Long-Distance Networks (PFLDNET05), Ecole Normale Supérieure, Lyon, France, February 3, 4 2005