In mathematics, a finite field or Galois field is a field that contains a finite number of elements. As with any field, a finite field is a set on which the operations of multiplication, addition, subtraction and division are defined and satisfy certain basic rules. The most common examples of finite fields are given by the integers mod p when p is a prime number.

In mathematics, in the area of abstract algebra known as Galois theory, the Galois group of a certain type of field extension is a specific group associated with the field extension. The study of field extensions and their relationship to the polynomials that give rise to them via Galois groups is called Galois theory, so named in honor of Évariste Galois who first discovered them.

In mathematics, specifically abstract algebra, an integral domain is a nonzero commutative ring in which the product of any two nonzero elements is nonzero. Integral domains are generalizations of the ring of integers and provide a natural setting for studying divisibility. In an integral domain, every nonzero element a has the cancellation property, that is, if a ≠ 0, an equality ab = ac implies b = c.

Shor's algorithm is a quantum algorithm for finding the prime factors of an integer. It was developed in 1994 by the American mathematician Peter Shor. It is one of the few known quantum algorithms with compelling potential applications and strong evidence of superpolynomial speedup compared to best known classical algorithms. On the other hand, factoring numbers of practical significance requires far more qubits than available in the near future. Another concern is that noise in quantum circuits may undermine results, requiring additional qubits for quantum error correction.

In mathematics, Hilbert's Nullstellensatz is a theorem that establishes a fundamental relationship between geometry and algebra. This relationship is the basis of algebraic geometry. It relates algebraic sets to ideals in polynomial rings over algebraically closed fields. This relationship was discovered by David Hilbert, who proved the Nullstellensatz in his second major paper on invariant theory in 1893.

In mathematics, an irreducible polynomial is, roughly speaking, a polynomial that cannot be factored into the product of two non-constant polynomials. The property of irreducibility depends on the nature of the coefficients that are accepted for the possible factors, that is, the field to which the coefficients of the polynomial and its possible factors are supposed to belong. For example, the polynomial x2 − 2 is a polynomial with integer coefficients, but, as every integer is also a real number, it is also a polynomial with real coefficients. It is irreducible if it is considered as a polynomial with integer coefficients, but it factors as if it is considered as a polynomial with real coefficients. One says that the polynomial x2 − 2 is irreducible over the integers but not over the reals.

In mathematics, an affine algebraic plane curve is the zero set of a polynomial in two variables. A projective algebraic plane curve is the zero set in a projective plane of a homogeneous polynomial in three variables. An affine algebraic plane curve can be completed in a projective algebraic plane curve by homogenizing its defining polynomial. Conversely, a projective algebraic plane curve of homogeneous equation h(x, y, t) = 0 can be restricted to the affine algebraic plane curve of equation h(x, y, 1) = 0. These two operations are each inverse to the other; therefore, the phrase algebraic plane curve is often used without specifying explicitly whether it is the affine or the projective case that is considered.

In mathematics, and more specifically in computer algebra, computational algebraic geometry, and computational commutative algebra, a Gröbner basis is a particular kind of generating set of an ideal in a polynomial ring K[x1, ..., xn] over a field K. A Gröbner basis allows many important properties of the ideal and the associated algebraic variety to be deduced easily, such as the dimension and the number of zeros when it is finite. Gröbner basis computation is one of the main practical tools for solving systems of polynomial equations and computing the images of algebraic varieties under projections or rational maps.

In mathematics, especially in the field of algebra, a polynomial ring or polynomial algebra is a ring formed from the set of polynomials in one or more indeterminates with coefficients in another ring, often a field.

In mathematics, differential algebra is, broadly speaking, the area of mathematics consisting in the study of differential equations and differential operators as algebraic objects in view of deriving properties of differential equations and operators without computing the solutions, similarly as polynomial algebras are used for the study of algebraic varieties, which are solution sets of systems of polynomial equations. Weyl algebras and Lie algebras may be considered as belonging to differential algebra.

In algebra, Gauss's lemma, named after Carl Friedrich Gauss, is a statement about polynomials over the integers, or, more generally, over a unique factorization domain. Gauss's lemma underlies all the theory of factorization and greatest common divisors of such polynomials.

In mathematics and computer algebra, factorization of polynomials or polynomial factorization expresses a polynomial with coefficients in a given field or in the integers as the product of irreducible factors with coefficients in the same domain. Polynomial factorization is one of the fundamental components of computer algebra systems.

In computational algebra, the Cantor–Zassenhaus algorithm is a method for factoring polynomials over finite fields.

In algebra, the greatest common divisor of two polynomials is a polynomial, of the highest possible degree, that is a factor of both the two original polynomials. This concept is analogous to the greatest common divisor of two integers.

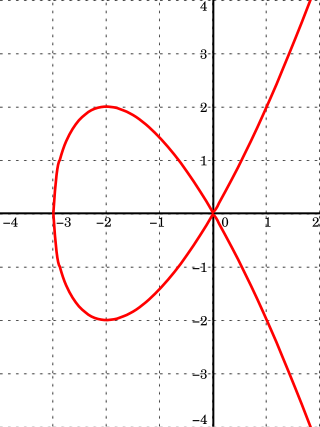

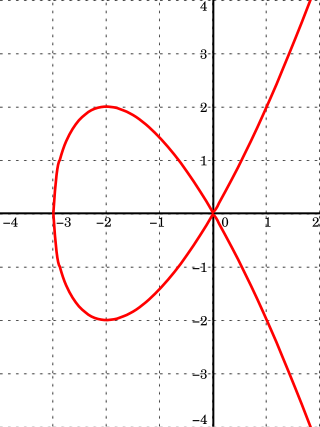

A hyperelliptic curve is a particular kind of algebraic curve. There exist hyperelliptic curves of every genus . If the genus of a hyperelliptic curve equals 1, we simply call the curve an elliptic curve. Hence we can see hyperelliptic curves as generalizations of elliptic curves. There is a well-known group structure on the set of points lying on an elliptic curve over some field , which we can describe geometrically with chords and tangents. Generalizing this group structure to the hyperelliptic case is not straightforward. We cannot define the same group law on the set of points lying on a hyperelliptic curve, instead a group structure can be defined on the so-called Jacobian of a hyperelliptic curve. The computations differ depending on the number of points at infinity. Imaginary hyperelliptic curves are hyperelliptic curves with exactly 1 point at infinity: real hyperelliptic curves have two points at infinity.

In mathematics the Function Field Sieve is one of the most efficient algorithms to solve the Discrete Logarithm Problem (DLP) in a finite field. It has heuristic subexponential complexity. Leonard Adleman developed it in 1994 and then elaborated it together with M. D. Huang in 1999. Previous work includes the work of D. Coppersmith about the DLP in fields of characteristic two.

In mathematics, an algebraic number field is an extension field of the field of rational numbers such that the field extension has finite degree . Thus is a field that contains and has finite dimension when considered as a vector space over .

In mathematics and computer algebra the factorization of a polynomial consists of decomposing it into a product of irreducible factors. This decomposition is theoretically possible and is unique for polynomials with coefficients in any field, but rather strong restrictions on the field of the coefficients are needed to allow the computation of the factorization by means of an algorithm. In practice, algorithms have been designed only for polynomials with coefficients in a finite field, in the field of rationals or in a finitely generated field extension of one of them.

In number theory, Berlekamp's root finding algorithm, also called the Berlekamp–Rabin algorithm, is the probabilistic method of finding roots of polynomials over a field . The method was discovered by Elwyn Berlekamp in 1970 as an auxiliary to the algorithm for polynomial factorization over finite fields. The algorithm was later modified by Rabin for arbitrary finite fields in 1979. The method was also independently discovered before Berlekamp by other researchers.

In algebraic number theory, the Dedekind–Kummer theorem describes how a prime ideal in a Dedekind domain factors over the domain's integral closure.