In algorithmic information theory, the Kolmogorov complexity of an object, such as a piece of text, is the length of a shortest computer program that produces the object as output. It is a measure of the computational resources needed to specify the object, and is also known as algorithmic complexity, Solomonoff–Kolmogorov–Chaitin complexity, program-size complexity, descriptive complexity, or algorithmic entropy. It is named after Andrey Kolmogorov, who first published on the subject in 1963 and is a generalization of classical information theory.

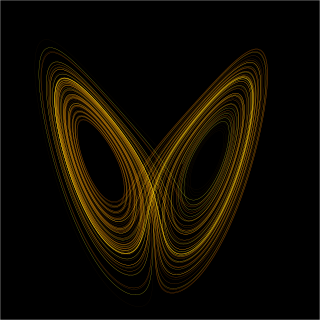

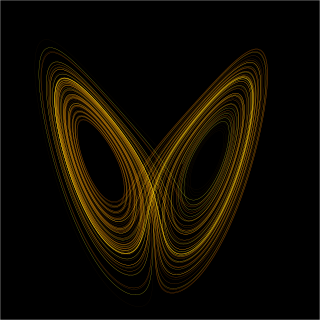

In mathematics, a dynamical system is a system in which a function describes the time dependence of a point in an ambient space, such as in a parametric curve. Examples include the mathematical models that describe the swinging of a clock pendulum, the flow of water in a pipe, the random motion of particles in the air, and the number of fish each springtime in a lake. The most general definition unifies several concepts in mathematics such as ordinary differential equations and ergodic theory by allowing different choices of the space and how time is measured. Time can be measured by integers, by real or complex numbers or can be a more general algebraic object, losing the memory of its physical origin, and the space may be a manifold or simply a set, without the need of a smooth space-time structure defined on it.

In computability theory, Rice's theorem states that all non-trivial semantic properties of programs are undecidable. A semantic property is one about the program's behavior, unlike a syntactic property. A property is non-trivial if it is neither true for every program, nor false for every program.

In computability theory, Kleene's recursion theorems are a pair of fundamental results about the application of computable functions to their own descriptions. The theorems were first proved by Stephen Kleene in 1938 and appear in his 1952 book Introduction to Metamathematics. A related theorem, which constructs fixed points of a computable function, is known as Rogers's theorem and is due to Hartley Rogers, Jr.

In computability theory, a set S of natural numbers is called computably enumerable (c.e.), recursively enumerable (r.e.), semidecidable, partially decidable, listable, provable or Turing-recognizable if:

In computability theory the S m

n theorem, written also as "smn-theorem" or "s-m-n theorem" is a basic result about programming languages. It was first proved by Stephen Cole Kleene (1943). The name S m

n comes from the occurrence of an S with subscript n and superscript m in the original formulation of the theorem.

In computational complexity theory the Blum axioms or Blum complexity axioms are axioms that specify desirable properties of complexity measures on the set of computable functions. The axioms were first defined by Manuel Blum in 1967.

In computability theory the Myhill isomorphism theorem, named after John Myhill, provides a characterization for two numberings to induce the same notion of computability on a set.

In logic and theoretical computer science, and specifically proof theory and computational complexity theory, proof complexity is the field aiming to understand and analyse the computational resources that are required to prove or refute statements. Research in proof complexity is predominantly concerned with proving proof-length lower and upper bounds in various propositional proof systems. For example, among the major challenges of proof complexity is showing that the Frege system, the usual propositional calculus, does not admit polynomial-size proofs of all tautologies. Here the size of the proof is simply the number of symbols in it, and a proof is said to be of polynomial size if it is polynomial in the size of the tautology it proves.

In computational complexity theory, the Gap Theorem, also known as the Borodin–Trakhtenbrot Gap Theorem, is a major theorem about the complexity of computable functions.

In computational complexity theory, the compression theorem is an important theorem about the complexity of computable functions.

In computability theory, a function is called limit computable if it is the limit of a uniformly computable sequence of functions. The terms computable in the limit, limit recursive and recursively approximable are also used. One can think of limit computable functions as those admitting an eventually correct computable guessing procedure at their true value. A set is limit computable just when its characteristic function is limit computable.

In optics, a caustic or caustic network is the envelope of light rays which have been reflected or refracted by a curved surface or object, or the projection of that envelope of rays on another surface. The caustic is a curve or surface to which each of the light rays is tangent, defining a boundary of an envelope of rays as a curve of concentrated light. Therefore, in the photo to the right, caustics can be seen as patches of light or their bright edges. These shapes often have cusp singularities.

In computational complexity theory, a computational hardness assumption is the hypothesis that a particular problem cannot be solved efficiently. It is not known how to prove (unconditional) hardness for essentially any useful problem. Instead, computer scientists rely on reductions to formally relate the hardness of a new or complicated problem to a computational hardness assumption about a problem that is better-understood.

In the mathematical field of differential topology, the Lie bracket of vector fields, also known as the Jacobi–Lie bracket or the commutator of vector fields, is an operator that assigns to any two vector fields X and Y on a smooth manifold M a third vector field denoted [X, Y].

In computability theory, the T predicate, first studied by mathematician Stephen Cole Kleene, is a particular set of triples of natural numbers that is used to represent computable functions within formal theories of arithmetic. Informally, the T predicate tells whether a particular computer program will halt when run with a particular input, and the corresponding U function is used to obtain the results of the computation if the program does halt. As with the smn theorem, the original notation used by Kleene has become standard terminology for the concept.

The #P-completeness of 01-permanent, sometimes known as Valiant's theorem, is a mathematical proof about the permanent of matrices, considered a seminal result in computational complexity theory. In a 1979 scholarly paper, Leslie Valiant proved that the computational problem of computing the permanent of a matrix is #P-hard, even if the matrix is restricted to have entries that are all 0 or 1. In this restricted case, computing the permanent is even #P-complete, because it corresponds to the #P problem of counting the number of permutation matrices one can get by changing ones into zeroes.

In computability theory, the halting problem is the problem of determining, from a description of an arbitrary computer program and an input, whether the program will finish running, or continue to run forever. The halting problem is undecidable, meaning that no general algorithm exists that solves the halting problem for all possible program–input pairs.

Bounded arithmetic is a collective name for a family of weak subtheories of Peano arithmetic. Such theories are typically obtained by requiring that quantifiers be bounded in the induction axiom or equivalent postulates. The main purpose is to characterize one or another class of computational complexity in the sense that a function is provably total if and only if it belongs to a given complexity class. Further, theories of bounded arithmetic present uniform counterparts to standard propositional proof systems such as Frege system and are, in particular, useful for constructing polynomial-size proofs in these systems. The characterization of standard complexity classes and correspondence to propositional proof systems allows to interpret theories of bounded arithmetic as formal systems capturing various levels of feasible reasoning.

The union theorem is a result from the 60s in computational complexity theory. It was published in 1969 by Ed McCreight and Albert Meyer.