The anthropic principle, also known as the "observation selection effect", is the hypothesis, first proposed in 1957 by Robert Dicke, that the range of possible observations that could be made about the universe is limited by the fact that observations could happen only in a universe capable of developing intelligent life. Proponents of the anthropic principle argue that it explains why the universe has the age and the fundamental physical constants necessary to accommodate conscious life, since if either had been different, no one would have been around to make observations. Anthropic reasoning is often used to deal with the idea that the universe seems to be finely tuned for the existence of life.

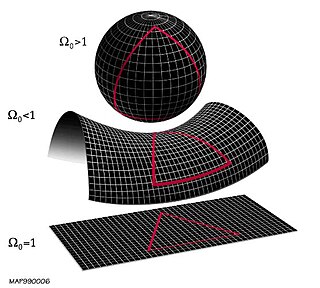

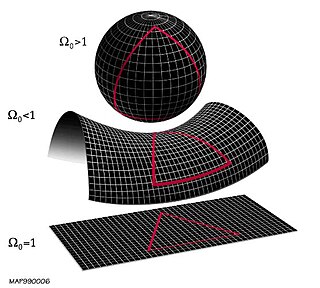

The Big Bang is a physical theory that describes how the universe expanded from an initial state of high density and temperature. It was first proposed in 1927 by Roman Catholic priest and physicist Georges Lemaître. Various cosmological models of the Big Bang explain the evolution of the observable universe from the earliest known periods through its subsequent large-scale form. These models offer a comprehensive explanation for a broad range of observed phenomena, including the abundance of light elements, the cosmic microwave background (CMB) radiation, and large-scale structure. The overall uniformity of the universe (the horizon problem) and its nearly flat geometry (the flatness problem) are explained through cosmic inflation: a sudden and very rapid expansion of space during the earliest moments. However, physics currently lacks a widely accepted theory of quantum gravity that can successfully model the earliest conditions of the Big Bang.

In particle physics, quantum electrodynamics (QED) is the relativistic quantum field theory of electrodynamics. In essence, it describes how light and matter interact, and it was the first theory to achieve full agreement between quantum mechanics and special relativity. QED mathematically describes all phenomena involving electrically charged particles interacting by means of the exchange of photons. It is the quantum counterpart of classical electromagnetism and gives a complete account of the interaction of matter and light.

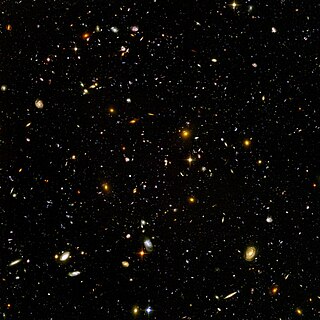

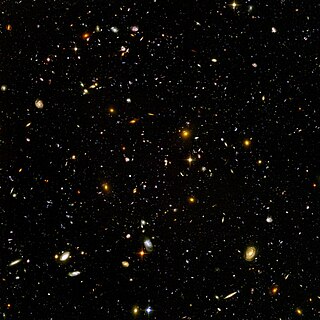

The universe is all of space and time and their contents. It comprises all of existence, any fundamental interaction, physical process and physical constant, and therefore all forms of energy and matter, and the structures they form, from sub-atomic particles to entire galaxies. Space and time, according to the prevailing cosmological theory of the Big Bang, emerged together 13.787±0.020 billion years ago, and the universe has been expanding ever since. Today only part of the universe can be observed: the observable universe is approximately 93 billion light-years in diameter at the present day, while the spatial size, if any, of the entire universe is unknown.

In cosmology, the cosmological constant, alternatively called Einstein's cosmological constant, is the constant coefficient of a term that Albert Einstein temporarily added to his field equations of general relativity. He later removed it. Much later, however, it was revived and reinterpreted as the energy density of space, or vacuum energy, that arises in quantum mechanics. It is closely associated with the concept of dark energy.
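For orientation, the term in question can be written out explicitly. The block below is the standard textbook form of the field equations with the cosmological constant Λ included; it is not taken from the text above, and sign and unit conventions vary between authors.

$$
R_{\mu\nu} - \tfrac{1}{2} R\, g_{\mu\nu} + \Lambda\, g_{\mu\nu} = \frac{8\pi G}{c^{4}}\, T_{\mu\nu}
$$

Moving the Λ term to the right-hand side is what allows it to be read as a uniform energy density of empty space, which is the reinterpretation described above.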

A gravitational singularity, spacetime singularity or simply singularity is a condition in which gravity is predicted to be so intense that spacetime itself would break down catastrophically. As such, a singularity is by definition no longer part of the regular spacetime and cannot be determined by "where" or "when". Gravitational singularities exist at a junction between general relativity and quantum mechanics; therefore, the properties of the singularity cannot be described without an established theory of quantum gravity. Trying to find a complete and precise definition of singularities in the theory of general relativity, the current best theory of gravity, remains a difficult problem. A singularity in general relativity can be defined by the scalar invariant curvature becoming infinite or, better, by a geodesic being incomplete.

A Brief History of Time: From the Big Bang to Black Holes is a book on theoretical cosmology by English physicist Stephen Hawking. It was first published in 1988. Hawking wrote the book for readers who had no prior knowledge of physics.

T-symmetry or time reversal symmetry is the theoretical symmetry of physical laws under the transformation of time reversal, T: t ↦ −t.

The ultimate fate of the universe is a topic in physical cosmology, whose theoretical restrictions allow possible scenarios for the evolution and ultimate fate of the universe to be described and evaluated. Based on available observational evidence, deciding the fate and evolution of the universe has become a valid cosmological question, being beyond the mostly untestable constraints of mythological or theological beliefs. Several possible futures have been predicted by different scientific hypotheses, including that the universe might have existed for a finite or an infinite duration, and some of these hypotheses also aim to explain the manner and circumstances of its beginning.

The Big Crunch is a hypothetical scenario for the ultimate fate of the universe, in which the expansion of the universe eventually reverses and the universe recollapses, ultimately causing the cosmic scale factor to reach zero, an event potentially followed by a reformation of the universe starting with another Big Bang. The vast majority of evidence indicates that this hypothesis is not correct. Instead, astronomical observations show that the expansion of the universe is accelerating rather than being slowed by gravity, suggesting that a Big Chill is more likely. However, some physicists have proposed that a "Big Crunch-style" event could result from a dark energy fluctuation.

Renormalization is a collection of techniques in quantum field theory, statistical field theory, and the theory of self-similar geometric structures, that are used to treat infinities arising in calculated quantities by altering values of these quantities to compensate for effects of their self-interactions. But even if no infinities arose in loop diagrams in quantum field theory, it could be shown that it would be necessary to renormalize the mass and fields appearing in the original Lagrangian.

Frank Jennings Tipler is an American mathematical physicist and cosmologist, holding a joint appointment in the Departments of Mathematics and Physics at Tulane University. Tipler has written books and papers on the Omega Point, based on Pierre Teilhard de Chardin's religious ideas, which he claims is a mechanism for the resurrection of the dead. He is also known for his theories on the Tipler cylinder time machine. His work has attracted criticism, most notably from Quaker and systems theorist George Ellis, who has argued that his theories are largely pseudoscience.

The flatness problem is a cosmological fine-tuning problem within the Big Bang model of the universe. Such problems arise from the observation that some of the initial conditions of the universe appear to be fine-tuned to very 'special' values, and that small deviations from these values would have extreme effects on the appearance of the universe at the current time.

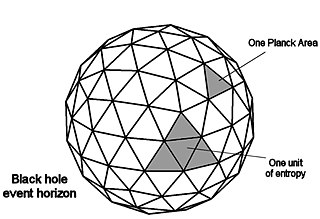

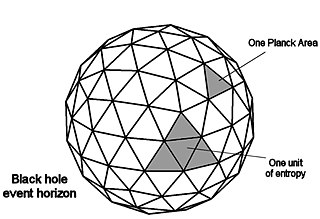

In physics, the Bekenstein bound is an upper limit on the thermodynamic entropy S, or Shannon entropy H, that can be contained within a given finite region of space which has a finite amount of energy—or conversely, the maximal amount of information required to perfectly describe a given physical system down to the quantum level. It implies that the information of a physical system, or the information necessary to perfectly describe that system, must be finite if the region of space and the energy are finite. In computer science this implies that non-finite models such as Turing machines are not realizable as finite devices.
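The finiteness claim can be made concrete with a small calculation. The sketch below assumes the commonly quoted form of the bound, S ≤ 2πkRE/(ħc), for a sphere of radius R enclosing total energy E, converted to bits by dividing by k ln 2. That formula is not stated in the text above, and the function names are purely illustrative.

```python
import math

# CODATA values in SI units.
REDUCED_PLANCK = 1.054_571_817e-34  # J*s
SPEED_OF_LIGHT = 299_792_458.0      # m/s


def bekenstein_bound_bits(radius_m: float, energy_j: float) -> float:
    """Upper bound on information, in bits, for a sphere of the given
    radius enclosing the given total energy.

    Assumes the form I <= 2*pi*R*E / (hbar * c * ln 2).
    """
    return (2 * math.pi * radius_m * energy_j
            / (REDUCED_PLANCK * SPEED_OF_LIGHT * math.log(2)))


def bekenstein_bound_bits_from_mass(radius_m: float, mass_kg: float) -> float:
    """Same bound, with the energy taken as the rest energy E = m * c^2."""
    return bekenstein_bound_bits(radius_m, mass_kg * SPEED_OF_LIGHT ** 2)


if __name__ == "__main__":
    # Hypothetical example system: 1 kg confined within a 1 m radius.
    print(f"{bekenstein_bound_bits_from_mass(1.0, 1.0):.3e} bits")
```

Because the bound is proportional to both radius and energy, any finite region with finite energy yields a finite number of bits, which is the point the paragraph makes.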

Bremermann's limit, named after Hans-Joachim Bremermann, is a limit on the maximum rate of computation that can be achieved in a self-contained system in the material universe. It is derived from Einstein's mass-energy equivalency and the Heisenberg uncertainty principle, and is c²/h ≈ 1.3563925 × 10⁵⁰ bits per second per kilogram.
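The quoted figure can be checked directly from the two constants involved. This is a minimal sketch using the exact SI values of the speed of light and the Planck constant; the function name is illustrative and not from any library.

```python
# Exact SI values (fixed by definition since the 2019 SI redefinition).
SPEED_OF_LIGHT = 299_792_458.0      # m/s
PLANCK_CONSTANT = 6.626_070_15e-34  # J*s


def bremermann_limit(mass_kg: float = 1.0) -> float:
    """Return the c^2 / h bound, in bits per second, for the given mass."""
    return mass_kg * SPEED_OF_LIGHT ** 2 / PLANCK_CONSTANT


if __name__ == "__main__":
    print(f"{bremermann_limit():.7e} bits per second per kilogram")
```

The limit scales linearly with mass, so a hypothetical 2 kg system would have twice the bound of a 1 kg system.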

The limits of computation are governed by a number of different factors. In particular, there are several physical and practical limits to the amount of computation or data storage that can be performed with a given amount of mass, volume, or energy.

Eternal inflation is a hypothetical inflationary universe model, which is itself an outgrowth or extension of the Big Bang theory.

The expansion of the universe is the increase in distance between gravitationally unbound parts of the observable universe with time. It is an intrinsic expansion, so it does not mean that the universe expands "into" anything or that space exists "outside" it. To any observer in the universe, it appears that all but the nearest galaxies recede at speeds that are proportional to their distance from the observer, on average. While objects cannot move faster than light, this limitation applies only with respect to local reference frames and does not limit the recession rates of cosmologically distant objects.
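The proportionality between recession speed and distance is usually written as the Hubble-Lemaître law, v = H₀·d. The sketch below illustrates it, along with the distance at which the recession speed formally equals the speed of light. The value H₀ = 70 km/s/Mpc is an assumed round number for illustration only (measured values differ by a few km/s/Mpc), and the function names are hypothetical.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # km/s (exact)
HUBBLE_CONSTANT = 70.0             # km/s/Mpc, ASSUMED illustrative value


def recession_velocity_km_s(distance_mpc: float,
                            h0: float = HUBBLE_CONSTANT) -> float:
    """Recession speed v = H0 * d for a proper distance d in megaparsecs."""
    return h0 * distance_mpc


def hubble_radius_mpc(h0: float = HUBBLE_CONSTANT) -> float:
    """Distance at which v = H0 * d equals the speed of light."""
    return SPEED_OF_LIGHT_KM_S / h0


if __name__ == "__main__":
    for d in (100.0, 1_000.0, 5_000.0):
        v = recession_velocity_km_s(d)
        print(f"d = {d:7.0f} Mpc  v = {v:9.0f} km/s  "
              f"v/c = {v / SPEED_OF_LIGHT_KM_S:.2f}")
    print(f"Hubble radius: {hubble_radius_mpc():.0f} Mpc")
```

Under this assumed H₀, objects beyond roughly 4,300 Mpc recede faster than light. This does not conflict with relativity, since the speed limit applies only in local reference frames.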

The Boltzmann brain thought experiment suggests that it might be more likely for a single brain to spontaneously form in a void, complete with a memory of having existed in our universe, rather than for the entire universe to come about in the manner cosmologists think it actually did. Physicists use the Boltzmann brain thought experiment as a reductio ad absurdum argument for evaluating competing scientific theories.

In astrophysics, an event horizon is a boundary beyond which events cannot affect an observer. Wolfgang Rindler coined the term in the 1950s.