In the field of artificial intelligence (AI), tasks that are hypothesised to require artificial general intelligence to solve are informally known as AI-complete or AI-hard. Calling a problem AI-complete reflects the belief that it cannot be solved by a simple specific algorithm.

A chatbot is a software application or web interface that is designed to mimic human conversation through text or voice interactions. Modern chatbots are typically online and use generative artificial intelligence systems that are capable of maintaining a conversation with a user in natural language and simulating the way a human would behave as a conversational partner. Such chatbots often use deep learning and natural language processing, but simpler chatbots have existed for decades.

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of statistical algorithms that can learn from data and generalize to unseen data, and thus perform tasks without explicit instructions. Recently, artificial neural networks have been able to surpass many previous approaches in performance.
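As a minimal sketch of what "learning from data and generalizing to unseen data" can mean, the following fits a straight line to a few points by ordinary least squares and then predicts a value for an input that was not in the training set. The data and the function name `fit_line` are illustrative assumptions, not part of any particular library.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical training data generated from y = 2x + 1.
train_x = [0.0, 1.0, 2.0, 3.0]
train_y = [1.0, 3.0, 5.0, 7.0]

slope, intercept = fit_line(train_x, train_y)

# Generalize to an input that never appeared in the training data.
prediction = slope * 10.0 + intercept
print(slope, intercept, prediction)
```

The rule `y = 2x + 1` is never written into the program; it is recovered from the examples, which is the sense in which the task is performed "without explicit instructions".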

Natural language generation (NLG) is a software process that produces natural language output. A widely cited survey of NLG methods describes NLG as "the subfield of artificial intelligence and computational linguistics that is concerned with the construction of computer systems that can produce understandable texts in English or other human languages from some underlying non-linguistic representation of information".

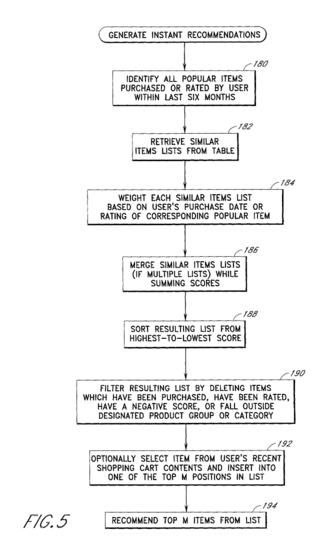

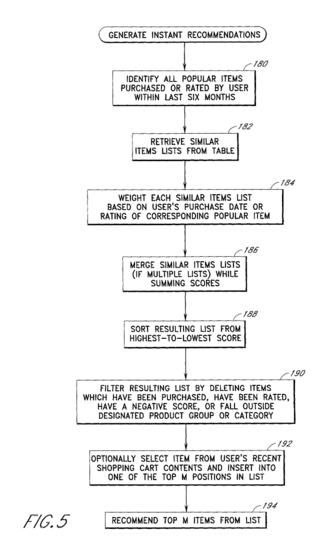

A recommender system, or a recommendation system, is a subclass of information filtering system that provides suggestions for items that are most pertinent to a particular user. Recommender systems are particularly useful when an individual needs to choose an item from a potentially overwhelming number of items that a service may offer.
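One common way to produce such suggestions is user-based collaborative filtering, sketched below under simplifying assumptions: items a user has not rated are scored by the ratings of other users, weighted by how similar those users are. The ratings, user names and the helper names `cosine` and `recommend` are hypothetical, and production systems are considerably more elaborate.

```python
from math import sqrt

# Hypothetical ratings: user -> {item: rating on a 1-5 scale}.
ratings = {
    "ana": {"book_a": 5, "book_b": 4, "book_c": 1},
    "ben": {"book_a": 4, "book_b": 5, "book_d": 5},
    "cy": {"book_c": 5, "book_d": 1, "book_e": 4},
}

def cosine(u, v):
    """Cosine similarity between two sparse rating dictionaries."""
    shared = set(u) & set(v)
    if not shared:
        return 0.0
    dot = sum(u[i] * v[i] for i in shared)
    norm_u = sqrt(sum(x * x for x in u.values()))
    norm_v = sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v)

def recommend(user, ratings, top_n=2):
    """Rank items the user has not rated by similarity-weighted ratings."""
    scores = {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine(ratings[user], other_ratings)
        for item, rating in other_ratings.items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)[:top_n]

print(recommend("ana", ratings))
```

Here "ana" is most similar to "ben", so the item "ben" rated highly that "ana" has not yet seen is ranked first.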

Ben Shneiderman is an American computer scientist, a Distinguished University Professor in the University of Maryland Department of Computer Science, which is part of the University of Maryland College of Computer, Mathematical, and Natural Sciences at the University of Maryland, College Park, and the founding director (1983-2000) of the University of Maryland Human-Computer Interaction Lab. He conducted fundamental research in the field of human–computer interaction, developing new ideas, methods, and tools such as the direct manipulation interface and his eight rules of design.

Artificial intelligence (AI) has been used in applications throughout industry and academia. Similar to electricity or computers, AI serves as a general-purpose technology that has numerous applications. Its applications span language translation, image recognition, decision-making, credit scoring, e-commerce and various other domains. As a scientific discipline, AI encompasses technologies that equip machines to perceive, understand, act and learn.

Value sensitive design (VSD) is a theoretically grounded approach to the design of technology that accounts for human values in a principled and comprehensive manner. VSD originated within the fields of information systems design and human-computer interaction to address design issues within those fields by emphasizing the ethical values of direct and indirect stakeholders. It was developed by Batya Friedman and Peter Kahn at the University of Washington starting in the late 1980s and early 1990s. Later, in 2019, Batya Friedman and David Hendry wrote a book on this topic called "Value Sensitive Design: Shaping Technology with Moral Imagination". Value sensitive design takes human values into account in a well-defined manner throughout the whole process. Designs are developed using an investigation consisting of three phases: conceptual, empirical and technological. These investigations are intended to be iterative, allowing the designer to modify the design continuously.

Eric Joel Horvitz is an American computer scientist, and Technical Fellow at Microsoft, where he serves as the company's first Chief Scientific Officer. He was previously the director of Microsoft Research Labs, including research centers in Redmond, WA, Cambridge, MA, New York, NY, Montreal, Canada, Cambridge, UK, and Bangalore, India.

Apache SINGA is an Apache top-level project for developing an open source machine learning library. It provides a flexible architecture for scalable distributed training, is extensible to run over a wide range of hardware, and has a focus on health-care applications.

Explainable AI (XAI), often overlapping with Interpretable AI, or Explainable Machine Learning (XML), either refers to an artificial intelligence (AI) system over which it is possible for humans to retain intellectual oversight, or refers to the methods to achieve this. The main focus is usually on making the reasoning behind the AI's decisions or predictions more understandable and transparent. XAI counters the "black box" tendency of machine learning, where even the AI's designers cannot explain why it arrived at a specific decision.

In the regulation of algorithms, particularly artificial intelligence and its subfield of machine learning, a right to explanation is a right to be given an explanation for an output of the algorithm. Such rights primarily refer to individual rights to be given an explanation for decisions that significantly affect an individual, particularly legally or financially. For example, a person who applies for a loan and is denied may ask for an explanation, which could be "Credit bureau X reports that you declared bankruptcy last year; this is the main factor in considering you too likely to default, and thus we will not give you the loan you applied for."
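The loan example can be sketched for the simplest case, a linear scoring model, where each input's contribution to the score is its weight times its value and the most negative contribution can be reported as the main factor. The weights, threshold, feature names and the function `decide_with_explanation` are hypothetical and not drawn from any real lender or regulation; explaining non-linear models generally requires other techniques.

```python
# Hypothetical weights of a linear credit score (assumption for illustration).
WEIGHTS = {
    "recent_bankruptcy": -3.0,
    "late_payments": -0.5,
    "years_employed": 0.25,
}
THRESHOLD = 0.0  # assumed cut-off: scores below this are denied

def decide_with_explanation(applicant):
    """Return (approved, score, main_factor) for a linear scoring model."""
    # Each feature's contribution is its weight times the applicant's value.
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = sum(contributions.values())
    approved = score >= THRESHOLD
    # The most negative contribution is the factor that hurt the score most.
    main_factor = min(contributions, key=contributions.get)
    return approved, score, main_factor

applicant = {"recent_bankruptcy": 1, "late_payments": 2, "years_employed": 4}
approved, score, main_factor = decide_with_explanation(applicant)
if not approved:
    print(f"Denied (score {score}); main factor: {main_factor}")
```

With these assumed inputs the bankruptcy flag dominates the score, mirroring the explanation quoted above.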

Algorithmic bias describes systematic and repeatable errors in a computer system that create "unfair" outcomes, such as "privileging" one category over another in ways different from the intended function of the algorithm.
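One simple way to quantify such privileging, among several competing fairness measures, is to compare how often each group receives a favourable outcome. The sketch below computes per-group approval rates and the gap between the highest and lowest rate (sometimes called the demographic parity difference). The decision data and function names are hypothetical.

```python
def selection_rates(decisions):
    """decisions: list of (group, approved) pairs -> approval rate per group."""
    totals, approved = {}, {}
    for group, ok in decisions:
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + (1 if ok else 0)
    return {group: approved[group] / totals[group] for group in totals}

def parity_gap(decisions):
    """Difference between the highest and lowest group approval rates."""
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())

# Hypothetical outcomes for two groups of four applicants each.
decisions = [
    ("a", True), ("a", True), ("a", True), ("a", False),
    ("b", True), ("b", False), ("b", False), ("b", False),
]

print(selection_rates(decisions))
print(parity_gap(decisions))
```

A gap of zero means every group is approved at the same rate; a large gap is a signal worth investigating, although it does not by itself establish that the outcomes are unfair.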

Argument technology is a sub-field of collective intelligence and artificial intelligence that focuses on applying computational techniques to the creation, identification, analysis, navigation, evaluation and visualisation of arguments and debates.

ACM Conference on Fairness, Accountability, and Transparency is a peer-reviewed academic conference series about ethics and computing systems. Sponsored by the Association for Computing Machinery, this conference focuses on issues such as algorithmic transparency, fairness in machine learning, bias, and ethics from a multi-disciplinary perspective. The conference community includes computer scientists, statisticians, social scientists, scholars of law, and others.

Tawanna Dillahunt is an American computer scientist and information scientist based at the University of Michigan School of Information. She runs the Social Innovations Group, a research group that designs, builds, and enhances technologies to solve real-world problems. Her research has been cited over 4,600 times according to Google Scholar.

Hanna Wallach is a computational social scientist and partner research manager at Microsoft Research. Her work makes use of machine learning models to study the dynamics of social processes. Her current research focuses on issues of fairness, accountability, transparency, and ethics as they relate to AI and machine learning.

Jofish Kaye is an American and British scientist specializing in human-computer interaction and artificial intelligence. He runs interaction design and user research at anthem.ai, and is an editor of Personal & Ubiquitous Computing.

Margaret Mitchell is a computer scientist who works on algorithmic bias and fairness in machine learning. She is best known for her work on automatically removing undesired biases concerning demographic groups from machine learning models, as well as on more transparent reporting of their intended use.

Automated decision-making (ADM) involves the use of data, machines and algorithms to make decisions in a range of contexts, including public administration, business, health, education, law, employment, transport, media and entertainment, with varying degrees of human oversight or intervention. ADM involves large-scale data from a range of sources, such as databases, text, social media, sensors, images or speech, that is processed using various technologies including computer software, algorithms, machine learning, natural language processing, artificial intelligence, augmented intelligence and robotics. The increasing use of automated decision-making systems (ADMS) across a range of contexts presents many benefits and challenges to human society requiring consideration of the technical, legal, ethical, societal, educational, economic and health consequences.