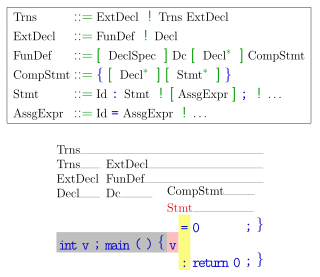

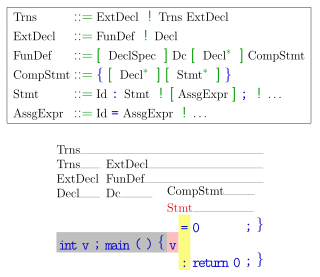

In formal language theory, a context-free grammar (CFG) is a formal grammar whose production rules are of the form

In computer science, the Earley parser is an algorithm for parsing strings that belong to a given context-free language, though it may suffer problems with certain nullable grammars. The algorithm, named after its inventor, Jay Earley, is a chart parser that uses dynamic programming; it is mainly used for parsing in computational linguistics. It was first introduced in his dissertation in 1968.

In computer science, an LALR parser or Look-Ahead LR parser is a simplified version of a canonical LR parser, to parse a text according to a set of production rules specified by a formal grammar for a computer language.

In computer science, LR parsers are a type of bottom-up parser that analyse deterministic context-free languages in linear time. There are several variants of LR parsers: SLR parsers, LALR parsers, Canonical LR(1) parsers, Minimal LR(1) parsers, and GLR parsers. LR parsers can be generated by a parser generator from a formal grammar defining the syntax of the language to be parsed. They are widely used for the processing of computer languages.

Yacc is a computer program for the Unix operating system developed by Stephen C. Johnson. It is a Look Ahead Left-to-Right Rightmost Derivation (LALR) parser generator, generating a LALR parser based on a formal grammar, written in a notation similar to Backus–Naur Form (BNF). Yacc is supplied as a standard utility on BSD and AT&T Unix. GNU-based Linux distributions include Bison, a forward-compatible Yacc replacement.

GNU Bison, commonly known as Bison, is a parser generator that is part of the GNU Project. Bison reads a specification in the BNF notation, warns about any parsing ambiguities, and generates a parser that reads sequences of tokens and decides whether the sequence conforms to the syntax specified by the grammar.

In computer science, an LL parser is a top-down parser for a restricted context-free language. It parses the input from Left to right, performing Leftmost derivation of the sentence.

In computer science, a Simple LR or SLR parser is a type of LR parser with small parse tables and a relatively simple parser generator algorithm. As with other types of LR(1) parser, an SLR parser is quite efficient at finding the single correct bottom-up parse in a single left-to-right scan over the input stream, without guesswork or backtracking. The parser is mechanically generated from a formal grammar for the language.

In computer science, a canonical LR parser or LR(1) parser is an LR(k) parser for k=1, i.e. with a single lookahead terminal. The special attribute of this parser is that any LR(k) grammar with k>1 can be transformed into an LR(1) grammar. However, back-substitutions are required to reduce k and as back-substitutions increase, the grammar can quickly become large, repetitive and hard to understand. LR(k) can handle all deterministic context-free languages. In the past this LR(k) parser has been avoided because of its huge memory requirements in favor of less powerful alternatives such as the LALR and the LL(1) parser. Recently, however, a "minimal LR(1) parser" whose space requirements are close to LALR parsers, is being offered by several parser generators.

In computer programming, the interpreter pattern is a design pattern that specifies how to evaluate sentences in a language. The basic idea is to have a class for each symbol in a specialized computer language. The syntax tree of a sentence in the language is an instance of the composite pattern and is used to evaluate (interpret) the sentence for a client. See also Composite pattern.

Parsing, syntax analysis, or syntactic analysis is the process of analyzing a string of symbols, either in natural language, computer languages or data structures, conforming to the rules of a formal grammar. The term parsing comes from Latin pars (orationis), meaning part.

Top-down parsing in computer science is a parsing strategy where one first looks at the highest level of the parse tree and works down the parse tree by using the rewriting rules of a formal grammar. LL parsers are a type of parser that uses a top-down parsing strategy.

The dangling else is a problem in programming of parser generators in which an optional else clause in an if–then(–else) statement results in nested conditionals being ambiguous. Formally, the reference context-free grammar of the language is ambiguous, meaning there is more than one correct parse tree.

In computer science, a parsing expression grammar (PEG), is a type of analytic formal grammar, i.e. it describes a formal language in terms of a set of rules for recognizing strings in the language. The formalism was introduced by Bryan Ford in 2004 and is closely related to the family of top-down parsing languages introduced in the early 1970s. Syntactically, PEGs also look similar to context-free grammars (CFGs), but they have a different interpretation: the choice operator selects the first match in PEG, while it is ambiguous in CFG. This is closer to how string recognition tends to be done in practice, e.g. by a recursive descent parser.

In computer science, an operator precedence parser is a bottom-up parser that interprets an operator-precedence grammar. For example, most calculators use operator precedence parsers to convert from the human-readable infix notation relying on order of operations to a format that is optimized for evaluation such as Reverse Polish notation (RPN).

A GLR parser is an extension of an LR parser algorithm to handle non-deterministic and ambiguous grammars. The theoretical foundation was provided in a 1974 paper by Bernard Lang. It describes a systematic way to produce such algorithms, and provides uniform results regarding correctness proofs, complexity with respect to grammar classes, and optimization techniques. The first actual implementation of GLR was described in a 1984 paper by Masaru Tomita, it has also been referred to as a "parallel parser". Tomita presented five stages in his original work, though in practice it is the second stage that is recognized as the GLR parser.

In computer science, recursive ascent parsing is a technique for implementing an LALR parser which uses mutually-recursive functions rather than tables. Thus, the parser is directly encoded in the host language similar to recursive descent. Direct encoding usually yields a parser which is faster than its table-driven equivalent for the same reason that compilation is faster than interpretation. It is also (nominally) possible to hand edit a recursive ascent parser, whereas a tabular implementation is nigh unreadable to the average human.

A lookahead left-to-right (LALR) parser generator is a software tool that reads a BNF grammar and creates an LALR parser which is capable of parsing files written in the computer language defined by the BNF grammar. LALR parsers are desirable because they are very fast and small in comparison to other types of parsers.

In formal language theory, an LL grammar is a context-free grammar that can be parsed by an LL parser, which parses the input from Left to right, and constructs a Leftmost derivation of the sentence. A language that has an LL grammar is known as an LL language. These form subsets of deterministic context-free grammars (DCFGs) and deterministic context-free languages (DCFLs), respectively. One says that a given grammar or language "is an LL grammar/language" or simply "is LL" to indicate that it is in this class.

A shift-reduce parser is a class of efficient, table-driven bottom-up parsing methods for computer languages and other notations formally defined by a grammar. The parsing methods most commonly used for parsing programming languages, LR parsing and its variations, are shift-reduce methods. The precedence parsers used before the invention of LR parsing are also shift-reduce methods. All shift-reduce parsers have similar outward effects, in the incremental order in which they build a parse tree or call specific output actions.