In computer science, the Boolean satisfiability problem is the problem of determining if there exists an interpretation that satisfies a given Boolean formula. In other words, it asks whether the variables of a given Boolean formula can be consistently replaced by the values TRUE or FALSE in such a way that the formula evaluates to TRUE. If this is the case, the formula is called satisfiable. On the other hand, if no such assignment exists, the function expressed by the formula is FALSE for all possible variable assignments and the formula is unsatisfiable. For example, the formula "a AND NOT b" is satisfiable because one can find the values a = TRUE and b = FALSE, which make = TRUE. In contrast, "a AND NOT a" is unsatisfiable.

In computational complexity theory, the complexity class ♯P is the set of the counting problems associated with the decision problems in the set NP. More formally, ♯P is the class of function problems of the form "compute f(x)", where f is the number of accepting paths of a nondeterministic Turing machine running in polynomial time. Unlike most well-known complexity classes, it is not a class of decision problems but a class of function problems.

In complexity theory, computational problems that are co-NP-complete are those that are the hardest problems in co-NP, in the sense that any problem in co-NP can be reformulated as a special case of any co-NP-complete problem with only polynomial overhead. If P is different from co-NP, then all of the co-NP-complete problems are not solvable in polynomial time. If there exists a way to solve a co-NP-complete problem quickly, then that algorithm can be used to solve all co-NP problems quickly.

In computer science, 2-satisfiability, 2-SAT or just 2SAT is a computational problem of assigning values to variables, each of which has two possible values, in order to satisfy a system of constraints on pairs of variables. It is a special case of the general Boolean satisfiability problem, which can involve constraints on more than two variables, and of constraint satisfaction problems, which can allow more than two choices for the value of each variable. But in contrast to those more general problems, which are NP-complete, 2-satisfiability can be solved in polynomial time.

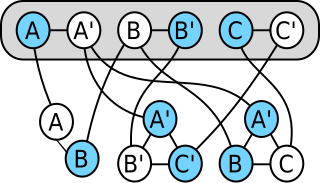

In the mathematical discipline of graph theory, a matching or independent edge set in a graph is a set of edges without common vertices. Finding a matching in a bipartite graph can be treated as a network flow problem.

In computational complexity theory, the Cook–Levin theorem, also known as Cook's theorem, states that the Boolean satisfiability problem is NP-complete. That is, any problem in NP can be reduced in polynomial time by a deterministic Turing machine to the problem of determining whether a Boolean formula is satisfiable.

The Fulkerson Prize for outstanding papers in the area of discrete mathematics is sponsored jointly by the Mathematical Optimization Society (MOS) and the American Mathematical Society (AMS). Up to three awards of $1500 each are presented at each (triennial) International Symposium of the MOS. Originally, the prizes were paid out of a memorial fund administered by the AMS that was established by friends of the late Delbert Ray Fulkerson to encourage mathematical excellence in the fields of research exemplified by his work. The prizes are now funded by an endowment administered by MPS.

In complexity theory the class APX is the set of NP optimization problems that allow polynomial-time approximation algorithms with approximation ratio bounded by a constant. In simple terms, problems in this class have efficient algorithms that can find an answer within some fixed multiplicative factor of the optimal answer.

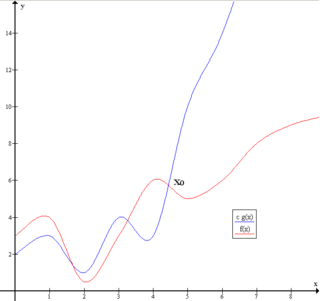

The Valiant–Vazirani theorem is a theorem in computational complexity theory stating that if there is a polynomial time algorithm for Unambiguous-SAT, then NP = RP. It was proven by Leslie Valiant and Vijay Vazirani in their paper titled NP is as easy as detecting unique solutions published in 1986. The proof is based on the Mulmuley–Vazirani–Vazirani isolation lemma, which was subsequently used for a number of important applications in theoretical computer science.

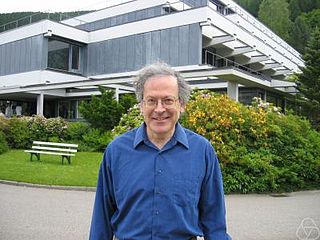

Vijay Virkumar Vazirani is an Indian American distinguished professor of computer science in the Donald Bren School of Information and Computer Sciences at the University of California, Irvine.

In computational complexity theory, the maximum satisfiability problem (MAX-SAT) is the problem of determining the maximum number of clauses, of a given Boolean formula in conjunctive normal form, that can be made true by an assignment of truth values to the variables of the formula. It is a generalization of the Boolean satisfiability problem, which asks whether there exists a truth assignment that makes all clauses true.

The Tutte polynomial, also called the dichromate or the Tutte–Whitney polynomial, is a graph polynomial. It is a polynomial in two variables which plays an important role in graph theory. It is defined for every undirected graph and contains information about how the graph is connected. It is denoted by .

In computational complexity theory, a gadget is a subset of a problem instance that simulates the behavior of one of the fundamental units of a different computational problem. Gadgets are typically used to construct reductions from one computational problem to another, as part of proofs of NP-completeness or other types of computational hardness. The component design technique is a method for constructing reductions by using gadgets.

In linear algebra, the computation of the permanent of a matrix is a problem that is thought to be more difficult than the computation of the determinant of a matrix despite the apparent similarity of the definitions.

The #P-completeness of 01-permanent, sometimes known as Valiant's theorem, is a mathematical proof about the permanent of matrices, considered a seminal result in computational complexity theory. In a 1979 scholarly paper, Leslie Valiant proved that the computational problem of computing the permanent of a matrix is #P-hard, even if the matrix is restricted to have entries that are all 0 or 1. In this restricted case, computing the permanent is even #P-complete, because it corresponds to the #P problem of counting the number of permutation matrices one can get by changing ones into zeroes.

In computational complexity theory, an NP-complete decision problem is one belonging to both the NP and the NP-hard complexity classes. In this context, NP stands for "nondeterministic polynomial time". The set of NP-complete problems is often denoted by NP-C or NPC.

In computational complexity theory, the exponential time hypothesis is an unproven computational hardness assumption that was formulated by Impagliazzo & Paturi (1999). The hypothesis states that 3-SAT cannot be solved in subexponential time in the worst case. The exponential time hypothesis, if true, would imply that P ≠ NP, but it is a stronger statement. It can be used to show that many computational problems are equivalent in complexity, in the sense that if one of them has a subexponential time algorithm then they all do.

In theoretical computer science, the term isolation lemma refers to randomized algorithms that reduce the number of solutions to a problem to one, should a solution exist. This is achieved by constructing random constraints such that, with non-negligible probability, exactly one solution satisfies these additional constraints if the solution space is not empty. Isolation lemmas have important applications in computer science, such as the Valiant–Vazirani theorem and Toda's theorem in computational complexity theory.

The FKT algorithm, named after Fisher, Kasteleyn, and Temperley, counts the number of perfect matchings in a planar graph in polynomial time. This same task is #P-complete for general graphs. Counting the number of matchings, even for planar graphs, is also #P-complete. The key idea is to convert the problem into a Pfaffian computation of a skew-symmetric matrix derived from a planar embedding of the graph. The Pfaffian of this matrix is then computed efficiently using standard determinant algorithms.