In logic and computer science, the Boolean satisfiability problem (sometimes called propositional satisfiability problem and abbreviated SATISFIABILITY, SAT or B-SAT) is the problem of determining if there exists an interpretation that satisfies a given Boolean formula. In other words, it asks whether the variables of a given Boolean formula can be consistently replaced by the values TRUE or FALSE in such a way that the formula evaluates to TRUE. If this is the case, the formula is called satisfiable. On the other hand, if no such assignment exists, the function expressed by the formula is FALSE for all possible variable assignments and the formula is unsatisfiable. For example, the formula "a AND NOT b" is satisfiable because one can find the values a = TRUE and b = FALSE, which make (a AND NOT b) = TRUE. In contrast, "a AND NOT a" is unsatisfiable.

Inductive logic programming (ILP) is a subfield of symbolic artificial intelligence which uses logic programming as a uniform representation for examples, background knowledge and hypotheses. The term "inductive" here refers to philosophical rather than mathematical induction. Given an encoding of the known background knowledge and a set of examples represented as a logical database of facts, an ILP system will derive a hypothesised logic program which entails all the positive and none of the negative examples.

In boolean logic, a disjunctive normal form (DNF) is a canonical normal form of a logical formula consisting of a disjunction of conjunctions; it can also be described as an OR of ANDs, a sum of products, or — in philosophical logic — a cluster concept. As a normal form, it is useful in automated theorem proving.

In Boolean logic, a formula is in conjunctive normal form (CNF) or clausal normal form if it is a conjunction of one or more clauses, where a clause is a disjunction of literals; otherwise put, it is a product of sums or an AND of ORs. As a canonical normal form, it is useful in automated theorem proving and circuit theory.

In computer science, 2-satisfiability, 2-SAT or just 2SAT is a computational problem of assigning values to variables, each of which has two possible values, in order to satisfy a system of constraints on pairs of variables. It is a special case of the general Boolean satisfiability problem, which can involve constraints on more than two variables, and of constraint satisfaction problems, which can allow more than two choices for the value of each variable. But in contrast to those more general problems, which are NP-complete, 2-satisfiability can be solved in polynomial time.

In proof theory, the semantic tableau is a decision procedure for sentential and related logics, and a proof procedure for formulae of first-order logic. An analytic tableau is a tree structure computed for a logical formula, having at each node a subformula of the original formula to be proved or refuted. Computation constructs this tree and uses it to prove or refute the whole formula. The tableau method can also determine the satisfiability of finite sets of formulas of various logics. It is the most popular proof procedure for modal logics.

In formal logic, Horn-satisfiability, or HORNSAT, is the problem of deciding whether a given set of propositional Horn clauses is satisfiable or not. Horn-satisfiability and Horn clauses are named after Alfred Horn.

The closed-world assumption (CWA), in a formal system of logic used for knowledge representation, is the presumption that a statement that is true is also known to be true. Therefore, conversely, what is not currently known to be true, is false. The same name also refers to a logical formalization of this assumption by Raymond Reiter. The opposite of the closed-world assumption is the open-world assumption (OWA), stating that lack of knowledge does not imply falsity. Decisions on CWA vs. OWA determine the understanding of the actual semantics of a conceptual expression with the same notations of concepts. A successful formalization of natural language semantics usually cannot avoid an explicit revelation of whether the implicit logical backgrounds are based on CWA or OWA.

In mathematical logic and automated theorem proving, resolution is a rule of inference leading to a refutation-complete theorem-proving technique for sentences in propositional logic and first-order logic. For propositional logic, systematically applying the resolution rule acts as a decision procedure for formula unsatisfiability, solving the Boolean satisfiability problem. For first-order logic, resolution can be used as the basis for a semi-algorithm for the unsatisfiability problem of first-order logic, providing a more practical method than one following from Gödel's completeness theorem.

The Davis–Putnam algorithm was developed by Martin Davis and Hilary Putnam for checking the validity of a first-order logic formula using a resolution-based decision procedure for propositional logic. Since the set of valid first-order formulas is recursively enumerable but not recursive, there exists no general algorithm to solve this problem. Therefore, the Davis–Putnam algorithm only terminates on valid formulas. Today, the term "Davis–Putnam algorithm" is often used synonymously with the resolution-based propositional decision procedure that is actually only one of the steps of the original algorithm.

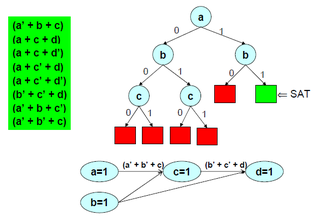

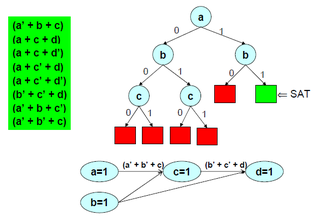

In logic and computer science, the Davis–Putnam–Logemann–Loveland (DPLL) algorithm is a complete, backtracking-based search algorithm for deciding the satisfiability of propositional logic formulae in conjunctive normal form, i.e. for solving the CNF-SAT problem.

In constraint satisfaction, local consistency conditions are properties of constraint satisfaction problems related to the consistency of subsets of variables or constraints. They can be used to reduce the search space and make the problem easier to solve. Various kinds of local consistency conditions are leveraged, including node consistency, arc consistency, and path consistency.

Constraint logic programming is a form of constraint programming, in which logic programming is extended to include concepts from constraint satisfaction. A constraint logic program is a logic program that contains constraints in the body of clauses. An example of a clause including a constraint is A(X,Y):-X+Y>0,B(X),C(Y). In this clause, X+Y>0 is a constraint; A(X,Y), B(X), and C(Y) are literals as in regular logic programming. This clause states one condition under which the statement A(X,Y) holds: X+Y is greater than zero and both B(X) and C(Y) are true.

SLD resolution is the basic inference rule used in logic programming. It is a refinement of resolution, which is both sound and refutation complete for Horn clauses.

MAXEkSAT is a problem in computational complexity theory that is a maximization version of the Boolean satisfiability problem 3SAT. In MAXEkSAT, each clause has exactly k literals, each with distinct variables, and is in conjunctive normal form. These are called k-CNF formulas. The problem is to determine the maximum number of clauses that can be satisfied by a truth assignment to the variables in the clauses.

The Tseytin transformation, alternatively written Tseitin transformation, takes as input an arbitrary combinatorial logic circuit and produces an equisatisfiable boolean formula in conjunctive normal form (CNF). The length of the formula is linear in the size of the circuit. Input vectors that make the circuit output "true" are in 1-to-1 correspondence with assignments that satisfy the formula. This reduces the problem of circuit satisfiability on any circuit to the satisfiability problem on 3-CNF formulas. It was discovered by the Russian scientist Grigori Tseitin.

In proof theory, an area of mathematical logic, proof compression is the problem of algorithmically compressing formal proofs. The developed algorithms can be used to improve the proofs generated by automated theorem proving tools such as SAT solvers, SMT-solvers, first-order theorem provers and proof assistants.

In mathematical logic, proof compression by splitting is an algorithm that operates as a post-process on resolution proofs. It was proposed by Scott Cotton in his paper "Two Techniques for Minimizing Resolution Proof".

In computer science, conflict-driven clause learning (CDCL) is an algorithm for solving the Boolean satisfiability problem (SAT). Given a Boolean formula, the SAT problem asks for an assignment of variables so that the entire formula evaluates to true. The internal workings of CDCL SAT solvers were inspired by DPLL solvers. The main difference between CDCL and DPLL is that CDCL's backjumping is non-chronological.

The Boolean satisfiability problem can be stated formally as: given a Boolean expression with variables, finding an assignment of the variables such that is true. It is seen as the canonical NP-complete problem. While no efficient algorithm is known to solve this problem in the general case, there are certain heuristics, informally called 'rules of thumb' in programming, that can usually help solve the problem reasonably efficiently.