In mathematics, real analysis is the branch that studies the behavior of real numbers, sequences and series of real numbers, and real functions. Properties of real-valued sequences and functions studied in real analysis include convergence, limits, continuity, smoothness, differentiability and integrability.

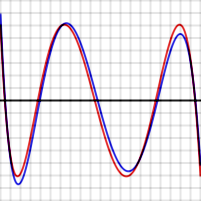

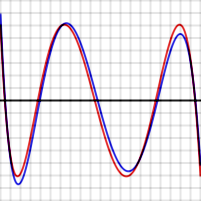

In mathematics, a real function $f$ of real numbers is said to be uniformly continuous if, for every positive real number $\varepsilon$, there is a positive real number $\delta$ such that function values over any interval of the domain of size $\delta$ are within $\varepsilon$ of each other. In other words, for a uniformly continuous real function of real numbers, if we want function value differences to be less than any given positive real number $\varepsilon$, then there is a positive real number $\delta$ such that $|f(x) - f(y)| < \varepsilon$ for any $x$ and $y$ in any interval of the domain of size $\delta$.
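The definition can be probed numerically. The sketch below assumes the standard ε-δ reading (one δ must work everywhere on the domain for a given ε) and uses a hypothetical helper, `worst_gap`, that samples the largest difference between function values at points exactly `delta` apart. Both example functions are monotone on the chosen intervals, so pairs closer than `delta` cannot give a larger gap; that simplification would not hold in general.

```python
def worst_gap(f, a, b, delta, samples=10_000):
    """Largest sampled |f(x) - f(x + delta)| with x and x + delta both in [a, b]."""
    worst = 0.0
    for i in range(samples + 1):
        x = a + (b - delta - a) * i / samples
        worst = max(worst, abs(f(x) - f(x + delta)))
    return worst

square = lambda x: x * x          # uniformly continuous on [0, 1]
reciprocal = lambda x: 1.0 / x    # not uniformly continuous on (0, 1]

for delta in (0.1, 0.01, 0.001):
    # The gap for `square` shrinks with delta; the gap for `reciprocal`
    # stays huge near the left end of the interval.
    print(delta,
          worst_gap(square, 0.0, 1.0, delta),
          worst_gap(reciprocal, 1e-6, 1.0, delta))
```

For `square` the gap is bounded by `2 * delta` on the whole interval, so choosing δ = ε/2 works everywhere. For `reciprocal` no single δ keeps the gap small near 0.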

In mathematics, differential calculus is a subfield of calculus that studies the rates at which quantities change. It is one of the two traditional divisions of calculus, the other being integral calculus—the study of the area beneath a curve.
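A rate of change can be estimated numerically with a difference quotient. The following is a minimal sketch using a central difference; the helper name `derivative` and the step size `h` are illustrative choices, not part of the text above.

```python
import math

def derivative(f, x, h=1e-5):
    """Central-difference estimate of f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)

# The derivative of sin at 0 is cos(0) = 1; the derivative of t**2 at 3 is 6.
print(derivative(math.sin, 0.0))
print(derivative(lambda t: t**2, 3.0))
```

The estimates are approximate: a smaller `h` reduces truncation error but amplifies floating-point rounding error.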

In mathematical optimization, the method of Lagrange multipliers is a strategy for finding the local maxima and minima of a function subject to equality constraints. It is named after the mathematician Joseph-Louis Lagrange.
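As an illustration, consider an assumed example: extremize f(x, y) = x + y on the unit circle g(x, y) = x² + y² − 1 = 0. Setting ∇f = λ∇g gives (1, 1) = λ(2x, 2y), so x = y, and the constraint then forces x = y = ±1/√2. The sketch below evaluates those candidates and cross-checks the maximum by brute force along the constraint.

```python
import math

def f(x, y):
    return x + y

# Candidates from solving grad f = lam * grad g with g(x, y) = x**2 + y**2 - 1:
# (1, 1) = lam * (2x, 2y) and x**2 + y**2 = 1 give x = y = +-1/sqrt(2).
candidates = [(s / math.sqrt(2), s / math.sqrt(2)) for s in (1, -1)]
best = max(candidates, key=lambda p: f(*p))
worst = min(candidates, key=lambda p: f(*p))

# Cross-check by brute force along the constraint, parametrised by an angle.
angles = [2 * math.pi * k / 100_000 for k in range(100_000)]
brute_max = max(f(math.cos(t), math.sin(t)) for t in angles)

print(best, f(*best), brute_max)
```

Both approaches should agree that the constrained maximum is √2, attained at x = y = 1/√2.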

The calculus of variations is a field of mathematical analysis that uses variations, which are small changes in functions and functionals, to find maxima and minima of functionals: mappings from a set of functions to the real numbers. Functionals are often expressed as definite integrals involving functions and their derivatives. Functions that maximize or minimize functionals may be found using the Euler–Lagrange equation of the calculus of variations.
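A small numerical experiment can make the idea of a functional concrete. The sketch below assumes the arc-length functional on curves joining (0, 0) to (1, 1) as an example, approximates it by a polygonal path, and compares the straight line with perturbed curves whose variation vanishes at both endpoints. The helper names `arc_length` and `perturbed` are hypothetical.

```python
import math

def arc_length(y, n=1000):
    """Polygonal approximation of the length of the graph of y over [0, 1]."""
    xs = [i / n for i in range(n + 1)]
    return sum(math.hypot(xs[i + 1] - xs[i], y(xs[i + 1]) - y(xs[i]))
               for i in range(n))

def perturbed(a):
    # Straight line y = x plus a variation that vanishes at both endpoints.
    return lambda x: x + a * math.sin(math.pi * x)

for a in (0.0, 0.05, 0.2):
    # The unperturbed line (a = 0) should give the smallest length.
    print(a, arc_length(perturbed(a)))
```

The straight line minimizes the functional in this family, which is consistent with it solving the Euler–Lagrange equation for arc length.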

In calculus, Rolle's theorem or Rolle's lemma essentially states that any real-valued differentiable function that attains equal values at two distinct points must have at least one stationary point somewhere between them—that is, a point where the first derivative is zero. The theorem is named after Michel Rolle.
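For a concrete instance, take the assumed example f(x) = x³ − x on [0, 1], where f(0) = f(1) = 0. Rolle's theorem guarantees a point with zero derivative strictly between the endpoints. The sketch below locates it by bisection on f′, which works here because f′ happens to change sign on the interval; the theorem itself only asserts existence.

```python
def f(x):
    return x**3 - x

def df(x):
    return 3 * x**2 - 1

def bisect(g, lo, hi, tol=1e-12):
    """Root of g in [lo, hi], assuming g(lo) and g(hi) have opposite signs."""
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if g(lo) * g(mid) <= 0:
            hi = mid
        else:
            lo = mid
    return (lo + hi) / 2

a, b = 0.0, 1.0
assert f(a) == f(b)      # equal endpoint values, as the theorem requires
c = bisect(df, a, b)     # stationary point; analytically 1/sqrt(3)
print(c, df(c))
```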

In the calculus of variations and classical mechanics, the Euler–Lagrange equations are a system of second-order ordinary differential equations whose solutions are stationary points of the given action functional. The equations were discovered in the 1750s by Swiss mathematician Leonhard Euler and Italian mathematician Joseph-Louis Lagrange.
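In conventional notation (the symbols $L$, $q_i$ and $S$ are the usual textbook choices, not taken from the text above), the action functional and the resulting equations can be written as:

```latex
S[q] = \int_{t_1}^{t_2} L\bigl(t, q(t), \dot q(t)\bigr)\,dt,
\qquad
\frac{\partial L}{\partial q_i} - \frac{d}{dt}\frac{\partial L}{\partial \dot q_i} = 0,
\quad i = 1, \dots, n.
```

For example, with a single coordinate and $L = \tfrac{1}{2} m \dot q^2 - V(q)$, the equation reduces to Newton's second law, $m \ddot q = -V'(q)$.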

In mathematical analysis, the maximum and minimum of a function are, respectively, the largest and smallest value taken by the function. Known generically as extrema, they may be defined either within a given range or on the entire domain of a function. Pierre de Fermat was one of the first mathematicians to propose a general technique, adequality, for finding the maxima and minima of functions.
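On a closed interval, the extrema of a differentiable function can be found by comparing its values at the endpoints and at interior points where the derivative vanishes. The sketch below applies this to an assumed example, f(x) = x³ − 3x on [−2, 3], with the critical points worked out by hand.

```python
def f(x):
    return x**3 - 3 * x

# Assumed example: on [-2, 3], f'(x) = 3x**2 - 3 vanishes at x = -1 and x = 1.
candidates = [-2.0, -1.0, 1.0, 3.0]   # endpoints plus interior critical points
values = {x: f(x) for x in candidates}

global_max = max(values.values())
global_min = min(values.values())
argmax = [x for x, v in values.items() if v == global_max]
argmin = [x for x, v in values.items() if v == global_min]

print(global_max, argmax)
print(global_min, argmin)
```

Here the maximum is attained only at the right endpoint, while the minimum is attained both at an endpoint and at an interior critical point.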

In mathematics, a differentiable function of one real variable is a function whose derivative exists at each point in its domain. In other words, the graph of a differentiable function has a non-vertical tangent line at each interior point in its domain. Informally, the graph of a differentiable function does not contain any break, angle, or cusp.
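A corner can be detected numerically by comparing one-sided difference quotients. The sketch below uses a hypothetical helper, `one_sided_slopes`, and contrasts the absolute value function (not differentiable at 0) with a parabola (differentiable at 0).

```python
def one_sided_slopes(f, x, h=1e-6):
    """Left and right difference quotients of f at x."""
    left = (f(x) - f(x - h)) / h
    right = (f(x + h) - f(x)) / h
    return left, right

# |x| has slopes -1 and +1 on either side of 0, so no tangent line exists there.
print(one_sided_slopes(abs, 0.0))
# x**2 has matching one-sided slopes near 0, consistent with f'(0) = 0.
print(one_sided_slopes(lambda t: t * t, 0.0))
```

Agreement of the two quotients for one small `h` is only suggestive; differentiability requires the limits as `h` tends to 0 to exist and coincide.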

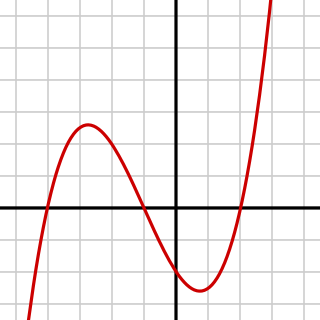

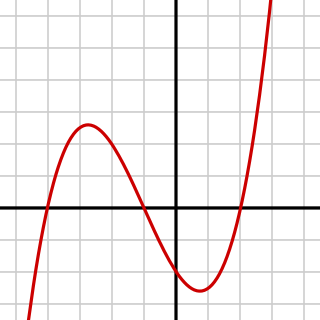

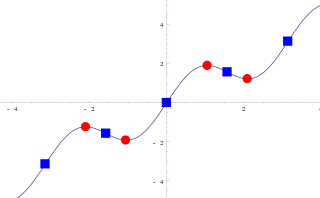

In differential calculus and differential geometry, an inflection point, point of inflection, flex, or inflection is a point on a smooth plane curve at which the curvature changes sign. In particular, in the case of the graph of a function, it is a point where the function changes from being concave to convex, or vice versa.
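For the graph of a twice-differentiable function, a sign change of the second derivative marks an inflection point. The sketch below estimates the second derivative of the assumed example f(x) = x³ on either side of 0 with a central second difference; the helper name is illustrative.

```python
def second_derivative(f, x, h=1e-4):
    """Central second-difference estimate of f''(x)."""
    return (f(x + h) - 2 * f(x) + f(x - h)) / h**2

cube = lambda x: x**3

left = second_derivative(cube, -0.5)   # negative: concave to the left of 0
right = second_derivative(cube, 0.5)   # positive: convex to the right of 0
print(left, right)
```

A vanishing second derivative alone is not enough: x⁴ has zero second derivative at 0 but stays convex on both sides, so 0 is not an inflection point there.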

In mathematics, the symmetry of second derivatives refers to the possibility of interchanging the order of taking partial derivatives of a function of several variables without changing the result.
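The interchange can be checked numerically for a smooth function. The sketch below assumes the example f(x, y) = x²y³, whose mixed partial derivative is 6xy² in either order, and estimates both orders with nested central differences. The helpers `partial_x` and `partial_y` are hypothetical.

```python
def partial_x(f, h=1e-4):
    """Return a function estimating the partial derivative of f in x."""
    return lambda x, y: (f(x + h, y) - f(x - h, y)) / (2 * h)

def partial_y(f, h=1e-4):
    """Return a function estimating the partial derivative of f in y."""
    return lambda x, y: (f(x, y + h) - f(x, y - h)) / (2 * h)

f = lambda x, y: x**2 * y**3

f_xy = partial_y(partial_x(f))   # differentiate in x first, then in y
f_yx = partial_x(partial_y(f))   # differentiate in y first, then in x

# Both estimates should be close to 6 * x * y**2 = 24 at (1, 2).
print(f_xy(1.0, 2.0), f_yx(1.0, 2.0))
```

The symmetry holds under regularity conditions such as continuity of the second partial derivatives; the polynomial used here satisfies them everywhere.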

The Arzelà–Ascoli theorem is a fundamental result of mathematical analysis giving necessary and sufficient conditions to decide whether every sequence of a given family of real-valued continuous functions defined on a closed and bounded interval has a uniformly convergent subsequence. The main condition is the equicontinuity of the family of functions. The theorem is the basis of many proofs in mathematics, including that of the Peano existence theorem in the theory of ordinary differential equations, Montel's theorem in complex analysis, the Peter–Weyl theorem in harmonic analysis, and various results concerning compactness of integral operators.

In mathematics, nonstandard calculus is the modern application of infinitesimals, in the sense of nonstandard analysis, to infinitesimal calculus. It provides a rigorous justification for some arguments in calculus that were previously considered merely heuristic.

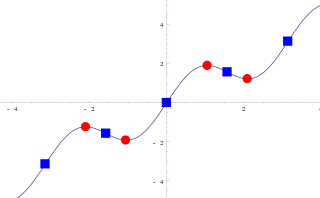

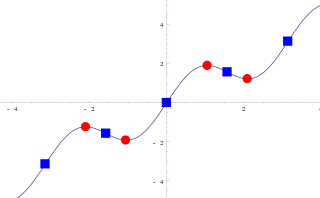

In calculus, a derivative test uses the derivatives of a function to locate the critical points of a function and determine whether each point is a local maximum, a local minimum, or a saddle point. Derivative tests can also give information about the concavity of a function.
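The second-derivative test for a function of one variable can be sketched as follows. The function `classify` is a hypothetical helper, and the example functions and their derivatives are worked out by hand rather than computed symbolically.

```python
def classify(d2f, x):
    """Second-derivative test at a critical point x of a one-variable function."""
    curvature = d2f(x)
    if curvature > 0:
        return "local minimum"
    if curvature < 0:
        return "local maximum"
    return "inconclusive"

# f(x) = x**3 - 3x has f'(x) = 3x**2 - 3 (zero at x = -1 and x = 1) and f''(x) = 6x.
d2f = lambda x: 6 * x
print(classify(d2f, -1.0), classify(d2f, 1.0))

# g(x) = x**4 has g''(0) = 0, so the test alone cannot decide.
print(classify(lambda x: 12 * x**2, 0.0))
```

When the test is inconclusive, higher-order derivatives or the sign of the first derivative on either side of the point are needed.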

In mathematics, particularly in calculus, a stationary point of a differentiable function of one variable is a point on the graph of the function where the function's derivative is zero. Informally, it is a point where the function "stops" increasing or decreasing.

In mathematics, approximation theory is concerned with how functions can best be approximated with simpler functions, and with quantitatively characterizing the errors introduced thereby. What is meant by best and simpler will depend on the application.

In mathematics, a critical point is the argument of a function where the function's derivative is zero. The value of the function at a critical point is a critical value.

In mathematics, the Khinchin integral, also known as the Denjoy–Khinchin integral, generalized Denjoy integral or wide Denjoy integral, is one of a number of definitions of the integral of a function. It is a generalization of the Riemann and Lebesgue integrals. It is named after Aleksandr Khinchin and Arnaud Denjoy, but is not to be confused with the (narrow) Denjoy integral.

In mathematics, the Bony–Brezis theorem, due to the French mathematicians Jean-Michel Bony and Haïm Brezis, gives necessary and sufficient conditions for a closed subset of a manifold to be invariant under the flow defined by a vector field, namely at each point of the closed set the vector field must have non-positive inner product with any exterior normal vector to the set. A vector is an exterior normal at a point of the closed set if there is a real-valued continuously differentiable function maximized locally at the point with that vector as its derivative at the point. If the closed subset is a smooth submanifold with boundary, the condition states that the vector field should not point outside the subset at boundary points. The generalization to non-smooth subsets is important in the theory of partial differential equations.
