Related Research Articles

A mainframe computer, informally called a mainframe or big iron, is a computer used primarily by large organizations for critical applications like bulk data processing for tasks such as censuses, industry and consumer statistics, enterprise resource planning, and large-scale transaction processing. A mainframe computer is large but not as large as a supercomputer and has more processing power than some other classes of computers, such as minicomputers, servers, workstations, and personal computers. Most large-scale computer-system architectures were established in the 1960s, but they continue to evolve. Mainframe computers are often used as servers.

Seymour Roger Cray was an American electrical engineer and supercomputer architect who designed a series of computers that were the fastest in the world for decades, and founded Cray Research, which built many of these machines. Called "the father of supercomputing", Cray has been credited with creating the supercomputer industry. Joel S. Birnbaum, then chief technology officer of Hewlett-Packard, said of him: "It seems impossible to exaggerate the effect he had on the industry; many of the things that high performance computers now do routinely were at the farthest edge of credibility when Seymour envisioned them." Larry Smarr, then director of the National Center for Supercomputing Applications at the University of Illinois, said that Cray is "the Thomas Edison of the supercomputing industry."

A supercomputer is a type of computer with a high level of performance as compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2017, supercomputers have existed which can perform over 10^17 FLOPS (a hundred quadrillion FLOPS, 100 petaFLOPS or 100 PFLOPS). For comparison, a desktop computer has performance in the range of hundreds of gigaFLOPS (10^11) to tens of teraFLOPS (10^13). Since November 2017, all of the world's fastest 500 supercomputers run on Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers.

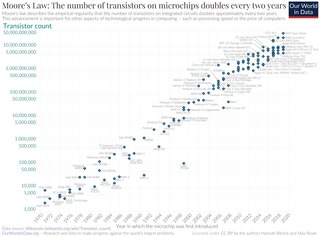

Moore's law is the observation that the number of transistors in an integrated circuit (IC) doubles about every two years. Moore's law is an observation and projection of a historical trend. Rather than a law of physics, it is an empirical relationship linked to gains from experience in production.
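The doubling trend described above can be written as a simple exponential. The following is a minimal sketch, assuming an idealized doubling period of exactly two years; the function name `projected_transistors` and the starting count are hypothetical and chosen only for illustration, not taken from historical data.

```python
def projected_transistors(initial_count: float, years: float,
                          doubling_period: float = 2.0) -> float:
    """Project a transistor count under an idealized, constant doubling period.

    Assumption: the count doubles exactly once every `doubling_period` years,
    which is a simplification of the empirical trend.
    """
    return initial_count * 2 ** (years / doubling_period)


if __name__ == "__main__":
    # Hypothetical starting point of 1,000 transistors (illustrative only).
    for elapsed in (0, 2, 10, 20):
        print(elapsed, projected_transistors(1_000, elapsed))
```

Because the relationship is empirical rather than physical, a projection like this is only as good as the assumed doubling period.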

A workstation is a special computer designed for technical or scientific applications. Workstations are intended primarily for use by a single user, and are commonly connected to a local area network and run multi-user operating systems. The term workstation has been used loosely to refer to everything from a mainframe computer terminal to a PC connected to a network, but the most common form refers to the class of hardware offered by several current and defunct companies such as Sun Microsystems, Silicon Graphics, Apollo Computer, DEC, HP, NeXT, and IBM which powered the 3D computer graphics revolution of the late 1990s.

Floating point operations per second is a measure of computer performance in computing, useful in fields of scientific computations that require floating-point calculations.

In computer science, algorithmic efficiency is a property of an algorithm which relates to the amount of computational resources used by the algorithm. Algorithmic efficiency can be thought of as analogous to engineering productivity for a repeating or continuous process.

The IBM 7030, also known as Stretch, was IBM's first transistorized supercomputer. It was the fastest computer in the world from 1961 until the first CDC 6600 became operational in 1964.

Datamation is a computer magazine that was published in print form in the United States between 1957 and 1998, and has since continued publication on the web. Datamation was previously owned by QuinStreet and acquired by TechnologyAdvice in 2020. Datamation is published as an online magazine at Datamation.com.

Minisupercomputers constituted a short-lived class of computers that emerged in the mid-1980s, characterized by the combination of vector processing and small-scale multiprocessing. As scientific computing using vector processors became more popular, the need for lower-cost systems that might be used at the departmental level instead of the corporate level created an opportunity for new computer vendors to enter the market. As a generalization, the price targets for these smaller computers were one-tenth of the larger supercomputers.

Commodity computing involves the use of large numbers of already-available computing components for parallel computing, to get the greatest amount of useful computation at low cost. It is computing done on commodity computers rather than on high-cost superminicomputers or boutique computers. Commodity computers are computer systems, manufactured by multiple vendors, that incorporate components based on open standards.

Herbert Reuben John Grosch was an early computer scientist, perhaps best known for Grosch's law, which he formulated in 1950. Grosch's Law is an aphorism that states "economy is as the square root of the speed."
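The aphorism is commonly read as saying that cost grows as the square root of speed, or equivalently that performance grows as the square of cost. The sketch below illustrates that reading only; the function names `relative_speed` and `relative_cost` are hypothetical, and the proportionality constant is assumed to be 1 so that only ratios between two machines are compared.

```python
import math


def relative_speed(cost_ratio: float) -> float:
    """Speed ratio implied by a cost ratio, assuming speed scales as cost squared."""
    return cost_ratio ** 2


def relative_cost(speed_ratio: float) -> float:
    """Cost ratio implied by a speed ratio, assuming cost scales as sqrt(speed)."""
    return math.sqrt(speed_ratio)


if __name__ == "__main__":
    # Under this reading, a machine costing twice as much is four times as fast.
    print(relative_speed(2))
    # A machine 100 times as fast costs about 10 times as much.
    print(relative_cost(100))
```

Under this reading, the cost per unit of computation falls as the inverse square root of speed, which is the "economy" the aphorism refers to.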

International Business Machines (IBM) is a multinational corporation specializing in computer technology and information technology consulting. Headquartered in Armonk, New York, the company originated from the amalgamation of various enterprises dedicated to automating routine business transactions, notably pioneering punched card-based data tabulating machines and time clocks. In 1911, these entities were unified under the umbrella of the Computing-Tabulating-Recording Company (CTR).

The history of general-purpose CPUs is a continuation of the earlier history of computing hardware.

The ACS-1 and ACS-360 are two related supercomputers designed by IBM as part of the Advanced Computing Systems project from 1961 to 1969. Although the designs were never finished and no models ever went into production, the project spawned a number of organizational techniques and architectural innovations that have since become incorporated into nearly all high-performance computers in existence today. Many of the ideas resulting from the project directly influenced the development of the IBM RS/6000 and, more recently, have contributed to the Explicitly Parallel Instruction Computing (EPIC) computing paradigm used by Intel and HP in the Itanium processors.

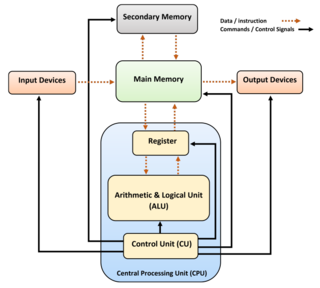

In computer science and computer engineering, computer architecture is a description of the structure of a computer system made from component parts. It can sometimes be a high-level description that ignores details of the implementation. At a more detailed level, the description may include the instruction set architecture design, microarchitecture design, logic design, and implementation.

The term in-memory processing is used for two different things:

- In computer science, in-memory processing (PIM) is a computer architecture in which data operations are available directly on the data memory, rather than having to be transferred to CPU registers first. This may improve the power usage and performance of moving data between the processor and the main memory.

- In software engineering, in-memory processing is a software architecture where a database is kept entirely in random-access memory (RAM) or flash memory so that usual accesses, in particular read or query operations, do not require access to disk storage. This may allow faster data operations such as "joins", and faster reporting and decision-making in business.

An Internet area network (IAN) is a concept for a communications network that connects voice and data endpoints within a cloud environment over IP, replacing an existing local area network (LAN), wide area network (WAN) or the public switched telephone network (PSTN).

DOME was a Dutch government-funded project between IBM and ASTRON, in the form of a public-private partnership, focusing on the Square Kilometre Array (SKA), the world's largest planned radio telescope. SKA will be built in Australia and South Africa. The objective of the DOME project was to develop a technology roadmap that applies to both SKA and IBM. The 5-year project started in 2012 and was co-funded by the Dutch government, IBM Research in Zürich, Switzerland, and ASTRON in the Netherlands. The project officially ended on 30 September 2017.

References

- Grosch, H.R.J. (1953). "High Speed Arithmetic: The Digital Computer as a Research Tool". Journal of the Optical Society of America. 43 (4): 306–310. Bibcode:1953JOSA...43..306G. doi:10.1364/JOSA.43.000306.

- Lobur, Julia; Null, Linda (2006). The Essentials of Computer Organization and Architecture. Jones & Bartlett. p. 589. ISBN 0-7637-3769-0.

- "Computers get faster than ever". Business Week. 31 August 1963. p. 28.

- Knight, Kenneth E. (September 1966). "Changes in Computer Performance" (PDF). Datamation. Vol. 12, no. 9. pp. 40–54.

- Jones, Derek (April 30, 2016). "Cost/performance analysis of 1944-1967 computers: Knight's data".

- Knight, Kenneth E. (January 1968). "Evolving Computer Performance 1963-1967" (PDF). Datamation. pp. 31–35.

- Strassmann, Paul A. (February 1997). "Will big spending on computers guarantee profitability?". Datamation. Excerpts from The Squandered Computer.

- Gardner, W. David (April 12, 2005). "Author Of Grosch's Law Going Strong At 87". TechWeb News. Archived from the original on 2006-03-26. Article discussing Grosch's Law and Herb Grosch's personal career.