The Intel 8080 ("eighty-eighty") is the second 8-bit microprocessor designed and manufactured by Intel. It first appeared in April 1974 and is an extended and enhanced variant of the earlier 8008 design, although without binary compatibility. The initial specified clock rate or frequency limit was 2 MHz, with common instructions using 4, 5, 7, 10, or 11 cycles. As a result, the processor is able to execute several hundred thousand instructions per second. Two faster variants, the 8080A-1 and 8080A-2, became available later with clock frequency limits of 3.125 MHz and 2.63 MHz respectively. The 8080 needs two support chips to function in most applications: the i8224 clock generator/driver and the i8228 bus controller. It is implemented in N-type metal–oxide–semiconductor logic (NMOS) using non-saturated enhancement mode transistors as loads thus demanding a +12 V and a −5 V voltage in addition to the main transistor–transistor logic (TTL) compatible +5 V.

The 8086 is a 16-bit microprocessor chip designed by Intel between early 1976 and June 8, 1978, when it was released. The Intel 8088, released July 1, 1979, is a slightly modified chip with an external 8-bit data bus, and is notable as the processor used in the original IBM PC design.

x86 is a family of complex instruction set computer (CISC) instruction set architectures initially developed by Intel based on the Intel 8086 microprocessor and its 8088 variant. The 8086 was introduced in 1978 as a fully 16-bit extension of Intel's 8-bit 8080 microprocessor, with memory segmentation as a solution for addressing more memory than can be covered by a plain 16-bit address. The term "x86" came into being because the names of several successors to Intel's 8086 processor end in "86", including the 80186, 80286, 80386 and 80486 processors. Colloquially, their names were "186", "286", "386" and "486".

Direct memory access (DMA) is a feature of computer systems that allows certain hardware subsystems to access main system memory independently of the central processing unit (CPU).

The Intel MCS-51 is a single chip microcontroller (MCU) series developed by Intel in 1980 for use in embedded systems. The architect of the Intel MCS-51 instruction set was John H. Wharton. Intel's original versions were popular in the 1980s and early 1990s, and enhanced binary compatible derivatives remain popular today. It is a complex instruction set computer, but also has some of the features of RISC architectures, such as a large register set and register windows, and has separate memory spaces for program instructions and data.

The Intel 8085 ("eighty-eighty-five") is an 8-bit microprocessor produced by Intel and introduced in March 1976. It is the last 8-bit microprocessor developed by Intel.

The Intel 4040 microprocessor was the successor to the Intel 4004, introduced in 1974. The 4040 employed a 10 μm silicon gate enhancement-load PMOS technology, was made up of 3,000 transistors and could execute approximately 62,000 instructions per second.

In computer architecture, 64-bit integers, memory addresses, or other data units are those that are 64 bits wide. Also, 64-bit central processing units (CPU) and arithmetic logic units (ALU) are those that are based on processor registers, address buses, or data buses of that size. A computer that uses such a processor is a 64-bit computer.

In computing, protected mode, also called protected virtual address mode, is an operational mode of x86-compatible central processing units (CPUs). It allows system software to use features such as segmentation, virtual memory, paging and safe multi-tasking designed to increase an operating system's control over application software.

PIC is a family of microcontrollers made by Microchip Technology, derived from the PIC1640 originally developed by General Instrument's Microelectronics Division. The name PIC initially referred to Peripheral Interface Controller, and is currently expanded as Programmable Intelligent Computer. The first parts of the family were available in 1976; by 2013 the company had shipped more than twelve billion individual parts, used in a wide variety of embedded systems.

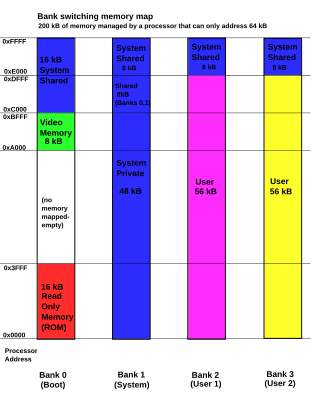

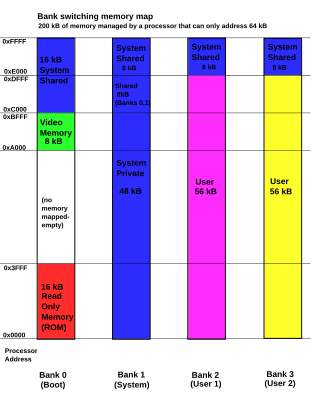

Bank switching is a technique used in computer design to increase the amount of usable memory beyond the amount directly addressable by the processor instructions. It can be used to configure a system differently at different times; for example, a ROM required to start a system from diskette could be switched out when no longer needed. In video game systems, bank switching allowed larger games to be developed for play on existing consoles.

The Z8000 is a 16-bit microprocessor introduced by Zilog in early 1979. The architecture was designed by Bernard Peuto while the logic and physical implementation was done by Masatoshi Shima, assisted by a small group of people. In contrast to most designs of the era, the Z8000 did not use microcode which allowed it to be implemented in only 17,500 transistors.

PCI configuration space is the underlying way that the Conventional PCI, PCI-X and PCI Express perform auto configuration of the cards inserted into their bus.

The Blackfin is a family of 16-/32-bit microprocessors developed, manufactured and marketed by Analog Devices. The processors have built-in, fixed-point digital signal processor (DSP) functionality performed by 16-bit multiply–accumulates (MACs), accompanied on-chip by a microcontroller. It was designed for a unified low-power processor architecture that can run operating systems while simultaneously handling complex numeric tasks such as real-time H.264 video encoding.

QEMU is a free and open-source emulator. It emulates a computer's processor through dynamic binary translation and provides a set of different hardware and device models for the machine, enabling it to run a variety of guest operating systems. It can interoperate with Kernel-based Virtual Machine (KVM) to run virtual machines at near-native speed. QEMU can also do emulation for user-level processes, allowing applications compiled for one architecture to run on another.

The Signetics 2650 was an 8-bit microprocessor introduced in July 1975. According to Adam Osborne's book An Introduction to Microprocessors Vol 2: Some Real Products, it was "the most minicomputer-like" of the microprocessors available at the time. A combination of missing features and odd memory access limited its appeal, and the system saw little use in the market.

In computing, an input–output memory management unit (IOMMU) is a memory management unit (MMU) connecting a direct-memory-access–capable (DMA-capable) I/O bus to the main memory. Like a traditional MMU, which translates CPU-visible virtual addresses to physical addresses, the IOMMU maps device-visible virtual addresses to physical addresses. Some units also provide memory protection from faulty or malicious devices.

The maximum random access memory (RAM) installed in any computer system is limited by hardware, software and economic factors. The hardware may have a limited number of address bus bits, limited by the processor package or design of the system. Some of the address space may be shared between RAM, peripherals, and read-only memory. In the case of a microcontroller with no external RAM, the size of the RAM array is limited by the size of the integrated circuit die. In a packaged system, only enough RAM may be provided for the system's required functions, with no provision for addition of memory after manufacture.

In computing, the term 3 GB barrier refers to a limitation of some 32-bit operating systems running on x86 microprocessors. It prevents the operating systems from using all of 4 GiB (4 × 10243 bytes) of main memory. The exact barrier varies by motherboard and I/O device configuration, particularly the size of video RAM; it may be in the range of 2.75 GB to 3.5 GB. The barrier is not present with a 64-bit processor and 64-bit operating system, or with certain x86 hardware and an operating system such as Linux or certain versions of Windows Server and macOS that allow use of Physical Address Extension (PAE) mode on x86 to access more than 4 GiB of RAM.

This glossary of computer hardware terms is a list of definitions of terms and concepts related to computer hardware, i.e. the physical and structural components of computers, architectural issues, and peripheral devices.