Example

The two-dimensional parity-check code, usually called the optimal rectangular code, is the most popular form of multidimensional parity-check code.

Assume that the goal is to transmit the four-digit message "1234", using a two-dimensional parity scheme. First the digits of the message are arranged in a rectangular pattern:

- 12

- 34

Parity digits are then calculated by summing each column and row separately:

- 123

- 347

- 46

The eight-digit sequence "12334746" is the message that is actually transmitted. If any single error occurs during transmission then this error can not only be detected but can also be corrected as well. Let us suppose that the received message contained an error in the first digit. The receiver rearranges the message into the grid:

- 923

- 347

- 46

The receiver can see that the first row and also the first column add up incorrectly. Using this knowledge and the assumption that only one error occurred, the receiver can correct the error. In order to handle two errors, a 4-dimensional scheme would be required, at the cost of more parity digits.

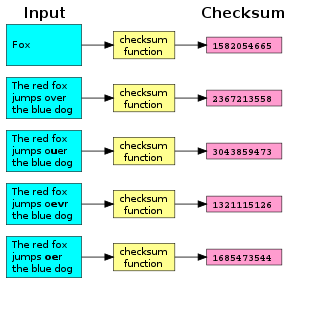

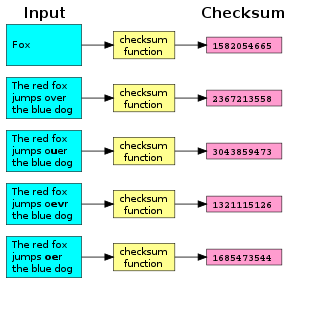

A checksum is a small-sized datum derived from a block of digital data for the purpose of detecting errors that may have been introduced during its transmission or storage. It is usually applied to an installation file after it is received from the download server. By themselves, checksums are often used to verify data integrity but are not relied upon to verify data authenticity.

In telecommunication, Hamming codes are a family of linear error-correcting codes. Hamming codes can detect up to two-bit errors or correct one-bit errors without detection of uncorrected errors. By contrast, the simple parity code cannot correct errors, and can detect only an odd number of bits in error. Hamming codes are perfect codes, that is, they achieve the highest possible rate for codes with their block length and minimum distance of three.

Richard W. Hamming invented Hamming codes in 1950 as a way of automatically correcting errors introduced by punched card readers. In his original paper, Hamming elaborated his general idea, but specifically focused on the Hamming(7,4) code which adds three parity bits to four bits of data.

In telecommunication, a longitudinal redundancy check (LRC), or horizontal redundancy check, is a form of redundancy check that is applied independently to each of a parallel group of bit streams. The data must be divided into transmission blocks, to which the additional check data is added.

Coding theory is the study of the properties of codes and their respective fitness for specific applications. Codes are used for data compression, cryptography, error detection and correction, data transmission and data storage. Codes are studied by various scientific disciplines—such as information theory, electrical engineering, mathematics, linguistics, and computer science—for the purpose of designing efficient and reliable data transmission methods. This typically involves the removal of redundancy and the correction or detection of errors in the transmitted data.

Aztec Code is a type of 2D barcode invented by Andrew Longacre, Jr. and Robert Hussey in 1995. The code was published by AIM, Inc. in 1997. Although the Aztec code was patented, that patent was officially made public domain. The aztec code is also published as ISO/IEC 24778:2008 standard.

Named after the resemblance of the central finder pattern to an Aztec pyramid, Aztec code has the potential to use less space than other matrix barcodes because it does not require a surrounding blank "quiet zone".

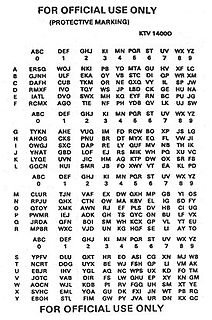

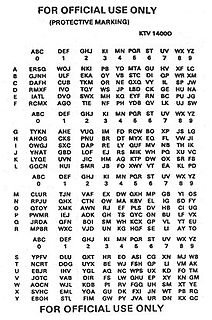

The DRYAD Numeral Cipher/Authentication System is a simple, paper cryptographic system employed by the U.S. military for authentication and for encryption of short, numerical messages. Each unit with a radio is given a set of matching DRYAD code sheets. A single sheet is valid for a limited time, called a cryptoperiod.

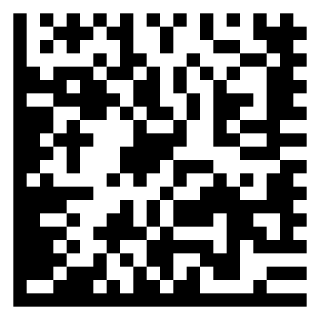

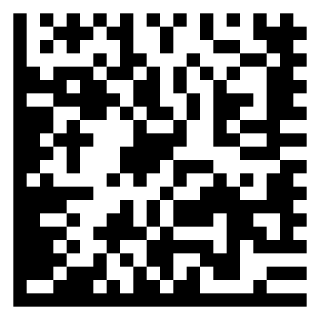

A Data Matrix is a two-dimensional barcode consisting of black and white "cells" or modules arranged in either a square or rectangular pattern, also known as a matrix. The information to be encoded can be text or numeric data. Usual data size is from a few bytes up to 1556 bytes. The length of the encoded data depends on the number of cells in the matrix. Error correction codes are often used to increase reliability: even if one or more cells are damaged so it is unreadable, the message can still be read. A Data Matrix symbol can store up to 2,335 alphanumeric characters.

In coding theory, a linear code is an error-correcting code for which any linear combination of codewords is also a codeword. Linear codes are traditionally partitioned into block codes and convolutional codes, although turbo codes can be seen as a hybrid of these two types. Linear codes allow for more efficient encoding and decoding algorithms than other codes.

In coding theory, the repetition code is one of the most basic error-correcting codes. In order to transmit a message over a noisy channel that may corrupt the transmission in a few places, the idea of the repetition code is to just repeat the message several times. The hope is that the channel corrupts only a minority of these repetitions. This way the receiver will notice that a transmission error occurred since the received data stream is not the repetition of a single message, and moreover, the receiver can recover the original message by looking at the received message in the data stream that occurs most often.

Hybrid automatic repeat request is a combination of high-rate forward error-correcting coding and ARQ error-control. In standard ARQ, redundant bits are added to data to be transmitted using an error-detecting (ED) code such as a cyclic redundancy check (CRC). Receivers detecting a corrupted message will request a new message from the sender. In Hybrid ARQ, the original data is encoded with a forward error correction (FEC) code, and the parity bits are either immediately sent along with the message or only transmitted upon request when a receiver detects an erroneous message. The ED code may be omitted when a code is used that can perform both forward error correction (FEC) in addition to error detection, such as a Reed–Solomon code. The FEC code is chosen to correct an expected subset of all errors that may occur, while the ARQ method is used as a fall-back to correct errors that are uncorrectable using only the redundancy sent in the initial transmission. As a result, hybrid ARQ performs better than ordinary ARQ in poor signal conditions, but in its simplest form this comes at the expense of significantly lower throughput in good signal conditions. There is typically a signal quality cross-over point below which simple hybrid ARQ is better, and above which basic ARQ is better.

In computing, telecommunication, information theory, and coding theory, an error correction code, sometimes error correcting code, (ECC) is used for controlling errors in data over unreliable or noisy communication channels. The central idea is the sender encodes the message with a redundant in the form of an ECC. The American mathematician Richard Hamming pioneered this field in the 1940s and invented the first error-correcting code in 1950: the Hamming (7,4) code.

The redundancy allows the receiver to detect a limited number of errors that may occur anywhere in the message, and often to correct these errors without retransmission. ECC gives the receiver the ability to correct errors without needing a reverse channel to request retransmission of data, but at the cost of a fixed, higher forward channel bandwidth. ECC is therefore applied in situations where retransmissions are costly or impossible, such as one-way communication links and when transmitting to multiple receivers in multicast. For example, in the case of a satellite orbiting around Uranus, a retransmission because of decoding errors can create a delay of 5 hours. ECC information is usually added to mass storage devices to enable recovery of corrupted data, is widely used in modems, and is used on systems where the primary memory is ECC memory.

Error-correcting code memory is a type of computer data storage that can detect and correct the most common kinds of internal data corruption. ECC memory is used in most computers where data corruption cannot be tolerated under any circumstances, such as for scientific or financial computing.

In coding theory, a generator matrix is a matrix whose rows form a basis for a linear code. The codewords are all of the linear combinations of the rows of this matrix, that is, the linear code is the row space of its generator matrix.

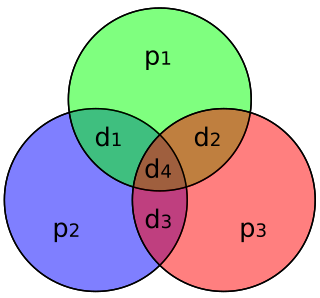

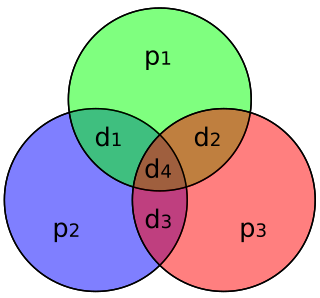

In coding theory, Hamming(7,4) is a linear error-correcting code that encodes four bits of data into seven bits by adding three parity bits. It is a member of a larger family of Hamming codes, but the term Hamming code often refers to this specific code that Richard W. Hamming introduced in 1950. At the time, Hamming worked at Bell Telephone Laboratories and was frustrated with the error-prone punched card reader, which is why he started working on error-correcting codes.

BATCO, short for Battle Code, is a hand-held, paper-based encryption system used at a low, front line level in the British Army. It was introduced along with the Clansman combat net radio in the early 1980s and was largely obsolete by 2010 due to the wide deployment of the secure Bowman radios. BATCO consists of a code, contained on a set of vocabulary cards, and cipher sheets for superencryption of the numeric code words. The cipher sheets, which are typically changed daily, also include an authentication table and a radio call sign protection system.

Sudoku codes are non-linear forward error correcting codes following rules of sudoku puzzles designed for an erasure channel. Based on this model, the transmitter sends a sequence of all symbols of a solved sudoku. The receiver either receives a symbol correctly or an erasure symbol to indicate that the symbol was not received. The decoder gets a matrix with missing entries and uses the constraints of sudoku puzzles to reconstruct a limited amount of erased symbols.