A self-organizing map (SOM) or self-organizing feature map (SOFM) is a type of artificial neural network (ANN) that is trained using unsupervised learning to produce a low-dimensional, discretized representation of the input space of the training samples, called a map, and is therefore a method to do dimensionality reduction. Self-organizing maps differ from other artificial neural networks as they apply competitive learning as opposed to error-correction learning, and in the sense that they use a neighborhood function to preserve the topological properties of the input space.

In computer science, learning vector quantization (LVQ), is a prototype-based supervised classification algorithm. LVQ is the supervised counterpart of vector quantization systems.

A neural network is a network or circuit of neurons, or in a modern sense, an artificial neural network, composed of artificial neurons or nodes. Thus a neural network is either a biological neural network, made up of biological neurons, or an artificial neural network, for solving artificial intelligence (AI) problems. The connections of the biological neuron are modeled as weights. A positive weight reflects an excitatory connection, while negative values mean inhibitory connections. All inputs are modified by a weight and summed. This activity is referred to as a linear combination. Finally, an activation function controls the amplitude of the output. For example, an acceptable range of output is usually between 0 and 1, or it could be −1 and 1.

Apache Axis is an open-source, XML based Web service framework. It consists of a Java and a C++ implementation of the SOAP server, and various utilities and APIs for generating and deploying Web service applications. Using Apache Axis, developers can create interoperable, distributed computing applications. Axis development takes place under the auspices of the Apache Software Foundation.

OpenCV is a library of programming functions mainly aimed at real-time computer vision. Originally developed by Intel, it was later supported by Willow Garage then Itseez. The library is cross-platform and free for use under the open-source Apache 2 License. Starting with 2011, OpenCV features GPU acceleration for real-time operations.

A multilayer perceptron (MLP) is a class of feedforward artificial neural network (ANN). The term MLP is used ambiguously, sometimes loosely to any feedforward ANN, sometimes strictly to refer to networks composed of multiple layers of perceptrons ; see § Terminology. Multilayer perceptrons are sometimes colloquially referred to as "vanilla" neural networks, especially when they have a single hidden layer.

Neural network software is used to simulate, research, develop, and apply artificial neural networks, software concepts adapted from biological neural networks, and in some cases, a wider array of adaptive systems such as artificial intelligence and machine learning.

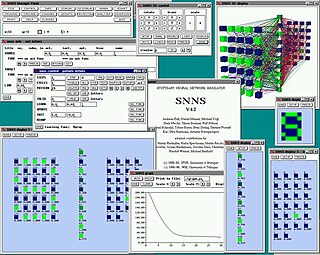

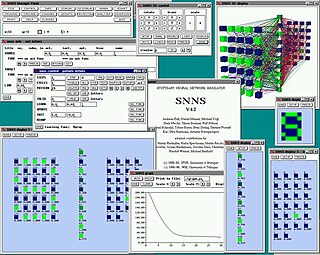

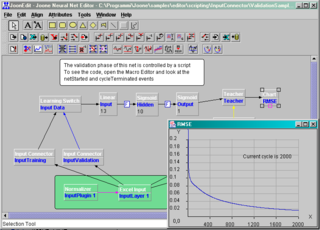

SNNS is a neural network simulator originally developed at the University of Stuttgart. While it was originally built for X11 under Unix, there are Windows ports. Its successor JavaNNS never reached the same popularity.

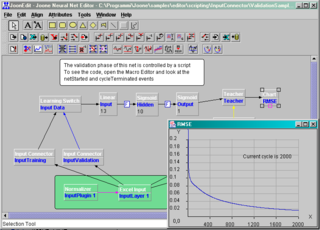

JOONE is a component based neural network framework built in Java.

GENESIS is a simulation environment for constructing realistic models of neurobiological systems at many levels of scale including: sub-cellular processes, individual neurons, networks of neurons, and neuronal systems. These simulations are “computer-based implementations of models whose primary objective is to capture what is known of the anatomical structure and physiological characteristics of the neural system of interest”. GENESIS is intended to quantify the physical framework of the nervous system in a way that allows for easy understanding of the physical structure of the nerves in question. “At present only GENESIS allows parallelized modeling of single neurons and networks on multiple-instruction-multiple-data parallel computers.” Development of GENESIS software spread from its home at Caltech to labs at the University of Texas at San Antonio, the University of Antwerp, the National Centre for Biological Sciences in Bangalore, the University of Colorado, the Pittsburgh Supercomputing Center, the San Diego Supercomputer Center, and Emory University.

Neural Lab is a free neural network simulator that designs and trains artificial neural networks for use in engineering, business, computer science and technology. It integrates with Microsoft Visual Studio using C to incorporate artificial neural networks into custom applications, research simulations or end user interfaces.

Apache Pivot is an open-source platform for building rich web applications in Java or any JVM-compatible language. It is released under the Apache License version 2.0.

Encog is a machine learning framework available for Java and .Net. Encog supports different learning algorithms such as Bayesian Networks, Hidden Markov Models and Support Vector Machines. However, its main strength lies in its neural network algorithms. Encog contains classes to create a wide variety of networks, as well as support classes to normalize and process data for these neural networks. Encog trains using many different techniques. Multithreading is used to allow optimal training performance on multicore machines.

Neural Engineering Object (Nengo) is a graphical and scripting software for simulating large-scale neural systems. As Neural network software Nengo is a tool for modelling neural networks with applications in cognitive science, psychology, Artificial Intelligence and neuroscience.

Eclipse Deeplearning4j is a programming library written in Java for the Java virtual machine (JVM). It is a framework with wide support for deep learning algorithms. Deeplearning4j includes implementations of the restricted Boltzmann machine, deep belief net, deep autoencoder, stacked denoising autoencoder and recursive neural tensor network, word2vec, doc2vec, and GloVe. These algorithms all include distributed parallel versions that integrate with Apache Hadoop and Spark.

React is a free and open-source front-end JavaScript library for building user interfaces or UI components. It is maintained by Facebook and a community of individual developers and companies. React can be used as a base in the development of single-page or mobile applications. However, React is only concerned with state management and rendering that state to the DOM, so creating React applications usually requires the use of additional libraries for routing, as well as certain client-side functionality.

spaCy is an open-source software library for advanced natural language processing, written in the programming languages Python and Cython. The library is published under the MIT license and its main developers are Matthew Honnibal and Ines Montani, the founders of the software company Explosion.

The following outline is provided as an overview of and topical guide to machine learning. Machine learning is a subfield of soft computing within computer science that evolved from the study of pattern recognition and computational learning theory in artificial intelligence. In 1959, Arthur Samuel defined machine learning as a "field of study that gives computers the ability to learn without being explicitly programmed". Machine learning explores the study and construction of algorithms that can learn from and make predictions on data. Such algorithms operate by building a model from an example training set of input observations in order to make data-driven predictions or decisions expressed as outputs, rather than following strictly static program instructions.

fast.ai is a non-profit research group focused on deep learning and artificial intelligence. It was founded in 2016 by Jeremy Howard and Rachel Thomas with the goal of democratising deep learning. They do this by providing a massive open online course (MOOC) named "Practical Deep Learning for Coders," which has no other prerequisites except for knowledge of the programming language Python.