Burnt-in timecode (BITC) is a human-readable version of the timecode for a piece of material, superimposed on the video image. BITC is sometimes used alongside "real" machine-readable timecode, but it is more often used on copies of original material made onto a non-broadcast format such as VHS, so that the copies can be traced back to their master tape and the original timecodes easily located.

Keykode is an Eastman Kodak Company advancement on edge numbers, which are letters, numbers and symbols placed at regular intervals along the edge of 35 mm and 16 mm film so that individual frames can be identified. It was introduced in 1990.

Linear timecode (LTC) is an encoding of SMPTE timecode data in an audio signal, as defined in the SMPTE 12M specification. The audio signal is commonly recorded on a VTR track or other storage medium. The bits are encoded using biphase mark code: a 0 bit has a single transition at the start of the bit period, while a 1 bit has two transitions, at the start and in the middle of the period. Because every bit period begins with a transition, the encoding is self-clocking. Each frame is terminated by a 'sync word', which has a special predefined synchronization relationship with any video or film content.
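The biphase mark rule can be sketched in a few lines. This is a minimal illustration, assuming the signal has already been sliced into two logic levels per bit period (a real LTC reader must first recover these from an analog waveform and track the bit rate); the function names are hypothetical. Because the decoder only asks whether the level changes in mid-period, it gives the same result if the signal's polarity is inverted.

```python
def biphase_mark_encode(bits, start_level=0):
    """Encode bits as biphase mark code: two half-period levels per bit."""
    level = start_level
    halves = []
    for bit in bits:
        level ^= 1            # every bit period begins with a transition
        halves.append(level)  # level during the first half of the period
        if bit:
            level ^= 1        # a 1 bit adds a second, mid-period transition
        halves.append(level)  # level during the second half of the period
    return halves


def biphase_mark_decode(halves):
    """Decode half-period levels back to bits; insensitive to polarity."""
    if len(halves) % 2:
        raise ValueError("expected an even number of half-period levels")
    bits = []
    for i in range(0, len(halves), 2):
        first, second = halves[i], halves[i + 1]
        # Self-clocking check: each bit after the first must start with a transition.
        if i and halves[i - 1] == first:
            raise ValueError(f"missing bit-boundary transition at half {i}")
        bits.append(1 if first != second else 0)
    return bits


encoded = biphase_mark_encode([0, 1, 1, 0])
print(encoded)                                        # [1, 1, 0, 1, 0, 1, 0, 0]
print(biphase_mark_decode([1 - h for h in encoded]))  # [0, 1, 1, 0] (inverted polarity)
```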

MIDI time code (MTC) carries the same timing information as standard SMPTE timecode as a series of small 'quarter-frame' MIDI messages. The standard MTC messages make no provision for user bits; SysEx messages are used to carry this information instead. The quarter-frame messages are transmitted in a sequence of eight, and since four are sent per frame, a complete timecode value is specified every two frames. If the MIDI data stream is running close to capacity, MTC data may arrive slightly late, which introduces a small amount of jitter. To avoid this, it is best to use a separate MIDI port dedicated to MTC data. Larger full-frame messages, which encapsulate a frame's worth of timecode in a single message, are used to locate to a time while timecode is not running.
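The split of one timecode value across the eight quarter-frame messages can be sketched as follows. This assumes the commonly documented MTC layout (status byte 0xF1, a data byte whose upper bits give the piece number 0 to 7 and whose low nibble carries 4 bits of the value, with the frame-rate code packed above the hours) and the universal real-time SysEx form of the full-frame message. The function names and the `RATE_CODES` table are illustrative assumptions. Since every message carries its own piece number, the decoder below does not depend on arrival order.

```python
# Frame-rate codes packed into bits 5-6 of the hours value
# (0 = 24, 1 = 25, 2 = 29.97 drop-frame, 3 = 30 frames per second).
RATE_CODES = {"24": 0, "25": 1, "29.97df": 2, "30": 3}


def quarter_frame_messages(hours, minutes, seconds, frames, rate_code):
    """Split one timecode value into the eight MTC quarter-frame messages."""
    hh = (rate_code << 5) | hours  # bits 5-6: rate code, bits 0-4: hours
    nibbles = [
        frames & 0x0F, frames >> 4,
        seconds & 0x0F, seconds >> 4,
        minutes & 0x0F, minutes >> 4,
        hh & 0x0F, hh >> 4,
    ]
    # Data byte 0nnndddd: nnn = piece number, dddd = that piece's 4 bits.
    return [bytes([0xF1, (piece << 4) | nib]) for piece, nib in enumerate(nibbles)]


def decode_quarter_frames(messages):
    """Reassemble (hours, minutes, seconds, frames, rate_code) from 8 messages."""
    nibbles = [0] * 8
    for msg in messages:
        data = msg[1]
        nibbles[data >> 4] = data & 0x0F  # piece number selects the slot
    frames = nibbles[0] | (nibbles[1] << 4)
    seconds = nibbles[2] | (nibbles[3] << 4)
    minutes = nibbles[4] | (nibbles[5] << 4)
    hh = nibbles[6] | (nibbles[7] << 4)
    return hh & 0x1F, minutes, seconds, frames, hh >> 5


def full_frame_message(hours, minutes, seconds, frames, rate_code):
    """One SysEx full-frame message, used to locate while timecode is stopped."""
    hh = (rate_code << 5) | hours
    return bytes([0xF0, 0x7F, 0x7F, 0x01, 0x01, hh, minutes, seconds, frames, 0xF7])


msgs = quarter_frame_messages(1, 2, 3, 4, RATE_CODES["25"])
print([m.hex() for m in msgs])
# ['f104', 'f110', 'f123', 'f130', 'f142', 'f150', 'f161', 'f172']
print(full_frame_message(1, 2, 3, 4, RATE_CODES["25"]).hex())  # f07f7f010121020304f7
```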

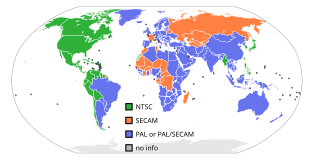

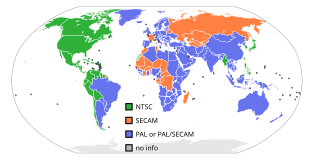

NTSC, named after the National Television System Committee, was the first American standard for analog television, published in 1941. In 1961 it was assigned the designation System M. It is also known as EIA standard 170.

Vertical interval timecode (VITC) is a form of SMPTE timecode encoded on one scan line of a video signal. Such lines are typically inserted into the vertical blanking interval of the video signal.

SMPTE timecode is a set of cooperating standards for labeling individual frames of video or film with a timecode. The system is defined by the Society of Motion Picture and Television Engineers in the SMPTE 12M specification. SMPTE revised the standard in 2008, splitting it into a two-part document, SMPTE 12M-1 and SMPTE 12M-2, and adding new explanations and clarifications.
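Because each frame is labeled with hours, minutes, seconds and frames, converting between a timecode label and a running frame count is simple arithmetic at an integer frame rate. The sketch below assumes non-drop-frame counting at a whole-number rate such as 24, 25 or 30 fps; drop-frame timecode for 29.97 fps skips certain frame numbers and is deliberately not handled. The function names are hypothetical.

```python
def timecode_to_frames(tc: str, fps: int) -> int:
    """Convert non-drop-frame HH:MM:SS:FF to a frame count from 00:00:00:00."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    if not (0 <= hh < 24 and 0 <= mm < 60 and 0 <= ss < 60 and 0 <= ff < fps):
        raise ValueError(f"invalid timecode {tc!r} at {fps} fps")
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


def frames_to_timecode(count: int, fps: int) -> str:
    """Convert a frame count back to non-drop-frame HH:MM:SS:FF, wrapping at 24 h."""
    count %= 24 * 60 * 60 * fps  # timecode rolls over at midnight
    total_seconds, ff = divmod(count, fps)
    hh, rem = divmod(total_seconds, 3600)
    mm, ss = divmod(rem, 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


print(timecode_to_frames("01:00:00:00", 25))  # 90000
print(frames_to_timecode(90001, 25))          # 01:00:00:01
print(frames_to_timecode(-1, 25))             # 23:59:59:24
```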

AES3 is a standard for the exchange of digital audio signals between professional audio devices. An AES3 signal can carry two channels of pulse-code-modulated digital audio over several transmission media including balanced lines, unbalanced lines, and optical fiber.

A clapperboard, also known as a dumb slate, clapboard, film clapper, film slate, movie slate or production slate, is a device used in filmmaking and video production to assist in synchronizing picture and sound, and to designate and mark the various scenes and takes as they are filmed and audio-recorded. It is operated by the clapper loader. It is said to have been invented by Australian filmmaker F. W. Thring. Because of its ubiquity on film sets, the clapperboard frequently appears in behind-the-scenes footage and films about filmmaking, and it has become an enduring symbol of the film industry as a whole.

Serial digital interface (SDI) is a family of digital video interfaces first standardized by SMPTE in 1989. For instance, ITU-R BT.656 and SMPTE 259M define digital video interfaces used for broadcast-grade video. A related standard, high-definition serial digital interface (HD-SDI), is specified in SMPTE 292M and provides a nominal data rate of 1.485 Gbit/s.
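As a worked check of the 1.485 Gbit/s figure, assume a 1080-line raster at 30 frames/s with 2200 total samples per line and 1125 total lines per frame (both counts including blanking), and 10-bit luma and 10-bit chroma words multiplexed so that 20 bits are sent per sample clock period:

```latex
\begin{aligned}
f_s &= 2200\ \tfrac{\text{samples}}{\text{line}} \times 1125\ \tfrac{\text{lines}}{\text{frame}} \times 30\ \tfrac{\text{frames}}{\text{s}} = 74.25 \times 10^{6}\ \tfrac{\text{samples}}{\text{s}} \\
R &= f_s \times 20\ \tfrac{\text{bits}}{\text{sample}} = 1.485 \times 10^{9}\ \text{bit/s} = 1.485\ \text{Gbit/s}
\end{aligned}
```

At the 1/1.001 rates (for example 29.97 frames/s), the same calculation gives 1.485/1.001 Gbit/s.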

Sync sound (synchronized sound) refers to sound recorded at the same time as the picture is filmed. It has been widely used in movies since the advent of sound film.

MPEG transport stream, or simply transport stream (TS), is a standard digital container format for the transmission and storage of audio, video and Program and System Information Protocol (PSIP) data. It is used in broadcast systems such as DVB, ATSC and IPTV.
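A transport stream is a sequence of fixed-size 188-byte packets, each starting with the sync byte 0x47 and a 4-byte header whose 13-bit packet identifier (PID) tells a demultiplexer which elementary stream or table the payload belongs to. The sketch below decodes only that fixed header, following the ISO/IEC 13818-1 packet layout as commonly documented; it ignores adaptation fields and payloads, and the `TsHeader` and `parse_ts_header` names are hypothetical.

```python
from dataclasses import dataclass

TS_PACKET_SIZE = 188
SYNC_BYTE = 0x47


@dataclass
class TsHeader:
    transport_error: bool
    payload_unit_start: bool
    transport_priority: bool
    pid: int
    scrambling_control: int
    adaptation_field_control: int
    continuity_counter: int


def parse_ts_header(packet: bytes) -> TsHeader:
    """Decode the fixed 4-byte header at the start of one 188-byte TS packet."""
    if len(packet) != TS_PACKET_SIZE:
        raise ValueError(f"expected {TS_PACKET_SIZE} bytes, got {len(packet)}")
    if packet[0] != SYNC_BYTE:
        raise ValueError("lost sync: first byte is not 0x47")
    b1, b2, b3 = packet[1], packet[2], packet[3]
    return TsHeader(
        transport_error=bool(b1 & 0x80),
        payload_unit_start=bool(b1 & 0x40),
        transport_priority=bool(b1 & 0x20),
        pid=((b1 & 0x1F) << 8) | b2,             # 13-bit packet identifier
        scrambling_control=(b3 >> 6) & 0x03,
        adaptation_field_control=(b3 >> 4) & 0x03,
        continuity_counter=b3 & 0x0F,           # increments per packet on a PID
    )


# A null (stuffing) packet uses PID 0x1FFF.
null_packet = bytes([0x47, 0x1F, 0xFF, 0x10]) + bytes([0xFF]) * 184
print(hex(parse_ts_header(null_packet).pid))  # 0x1fff
```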

In filmmaking, dailies or rushes are the raw, unedited footage shot during the making of a motion picture. The term "dailies" dates from the era when all movies were shot on film: usually at the end of each day, the footage was developed, synced to sound, and printed on film in a batch for viewing the next day by the director, selected actors and members of the film crew. With digital filmmaking, dailies became available immediately after each take, and the review process, no longer tied to overnight film processing, became more asynchronous. Some review may now take place at the shoot, even on location, and raw footage can be sent electronically to anyone in the world who needs to review the takes. For example, a director can review takes from a second unit while the crew is still on location, or producers can get timely updates while travelling. Dailies indicate how the filming and the actors' performances are progressing. The term has also been defined as "the first positive prints made by the laboratory from the negative photographed on the previous day".

Negative cutting is the process of cutting motion picture negative to match precisely the final edit as specified by the film editor. Original camera negative (OCN) is cut with scissors and joined using a film splicer and film cement. Negative cutting is part of the post-production process and occurs after editing and before the striking of internegatives and release prints. The process has changed little since the beginning of cinema in the early 20th century. In the early 1980s, computer software was first used to aid the cutting process. Kodak introduced barcodes on motion picture negative in the mid-1990s, which made it easier for negative cutters to track shots and identify film sections by their Keykode.

SMPTE 292 is a digital video transmission line standard published by the Society of Motion Picture and Television Engineers (SMPTE). This technical standard is usually referred to as HD-SDI; it is part of a family of standards that define a Serial Digital Interface based on a coaxial cable, intended to be used for transport of uncompressed digital video and audio in a television studio environment.

Control track longitudinal timecode (CTL timecode), developed by JVC in the early 1990s, is a technique for embedding, or striping, reference SMPTE timecode onto the control track of a videotape.

Rewriteable Consumer Timecode (RCTC, or RC timecode) is a nearly frame-accurate timecode method developed by Sony for the 8mm and Hi8 analog tape formats. RC timecode tags each frame of video recorded to tape with its hour, minute, second and frame number. Officially, RCTC is accurate to within ±2 to 5 frames. RC timecode can be used together with the datacode to record the date and time. The datacode and RC timecode are written between the video and PCM audio tracks. RC timecode may be added to any 8mm tape without altering the information already on it, and it is invisible to machines not equipped to read it.

Audio-to-video synchronization refers to the relative timing of audio (sound) and video (image) parts during creation, post-production (mixing), transmission, reception and play-back processing. AV synchronization can be an issue in television, videoconferencing, or film.


In filmmaking and video production, shot logging is the process by which shoot metadata is captured during a film or video shoot. During the shoot, the camera assistant typically logs the start and end timecodes of shots, and the data generated is sent on to the editorial department for use in referencing those shots. At the same time, information such as scene/slate number, camera ID and take is noted. Where there are other technical systems producing metadata, their timecodes and settings are also noted.
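A shot log is essentially a list of records, one per take, carrying the metadata listed above. The sketch below shows one way such records might be modeled and handed to editorial as CSV with a computed duration. The field names, the default 25 fps rate, the CSV handoff format and the `tc_to_frames` helper are all hypothetical, and the logged end timecode is assumed to be exclusive (the first frame after the shot), with midnight rollover not handled.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


def tc_to_frames(tc: str, fps: int) -> int:
    """Hypothetical helper: non-drop-frame HH:MM:SS:FF -> frame count."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


@dataclass
class ShotLogEntry:
    """One logged shot; field names are illustrative, not a standard schema."""
    scene: str
    take: int
    camera_id: str
    start_tc: str
    end_tc: str  # assumed exclusive: the first frame after the shot
    fps: int = 25
    notes: str = ""

    def duration_frames(self) -> int:
        start = tc_to_frames(self.start_tc, self.fps)
        end = tc_to_frames(self.end_tc, self.fps)
        if end < start:
            raise ValueError("end precedes start (midnight rollover not handled)")
        return end - start


def log_to_csv(entries) -> str:
    """Serialize log entries, plus a computed duration column, for editorial."""
    columns = [f.name for f in fields(ShotLogEntry)] + ["duration_frames"]
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=columns)
    writer.writeheader()
    for entry in entries:
        row = asdict(entry)
        row["duration_frames"] = entry.duration_frames()
        writer.writerow(row)
    return buf.getvalue()


log = [
    ShotLogEntry("12A", 3, "A", "10:15:00:00", "10:15:42:12"),
    ShotLogEntry("12A", 4, "A", "10:17:05:00", "10:17:50:00", notes="boom in frame"),
]
print(log_to_csv(log))
```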