Autocorrelation, sometimes known as serial correlation in the discrete-time case, is the correlation of a signal with a delayed copy of itself as a function of delay. Informally, it is the similarity between observations of a random variable as a function of the time lag between them. The analysis of autocorrelation is a mathematical tool for finding repeating patterns, such as the presence of a periodic signal obscured by noise, or identifying the missing fundamental frequency in a signal implied by its harmonic frequencies. It is often used in signal processing for analyzing functions or series of values, such as time-domain signals.
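As an illustration of finding a periodic signal obscured by noise, the following is a minimal sketch that estimates the sample autocorrelation of a noisy sine wave and looks for the lag with the strongest correlation. The function name `autocorrelation`, the period of 25 samples, the noise level, and the search window of lags 10 to 40 are all assumptions chosen for this example, and the biased, mean-centered estimator used here is only one of several common conventions.

```python
import math
import random


def autocorrelation(x, max_lag):
    """Biased sample autocorrelation for lags 0..max_lag, scaled so r[0] == 1."""
    n = len(x)
    mean = sum(x) / n
    centered = [v - mean for v in x]
    energy = sum(v * v for v in centered)  # zero-lag value before scaling
    return [
        sum(centered[t] * centered[t + lag] for t in range(n - lag)) / energy
        for lag in range(max_lag + 1)
    ]


# Hypothetical test signal: a sine wave with a period of 25 samples plus noise.
rng = random.Random(0)
period = 25
signal = [
    math.sin(2 * math.pi * t / period) + rng.gauss(0.0, 0.5)
    for t in range(1000)
]

r = autocorrelation(signal, max_lag=40)

# Skip the small lags near the trivial zero-lag peak, then take the largest value.
best_lag = max(range(10, 41), key=lambda lag: r[lag])
print("strongest correlation at lag", best_lag)
```

The lag reported should fall at or near the true period, because the sine wave correlates with itself again after one full cycle while the noise does not.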

In mathematics, more specifically in functional analysis, a Banach space is a complete normed vector space. Thus, a Banach space is a vector space with a metric that allows the computation of vector length and distance between vectors and is complete in the sense that a Cauchy sequence of vectors always converges to a well-defined limit that is within the space.

In complex analysis, an entire function, also called an integral function, is a complex-valued function that is holomorphic on the whole complex plane. Typical examples of entire functions are polynomials and the exponential function, and any finite sums, products and compositions of these, such as the trigonometric functions sine and cosine and their hyperbolic counterparts sinh and cosh, as well as derivatives and integrals of entire functions such as the error function. If an entire function f(z) has a root at w, then f(z)/(z − w), taking the limit value at w, is an entire function. On the other hand, the natural logarithm, the reciprocal function, and the square root are all not entire functions, nor can they be continued analytically to an entire function.

In mathematics, a topological space is, roughly speaking, a geometrical space in which closeness is defined but cannot necessarily be measured by a numeric distance. More specifically, a topological space is a set whose elements are called points, along with an additional structure called a topology, which can be defined as a set of neighbourhoods for each point that satisfy some axioms formalizing the concept of closeness. There are several equivalent definitions of a topology, the most commonly used of which is the definition through open sets, which is easier than the others to manipulate.

In mathematics, particularly in complex analysis, a Riemann surface is a connected one-dimensional complex manifold. These surfaces were first studied by and are named after Bernhard Riemann. Riemann surfaces can be thought of as deformed versions of the complex plane: locally near every point they look like patches of the complex plane, but the global topology can be quite different. For example, they can look like a sphere or a torus or several sheets glued together.

An instanton is a notion appearing in theoretical and mathematical physics. An instanton is a classical solution to equations of motion with a finite, non-zero action, either in quantum mechanics or in quantum field theory. More precisely, it is a solution to the equations of motion of the classical field theory on a Euclidean spacetime.

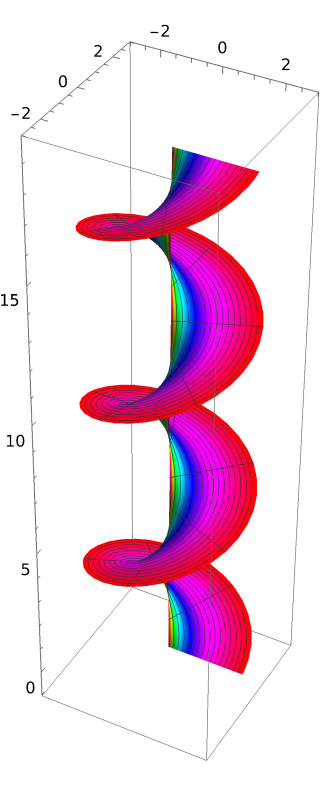

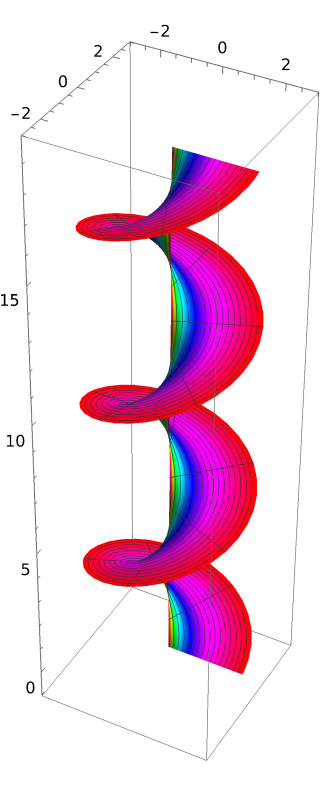

In mathematics, a foliation is an equivalence relation on an n-manifold, the equivalence classes being connected, injectively immersed submanifolds, all of the same dimension p, modeled on the decomposition of the real coordinate space Rⁿ into the cosets x + Rᵖ of the standardly embedded subspace Rᵖ. The equivalence classes are called the leaves of the foliation. If the manifold and/or the submanifolds are required to have a piecewise-linear, differentiable, or analytic structure then one defines piecewise-linear, differentiable, or analytic foliations, respectively. In the most important case of differentiable foliation of class Cʳ it is usually understood that r ≥ 1. The number p is called the dimension of the foliation and q = n − p is called its codimension.

In mathematics, more specifically in topology, an open map is a function between two topological spaces that maps open sets to open sets. That is, a function f : X → Y is open if for any open set U in X, the image f(U) is open in Y. Likewise, a closed map is a function that maps closed sets to closed sets. A map may be open, closed, both, or neither; in particular, an open map need not be closed and vice versa.

In mathematics, the Weierstrass elliptic functions are elliptic functions that take a particularly simple form. They are named for Karl Weierstrass. This class of functions is also referred to as ℘-functions, and they are usually denoted by the symbol ℘, a uniquely fancy script p. They play an important role in the theory of elliptic functions, i.e., meromorphic functions that are doubly periodic. A ℘-function together with its derivative can be used to parameterize elliptic curves, and together they generate the field of elliptic functions with respect to a given period lattice.

In mathematics, Hodge theory, named after W. V. D. Hodge, is a method for studying the cohomology groups of a smooth manifold M using partial differential equations. The key observation is that, given a Riemannian metric on M, every cohomology class has a canonical representative, a differential form that vanishes under the Laplacian operator of the metric. Such forms are called harmonic.

In mathematics and signal processing, the Hilbert transform is a specific singular integral that takes a function u(t) of a real variable and produces another function of a real variable, H(u)(t). The Hilbert transform is given by the Cauchy principal value of the convolution with the function 1/(πt). The Hilbert transform has a particularly simple representation in the frequency domain: it imparts a phase shift of ±90° (π/2 radians) to every frequency component of a function, the sign of the shift depending on the sign of the frequency. The Hilbert transform is important in signal processing, where it is a component of the analytic representation of a real-valued signal u(t). The Hilbert transform was first introduced by David Hilbert in this setting, to solve a special case of the Riemann–Hilbert problem for analytic functions.
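The frequency-domain description can be made concrete with a discrete, periodic approximation. The sketch below is an illustration under stated assumptions rather than a reference implementation: it uses a naive O(n²) discrete Fourier transform from the standard library, adopts the common convention that positive frequencies are multiplied by −i and negative frequencies by +i, and sets the zero-frequency and Nyquist bins to zero. The function names `dft`, `idft`, and `hilbert_transform` are hypothetical.

```python
import cmath
import math


def dft(x):
    """Naive discrete Fourier transform (fine for short example signals)."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def idft(X):
    """Naive inverse discrete Fourier transform."""
    n = len(X)
    return [
        sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
        for t in range(n)
    ]


def hilbert_transform(u):
    """Discrete Hilbert transform of a real sequence via a 90-degree phase shift."""
    n = len(u)
    shifted = []
    for k, coeff in enumerate(dft(u)):
        if k == 0 or (n % 2 == 0 and k == n // 2):
            shifted.append(0.0)          # zero-frequency and Nyquist bins carry no sign
        elif k < n / 2:
            shifted.append(-1j * coeff)  # positive frequencies: shift by -90 degrees
        else:
            shifted.append(1j * coeff)   # negative frequencies: shift by +90 degrees
    return [v.real for v in idft(shifted)]


# Under this convention a cosine is mapped to a sine of the same frequency.
n = 64
u = [math.cos(2 * math.pi * 4 * t / n) for t in range(n)]
h = hilbert_transform(u)
max_error = max(abs(h[t] - math.sin(2 * math.pi * 4 * t / n)) for t in range(n))
print("largest deviation from sin:", max_error)
```

The analytic representation mentioned above would then be the complex sequence `u[t] + 1j * h[t]`, whose magnitude gives the envelope of the signal.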

In signal processing, cross-correlation is a measure of similarity of two series as a function of the displacement of one relative to the other. This is also known as a sliding dot product or sliding inner-product. It is commonly used for searching a long signal for a shorter, known feature. It has applications in pattern recognition, single particle analysis, electron tomography, averaging, cryptanalysis, and neurophysiology. The cross-correlation is similar in nature to the convolution of two functions. In an autocorrelation, which is the cross-correlation of a signal with itself, there will always be a peak at a lag of zero, and its size will be the signal energy.
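The "sliding dot product" description translates almost directly into code. The following minimal sketch searches a longer sequence for a shorter, known feature; the function name `cross_correlate` and the sample values are assumptions made for this example. It computes only the offsets at which the template fits entirely inside the signal, and it uses the raw, unnormalized dot product, which can favor high-amplitude regions in real data.

```python
def cross_correlate(signal, template):
    """Dot product of the template with each same-length window of the signal."""
    m = len(template)
    return [
        sum(signal[start + i] * template[i] for i in range(m))
        for start in range(len(signal) - m + 1)
    ]


# Hypothetical data: the feature [2, 3, 2] is embedded at offset 4.
signal = [0, 1, 0, -1, 2, 3, 2, -1, 0, 1, 0]
template = [2, 3, 2]

scores = cross_correlate(signal, template)
best_offset = max(range(len(scores)), key=lambda k: scores[k])
print(scores)       # [3, 0, 1, 10, 17, 10, 1, 0, 3]
print(best_offset)  # 4

# Correlating the template with itself at zero lag gives its energy.
energy = sum(v * v for v in template)
print(cross_correlate(template, template) == [energy])  # True
```

The last line reflects the remark about autocorrelation: at a lag of zero the sliding dot product reduces to the sum of squared samples, which is the signal energy.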

In system analysis, among other fields of study, a linear time-invariant (LTI) system is a system that produces an output signal from any input signal subject to the constraints of linearity and time-invariance; these terms are briefly defined in the overview below. These properties apply (exactly or approximately) to many important physical systems, in which case the response y(t) of the system to an arbitrary input x(t) can be found directly using convolution: y(t) = (x ∗ h)(t) where h(t) is called the system's impulse response and ∗ represents convolution (not to be confused with multiplication). What's more, there are systematic methods for solving any such system (determining h(t)), whereas systems not meeting both properties are generally more difficult (or impossible) to solve analytically. A good example of an LTI system is any electrical circuit consisting of resistors, capacitors, inductors and linear amplifiers.
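A discrete-time analogue of y(t) = (x ∗ h)(t) can be sketched in a few lines. The example below is an illustration only: the function name `convolve`, the impulse response `h`, and the input `x` are hypothetical values chosen for the demonstration, and finite sequences stand in for the continuous signals discussed above. It checks the two defining properties numerically: delaying the input delays the output by the same amount, and a weighted sum of inputs produces the same weighted sum of outputs.

```python
def convolve(x, h):
    """Full discrete convolution of two finite sequences."""
    y = [0.0] * (len(x) + len(h) - 1)
    for i, xi in enumerate(x):
        for j, hj in enumerate(h):
            y[i + j] += xi * hj
    return y


def close(a, b, tol=1e-12):
    """Element-wise comparison of two equal-length sequences."""
    return len(a) == len(b) and all(abs(p - q) <= tol for p, q in zip(a, b))


h = [0.5, 0.3, 0.2]        # hypothetical impulse response
x = [1.0, 2.0, 0.0, -1.0]  # hypothetical input
y = convolve(x, h)

# Feeding in a unit impulse returns the impulse response itself.
print(close(convolve([1.0], h), h))

# Time invariance: delaying the input by one sample delays the output by one sample.
print(close(convolve([0.0] + x, h), [0.0] + y))

# Linearity: the response to 2*x + 3*x2 equals 2*y + 3*y2.
x2 = [0.0, 1.0, 1.0, 0.0]
y2 = convolve(x2, h)
combined = convolve([2 * a + 3 * b for a, b in zip(x, x2)], h)
print(close(combined, [2 * a + 3 * b for a, b in zip(y, y2)]))
```

All three checks should print `True` for any choice of finite `x`, `x2`, and `h`, since convolution with a fixed sequence is itself a linear, shift-invariant operation.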

In general topology and related areas of mathematics, the final topology on a set X with respect to a family of functions from topological spaces into X is the finest topology on X that makes all those functions continuous.

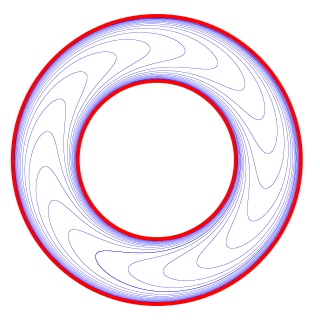

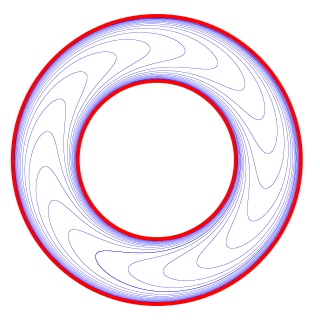

In mathematics, a complex torus is a particular kind of complex manifold M whose underlying smooth manifold is a torus in the usual sense (that is, the Cartesian product of some number N of circles). Here N must be the even number 2n, where n is the complex dimension of M.

In mathematics, in the area of complex analysis, Carlson's theorem is a uniqueness theorem which was discovered by Fritz David Carlson. Informally, it states that two different analytic functions which do not grow very fast at infinity cannot coincide at the integers. The theorem may be obtained from the Phragmén–Lindelöf theorem, which is itself an extension of the maximum-modulus theorem.

In mathematics, in the area of complex analysis, Nachbin's theorem is a result used to establish bounds on the growth rates for analytic functions. In particular, Nachbin's theorem may be used to give the domain of convergence of the generalized Borel transform, also called Nachbin summation.

In complex analysis, a branch of mathematics, a holomorphic function is said to be of exponential type C if its growth is bounded by the exponential function e^(C|z|) for some real-valued constant C as |z| → ∞. When a function is bounded in this way, it is then possible to express it as certain kinds of convergent summations over a series of other complex functions, and to understand when it is possible to apply techniques such as Borel summation, or, for example, to apply the Mellin transform, or to perform approximations using the Euler–Maclaurin formula. The general case is handled by Nachbin's theorem, which defines the analogous notion of Ψ-type for a general function Ψ(t) as opposed to e^t.

In complex analysis, given initial data consisting of n points λ₁, …, λₙ in the complex unit disc D and target data consisting of n points z₁, …, zₙ in D, the Nevanlinna–Pick interpolation problem is to find a holomorphic function φ that interpolates the data, that is, φ(λᵢ) = zᵢ for all i ∈ {1, …, n}, subject to the constraint |φ(λ)| ≤ 1 for all λ ∈ D.

In mathematics, a complex Lie algebra is a Lie algebra over the complex numbers.