A colocation centre (also called a "carrier hotel") is a type of data centre where equipment, space, and bandwidth are available for rental to retail customers. Colocation facilities provide space, power, cooling, and physical security for the server, storage, and networking equipment of other firms, and connect them to a variety of telecommunications and network service providers with a minimum of cost and complexity.

A data center or data centre is a building, dedicated space within a building, or a group of buildings used to house computer systems and associated components, such as telecommunications and storage systems.

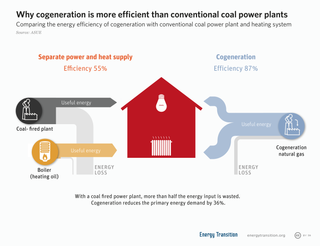

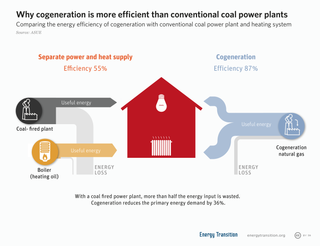

Cogeneration, or combined heat and power (CHP), is the use of a heat engine or power station to generate electricity and useful heat at the same time. Trigeneration, or combined cooling, heat and power (CCHP), refers to the simultaneous generation of electricity and useful heating and cooling from the combustion of a fuel or from a solar heat collector. The terms cogeneration and trigeneration can also be applied to power systems that simultaneously generate electricity, heat, and industrial chemicals, such as syngas or pure hydrogen.
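
The benefit of producing electricity and useful heat from the same fuel is usually expressed as an overall (total) efficiency: electrical output plus useful heat output, divided by the fuel energy input. The Python sketch below computes this; the function name `chp_efficiencies` and the plant figures are illustrative assumptions, not data for any real installation.

```python
def chp_efficiencies(fuel_input_kw: float, electric_output_kw: float,
                     useful_heat_kw: float) -> dict:
    """Return the electrical, thermal, and overall efficiency of a CHP unit.

    All arguments are energy rates in the same unit (kW here); the fuel input
    is the energy content of the fuel consumed per unit time.
    """
    if fuel_input_kw <= 0:
        raise ValueError("fuel input must be positive")
    electrical = electric_output_kw / fuel_input_kw
    thermal = useful_heat_kw / fuel_input_kw
    # Overall efficiency credits both useful products of the same fuel.
    return {"electrical": electrical, "thermal": thermal, "overall": electrical + thermal}


# Hypothetical figures: 1000 kW of fuel yielding 350 kW of electricity
# and 450 kW of recovered heat.
eff = chp_efficiencies(1000.0, 350.0, 450.0)
for name, value in eff.items():
    print(f"{name:>10}: {value:.0%}")
```

With these assumed numbers the overall efficiency is 80%, against 35% if the heat were simply rejected, which is the motivation for cogeneration.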

NetApp, Inc. is a hybrid cloud data services and data management company headquartered in Sunnyvale, California. It has ranked in the Fortune 500 since 2012. Founded in 1992 with an IPO in 1995, NetApp offers hybrid cloud data services for management of applications and data across cloud and on-premises environments.

The Greek Research and Technology Network (GRNET) is the national research and education network of Greece. GRNET S.A. provides Internet connectivity, high-quality e-infrastructures, and advanced services to the Greek educational, academic, and research community, aiming to minimize the digital divide and to ensure the equal participation of its members in the global knowledge society. GRNET also develops digital applications that optimize resource use for the Greek State, modernize public functional structures and procedures, and introduce new models of cooperation between public bodies, research and education communities, citizens, and businesses. GRNET's executives have contributed to, or held board positions in, organisations including GÉANT, TERENA, DANTE, GR-IX, Euro-IX, and RIPE NCC. GRNET provides advanced services to the education, research, health, and culture sectors. It supports all universities, Technological Education Institutes, and research centers, as well as over 9,500 schools via the Greek School Network, serving a population of more than one million people. Video presentations of some of the services are available in Pyxida.

The National Energy Research Scientific Computing Center (NERSC) is a high-performance computing (supercomputer) user facility operated by Lawrence Berkeley National Laboratory for the United States Department of Energy Office of Science. As the mission computing center for the Office of Science, NERSC houses high-performance computing and data systems used by 7,000 scientists at national laboratories and universities around the country. NERSC's newest and largest supercomputer is Cori, which was ranked 5th on the TOP500 list of the world's fastest supercomputers in November 2016. NERSC is located on the main Berkeley Lab campus in Berkeley, California.

A server room is a room, usually air-conditioned, devoted to the continuous operation of computer servers. An entire building or station devoted to this purpose is a data center.

Dynamic Infrastructure is an information technology concept related to the design of data centers, whereby the underlying hardware and software can respond dynamically and more efficiently to changing levels of demand. In other words, data center assets such as storage and processing power can be provisioned to meet surges in users' needs. The concept has also been referred to as Infrastructure 2.0 and Next Generation Data Center.
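
One simple way to picture capacity that follows demand is a target-utilization rule: keep enough servers running that each stays below a chosen fraction of its capacity. The sketch below is a hypothetical illustration of that idea, not a description of any particular Dynamic Infrastructure product; `servers_needed`, the 400 requests-per-second server capacity, the 75% target, and the demand trace are all assumed values.

```python
import math


def servers_needed(demand_rps: float, capacity_per_server_rps: float,
                   target_utilization: float = 0.75, min_servers: int = 1) -> int:
    """Servers to run so that each stays at or below target_utilization of its capacity."""
    if capacity_per_server_rps <= 0:
        raise ValueError("per-server capacity must be positive")
    if not 0 < target_utilization <= 1:
        raise ValueError("target utilization must be in (0, 1]")
    usable_per_server = capacity_per_server_rps * target_utilization
    # Round up so the fleet always covers the demand; never drop below the floor.
    return max(min_servers, math.ceil(demand_rps / usable_per_server))


# Hypothetical demand trace (requests per second) containing a surge.
demand_trace = [200, 450, 1200, 3000, 800]
plan = [servers_needed(d, capacity_per_server_rps=400) for d in demand_trace]
for demand, servers in zip(demand_trace, plan):
    print(f"demand {demand:>5} req/s -> {servers} server(s)")
```

The rule scales out during the surge and back in afterwards. Practical systems usually add a cooldown or hysteresis so that capacity does not oscillate when demand hovers near a threshold.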

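Power usage effectiveness (PUE) is a ratio that describes how efficiently a computer data center uses energy; specifically, how much of its energy is used by the computing equipment rather than by cooling and other overhead. It is defined as the total energy used by the facility divided by the energy delivered to the IT equipment, so the ideal value is 1.0.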
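
Because PUE is a simple ratio, it can be computed directly from metered energy over the same period. The sketch below, with hypothetical function names and made-up meter readings, also derives its reciprocal, DCiE (data center infrastructure efficiency), and the share of energy consumed by non-IT overhead.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness = total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy includes the IT load, so it cannot be smaller")
    return total_facility_kwh / it_equipment_kwh


def dcie(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """DCiE is the reciprocal of PUE, usually quoted as a percentage."""
    return 1.0 / pue(total_facility_kwh, it_equipment_kwh)


# Hypothetical monthly meter readings, not from any real facility.
total_kwh = 1_500_000.0
it_kwh = 1_000_000.0

ratio = pue(total_kwh, it_kwh)
overhead_share = 1.0 - 1.0 / ratio  # fraction spent on cooling, power conversion, lighting, etc.
print(f"PUE = {ratio:.2f}, DCiE = {dcie(total_kwh, it_kwh):.1%}, overhead = {overhead_share:.1%}")
```

A PUE of 1.5 means that for every kilowatt-hour delivered to the IT equipment, another 0.5 kWh goes to overhead.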

The BDR Thermea Group is a European manufacturer of domestic and industrial heating appliances. The firm was created by the merger of Baxi and De Dietrich Remeha in 2009. Based in Apeldoorn, the Netherlands, BDR Thermea provides heating and hot water products for the UK, France, Germany, Spain, the Netherlands, and Italy, and has strong positions in the rapidly growing markets of Eastern Europe, Turkey, Russia, North America, and China. In total, BDR Thermea operates in more than 70 countries worldwide.

The HP Performance Optimized Datacenter (POD) is a range of three modular data centers manufactured by HP.

iDataCool is a high-performance computer cluster based on a modified IBM System x iDataPlex. The cluster serves as a research platform for cooling of IT equipment with hot water and efficient reuse of the waste heat. The project is carried out by the physics department of the University of Regensburg in collaboration with the IBM Research and Development Laboratory Böblingen and InvenSor. It is funded by the German Research Foundation (DFG), the German state of Bavaria, and IBM.
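
The heat that a liquid cooling loop carries away, and hence the heat available for reuse, can be estimated from the coolant's mass flow rate and temperature rise, Q = ṁ·c_p·ΔT. The sketch below applies this to water; the flow rate and temperatures are illustrative assumptions, not iDataCool's operating parameters, and c_p is taken as constant at about 4.18 kJ/(kg·K).

```python
WATER_CP_KJ_PER_KG_K = 4.18  # approximate specific heat of liquid water, assumed constant


def heat_removed_kw(mass_flow_kg_s: float, inlet_c: float, outlet_c: float,
                    cp_kj_per_kg_k: float = WATER_CP_KJ_PER_KG_K) -> float:
    """Steady-state heat picked up by the coolant: Q = m_dot * c_p * (T_out - T_in), in kW."""
    if mass_flow_kg_s < 0:
        raise ValueError("mass flow must be non-negative")
    # kg/s * kJ/(kg*K) * K = kJ/s = kW
    return mass_flow_kg_s * cp_kj_per_kg_k * (outlet_c - inlet_c)


# Hypothetical loop: 0.5 kg/s of water warmed from 60 °C to 65 °C by the servers.
q = heat_removed_kw(0.5, 60.0, 65.0)
print(f"{q:.2f} kW of heat available for reuse")
```

The higher the outlet temperature, the more uses the captured heat has, for example building heating or driving an adsorption chiller.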

The NCAR-Wyoming Supercomputing Center (NWSC) is a high-performance computing (HPC) and data archival facility located in Cheyenne, Wyoming that provides advanced computing services to researchers in the Earth system sciences.

Kubernetes is an open-source container orchestration system for automating application deployment, scaling, and management. It was originally designed by Google and is now maintained by the Cloud Native Computing Foundation. It aims to provide a "platform for automating deployment, scaling, and operations of application containers across clusters of hosts". It works with a range of container tools, including Docker. Many cloud services offer a Kubernetes-based platform or infrastructure as a service on which Kubernetes can be deployed as a platform-providing service, and many vendors also provide their own branded Kubernetes distributions.
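
Kubernetes is driven by declarative manifests: the user describes the desired state, such as how many replicas of a container should run, and the control plane works to maintain it. The Python sketch below builds a minimal `apps/v1` Deployment manifest as a plain dictionary and serializes it to JSON, which `kubectl apply -f` accepts as well as YAML. The helper `deployment_manifest`, the name `web`, the `nginx:1.25` image, and the replica count are illustrative choices.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 3,
                        container_port: int = 80) -> dict:
    """Build a minimal apps/v1 Deployment describing the desired state."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,  # desired number of identical pods
            # The selector must match the pod template's labels, or the API server rejects it.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": container_port}],
                    }]
                },
            },
        },
    }


manifest = deployment_manifest("web", "nginx:1.25")
print(json.dumps(manifest, indent=2))
```

Saved to a file such as `web.json`, the output could be applied with `kubectl apply -f web.json`. Changing `replicas` and re-applying, or running `kubectl scale deployment web --replicas=5`, asks Kubernetes to converge on the new count.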

Mirantis Inc. is a B2B cloud computing services company based in Campbell, California. It focuses on the development and support of Kubernetes and OpenStack. The company was founded in 1999 by Alex Freedland and Boris Renski. It was one of the founding members of the OpenStack Foundation, a non-profit corporate entity established in September 2012 to promote OpenStack software and its community.

Temperature chaining is also known as thermal or energy chaining, or as temperature, thermal, or energy cascading.

TiDB is an open-source NewSQL database that supports hybrid transactional and analytical processing (HTAP) workloads. It is MySQL-compatible and can provide horizontal scalability, strong consistency, and high availability. It is developed and supported primarily by PingCAP, Inc. and is licensed under the Apache License 2.0. TiDB drew its initial design inspiration from Google's Spanner and F1 papers.