In mathematics and computer programming, exponentiating by squaring is a general method for fast computation of large positive integer powers of a number, or more generally of an element of a semigroup, like a polynomial or a square matrix. Some variants are commonly referred to as square-and-multiply algorithms or binary exponentiation. These can be of quite general use, for example in modular arithmetic or powering of matrices. For semigroups for which additive notation is commonly used, like elliptic curves used in cryptography, this method is also referred to as double-and-add.

In mathematics, the Euclidean algorithm, or Euclid's algorithm, is an efficient method for computing the greatest common divisor (GCD) of two integers (numbers), the largest number that divides them both without a remainder. It is named after the ancient Greek mathematician Euclid, who first described it in his Elements . It is an example of an algorithm, a step-by-step procedure for performing a calculation according to well-defined rules, and is one of the oldest algorithms in common use. It can be used to reduce fractions to their simplest form, and is a part of many other number-theoretic and cryptographic calculations.

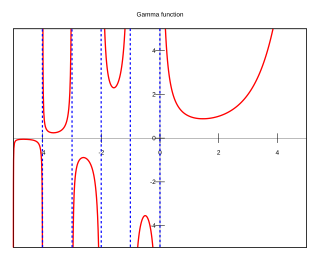

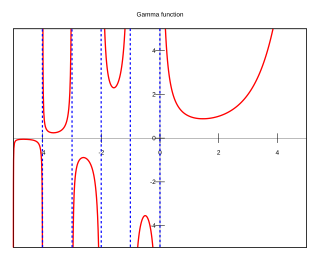

In mathematics, the gamma function is one commonly used extension of the factorial function to complex numbers. The gamma function is defined for all complex numbers except the non-positive integers. For every positive integer n,

In mathematics, the greatest common divisor (GCD) of two or more integers, which are not all zero, is the largest positive integer that divides each of the integers. For two integers x, y, the greatest common divisor of x and y is denoted . For example, the GCD of 8 and 12 is 4, that is, gcd(8, 12) = 4.

Multiplication is one of the four elementary mathematical operations of arithmetic, with the other ones being addition, subtraction, and division. The result of a multiplication operation is called a product.

A multiplication algorithm is an algorithm to multiply two numbers. Depending on the size of the numbers, different algorithms are more efficient than others. Efficient multiplication algorithms have existed since the advent of the decimal numeral system.

In mathematics, exponentiation is an operation involving two numbers: the base and the exponent or power. Exponentiation is written as bn, where b is the base and n is the power; this is pronounced as "b (raised) to the n". When n is a positive integer, exponentiation corresponds to repeated multiplication of the base: that is, bn is the product of multiplying n bases:

In mathematics, the binary logarithm is the power to which the number 2 must be raised to obtain the value n. That is, for any real number x,

Toom–Cook, sometimes known as Toom-3, named after Andrei Toom, who introduced the new algorithm with its low complexity, and Stephen Cook, who cleaned the description of it, is a multiplication algorithm for large integers.

In computer science, arbitrary-precision arithmetic, also called bignum arithmetic, multiple-precision arithmetic, or sometimes infinite-precision arithmetic, indicates that calculations are performed on numbers whose digits of precision are limited only by the available memory of the host system. This contrasts with the faster fixed-precision arithmetic found in most arithmetic logic unit (ALU) hardware, which typically offers between 8 and 64 bits of precision.

In arithmetic, Euclidean division – or division with remainder – is the process of dividing one integer by another, in a way that produces an integer quotient and a natural number remainder strictly smaller than the absolute value of the divisor. A fundamental property is that the quotient and the remainder exist and are unique, under some conditions. Because of this uniqueness, Euclidean division is often considered without referring to any method of computation, and without explicitly computing the quotient and the remainder. The methods of computation are called integer division algorithms, the best known of which being long division.

In modular arithmetic computation, Montgomery modular multiplication, more commonly referred to as Montgomery multiplication, is a method for performing fast modular multiplication. It was introduced in 1985 by the American mathematician Peter L. Montgomery.

The Schönhage–Strassen algorithm is an asymptotically fast multiplication algorithm for large integers, published by Arnold Schönhage and Volker Strassen in 1971. It works by recursively applying fast Fourier transform (FFT) over the integers modulo 2n+1. The run-time bit complexity to multiply two n-digit numbers using the algorithm is in big O notation.

A division algorithm is an algorithm which, given two integers N and D, computes their quotient and/or remainder, the result of Euclidean division. Some are applied by hand, while others are employed by digital circuit designs and software.

In mathematics and computer algebra, factorization of polynomials or polynomial factorization expresses a polynomial with coefficients in a given field or in the integers as the product of irreducible factors with coefficients in the same domain. Polynomial factorization is one of the fundamental components of computer algebra systems.

The Karatsuba algorithm is a fast multiplication algorithm. It was discovered by Anatoly Karatsuba in 1960 and published in 1962. It is a divide-and-conquer algorithm that reduces the multiplication of two n-digit numbers to three multiplications of n/2-digit numbers and, by repeating this reduction, to at most single-digit multiplications. It is therefore asymptotically faster than the traditional algorithm, which performs single-digit products.

The following tables list the computational complexity of various algorithms for common mathematical operations.

Class Library for Numbers (CLN) is a free library for arbitrary precision arithmetic. It operates on signed integers, rational numbers, floating point numbers, complex numbers, modular numbers, and univariate polynomials. Its implementation programming language is C++.

The Chudnovsky algorithm is a fast method for calculating the digits of π, based on Ramanujan's π formulae. Published by the Chudnovsky brothers in 1988, it was used to calculate π to a billion decimal places.

In mathematics, the FEE method, or fast E-function evaluation method, is the method of fast summation of series of a special form. It was constructed in 1990 by Ekaterina Karatsuba and is so-named because it makes fast computations of the Siegel E-functions possible, in particular of .