Related Research Articles

Bayesian probability is an interpretation of the concept of probability, in which, instead of frequency or propensity of some phenomenon, probability is interpreted as reasonable expectation representing a state of knowledge or as quantification of a personal belief.

In statistics, an estimator is a rule for calculating an estimate of a given quantity based on observed data: thus the rule, the quantity of interest and its result are distinguished. For example, the sample mean is a commonly used estimator of the population mean.

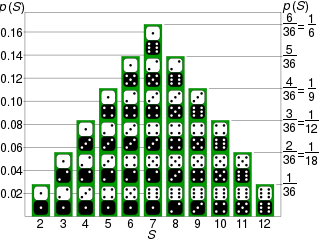

Probability is the branch of mathematics concerning numerical descriptions of how likely an event is to occur, or how likely it is that a proposition is true. The probability of an event is a number between 0 and 1, where, roughly speaking, 0 indicates impossibility of the event and 1 indicates certainty. The higher the probability of an event, the more likely it is that the event will occur. A simple example is the tossing of a fair (unbiased) coin. Since the coin is fair, the two outcomes are both equally probable; the probability of "heads" equals the probability of "tails"; and since no other outcomes are possible, the probability of either "heads" or "tails" is 1/2.

Statistics is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional, to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments.

Statistical inference is the process of using data analysis to infer properties of an underlying distribution of probability. Inferential statistical analysis infers properties of a population, for example by testing hypotheses and deriving estimates. It is assumed that the observed data set is sampled from a larger population.

A statistical hypothesis test is a method of statistical inference used to decide whether the data at hand sufficiently support a particular hypothesis. Hypothesis testing allows us to make probabilistic statements about population parameters.

Bayesian inference is a method of statistical inference in which Bayes' theorem is used to update the probability for a hypothesis as more evidence or information becomes available. Bayesian inference is an important technique in statistics, and especially in mathematical statistics. Bayesian updating is particularly important in the dynamic analysis of a sequence of data. Bayesian inference has found application in a wide range of activities, including science, engineering, philosophy, medicine, sport, and law. In the philosophy of decision theory, Bayesian inference is closely related to subjective probability, often called "Bayesian probability".

A mathematical proof is an inferential argument for a mathematical statement, showing that the stated assumptions logically guarantee the conclusion. The argument may use other previously established statements, such as theorems; but every proof can, in principle, be constructed using only certain basic or original assumptions known as axioms, along with the accepted rules of inference. Proofs are examples of exhaustive deductive reasoning which establish logical certainty, to be distinguished from empirical arguments or non-exhaustive inductive reasoning which establish "reasonable expectation". Presenting many cases in which the statement holds is not enough for a proof, which must demonstrate that the statement is true in all possible cases. A proposition that has not been proved but is believed to be true is known as a conjecture, or a hypothesis if frequently used as an assumption for further mathematical work.

In computer architecture, cache coherence is the uniformity of shared resource data that ends up stored in multiple local caches. When clients in a system maintain caches of a common memory resource, problems may arise with incoherent data, which is particularly the case with CPUs in a multiprocessing system.

Coherence, coherency, or coherent may refer to the following:

In philosophical epistemology, there are two types of coherentism: the coherence theory of truth; and the coherence theory of justification.

Decision theory is a branch of applied probability theory concerned with the theory of making decisions based on assigning probabilities to various factors and assigning numerical consequences to the outcome.

In statistics, consistency of procedures, such as computing confidence intervals or conducting hypothesis tests, is a desired property of their behaviour as the number of items in the data set to which they are applied increases indefinitely. In particular, consistency requires that the outcome of the procedure with unlimited data should identify the underlying truth. Use of the term in statistics derives from Sir Ronald Fisher in 1922.

Mathematical statistics is the application of probability theory, a branch of mathematics, to statistics, as opposed to techniques for collecting statistical data. Specific mathematical techniques which are used for this include mathematical analysis, linear algebra, stochastic analysis, differential equations, and measure theory.

This glossary of statistics and probability is a list of definitions of terms and concepts used in the mathematical sciences of statistics and probability, their sub-disciplines, and related fields. For additional related terms, see Glossary of mathematics and Glossary of experimental design.

In statistics, the frequency of an event is the number of times the observation occurred/recorded in an experiment or study. These frequencies are often depicted graphically or in tabular form.

In simple terms, risk is the possibility of something bad happening. Risk involves uncertainty about the effects/implications of an activity with respect to something that humans value, often focusing on negative, undesirable consequences. Many different definitions have been proposed. The international standard definition of risk for common understanding in different applications is “effect of uncertainty on objectives”.

In marketing, Bayesian inference allows for decision making and market research evaluation under uncertainty and with limited data.

Bayesian epistemology is a formal approach to various topics in epistemology that has its roots in Thomas Bayes' work in the field of probability theory. One advantage of its formal method in contrast to traditional epistemology is that its concepts and theorems can be defined with a high degree of precision. It is based on the idea that beliefs can be interpreted as subjective probabilities. As such, they are subject to the laws of probability theory, which act as the norms of rationality. These norms can be divided into static constraints, governing the rationality of beliefs at any moment, and dynamic constraints, governing how rational agents should change their beliefs upon receiving new evidence. The most characteristic Bayesian expression of these principles is found in the form of Dutch books, which illustrate irrationality in agents through a series of bets that lead to a loss for the agent no matter which of the probabilistic events occurs. Bayesians have applied these fundamental principles to various epistemological topics but Bayesianism does not cover all topics of traditional epistemology. The problem of confirmation in the philosophy of science, for example, can be approached through the Bayesian principle of conditionalization by holding that a piece of evidence confirms a theory if it raises the likelihood that this theory is true. Various proposals have been made to define the concept of coherence in terms of probability, usually in the sense that two propositions cohere if the probability of their conjunction is higher than if they were neutrally related to each other. The Bayesian approach has also been fruitful in the field of social epistemology, for example, concerning the problem of testimony or the problem of group belief. Bayesianism still faces various theoretical objections that have not been fully solved.

References

- 1 2 3 Dodge, Y. (2003) The Oxford Dictionary of Statistical Terms, OUP. ISBN 0-19-920613-9.

- 1 2 Everitt, B.S. (2002) The Cambridge Dictionary of Statistics, CUP. ISBN 0-521-81099-X.