Machine translation, sometimes referred to by the abbreviation MT, is a sub-field of computational linguistics that investigates the use of software to translate text or speech from one language to another.

Natural language processing (NLP) is an interdisciplinary subfield of linguistics, computer science, and artificial intelligence concerned with the interactions between computers and human language, in particular how to program computers to process and analyze large amounts of natural language data. The goal is a computer capable of "understanding" the contents of documents, including the contextual nuances of the language within them. The technology can then accurately extract information and insights contained in the documents as well as categorize and organize the documents themselves.

Corpus linguistics is the study of a language as that language is expressed in its text corpus, its body of "real world" text. Corpus linguistics proposes that a reliable analysis of a language is more feasible with corpora collected in the field—the natural context ("realia") of that language—with minimal experimental interference.

In linguistics, a corpus or text corpus is a language resource consisting of a large and structured set of texts. In corpus linguistics, they are used to do statistical analysis and hypothesis testing, checking occurrences or validating linguistic rules within a specific language territory.

Word-sense disambiguation (WSD) is the process of identifying which sense of a word is meant in a sentence or other segment of context. In human language processing and cognition, it is usually subconscious/automatic but can often come to conscious attention when ambiguity impairs clarity of communication, given the pervasive polysemy in natural language. In computational linguistics, it is an open problem that affects other computer-related writing, such as discourse, improving relevance of search engines, anaphora resolution, coherence, and inference.

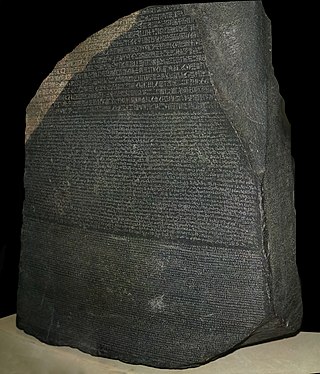

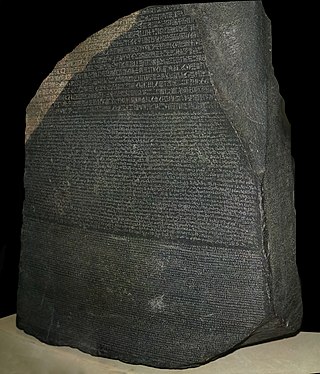

A parallel text is a text placed alongside its translation or translations. Parallel text alignment is the identification of the corresponding sentences in both halves of the parallel text. The Loeb Classical Library and the Clay Sanskrit Library are two examples of dual-language series of texts. Reference Bibles may contain the original languages and a translation, or several translations by themselves, for ease of comparison and study; Origen's Hexapla placed six versions of the Old Testament side by side. A famous example is the Rosetta Stone, whose discovery allowed the Ancient Egyptian language to begin being deciphered.

Machine translation can use a method based on dictionary entries, which means that the words will be translated as a dictionary does – word by word, usually without much correlation of meaning between them. Dictionary lookups may be done with or without morphological analysis or lemmatisation. While this approach to machine translation is probably the least sophisticated, dictionary-based machine translation is ideally suitable for the translation of long lists of phrases on the subsentential level, e.g. inventories or simple catalogs of products and services.

An intermediate representation (IR) is the data structure or code used internally by a compiler or virtual machine to represent source code. An IR is designed to be conducive to further processing, such as optimization and translation. A "good" IR must be accurate – capable of representing the source code without loss of information – and independent of any particular source or target language. An IR may take one of several forms: an in-memory data structure, or a special tuple- or stack-based code readable by the program. In the latter case it is also called an intermediate language.

A pivot language, sometimes also called a bridge language, is an artificial or natural language used as an intermediary language for translation between many different languages – to translate between any pair of languages A and B, one translates A to the pivot language P, then from P to B. Using a pivot language avoids the combinatorial explosion of having translators across every combination of the supported languages, as the number of combinations of language is linear, rather than quadratic – one need only know the language A and the pivot language P, rather than needing a different translator for every possible combination of A and B.

Interlingual machine translation is one of the classic approaches to machine translation. In this approach, the source language, i.e. the text to be translated is transformed into an interlingua, i.e., an abstract language-independent representation. The target language is then generated from the interlingua. Within the rule-based machine translation paradigm, the interlingual approach is an alternative to the direct approach and the transfer approach.

Statistical machine translation (SMT) is a machine translation paradigm where translations are generated on the basis of statistical models whose parameters are derived from the analysis of bilingual text corpora. The statistical approach contrasts with the rule-based approaches to machine translation as well as with example-based machine translation, and has more recently been superseded by neural machine translation in many applications.

The British National Corpus (BNC) is a 100-million-word text corpus of samples of written and spoken English from a wide range of sources. The corpus covers British English of the late 20th century from a wide variety of genres, with the intention that it be a representative sample of spoken and written British English of that time. It is used in corpus linguistic for analysis of corpora

Transfer-based machine translation is a type of machine translation (MT). It is currently one of the most widely used methods of machine translation. In contrast to the simpler direct model of MT, transfer MT breaks translation into three steps: analysis of the source language text to determine its grammatical structure, transfer of the resulting structure to a structure suitable for generating text in the target language, and finally generation of this text. Transfer-based MT systems are thus capable of using knowledge of the source and target languages.

Contrastive linguistics is a practice-oriented linguistic approach that seeks to describe the differences and similarities between a pair of languages.

Rule-based machine translation is machine translation systems based on linguistic information about source and target languages basically retrieved from dictionaries and grammars covering the main semantic, morphological, and syntactic regularities of each language respectively. Having input sentences, an RBMT system generates them to output sentences on the basis of morphological, syntactic, and semantic analysis of both the source and the target languages involved in a concrete translation task.

Speech translation is the process by which conversational spoken phrases are instantly translated and spoken aloud in a second language. This differs from phrase translation, which is where the system only translates a fixed and finite set of phrases that have been manually entered into the system. Speech translation technology enables speakers of different languages to communicate. It thus is of tremendous value for humankind in terms of science, cross-cultural exchange and global business.

MedSLT is a medium-ranged open source spoken language translator developed by the University of Geneva. It is funded by the Swiss National Science Foundation. The system has been designed for the medical domain. It currently covers the doctor-patient diagnosis dialogues for the domains of headache, chest and abdominal pain in English, French, Japanese, Spanish, Catalan and Arabic. The vocabulary used ranges from 350 to 1000 words depending on the domain and language pair.

Example-based machine translation (EBMT) is a method of machine translation often characterized by its use of a bilingual corpus with parallel texts as its main knowledge base at run-time. It is essentially a translation by analogy and can be viewed as an implementation of a case-based reasoning approach to machine learning.

memoQ is a proprietary computer-assisted translation software suite which runs on Microsoft Windows operating systems. It is developed by the Hungarian software company memoQ Fordítástechnológiai Zrt., formerly Kilgray, a provider of translation management software established in 2004 and cited as one of the fastest-growing companies in the translation technology sector in 2012 and 2013. memoQ provides translation memory, terminology, machine translation integration and reference information management in desktop, client/server and web application environments.

Sketch Engine is a corpus manager and text analysis software developed by Lexical Computing CZ s.r.o. since 2003. Its purpose is to enable people studying language behaviour to search large text collections according to complex and linguistically motivated queries. Sketch Engine gained its name after one of the key features, word sketches: one-page, automatic, corpus-derived summaries of a word's grammatical and collocational behaviour. Currently, it supports and provides corpora in 90+ languages.