Related Research Articles

A central processing unit (CPU), also called a central processor, main processor or just processor, is the electronic circuitry within a computer that executes instructions that make up a computer program. The CPU performs basic arithmetic, logic, controlling, and input/output (I/O) operations specified by the instructions in the program. This contrasts with external components such as main memory and I/O circuitry, and specialized processors such as graphics processing units (GPUs).

Seymour Roger Cray was an American electrical engineer and supercomputer architect who designed a series of computers that were the fastest in the world for decades, and founded Cray Research, which built many of these machines. Called "the father of supercomputing", Cray has been credited with creating the supercomputer industry. Joel S. Birnbaum, then chief technology officer of Hewlett-Packard, said of him: "It seems impossible to exaggerate the effect he had on the industry; many of the things that high performance computers now do routinely were at the farthest edge of credibility when Seymour envisioned them." Larry Smarr, then director of the National Center for Supercomputing Applications at the University of Illinois, said that Cray is "the Thomas Edison of the supercomputing industry."

A supercomputer is a computer with a high level of performance as compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2017, there are supercomputers which can perform over 10^17 FLOPS (a hundred quadrillion FLOPS, 100 petaFLOPS or 100 PFLOPS). Since November 2017, all of the world's fastest 500 supercomputers run Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers.
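The unit arithmetic in that summary (a hundred quadrillion FLOPS equals 100 petaFLOPS) can be checked with a small sketch. The helper name `format_flops` and the prefix table are illustrative assumptions, not part of any standard library.

```python
# Decimal (SI) prefixes applied to FLOPS, largest first.
PREFIXES = [
    ("exa", 10**18),
    ("peta", 10**15),
    ("tera", 10**12),
    ("giga", 10**9),
    ("mega", 10**6),
]

def format_flops(flops: int) -> str:
    """Express a FLOPS figure using the largest prefix that fits (hypothetical helper)."""
    for name, scale in PREFIXES:
        if flops >= scale:
            return f"{flops / scale:g} {name}FLOPS"
    return f"{flops} FLOPS"

print(format_flops(10**17))  # 100 petaFLOPS
```

The same helper renders the three-megaFLOPS figure quoted for the CDC 6600 as `3 megaFLOPS`.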

The Cray-1 was a supercomputer designed, manufactured and marketed by Cray Research. Announced in 1975, the first Cray-1 system was installed at Los Alamos National Laboratory in 1976. Eventually, over 100 Cray-1s were sold, making it one of the most successful supercomputers in history. It is perhaps best known for its unique shape, a relatively small C-shaped cabinet with a ring of benches around the outside covering the power supplies and the cooling system.

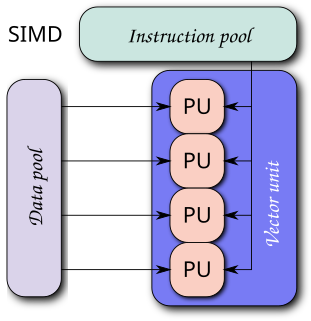

Single instruction, multiple data (SIMD) is a class of parallel computers in Flynn's taxonomy. It describes computers with multiple processing elements that perform the same operation on multiple data points simultaneously. Such machines exploit data-level parallelism, but not concurrency: there are simultaneous (parallel) computations, but only a single process (instruction) at a given moment. SIMD is particularly applicable to common tasks such as adjusting the contrast in a digital image or adjusting the volume of digital audio. Most modern CPU designs include SIMD instructions to improve the performance of multimedia workloads. SIMD is not to be confused with SIMT, which utilizes threads.
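The audio-volume example can be modeled in a few lines. This is only a conceptual sketch in plain Python: it imitates the "one operation, many data points" pattern by processing samples in fixed-width groups, and it does not use hardware SIMD instructions. The lane count `LANES` and the function name `simd_scale` are assumptions made for illustration.

```python
from typing import List

LANES = 4  # assumed register width: four samples per simulated instruction

def simd_scale(samples: List[float], gain: float) -> List[float]:
    """Scale audio samples, one LANES-wide group per simulated instruction."""
    out: List[float] = []
    for start in range(0, len(samples), LANES):
        group = samples[start:start + LANES]  # load one "register" of samples
        out.extend(x * gain for x in group)   # same multiply applied to every lane
    return out

print(simd_scale([1.0, 2.0, 3.0, 4.0, 5.0], 0.5))
```

Every lane receives the same multiply; only the data differs, which is the property that distinguishes SIMD from running independent instruction streams.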

In computing, a vector processor or array processor is a central processing unit (CPU) that implements an instruction set containing instructions that operate on one-dimensional arrays of data called vectors, compared to the scalar processors, whose instructions operate on single data items. Vector processors can greatly improve performance on certain workloads, notably numerical simulation and similar tasks. Vector machines appeared in the early 1970s and dominated supercomputer design through the 1970s into the 1990s, notably the various Cray platforms. The rapid fall in the price-to-performance ratio of conventional microprocessor designs led to the vector supercomputer's demise in the later 1990s.
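The contrast between scalar and vector instructions can be sketched by counting how many add instructions each style would issue for the same array addition. This is a simplified model, not a description of any particular machine: it counts only the adds (ignoring loads, stores, and loop overhead), and the maximum vector length `VLEN = 64` is an illustrative assumption.

```python
from typing import List, Tuple

VLEN = 64  # assumed maximum number of elements handled by one vector instruction

def scalar_add(a: List[float], b: List[float]) -> Tuple[List[float], int]:
    """Add element by element; each element costs one add instruction."""
    result: List[float] = []
    issued = 0
    for x, y in zip(a, b):
        result.append(x + y)
        issued += 1
    return result, issued

def vector_add(a: List[float], b: List[float]) -> Tuple[List[float], int]:
    """Add in strips of up to VLEN elements; each strip costs one instruction."""
    result: List[float] = []
    issued = 0
    for start in range(0, len(a), VLEN):
        strip_a = a[start:start + VLEN]
        strip_b = b[start:start + VLEN]
        result.extend(x + y for x, y in zip(strip_a, strip_b))
        issued += 1
    return result, issued

a = [float(i) for i in range(200)]
b = [1.0] * 200
_, scalar_issued = scalar_add(a, b)
_, vector_issued = vector_add(a, b)
print(scalar_issued, vector_issued)  # 200 scalar adds versus 4 vector adds
```

Splitting a long array into register-sized strips, as `vector_add` does, is commonly called strip-mining; the results are identical to the scalar loop, but far fewer instructions are issued.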

The CDC 6600 was the flagship of the 6000 series of mainframe computer systems manufactured by Control Data Corporation. Generally considered to be the first successful supercomputer, it outperformed the industry's prior recordholder, the IBM 7030 Stretch, by a factor of three. With performance of up to three megaFLOPS, the CDC 6600 was the world's fastest computer from 1964 to 1969, when it relinquished that status to its successor, the CDC 7600.

Multiprocessing is the use of two or more central processing units (CPUs) within a single computer system. The term also refers to the ability of a system to support more than one processor or the ability to allocate tasks between them. There are many variations on this basic theme, and the definition of multiprocessing can vary with context, mostly as a function of how CPUs are defined.

The Advanced Scientific Computer (ASC) is a supercomputer designed and manufactured by Texas Instruments (TI) between 1966 and 1973. The ASC's central processing unit (CPU) supported vector processing, a performance-enhancing technique that was key to its high performance. The ASC and the Control Data Corporation STAR-100 supercomputer were the first computers to feature vector processing. However, this technique's potential was not fully realized by either the ASC or the STAR-100 due to an insufficient understanding of the technique; it was the Cray Research Cray-1 supercomputer, announced in 1975, that would fully realize and popularize vector processing. The more successful implementation of vector processing in the Cray-1 would mark the ASC and the STAR-100 as first-generation vector processors, with the Cray-1 belonging to the second generation.

Cray Inc., a subsidiary of Hewlett Packard Enterprise, is an American supercomputer manufacturer headquartered in Seattle, Washington. It also manufactures systems for data storage and analytics. Several Cray supercomputer systems are listed in the TOP500, which ranks the most powerful supercomputers in the world.

The ETA10 is a line of vector supercomputers designed, manufactured, and marketed by ETA Systems, a spin-off division of Control Data Corporation (CDC). The ETA10 was announced in 1986, with the first deliveries made in early 1987. The system was an evolution of the CDC Cyber 205, which can trace its origins back to the CDC STAR-100.

The Cray-3 was a vector supercomputer, Seymour Cray's designated successor to the Cray-2. The system was one of the first major applications of gallium arsenide (GaAs) semiconductors in computing, using hundreds of custom-built ICs packed into a 1 cubic foot (0.028 m³) CPU. The design goal was performance around 16 GFLOPS, about 12 times that of the Cray-2.

In computer engineering, microarchitecture, also called computer organization and sometimes abbreviated as µarch or uarch, is the way a given instruction set architecture (ISA) is implemented in a particular processor. A given ISA may be implemented with different microarchitectures; implementations may vary due to different goals of a given design or due to shifts in technology.

NEC SX describes a series of vector supercomputers designed, manufactured, and marketed by NEC. This computer series is notable for providing the first computer to exceed 1 gigaflop, as well as the fastest supercomputer in the world in 1992–1993 and again in 2002–2004. The current model, as of 2018, is the SX-Aurora TSUBASA.

In computing, hardware acceleration is the use of computer hardware specially made to perform some functions more efficiently than is possible in software running on a general-purpose central processing unit (CPU). Any transformation of data or routine that can be computed can be calculated purely in software running on a generic CPU, purely in custom-made hardware, or in some mix of both. An operation can be computed faster in application-specific hardware designed or programmed to compute the operation than when it is specified in software and performed on a general-purpose computer processor. Each approach has advantages and disadvantages. The implementation of computing tasks in hardware to decrease latency and increase throughput is known as hardware acceleration.

Stream processing is a computer programming paradigm, equivalent to dataflow programming, event stream processing, and reactive programming, that allows some applications to more easily exploit a limited form of parallel processing. Such applications can use multiple computational units, such as the floating point unit on a graphics processing unit or field-programmable gate arrays (FPGAs), without explicitly managing allocation, synchronization, or communication among those units.

The Goodyear Massively Parallel Processor (MPP) was a massively parallel processing supercomputer built by Goodyear Aerospace for the NASA Goddard Space Flight Center. It was designed to deliver enormous computational power at lower cost than other existing supercomputer architectures, by using thousands of simple processing elements, rather than one or a few highly complex CPUs. Development of the MPP began circa 1979; it was delivered in May 1983, and was in general use from 1985 until 1991.

Adapteva is a fabless semiconductor company focusing on low-power many-core microprocessor design. It was the second company to announce a design with 1,000 specialized processing cores on a single integrated circuit.

The main credit for the supercomputer goes to Seymour Cray, the designer of the CDC 6600. The history of supercomputing goes back to the early 1920s in the United States, with the IBM tabulators at Columbia University, and continues with a series of computers at Control Data Corporation (CDC) that Seymour Cray designed to use innovative designs and parallelism to achieve superior computational peak performance. The CDC 6600, released in 1964, is generally considered the first supercomputer. However, some earlier computers were considered supercomputers for their day, such as the 1954 IBM NORC, the 1960 UNIVAC LARC, and the IBM 7030 Stretch and the Atlas, both in 1962.

Approaches to supercomputer architecture have taken dramatic turns since the earliest systems were introduced in the 1960s. Early supercomputer architectures pioneered by Seymour Cray relied on compact innovative designs and local parallelism to achieve superior computational peak performance. However, in time the demand for increased computational power ushered in the age of massively parallel systems.
