Quadratic programming (QP) is the process of solving certain mathematical optimization problems involving quadratic functions. Specifically, one seeks to optimize a multivariate quadratic function subject to linear constraints on the variables. Quadratic programming is a type of nonlinear programming.
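
For reference, one common way to write such a problem is sketched here. The symbols $Q$, $c$, $A$, and $b$ are notation introduced for illustration, and other equivalent conventions (equality constraints, maximization) are also in use.

$$
\min_{x \in \mathbb{R}^n} \; \tfrac{1}{2}\, x^{\mathsf T} Q x + c^{\mathsf T} x \quad \text{subject to} \quad A x \le b
$$

Here $Q$ is a symmetric $n \times n$ matrix. When $Q$ is positive semidefinite the problem is convex.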

Linear programming (LP), also called linear optimization, is a method to achieve the best outcome in a mathematical model whose requirements and objective are represented by linear relationships. Linear programming is a special case of mathematical programming.
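
A linear program is often written in the following canonical form. The symbols $A$, $b$, and $c$ are illustrative notation, and this is only one of several equivalent conventions.

$$
\max_{x \in \mathbb{R}^n} \; c^{\mathsf T} x \quad \text{subject to} \quad A x \le b, \quad x \ge 0
$$

Both the objective $c^{\mathsf T} x$ and every constraint are linear in $x$, which is the defining feature of the problem class.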

Mathematical optimization or mathematical programming is the selection of a best element, with regard to some criterion, from some set of available alternatives. It is generally divided into two subfields: discrete optimization and continuous optimization. Optimization problems arise in all quantitative disciplines from computer science and engineering to operations research and economics, and the development of solution methods has been of interest in mathematics for centuries.

In mathematical optimization, Dantzig's simplex algorithm is a popular algorithm for linear programming.

The curse of dimensionality refers to various phenomena that arise when analyzing and organizing data in high-dimensional spaces that do not occur in low-dimensional settings such as the three-dimensional physical space of everyday experience. The expression was coined by Richard E. Bellman when considering problems in dynamic programming. The curse generally refers to issues that arise when the number of datapoints is small relative to the intrinsic dimension of the data.
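
One frequently cited high-dimensional phenomenon can be checked numerically: the ball inscribed in a unit hypercube occupies a vanishing share of the cube's volume as the dimension grows. The sketch below is only an illustration of that single effect, and the function name `ball_to_cube_ratio` is hypothetical.

```python
import math


def ball_to_cube_ratio(d):
    """Volume of the radius-1/2 ball divided by the volume of the unit cube [0, 1]^d.

    Uses the closed form V_d(r) = pi^(d/2) * r^d / Gamma(d/2 + 1); the cube has volume 1.
    """
    r = 0.5
    return math.pi ** (d / 2) * r ** d / math.gamma(d / 2 + 1)


if __name__ == "__main__":
    for d in (2, 3, 5, 10, 20):
        print(d, ball_to_cube_ratio(d))
```

In two dimensions the ratio is $\pi/4$, and in three it is $\pi/6$. By ten dimensions it has already dropped below one percent, so almost all of the cube's volume lies in its corners.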

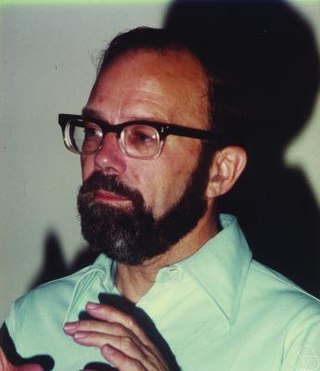

Victor LaRue Klee, Jr. was a mathematician specialising in convex sets, functional analysis, analysis of algorithms, optimization, and combinatorics. He spent almost his entire career at the University of Washington in Seattle.

In mathematical optimization, Bland's rule is an algorithmic refinement of the simplex method for linear optimization.
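
Bland's rule is usually stated as two lowest-index choices: enter the eligible column with the smallest index, and break ties in the minimum-ratio test by the smallest basic-variable index. The sketch below shows only those two selections for a maximization tableau. The function names and the argument layout are assumptions made for illustration, not part of any particular solver.

```python
from fractions import Fraction


def bland_entering(reduced_costs):
    """Lowest-index column with a positive reduced cost (maximization); None if optimal."""
    for j, r in enumerate(reduced_costs):
        if r > 0:
            return j
    return None


def bland_leaving(column, rhs, basis):
    """Row chosen by the minimum-ratio test on the entering column.

    Ties in the ratio are broken by the lowest index of the basic variable
    in that row (basis[i]). Returns None if no entry is positive (unbounded).
    """
    best = None
    for i, (a, b) in enumerate(zip(column, rhs)):
        if a > 0:
            key = (Fraction(b) / Fraction(a), basis[i])
            if best is None or key < best[0]:
                best = (key, i)
    return None if best is None else best[1]
```

For example, with reduced costs `[0, 3, 5]` the rule picks column 1 even though column 2 has the larger coefficient. This lowest-index discipline is what rules out cycling on degenerate problems.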

In theoretical computer science, smoothed analysis is a way of measuring the complexity of an algorithm. Since its introduction in 2001, smoothed analysis has been used as a basis for considerable research on problems in areas including mathematical programming, numerical analysis, machine learning, and data mining. It can give a more realistic picture of an algorithm's practical performance than analyses based on worst-case or average-case scenarios.
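
As a rough sketch of the idea, the smoothed complexity of an algorithm with running time $T$ can be written as a worst case over inputs of an expectation over small random perturbations. The notation here ($\bar{x}$ for the unperturbed input of size $n$, $g$ for a Gaussian noise vector, $\sigma$ for the perturbation scale) is illustrative, and normalizations differ between treatments.

$$
C_{\text{smooth}}(n, \sigma) \;=\; \max_{\bar{x}} \; \mathbb{E}_{g}\!\left[\, T(\bar{x} + \sigma g) \,\right]
$$

As $\sigma \to 0$ this approaches worst-case analysis, while a large $\sigma$ makes the input essentially random, which is closer to average-case analysis.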

In operations research, the Big M method is a method of solving linear programming problems using the simplex algorithm. The Big M method extends the simplex algorithm to problems that contain "greater-than" constraints. It does so by associating the constraints with large negative constants that would not be part of any optimal solution, if one exists.
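
A minimal sketch of the construction, for a maximization problem with a single "greater-than" constraint $a^{\mathsf T} x \ge b$ with $b \ge 0$: a surplus variable $s$ and an artificial variable $t$ turn the constraint into an equation, and $t$ is penalized in the objective with a large constant $M$. The symbols $s$, $t$, and $M$ are notation introduced here for illustration.

$$
\max \; c^{\mathsf T} x - M t \quad \text{subject to} \quad a^{\mathsf T} x - s + t = b, \quad x, s, t \ge 0
$$

Setting $x = 0$, $s = 0$, $t = b$ gives an initial basic feasible solution for the simplex algorithm. Because $M$ is large, any optimal solution of the modified problem has $t = 0$ whenever the original problem is feasible.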

MOSEK is a software package for the solution of linear, mixed-integer linear, quadratic, mixed-integer quadratic, quadratically constrained, conic and convex nonlinear mathematical optimization problems. The solver is widely applicable and is commonly used for solving problems in areas such as engineering, finance and computer science.

In mathematical optimization, the criss-cross algorithm is any of a family of algorithms for linear programming. Variants of the criss-cross algorithm also solve more general problems with linear inequality constraints and nonlinear objective functions; there are criss-cross algorithms for linear-fractional programming problems, quadratic-programming problems, and linear complementarity problems.

The Klee–Minty cube or Klee–Minty polytope is a unit hypercube of variable dimension whose corners have been perturbed. Klee and Minty demonstrated that George Dantzig's simplex algorithm has poor worst-case performance when initialized at one corner of their "squashed cube". On the three-dimensional version, the simplex algorithm and the criss-cross algorithm visit all 8 corners in the worst case.
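
The worst-case behaviour can be reproduced with a small experiment. The sketch below builds one commonly used formulation of the Klee–Minty problem (maximize $\sum_j 2^{n-j} x_j$ subject to $\sum_{j<i} 2^{i-j+1} x_j + x_i \le 5^i$, $x \ge 0$) and runs a textbook tableau simplex with Dantzig's largest-coefficient pivoting rule, starting from the origin. The formulation, the function names, and the dense exact-arithmetic tableau are assumptions chosen for illustration, not a production solver.

```python
from fractions import Fraction


def klee_minty(n):
    """Return (A, b, c) for: maximize c.x subject to A x <= b, x >= 0."""
    A = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i):
            A[i][j] = 2 ** (i - j + 1)
        A[i][i] = 1
    b = [5 ** (i + 1) for i in range(n)]
    c = [2 ** (n - 1 - j) for j in range(n)]
    return A, b, c


def simplex_dantzig(A, b, c):
    """Tableau simplex from the slack basis; returns (optimal value, pivot count)."""
    m, n = len(A), len(c)
    # Each row is [A | I | b]; z holds reduced costs and, in its last slot, -objective.
    T = [[Fraction(v) for v in A[i]]
         + [Fraction(int(i == k)) for k in range(m)]
         + [Fraction(b[i])] for i in range(m)]
    z = [Fraction(v) for v in c] + [Fraction(0)] * (m + 1)
    pivots = 0
    while True:
        # Dantzig's rule: enter the column with the largest reduced cost.
        col = max(range(n + m), key=lambda j: z[j])
        if z[col] <= 0:
            break  # no improving column left, current vertex is optimal
        rows = [i for i in range(m) if T[i][col] > 0]
        if not rows:
            raise ValueError("problem is unbounded")
        # Minimum-ratio test picks the leaving row.
        row = min(rows, key=lambda i: T[i][-1] / T[i][col])
        p = T[row][col]
        T[row] = [v / p for v in T[row]]
        for i in range(m):
            if i != row and T[i][col] != 0:
                f = T[i][col]
                T[i] = [a - f * r for a, r in zip(T[i], T[row])]
        f = z[col]
        z = [a - f * r for a, r in zip(z, T[row])]
        pivots += 1
    return -z[-1], pivots


if __name__ == "__main__":
    for n in range(1, 4):
        print(n, simplex_dantzig(*klee_minty(n)))
```

For $n = 2$ and $n = 3$ the run takes 3 and 7 pivots respectively, that is $2^n - 1$, so every corner of the perturbed cube is visited before the optimum of value $5^n$ is reached.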

In the study of algorithms, an LP-type problem is an optimization problem that shares certain properties with low-dimensional linear programs and that may be solved by similar algorithms. LP-type problems include many important optimization problems that are not themselves linear programs, such as the problem of finding the smallest circle containing a given set of planar points. They may be solved by a combination of randomized algorithms in an amount of time that is linear in the number of elements defining the problem, and subexponential in the dimension of the problem.
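
The smallest-enclosing-circle example can be sketched with a randomized incremental algorithm in the style of Welzl's method, which runs in expected linear time for points in the plane. The code below is a minimal illustration using floating-point arithmetic and a small tolerance. The function names, the `seed` parameter, and the tolerance `eps` are assumptions made here, and degenerate inputs are not handled robustly.

```python
import math
import random


def circle_from(a, b):
    """Circle having segment ab as a diameter, as (cx, cy, radius)."""
    return ((a[0] + b[0]) / 2, (a[1] + b[1]) / 2, math.dist(a, b) / 2)


def circumcircle(a, b, c):
    """Circle through three non-collinear points, as (cx, cy, radius)."""
    d = 2 * (a[0] * (b[1] - c[1]) + b[0] * (c[1] - a[1]) + c[0] * (a[1] - b[1]))
    sa, sb, sc = (p[0] ** 2 + p[1] ** 2 for p in (a, b, c))
    ux = (sa * (b[1] - c[1]) + sb * (c[1] - a[1]) + sc * (a[1] - b[1])) / d
    uy = (sa * (c[0] - b[0]) + sb * (a[0] - c[0]) + sc * (b[0] - a[0])) / d
    return (ux, uy, math.dist((ux, uy), a))


def inside(circle, p, eps=1e-9):
    """True if p lies in the circle, up to the tolerance eps."""
    return math.dist((circle[0], circle[1]), p) <= circle[2] + eps


def smallest_circle(points, seed=0):
    """Smallest circle enclosing all points, via randomized incremental construction."""
    pts = list(points)
    random.Random(seed).shuffle(pts)  # random order gives expected linear time
    c = None
    for i, p in enumerate(pts):
        if c is None or not inside(c, p):
            c = (p[0], p[1], 0.0)  # p must lie on the boundary
            for j, q in enumerate(pts[:i]):
                if not inside(c, q):
                    c = circle_from(p, q)  # p and q on the boundary
                    for r in pts[:j]:
                        if not inside(c, r):
                            c = circumcircle(p, q, r)  # p, q, r on the boundary
    return c
```

For the four corners of the unit square plus its centre, the result is the circle centred at $(0.5, 0.5)$ with radius $\sqrt{0.5}$. The nested loops reflect the LP-type structure: the optimum is always determined by at most three of the input points.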

In mathematical optimization, the network simplex algorithm is a graph-theoretic specialization of the simplex algorithm. The algorithm is usually formulated in terms of a minimum-cost flow problem. The network simplex method works very well in practice, typically running 200 to 300 times faster than the simplex method applied to a general linear program of the same dimensions.

The Tucker Prize for outstanding theses in the area of optimization is sponsored by the Mathematical Optimization Society (MOS). Up to three finalists are presented at each (triennial) International Symposium of the MOS. The winner will receive an award of $1000 and a certificate. The Albert W. Tucker Prize was approved by the Society in 1985, and was first awarded at the Thirteenth International Symposium on Mathematical Programming in 1988.

In mathematical optimization, Zadeh's rule is an algorithmic refinement of the simplex method for linear optimization.
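
Zadeh's rule is also known as the least-entered rule: among the columns that can improve the objective, it picks the one that has entered the basis least often so far. The sketch below shows only that selection step for a maximization tableau. The function name, the `entered_count` bookkeeping list, and the lowest-index tie-break are assumptions made for illustration.

```python
def zadeh_entering(reduced_costs, entered_count):
    """Improving column that has entered the basis least often; None if optimal.

    reduced_costs[j] > 0 marks column j as improving (maximization).
    entered_count[j] is how many times column j has entered so far.
    Ties are broken by the lowest index (an assumption of this sketch).
    """
    candidates = [j for j, r in enumerate(reduced_costs) if r > 0]
    if not candidates:
        return None
    return min(candidates, key=lambda j: entered_count[j])
```

A caller would increment `entered_count[j]` after each pivot on column `j`, so the history of the whole run influences later choices.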

Oliver Friedmann is a German computer scientist and mathematician known for his work on parity games and the simplex algorithm.

Richard W. Cottle is an American mathematician. He was a professor of Management Science and Engineering at Stanford University, starting as an Acting Assistant Professor of Industrial Engineering in 1966 and retiring in 2005. He is notable for his work on mathematical programming/optimization, “Nonlinear programs”, the proposal of the linear complementarity problem, and the general field of operations research.

Tamás Terlaky is a Hungarian-Canadian-American professor of Industrial and Systems Engineering at Lehigh University. He is especially well known for his work on criss-cross algorithms, interior-point methods, Klee-Minty examples for path following algorithms, and optimization.