
A delay-insensitive circuit is a type of asynchronous circuit that performs a digital logic operation, often within a computing processor chip. Instead of using clock signals or other global control signals, the sequencing of computation in a delay-insensitive circuit is determined by the data flow.

An asynchronous circuit, or self-timed circuit, is a sequential digital logic circuit that is not governed by a clock circuit or global clock signal. Instead, it often uses signals that indicate completion of instructions and operations, specified by simple data transfer protocols. This type of circuit is contrasted with synchronous circuits, in which changes to the signal values in the circuit are triggered by repetitive pulses called a clock signal. Most digital devices today use synchronous circuits. However, asynchronous circuits have the potential to be faster, and may also offer lower power consumption, lower electromagnetic interference, and better modularity in large systems. Asynchronous circuits are an active area of research in digital logic design.

In electronics and especially synchronous digital circuits, a clock signal is a particular type of signal that oscillates between a high and a low state and is used like a metronome to coordinate actions of digital circuits.


Data flows from one circuit element to another using "handshakes": sequences of voltage transitions that indicate readiness to receive data or readiness to offer data. Typically, the inputs of a circuit module indicate their readiness to receive, and the connected output "acknowledges" this by sending data (encoded in such a way that the receiver can detect its validity directly [1]). Once that data has been safely received, the receiver explicitly acknowledges it, allowing the sender to remove the data. This completes the handshake and allows another datum to be transmitted.
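The handshake sequence described above can be sketched in software. The following is a minimal illustration, not a circuit description: it assumes a dual-rail encoding (one common encoding in which validity is detectable from the data wires themselves, though the text does not name a specific one) and a four-phase, return-to-zero protocol. The names `encode`, `is_valid`, `decode`, and `transfer` are hypothetical and chosen only for this example.

```python
SPACER = (0, 0)  # neither rail raised: no datum present


def encode(bit):
    """Dual-rail encode one bit as (true_rail, false_rail) (assumed encoding)."""
    return (1, 0) if bit else (0, 1)


def is_valid(rails):
    """A datum is present when exactly one of the two rails is high."""
    return rails in ((1, 0), (0, 1))


def decode(rails):
    """Recover the bit; the receiver needs no clock to know the data is valid."""
    if not is_valid(rails):
        raise ValueError("no valid datum on the rails")
    return rails[0]


def transfer(bits):
    """Send bits one at a time, logging each phase of the handshake."""
    log, received = [], []
    rails, ack = SPACER, 0           # idle: receiver is ready, no data offered
    for bit in bits:
        rails = encode(bit)          # sender offers data
        log.append(("data", rails, ack))
        received.append(decode(rails))
        ack = 1                      # receiver acknowledges receipt
        log.append(("ack", rails, ack))
        rails = SPACER               # sender removes the data
        log.append(("remove", rails, ack))
        ack = 0                      # receiver signals readiness again
        log.append(("ready", rails, ack))
    return received, log


if __name__ == "__main__":
    received, log = transfer([1, 0, 1])
    print(received)
    for phase in log[:4]:
        print(phase)
```

Each datum takes four phases in this sketch, and no step depends on how long the previous one took, only on its having happened.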

In a delay-insensitive circuit, there is therefore no need to provide a clock signal to determine a starting time for a computation. Instead, the arrival of data at the input of a sub-circuit triggers the computation to start. Consequently, the next computation can be initiated as soon as the result of the previous computation is complete.

The main advantage of such circuits is their ability to optimize the processing of activities that can take arbitrary periods of time depending on the data or the requested function. Examples of processes with a variable completion time are mathematical division and the retrieval of data that may or may not be in a cache.
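A rough arithmetic sketch can illustrate the point. The model below is deliberately crude and rests on stated assumptions: the latencies are hypothetical values in arbitrary time units, the clocked design is assumed to size every step for the slowest case, and handshake overhead is ignored. Real synchronous designs usually handle slow operations with multi-cycle stalls, so the gap shown here is an upper bound on the idea rather than a measurement.

```python
def clocked_total(latencies):
    """Total time if every step takes one period sized for the slowest case."""
    return len(latencies) * max(latencies)


def self_timed_total(latencies):
    """Total time if each step completes as soon as its own work is done."""
    return sum(latencies)


if __name__ == "__main__":
    # Hypothetical latencies: three fast lookups and one slow one.
    requests = [1, 1, 10, 1]
    print("clocked:   ", clocked_total(requests))
    print("self-timed:", self_timed_total(requests))
```

With these assumed numbers the worst-case-clocked total is 40 units and the self-timed total is 13 units.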



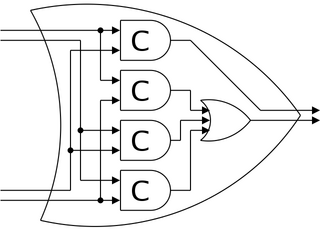

The delay-insensitive (DI) class is the most robust of all asynchronous circuit delay models. It makes no assumptions about the delay of wires or gates. In this model, every transition on a gate or wire must be acknowledged before that gate or wire transitions again, which prevents unseen transitions from occurring. In DI circuits, any transition on an input to a gate must be seen on the output of the gate before a subsequent transition on that input is allowed to happen. This makes some input states or sequences illegal. For example, an OR gate must never enter the state where both inputs are one, because the entry into and exit from this state are not seen on the output of the gate. Although this model is very robust, no practical circuits are possible due to the lack of expressible conditionals in DI circuits. [2] Instead, the quasi-delay-insensitive (QDI) model is the smallest compromise that is still capable of generating useful computing circuits. For this reason, circuits are often incorrectly referred to as delay-insensitive when they are in fact quasi-delay-insensitive.
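The OR-gate example above can be checked with a small simulation. This is an illustrative sketch only: it assumes an ideal two-input OR gate and input sequences in which exactly one input changes per step, and the helper name `unacknowledged_steps` is hypothetical. It reports which input transitions produce no change on the output, that is, which transitions an observer of the output could never see.

```python
def or_gate(a, b):
    """Ideal two-input OR gate."""
    return a | b


def unacknowledged_steps(input_sequence):
    """Return the indices of input steps that leave the output unchanged."""
    hidden = []
    prev_out = or_gate(*input_sequence[0])
    for i, (a, b) in enumerate(input_sequence[1:], start=1):
        out = or_gate(a, b)
        if out == prev_out:
            hidden.append(i)  # input changed, but the output did not show it
        prev_out = out
    return hidden


if __name__ == "__main__":
    # Passes through the state where both inputs are one.
    illegal = [(0, 0), (1, 0), (1, 1), (1, 0), (0, 0)]
    # Never raises both inputs at once.
    legal = [(0, 0), (1, 0), (0, 0), (0, 1), (0, 0)]
    print(unacknowledged_steps(illegal))
    print(unacknowledged_steps(legal))
```

In the first sequence, both the entry into the (1, 1) state and the exit from it leave the output at one, so neither transition is acknowledged. In the second sequence, every input transition is reflected on the output.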