Dekker's algorithm is the first known correct solution to the mutual exclusion problem in concurrent programming where processes only communicate via shared memory. The solution is attributed to Dutch mathematician Th. J. Dekker by Edsger W. Dijkstra in an unpublished paper on sequential process descriptions and his manuscript on cooperating sequential processes. It allows two threads to share a single-use resource without conflict, using only shared memory for communication.

In computer science, mutual exclusion is a property of concurrency control, which is instituted for the purpose of preventing race conditions. It is the requirement that one thread of execution never enters a critical section while a concurrent thread of execution is already accessing said critical section, which refers to an interval of time during which a thread of execution accesses a shared resource or shared memory.

In computer science, a semaphore is a variable or abstract data type used to control access to a common resource by multiple threads and avoid critical section problems in a concurrent system such as a multitasking operating system. Semaphores are a type of synchronization primitive. A trivial semaphore is a plain variable that is changed depending on programmer-defined conditions.

In computing, scheduling is the action of assigning resources to perform tasks. The resources may be processors, network links or expansion cards. The tasks may be threads, processes or data flows.

Peterson's algorithm is a concurrent programming algorithm for mutual exclusion that allows two or more processes to share a single-use resource without conflict, using only shared memory for communication. It was formulated by Gary L. Peterson in 1981. While Peterson's original formulation worked with only two processes, the algorithm can be generalized for more than two.

In computing, a Las Vegas algorithm is a randomized algorithm that always gives correct results; that is, it always produces the correct result or it informs about the failure. However, the runtime of a Las Vegas algorithm differs depending on the input. The usual definition of a Las Vegas algorithm includes the restriction that the expected runtime be finite, where the expectation is carried out over the space of random information, or entropy, used in the algorithm. An alternative definition requires that a Las Vegas algorithm always terminates, but may output a symbol not part of the solution space to indicate failure in finding a solution. The nature of Las Vegas algorithms makes them suitable in situations where the number of possible solutions is limited, and where verifying the correctness of a candidate solution is relatively easy while finding a solution is complex.

In concurrent programming, concurrent accesses to shared resources can lead to unexpected or erroneous behavior, so parts of the program where the shared resource is accessed need to be protected in ways that avoid the concurrent access. One way to do so is known as a critical section or critical region. This protected section cannot be entered by more than one process or thread at a time; others are suspended until the first leaves the critical section. Typically, the critical section accesses a shared resource, such as a data structure, a peripheral device, or a network connection, that would not operate correctly in the context of multiple concurrent accesses.

In computational number theory, the index calculus algorithm is a probabilistic algorithm for computing discrete logarithms. Dedicated to the discrete logarithm in where is a prime, index calculus leads to a family of algorithms adapted to finite fields and to some families of elliptic curves. The algorithm collects relations among the discrete logarithms of small primes, computes them by a linear algebra procedure and finally expresses the desired discrete logarithm with respect to the discrete logarithms of small primes.

Lamport's bakery algorithm is a computer algorithm devised by computer scientist Leslie Lamport, as part of his long study of the formal correctness of concurrent systems, which is intended to improve the safety in the usage of shared resources among multiple threads by means of mutual exclusion.

In computer science, the readers–writers problems are examples of a common computing problem in concurrency. There are at least three variations of the problems, which deal with situations in which many concurrent threads of execution try to access the same shared resource at one time.

URI normalization is the process by which URIs are modified and standardized in a consistent manner. The goal of the normalization process is to transform a URI into a normalized URI so it is possible to determine if two syntactically different URIs may be equivalent.

In computer science, synchronization is the task of coordinating multiple of processes to join up or handshake at a certain point, in order to reach an agreement or commit to a certain sequence of action.

CPU time is the amount of time for which a central processing unit (CPU) was used for processing instructions of a computer program or operating system, as opposed to elapsed time, which includes for example, waiting for input/output (I/O) operations or entering low-power (idle) mode. The CPU time is measured in clock ticks or seconds. Often, it is useful to measure CPU time as a percentage of the CPU's capacity, which is called the CPU usage. CPU time and CPU usage have two main uses.

Connected-component labeling (CCL), connected-component analysis (CCA), blob extraction, region labeling, blob discovery, or region extraction is an algorithmic application of graph theory, where subsets of connected components are uniquely labeled based on a given heuristic. Connected-component labeling is not to be confused with segmentation.

In computing, the producer-consumer problem is a family of problems described by Edsger W. Dijkstra since 1965.

In multiprocessor computer systems, software lockout is the issue of performance degradation due to the idle wait times spent by the CPUs in kernel-level critical sections. Software lockout is the major cause of scalability degradation in a multiprocessor system, posing a limit on the maximum useful number of processors. To mitigate the phenomenon, the kernel must be designed to have its critical sections as short as possible, therefore decomposing each data structure in smaller substructures.

Flashsort is a distribution sorting algorithm showing linear computational complexity O(n) for uniformly distributed data sets and relatively little additional memory requirement. The original work was published in 1998 by Karl-Dietrich Neubert.

In mathematics, the Lucas–Lehmer–Riesel test is a primality test for numbers of the form N = k ⋅ 2n − 1 with odd k < 2n. The test was developed by Hans Riesel and it is based on the Lucas–Lehmer primality test. It is the fastest deterministic algorithm known for numbers of that form. For numbers of the form N = k ⋅ 2n + 1, either application of Proth's theorem or one of the deterministic proofs described in Brillhart–Lehmer–Selfridge 1975 are used.

Szymański's Mutual Exclusion Algorithm is a mutual exclusion algorithm devised by computer scientist Dr. Bolesław Szymański, which has many favorable properties including linear wait, and which extension solved the open problem posted by Leslie Lamport whether there is an algorithm with a constant number of communication bits per process that satisfies every reasonable fairness and failure-tolerance requirement that Lamport conceived of.

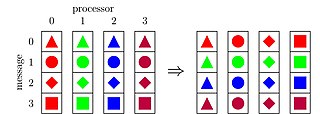

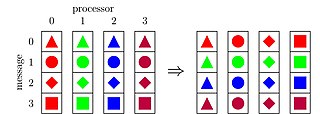

In parallel computing, all-to-all is a collective operation, where each processor sends an individual message to every other processor.