In mathematics, the harmonic mean is one of several kinds of average, and in particular one of the Pythagorean means. Typically, it is appropriate for situations when the average of rates is desired.
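
As a minimal illustration of averaging rates, assume a trip made of equal-distance legs driven at different speeds; the average speed over the whole trip is then the harmonic mean of the leg speeds rather than their arithmetic mean. The helper name `average_speed` and the sample speeds are hypothetical; `statistics.harmonic_mean` is the standard-library equivalent.

```python
from statistics import harmonic_mean


def average_speed(speeds):
    """Average speed over equal-distance legs (harmonic mean of the leg speeds)."""
    if not speeds or any(s <= 0 for s in speeds):
        raise ValueError("speeds must be a non-empty sequence of positive numbers")
    return len(speeds) / sum(1.0 / s for s in speeds)


# Hypothetical trip: one leg at 60 km/h, the return leg at 40 km/h.
speeds = [60.0, 40.0]
print(average_speed(speeds))   # about 48, not the arithmetic mean of 50
print(harmonic_mean(speeds))   # same value from the standard library
```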

The Pareto distribution, named after the Italian civil engineer, economist, and sociologist Vilfredo Pareto, is a power-law probability distribution that is used to describe social, scientific, geophysical, actuarial, and many other types of observable phenomena. Originally applied to describing the distribution of wealth in a society, fitting the trend that a large portion of wealth is held by a small fraction of the population, the Pareto distribution has colloquially become known and referred to as the Pareto principle, or "80-20 rule", and is sometimes called the "Matthew principle". This rule states that, for example, 80% of the wealth of a society is held by 20% of its population. However, one should not conflate the Pareto distribution with the Pareto principle, as the former only produces this result for a particular power value, $\alpha = \log_4 5 \approx 1.16$. While $\alpha$ is variable, empirical observation has found the 80-20 distribution to fit a wide range of cases, including natural phenomena and human activities.
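
The link between the shape parameter and the 80-20 rule can be checked numerically. The sketch below assumes a Pareto (type I) distribution with shape α > 1, for which the share of total wealth held by the richest fraction p of the population is p^(1 − 1/α); this follows from the Lorenz curve of the distribution. The function name `top_share` is hypothetical.

```python
import math


def top_share(p, alpha):
    """Share of the total held by the richest fraction p under Pareto(alpha), alpha > 1."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must lie in (0, 1]")
    if alpha <= 1.0:
        raise ValueError("the mean is infinite for alpha <= 1, so shares are undefined")
    return p ** (1.0 - 1.0 / alpha)


# Shape value that reproduces the 80-20 rule: alpha = log base 4 of 5.
alpha = math.log(5) / math.log(4)
print(round(alpha, 3))                   # 1.161
print(round(top_share(0.2, alpha), 6))   # 0.8

# A different shape gives a different split, so the rule is not generic.
print(top_share(0.2, 2.0))
```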

Quantum superposition is a fundamental principle of quantum mechanics. It states that, much like waves in classical physics, any two quantum states can be added together ("superposed") and the result will be another valid quantum state; and conversely, that every quantum state can be represented as a sum of two or more other distinct states. Mathematically, it refers to a property of solutions to the Schrödinger equation; since the Schrödinger equation is linear, any linear combination of solutions will also be a solution.
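
A minimal sketch of this linearity, assuming a finite-dimensional system whose states are lists of complex amplitudes: a linear combination of two states, once renormalized, is again a valid state. The function names `superpose` and `probabilities` are hypothetical, and the two-level basis states are chosen only for illustration.

```python
import math


def superpose(state_a, state_b, coeff_a, coeff_b):
    """Return the normalized linear combination coeff_a*state_a + coeff_b*state_b."""
    combined = [coeff_a * x + coeff_b * y for x, y in zip(state_a, state_b)]
    norm = math.sqrt(sum(abs(z) ** 2 for z in combined))
    if norm == 0:
        raise ValueError("the combination is the zero vector, which is not a state")
    return [z / norm for z in combined]


def probabilities(state):
    """Measurement probabilities in the basis in which the amplitudes are written."""
    return [abs(z) ** 2 for z in state]


# Two basis states of a two-level system.
ket0 = [1 + 0j, 0j]
ket1 = [0j, 1 + 0j]

# Equal-weight superposition: each outcome has probability close to 1/2.
plus = superpose(ket0, ket1, 1, 1)
print(probabilities(plus))
```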

Calculus of variations is a field of mathematical analysis that uses variations, which are small changes in functions and functionals, to find maxima and minima of functionals: mappings from a set of functions to the real numbers. Functionals are often expressed as definite integrals involving functions and their derivatives. Functions that maximize or minimize functionals may be found using the Euler–Lagrange equation of the calculus of variations.
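
As a worked illustration, take a functional of the common integral form, with the symbols $J$, $L$, $a$, $b$ and $y$ introduced here only for the example:

$$
J[y] = \int_a^b L\bigl(x, y(x), y'(x)\bigr)\,dx ,
\qquad
\frac{\partial L}{\partial y} - \frac{d}{dx}\,\frac{\partial L}{\partial y'} = 0 .
$$

The second relation is the Euler–Lagrange equation that a sufficiently smooth extremal $y$ must satisfy. For the arc-length functional, $L = \sqrt{1 + y'^2}$, it reduces to

$$
\frac{d}{dx}\,\frac{y'}{\sqrt{1 + y'^2}} = 0
\quad\Longrightarrow\quad
y'' = 0 ,
$$

so the extremals are straight lines, in agreement with the fact that the shortest path between two points in the plane is a line segment.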

In probability theory, the Girsanov theorem describes how the dynamics of stochastic processes change when the original measure is changed to an equivalent probability measure. The theorem is especially important in the theory of financial mathematics as it tells how to convert from the physical measure, which describes the probability that an underlying instrument will take a particular value or values, to the risk-neutral measure which is a very useful tool for pricing derivatives on the underlying instrument.
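
A sketch of the standard Brownian-motion case shows what the change of measure looks like in practice. The notation is assumed for illustration: $W$ is a Brownian motion under the physical measure $P$ on $[0, T]$, and $\theta$ is an adapted process satisfying an integrability condition such as Novikov's. Define $Q$ by

$$
\left.\frac{dQ}{dP}\right|_{\mathcal{F}_T}
= \exp\!\left(-\int_0^T \theta_s\,dW_s - \frac{1}{2}\int_0^T \theta_s^2\,ds\right).
$$

Then $\tilde W_t = W_t + \int_0^t \theta_s\,ds$ is a Brownian motion under $Q$. For a stock following $dS_t = \mu S_t\,dt + \sigma S_t\,dW_t$ with a constant interest rate $r$, choosing the constant $\theta = (\mu - r)/\sigma$ gives

$$
dS_t = r S_t\,dt + \sigma S_t\,d\tilde W_t ,
$$

which is the form of the dynamics used under the risk-neutral measure.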

An odds ratio (OR) is a statistic that quantifies the strength of the association between two events, A and B. The odds ratio is defined as the ratio of the odds of A in the presence of B and the odds of A in the absence of B, or equivalently, the ratio of the odds of B in the presence of A and the odds of B in the absence of A. Two events are independent if and only if the OR equals 1: the odds of one event are the same in either the presence or absence of the other event. If the OR is greater than 1, then A and B are associated (correlated) in the sense that, compared to the absence of B, the presence of B raises the odds of A, and symmetrically the presence of A raises the odds of B. Conversely, if the OR is less than 1, then A and B are negatively correlated, and the presence of one event reduces the odds of the other event. The OR plays an important role in the logistic model, which generalizes beyond two events.
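
The definition can be made concrete with a 2×2 table of counts. The sketch below assumes four hypothetical cell counts (both events, A only, B only, neither) and computes the odds ratio as the cross-product ratio, which equals both ratios of odds given in the definition; the function name and the sample counts are illustrative only.

```python
def odds_ratio(both, a_only, b_only, neither):
    """Odds ratio of events A and B from the counts of a 2x2 contingency table.

    both:    observations with A and B
    a_only:  observations with A but not B
    b_only:  observations with B but not A
    neither: observations with neither event
    """
    if a_only == 0 or b_only == 0:
        raise ValueError("odds ratio is undefined when an off-diagonal count is zero")
    # Odds of A given B are both/b_only; odds of A given not-B are a_only/neither.
    return (both * neither) / (a_only * b_only)


# Hypothetical counts with a positive association between A and B.
print(odds_ratio(20, 10, 30, 60))   # 4.0

# Hypothetical counts for independent events.
print(odds_ratio(10, 20, 30, 60))   # 1.0
```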

In mathematical statistics, the Kullback–Leibler divergence is a measure of how one probability distribution is different from a second, reference probability distribution. Applications include characterizing the relative (Shannon) entropy in information systems, randomness in continuous time-series, and information gain when comparing statistical models of inference. In contrast to variation of information, it is a distribution-wise asymmetric measure and thus does not qualify as a statistical metric of spread. In the simple case, a Kullback–Leibler divergence of 0 indicates that the two distributions in question are identical. In simplified terms, it is a measure of surprise, with diverse applications such as applied statistics, fluid mechanics, neuroscience and machine learning.
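
For discrete distributions, the divergence of P from a reference Q is the sum of p·log(p/q) over all outcomes. The sketch below assumes both distributions are given as lists of probabilities over the same outcomes and uses natural logarithms; the function name and the sample distributions are hypothetical. It illustrates the two properties mentioned above: the value is zero for identical distributions, and swapping the arguments changes the result.

```python
import math


def kl_divergence(p, q):
    """Kullback-Leibler divergence D(P || Q) in nats for discrete distributions."""
    if len(p) != len(q):
        raise ValueError("p and q must be defined over the same outcomes")
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue            # terms with p = 0 contribute nothing
        if qi == 0:
            return math.inf     # P puts mass where Q has none
        total += pi * math.log(pi / qi)
    return total


p = [0.5, 0.5]
q = [0.9, 0.1]
print(kl_divergence(p, p))   # 0.0 for identical distributions
print(kl_divergence(p, q))   # differs from the value below: the measure is asymmetric
print(kl_divergence(q, p))
```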

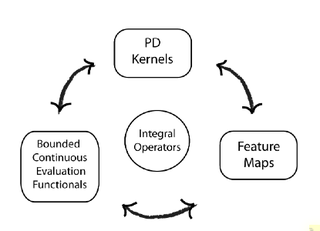

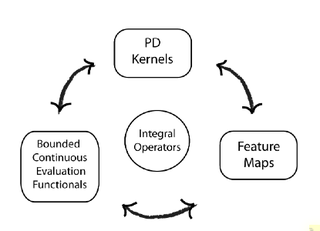

In functional analysis, a reproducing kernel Hilbert space (RKHS) is a Hilbert space of functions in which point evaluation is a continuous linear functional. Roughly speaking, this means that if two functions $f$ and $g$ in the RKHS are close in norm, i.e., $\|f - g\|$ is small, then $f$ and $g$ are also pointwise close, i.e., $|f(x) - g(x)|$ is small for all $x$. The reverse need not be true.
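
The pointwise bound can be made explicit. Writing $H$ for the space, $K$ for its reproducing kernel and $K_x = K(\cdot, x)$ (notation assumed here for illustration), the reproducing property $f(x) = \langle f, K_x \rangle_H$ together with the Cauchy–Schwarz inequality gives, for any two functions $f$ and $g$ in $H$,

$$
|f(x) - g(x)| = \bigl|\langle f - g,\, K_x \rangle_H\bigr|
\le \|f - g\|_H \,\|K_x\|_H
= \|f - g\|_H \,\sqrt{K(x, x)} .
$$

Closeness in norm therefore controls the difference at each point $x$, with a constant that depends on $x$ through $K(x, x)$; the bound is uniform in $x$ when $K(x, x)$ is bounded.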

The equity premium puzzle refers to the inability of an important class of economic models to explain the average premium of a well-diversified U.S. equity portfolio over U.S. Treasury Bills observed for more than 100 years. The term was coined by Rajnish Mehra and Edward C. Prescott in a study published in 1985 titled The Equity Premium: A Puzzle. An earlier version of the paper was published in 1982 under the title A test of the intertemporal asset pricing model. The authors found that a standard general equilibrium model, calibrated to display key U.S. business cycle fluctuations, generated an equity premium of less than 1% for reasonable risk aversion levels. This result stood in sharp contrast with the average equity premium of 6% observed during the historical period.

Empirical risk minimization (ERM) is a principle in statistical learning theory which defines a family of learning algorithms and is used to give theoretical bounds on their performance.
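
A minimal sketch of the principle, assuming a finite hypothesis class of one-dimensional threshold classifiers and the 0-1 loss: the learner picks the hypothesis with the lowest average loss on the training sample. All names and the toy data set are hypothetical and chosen only for illustration.

```python
def zero_one_loss(prediction, label):
    """0-1 loss: 1 for a misclassification, 0 otherwise."""
    return 0 if prediction == label else 1


def make_threshold(t):
    """Classifier that predicts 1 when x >= t and 0 otherwise."""
    return lambda x: 1 if x >= t else 0


def empirical_risk(hypothesis, data, loss):
    """Average loss of a hypothesis over the training sample."""
    return sum(loss(hypothesis(x), y) for x, y in data) / len(data)


def erm_threshold(thresholds, data, loss):
    """Return the threshold whose classifier minimizes the empirical risk."""
    return min(thresholds, key=lambda t: empirical_risk(make_threshold(t), data, loss))


# Toy training sample of (feature, label) pairs.
data = [(0.1, 0), (0.4, 0), (0.6, 1), (0.9, 1)]
thresholds = [0.0, 0.5, 1.0]

best = erm_threshold(thresholds, data, zero_one_loss)
print(best, empirical_risk(make_threshold(best), data, zero_one_loss))
```

The empirical risk of the selected hypothesis is only an estimate of its true risk; the theoretical bounds mentioned above concern the gap between the two.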

In probability theory, the theory of large deviations concerns the asymptotic behaviour of remote tails of sequences of probability distributions. While some basic ideas of the theory can be traced to Laplace, the formalization started with insurance mathematics, namely ruin theory with Cramér and Lundberg. A unified formalization of large deviation theory was developed in 1966, in a paper by Varadhan. Large deviations theory formalizes the heuristic ideas of concentration of measures and widely generalizes the notion of convergence of probability measures.

In operator theory, Naimark's dilation theorem is a result that characterizes positive operator valued measures. It can be viewed as a consequence of Stinespring's dilation theorem.

In actuarial science and applied probability, ruin theory uses mathematical models to describe an insurer's vulnerability to insolvency or ruin. In such models, key quantities of interest are the probability of ruin, the distribution of surplus immediately prior to ruin, and the deficit at the time of ruin.
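
One case in which the probability of ruin has a closed form is the classical compound-Poisson (Cramér–Lundberg) model with exponentially distributed claims. The sketch below assumes that specific model, which is not spelled out in the paragraph above: claims arrive at Poisson rate `lam`, claim sizes are exponential with mean `mean_claim`, premiums are collected continuously at rate `premium_rate`, and `u` is the initial surplus. All parameter names are hypothetical.

```python
import math


def ruin_probability(u, lam, mean_claim, premium_rate):
    """Infinite-horizon ruin probability for exponential claims (Cramér-Lundberg model)."""
    if u < 0:
        raise ValueError("initial surplus must be non-negative")
    if premium_rate <= lam * mean_claim:
        # Without a positive safety loading, ruin is certain in this model.
        return 1.0
    adjustment = 1.0 / mean_claim - lam / premium_rate
    return (lam * mean_claim / premium_rate) * math.exp(-adjustment * u)


# Hypothetical insurer: one claim per unit time on average, mean claim size 1,
# and premiums 25% above the expected claim outflow.
for surplus in (0.0, 5.0, 10.0):
    print(surplus, ruin_probability(surplus, lam=1.0, mean_claim=1.0, premium_rate=1.25))
```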

In financial mathematics, a conditional risk measure is a random variable of the financial risk as if measured at some point in the future. A risk measure can be thought of as a conditional risk measure on the trivial sigma algebra.

In financial mathematics, a deviation risk measure is a function that quantifies financial risk in a different way from a general risk measure. Deviation risk measures generalize the concept of standard deviation.

In financial mathematics, a distortion risk measure is a type of risk measure which is related to the cumulative distribution function of the return of a financial portfolio.

The stochastic discount factor (SDF) is a concept in financial economics and mathematical finance.

In financial mathematics and stochastic optimization, the concept of risk measure is used to quantify the risk involved in a random outcome or risk position. Many risk measures have hitherto been proposed, each having certain characteristics. The entropic value-at-risk (EVaR) is a coherent risk measure introduced by Ahmadi-Javid, which is an upper bound for the value at risk (VaR) and the conditional value-at-risk (CVaR), obtained from the Chernoff inequality. The EVaR can also be represented by using the concept of relative entropy. Because of its connection with the VaR and the relative entropy, this risk measure is called "entropic value-at-risk". The EVaR was developed to tackle some computational inefficiencies of the CVaR. Getting inspiration from the dual representation of the EVaR, Ahmadi-Javid developed a wide class of coherent risk measures, called g-entropic risk measures. Both the CVaR and the EVaR are members of this class.
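
The ordering VaR ≤ CVaR ≤ EVaR can be illustrated in the one case where all three have simple closed forms. The sketch below assumes a normally distributed loss with mean `mu` and standard deviation `sigma`, and a confidence level of 1 − `alpha`; under that assumption the EVaR equals `mu + sigma * sqrt(-2 ln alpha)`. The function name and the parameter values are hypothetical.

```python
import math
from statistics import NormalDist


def normal_risk_measures(mu, sigma, alpha):
    """Return (VaR, CVaR, EVaR) at confidence level 1 - alpha for a normal loss."""
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie strictly between 0 and 1")
    std = NormalDist()                      # standard normal distribution
    z = std.inv_cdf(1.0 - alpha)            # (1 - alpha)-quantile
    var = mu + sigma * z
    cvar = mu + sigma * std.pdf(z) / alpha
    evar = mu + sigma * math.sqrt(-2.0 * math.log(alpha))
    return var, cvar, evar


# Standard normal loss at the 95% confidence level.
var, cvar, evar = normal_risk_measures(mu=0.0, sigma=1.0, alpha=0.05)
print(var, cvar, evar)   # increasing: VaR < CVaR < EVaR
```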

The theory of causal fermion systems is an approach to describing fundamental physics. Its proponents claim it gives quantum mechanics, general relativity and quantum field theory as limiting cases and is therefore a candidate for a unified physical theory.