Related Research Articles

In information security and programming, a buffer overflow, or buffer overrun, is an anomaly where a program, while writing data to a buffer, overruns the buffer's boundary and overwrites adjacent memory locations.

C is a general-purpose, procedural computer programming language supporting structured programming, lexical variable scope, and recursion, with a static type system. By design, C provides constructs that map efficiently to typical machine instructions. It has found lasting use in applications previously coded in assembly language. Such applications include operating systems and various application software for computer architectures that range from supercomputers to PLCs and embedded systems.

In computing, a segmentation fault or access violation is a fault, or failure condition, raised by hardware with memory protection, notifying an operating system (OS) the software has attempted to access a restricted area of memory. On standard x86 computers, this is a form of general protection fault. The operating system kernel will, in response, usually perform some corrective action, generally passing the fault on to the offending process by sending the process a signal. Processes can in some cases install a custom signal handler, allowing them to recover on their own, but otherwise the OS default signal handler is used, generally causing abnormal termination of the process, and sometimes a core dump.

In computing, virtual memory, or virtual storage is a memory management technique that provides an "idealized abstraction of the storage resources that are actually available on a given machine" which "creates the illusion to users of a very large (main) memory".

In computer science, a thread of execution is the smallest sequence of programmed instructions that can be managed independently by a scheduler, which is typically a part of the operating system. The implementation of threads and processes differs between operating systems, but in most cases a thread is a component of a process. The multiple threads of a given process may be executed concurrently, sharing resources such as memory, while different processes do not share these resources. In particular, the threads of a process share its executable code and the values of its dynamically allocated variables and non-thread-local global variables at any given time.

In computing, a core dump, memory dump, crash dump, system dump, or ABEND dump consists of the recorded state of the working memory of a computer program at a specific time, generally when the program has crashed or otherwise terminated abnormally. In practice, other key pieces of program state are usually dumped at the same time, including the processor registers, which may include the program counter and stack pointer, memory management information, and other processor and operating system flags and information. A snapshot dump is a memory dump requested by the computer operator or by the running program, after which the program is able to continue. Core dumps are often used to assist in diagnosing and debugging errors in computer programs.

In computing, DLL Hell is a term for the complications that arise when one works with dynamic-link libraries (DLLs) used with Microsoft Windows operating systems, particularly legacy 16-bit editions, which all run in a single memory space.

A heap overflow, heap overrun, or heap smashing is a type of buffer overflow that occurs in the heap data area. Heap overflows are exploitable in a different manner to that of stack-based overflows. Memory on the heap is dynamically allocated at runtime and typically contains program data. Exploitation is performed by corrupting this data in specific ways to cause the application to overwrite internal structures such as linked list pointers. The canonical heap overflow technique overwrites dynamic memory allocation linkage and uses the resulting pointer exchange to overwrite a program function pointer.

In computing, a crash, or system crash, occurs when a computer program such as a software application or an operating system stops functioning properly and exits. On some operating systems or individual applications, a crash reporting service will report the crash and any details relating to it, usually to the developer(s) of the application. If the program is a critical part of the operating system, the entire system may crash or hang, often resulting in a kernel panic or fatal system error.

Buffer overflow protection is any of various techniques used during software development to enhance the security of executable programs by detecting buffer overflows on stack-allocated variables, and preventing them from causing program misbehavior or from becoming serious security vulnerabilities. A stack buffer overflow occurs when a program writes to a memory address on the program's call stack outside of the intended data structure, which is usually a fixed-length buffer. Stack buffer overflow bugs are caused when a program writes more data to a buffer located on the stack than what is actually allocated for that buffer. This almost always results in corruption of adjacent data on the stack, which could lead to program crashes, incorrect operation, or security issues.

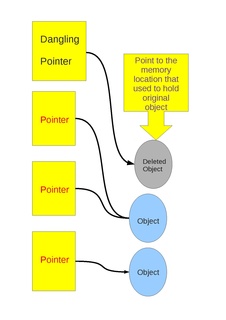

Dangling pointers and wild pointers in computer programming are pointers that do not point to a valid object of the appropriate type. These are special cases of memory safety violations. More generally, dangling references and wild references are references that do not resolve to a valid destination, and include such phenomena as link rot on the internet.

In the context of computer programming, magic is an informal term for abstraction; it is used to describe code that handles complex tasks while hiding that complexity to present a simple interface. The term is somewhat tongue-in-cheek, and often carries bad connotations, implying that the true behavior of the code is not immediately apparent. For example, Perl's polymorphic typing and closure mechanisms are often called "magic". The term implies that the hidden complexity is at least in principle understandable, in contrast to black magic and deep magic, which describe arcane techniques that are deliberately hidden or extremely difficult to understand. However, the term can also be applied endearingly, suggesting a "charm" about the code. The action of such abstractions is described as being done "automagically", a portmanteau of "automatically" and "magically".

In computer programming jargon, a heisenbug is a software bug that seems to disappear or alter its behavior when one attempts to study it. The term is a pun on the name of Werner Heisenberg, the physicist who first asserted the observer effect of quantum mechanics, which states that the act of observing a system inevitably alters its state. In electronics the traditional term is probe effect, where attaching a test probe to a device changes its behavior.

A guard byte is a part of a computer program's memory that helps software developers find buffer overflows while developing the program.

In computer programming, a handle is an abstract reference to a resource that is used when application software references blocks of memory or objects that are managed by another system like a database or an operating system.

In software, a stack buffer overflow or stack buffer overrun occurs when a program writes to a memory address on the program's call stack outside of the intended data structure, which is usually a fixed-length buffer. Stack buffer overflow bugs are caused when a program writes more data to a buffer located on the stack than what is actually allocated for that buffer. This almost always results in corruption of adjacent data on the stack, and in cases where the overflow was triggered by mistake, will often cause the program to crash or operate incorrectly. Stack buffer overflow is a type of the more general programming malfunction known as buffer overflow. Overfilling a buffer on the stack is more likely to derail program execution than overfilling a buffer on the heap because the stack contains the return addresses for all active function calls.

In software engineering and computer science, clobbering a file, processor register or a region of computer memory is the process of overwriting its contents completely, whether intentionally or unintentionally, or to indicate that such an action will likely occur. The Jargon File defines clobbering as

To overwrite, usually unintentionally: "I walked off the end of the array and clobbered the stack." Compare mung, scribble, trash, and smash the stack.

shred is a command on Unix-like operating systems that can be used to securely delete files and devices so that it is extremely difficult to recover them, even with specialized hardware and technology; assuming it's even possible to recover the file at all. It is a part of GNU Core Utilities.

Return-oriented programming (ROP) is a computer security exploit technique that allows an attacker to execute code in the presence of security defenses such as executable space protection and code signing.

Runtime error detection is a software verification method that analyzes a software application as it executes and reports defects that are detected during that execution. It can be applied during unit testing, component testing, integration testing, system testing, or penetration testing.

References

This article or an earlier version of it came from the Jargon File.