See also

| This disambiguation page lists articles associated with the title Frame of reference. If an internal link led you here, you may wish to change the link to point directly to the intended article. |

A frame of reference consists of an abstract coordinate system and the set of physical reference points.

Frame of reference or reference frame may also refer to:

An inertial frame of reference in classical physics and special relativity is a frame in which a body with zero net force acting upon it does not accelerate; that is, such a body is at rest or moving at a constant velocity. An inertial frame of reference can be defined in analytical terms as a frame of reference that describes time and space homogeneously, isotropically, and in a time-independent manner. Conceptually, the physics of a system in an inertial frame has no causes external to the system. An inertial frame of reference may also be called an inertial reference frame, inertial frame, Galilean reference frame, or inertial space.

MPEG-1 is a standard for lossy compression of video and audio. It is designed to compress VHS-quality raw digital video and CD audio down to about 1.5 Mbit/s without excessive quality loss, making video CDs, digital cable/satellite TV and digital audio broadcasting (DAB) possible.

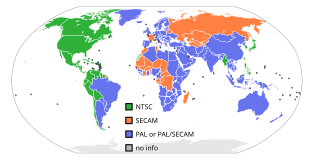

NTSC, named after the National Television System Committee, is the analog television color system that was introduced in North America in 1954 and stayed in use until digital conversion. It was one of three major analog color television standards, the others being PAL and SECAM.

Video is an electronic medium for the recording, copying, playback, broadcasting, and display of moving visual media. Video was first developed for mechanical television systems, which were quickly replaced by cathode ray tube (CRT) systems; these were in turn replaced by flat panel displays of several types.

Frame rate is the frequency (rate) at which consecutive images called frames appear on a display. The term applies equally to film and video cameras, computer graphics, and motion capture systems. Frame rate may also be called the frame frequency, and be expressed in hertz.
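The relationship between frame rate and time can be illustrated with a small sketch. The function names `frame_duration_ms` and `frame_index_at` are hypothetical helpers introduced only for this example, and the sketch assumes a constant frame rate.

```python
from fractions import Fraction


def frame_duration_ms(frame_rate_hz):
    """Return how long a single frame is displayed, in milliseconds."""
    # The duration of one frame is the reciprocal of the frame rate.
    return float(1000 / Fraction(frame_rate_hz))


def frame_index_at(seconds, frame_rate_hz):
    """Return the zero-based index of the frame on display at a given time."""
    # Exact rational arithmetic avoids rounding surprises at frame boundaries.
    return int(Fraction(seconds) * Fraction(frame_rate_hz))


if __name__ == "__main__":
    print(frame_duration_ms(50))   # duration of one frame at 50 Hz
    print(frame_index_at(2, 25))   # frame shown two seconds into 25 Hz video
```

At 50 Hz each frame lasts 20 ms, so two seconds of 25 Hz video span exactly 50 frames.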

Motion compensation is an algorithmic technique used to predict a frame in a video, given the previous and/or future frames by accounting for motion of the camera and/or objects in the video. It is employed in the encoding of video data for video compression, for example in the generation of MPEG-2 files. Motion compensation describes a picture in terms of the transformation of a reference picture to the current picture. The reference picture may be previous in time or even from the future. When images can be accurately synthesized from previously transmitted/stored images, the compression efficiency can be improved.
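One common way to find the transformation from a reference picture is exhaustive block matching, sketched below. This is a minimal illustration rather than the method of any particular codec: frames are assumed to be lists of rows of integer luminance values, and the names `sad`, `find_motion_vector` and `make_frame` are hypothetical.

```python
def sad(ref, cur, rx, ry, cx, cy, size):
    """Sum of absolute differences between a reference block and a current block."""
    return sum(
        abs(ref[ry + j][rx + i] - cur[cy + j][cx + i])
        for j in range(size)
        for i in range(size)
    )


def find_motion_vector(ref, cur, cx, cy, size, search):
    """Search ref around (cx, cy) for the block that best matches cur's block.

    Returns (dx, dy, cost), where (dx, dy) points from the current block
    to the best-matching block in the reference frame.
    """
    height, width = len(ref), len(ref[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            rx, ry = cx + dx, cy + dy
            # Skip candidate blocks that fall outside the reference frame.
            if 0 <= rx <= width - size and 0 <= ry <= height - size:
                cost = sad(ref, cur, rx, ry, cx, cy, size)
                if best is None or cost < best[0]:
                    best = (cost, dx, dy)
    return best[1], best[2], best[0]


def make_frame(x, y):
    """Build a 6x6 black frame with a bright 2x2 object at (x, y)."""
    frame = [[0] * 6 for _ in range(6)]
    for j in range(2):
        for i in range(2):
            frame[y + j][x + i] = 9
    return frame


if __name__ == "__main__":
    reference = make_frame(1, 1)   # object position in the earlier frame
    current = make_frame(3, 2)     # object has moved right by 2, down by 1
    print(find_motion_vector(reference, current, 3, 2, size=2, search=2))
```

An encoder following this idea would store the motion vector plus any residual difference instead of the block's pixels, which is where the compression gain comes from when the prediction is accurate.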

In physics, a frame of reference consists of an abstract coordinate system and the set of physical reference points that uniquely fix the coordinate system and standardize measurements within that frame.

SMPTE timecode is a set of cooperating standards to label individual frames of video or film with a timecode. The system is defined by the Society of Motion Picture and Television Engineers in the SMPTE 12M specification. SMPTE revised the standard in 2008, turning it into a two-part document: SMPTE 12M-1 and SMPTE 12M-2, including new explanations and clarifications.
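As a rough illustration of frame labelling, the sketch below converts between a running frame count and an `HH:MM:SS:FF` label. It assumes an integer frame rate and non-drop-frame counting, and it does not wrap the hours field at 24; the function names are hypothetical and are not taken from the SMPTE documents.

```python
def frames_to_timecode(frame_count, fps):
    """Convert a frame count to an HH:MM:SS:FF label (non-drop-frame)."""
    frames = frame_count % fps
    total_seconds = frame_count // fps
    seconds = total_seconds % 60
    minutes = (total_seconds // 60) % 60
    hours = total_seconds // 3600
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}:{frames:02d}"


def timecode_to_frames(timecode, fps):
    """Convert an HH:MM:SS:FF label back to a frame count (non-drop-frame)."""
    hours, minutes, seconds, frames = (int(part) for part in timecode.split(":"))
    return ((hours * 60 + minutes) * 60 + seconds) * fps + frames


if __name__ == "__main__":
    print(frames_to_timecode(1234, 25))
    print(timecode_to_frames("01:00:00:00", 25))
```

Frame rates such as 29.97 frames per second need drop-frame counting to stay aligned with clock time, which this sketch deliberately leaves out.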

Telecine is the process of transferring motion picture film into video and is performed in a color suite. The term is also used to refer to the equipment used in the post-production process. Telecine enables a motion picture, captured originally on film stock, to be viewed with standard video equipment, such as television sets, video cassette recorders (VCR), DVD, Blu-ray Disc or computers. Initially, this allowed television broadcasters to produce programmes using film, usually 16mm stock, but transmit them in the same format, and quality, as other forms of television production. Furthermore, telecine allows film producers, television producers and film distributors working in the film industry to release their products on video and allows producers to use video production equipment to complete their filmmaking projects. Within the film industry, it is also referred to as a TK, because TC is already used to designate timecode.

Slow motion is an effect in film-making whereby time appears to be slowed down. It was invented by the Austrian priest August Musger in the early 20th century.

CIF, also known as FCIF, is a standardized format for the picture resolution, frame rate, color space, and color subsampling of digital video sequences used in video teleconferencing systems. It was first defined in the H.261 standard in 1988.

In the field of video compression, a video frame is compressed using different algorithms with different advantages and disadvantages, centered mainly around the amount of data compression. These different algorithms for video frames are called picture types or frame types. The three major picture types used in the different video algorithms are I, P and B. They differ in the following characteristics:

720p is a progressive HDTV signal format with 720 horizontal lines and an aspect ratio (AR) of 16:9, normally known as widescreen HDTV (1.78:1). All major HDTV broadcasting standards include a 720p format, which has a resolution of 1280×720; however, there are other formats, including HDV Playback and AVCHD for camcorders, that use 720p images with the standard HDTV resolution. The frame rate is standards-dependent: for conventional broadcasting it is 50 progressive frames per second in former PAL/SECAM countries and 59.94 frames per second in former NTSC countries.

1080i is an abbreviation referring to a combination of frame resolution and scan type, used in high-definition television (HDTV) and high-definition video. The number "1080" refers to the number of horizontal lines on the screen. The "i" is an abbreviation for "interlaced"; this indicates that the odd lines and then the even lines of each frame are drawn alternately, so that each pass draws only half of the lines of a full frame. A related display resolution is 1080p, which also has 1080 lines of resolution; the "p" refers to progressive scan, which indicates that the lines of each frame are drawn on the screen in sequence.
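The difference between interlaced and progressive scan can be sketched by splitting a frame into its two fields and weaving them back together. This is a simplified illustration that assumes a frame is a list of rows with an even number of lines; `split_fields` and `weave` are hypothetical names.

```python
def split_fields(frame):
    """Split a frame into its odd-line and even-line fields.

    Lines are counted from 1, so the odd field holds rows 1, 3, 5, ...
    (list indices 0, 2, 4, ...).
    """
    odd_field = frame[0::2]
    even_field = frame[1::2]
    return odd_field, even_field


def weave(odd_field, even_field):
    """Rebuild a full progressive frame by interleaving the two fields."""
    frame = []
    for odd_line, even_line in zip(odd_field, even_field):
        frame.append(odd_line)
        frame.append(even_line)
    return frame


if __name__ == "__main__":
    # A tiny 6-line frame where every row is filled with its line number.
    frame = [[line] * 4 for line in range(1, 7)]
    odd, even = split_fields(frame)
    print(len(odd), len(even))          # each field has half the lines
    print(weave(odd, even) == frame)    # weaving restores the full frame
```

For a 1080-line frame each field carries 540 lines, whereas a progressive format draws all 1080 lines of every frame in order.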

An inter frame is a frame in a video compression stream which is expressed in terms of one or more neighboring frames. The "inter" part of the term refers to the use of inter-frame prediction. This kind of prediction tries to take advantage of temporal redundancy between neighboring frames, enabling higher compression rates.

576i is a standard-definition video mode originally used for terrestrial television in most countries of the world where the utility frequency for electric power distribution is 50 Hz. Because of its close association with the color encoding system, it is often referred to as simply PAL, PAL/SECAM or SECAM when compared to its 60 Hz NTSC-color-encoded counterpart, 480i. In digital applications it is usually referred to as "576i"; in analogue contexts it is often called "625 lines", and the aspect ratio is usually 4:3 in analogue transmission and 16:9 in digital transmission.

H.262 or MPEG-2 Part 2 is a video coding format standardised and jointly maintained by ITU-T Video Coding Experts Group (VCEG) and ISO/IEC Moving Picture Experts Group (MPEG), and developed with the involvement of many companies. It is the second part of the ISO/IEC MPEG-2 standard. The ITU-T Recommendation H.262 and ISO/IEC 13818-2 documents are identical.

In filmmaking, video production, animation, and related fields, a frame is one of the many still images which compose the complete moving picture. The term derives from the fact that, from the beginning of modern filmmaking toward the end of the 20th century, and in many places still today, the individual images were recorded on a strip of photographic film, which historically grew quickly in length; each image on such a strip looks rather like a framed picture when examined individually.

In video coding, a group of pictures, or GOP structure, specifies the order in which intra- and inter-frames are arranged. The GOP is a collection of successive pictures within a coded video stream. Each coded video stream consists of successive GOPs, from which the visible frames are generated. Encountering a new GOP in a compressed video stream means that the decoder doesn't need any previous frames in order to decode the next ones, and allows fast seeking through the video.
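The seeking behaviour described above can be sketched as follows. The example assumes that each GOP begins with an I-frame, that the GOPs can be decoded independently, and that the stream is described by a string of picture-type letters; `gop_starts` and `seek_start` are hypothetical helper names.

```python
def gop_starts(frame_types):
    """Return the indices at which a new GOP begins (assumed to be each I-frame)."""
    return [index for index, kind in enumerate(frame_types) if kind == "I"]


def seek_start(frame_types, target):
    """Return the index from which decoding must begin to show frame `target`."""
    candidates = [index for index in gop_starts(frame_types) if index <= target]
    if not candidates:
        raise ValueError("no GOP start at or before the target frame")
    # Decoding can begin at the latest GOP start that precedes the target.
    return candidates[-1]


if __name__ == "__main__":
    stream = "IBBPBBPBBIBBPBBPBB"   # two GOPs of nine pictures each
    print(gop_starts(stream))
    print(seek_start(stream, 13))
```

To display frame 13 the decoder only has to start from frame 9, skipping the whole first GOP, which is why shorter GOPs make seeking faster at the cost of more I-frames.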

Reference frames are frames of a compressed video that are used to define future frames. As such, they are only used in inter-frame compression techniques. In older video encoding standards, such as MPEG-2, only one reference frame – the previous frame – was used for P-frames. Two reference frames were used for B-frames.