The graphical user interface (GUI) is a form of user interface that allows users to interact with electronic devices through graphical icons and visual indicators such as secondary notation, rather than through text-based user interfaces, typed command labels or text navigation. GUIs were introduced in reaction to the perceived steep learning curve of command-line interfaces (CLIs), which require commands to be typed on a computer keyboard.

In computing, a pointing device gesture or mouse gesture is a way of combining pointing device or finger movements and clicks that the software recognizes as a specific computer event and responds to in a manner particular to that software. Such gestures can be useful for people who have difficulty typing on a keyboard. For example, in a web browser, a user can navigate to the previously viewed page by pressing the right button of the pointing device, moving the pointing device briefly to the left, then releasing the button.
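The browser example above can be sketched as a small recognizer that records the pointer path between the right-button press and release, then classifies the stroke. This is a minimal illustration under stated assumptions, not any browser's actual implementation: the class `GestureRecognizer`, its event methods, and both pixel thresholds are hypothetical.

```python
from typing import List, Optional, Tuple

# Hypothetical thresholds in pixels; real gesture software tunes its own values.
MIN_STROKE = 30  # minimum leftward travel for the stroke to count
MAX_DRIFT = 20   # maximum vertical wobble tolerated during the stroke


class GestureRecognizer:
    """Records pointer motion while the right button is held down."""

    def __init__(self) -> None:
        self.path: List[Tuple[int, int]] = []
        self.recording = False

    def on_right_down(self, x: int, y: int) -> None:
        # Start a new gesture at the press location.
        self.recording = True
        self.path = [(x, y)]

    def on_move(self, x: int, y: int) -> None:
        # Only track movement that happens while the button is held.
        if self.recording:
            self.path.append((x, y))

    def on_right_up(self, x: int, y: int) -> Optional[str]:
        """Finish the gesture and return the recognized action, if any."""
        if not self.recording:
            return None
        self.recording = False
        self.path.append((x, y))
        # Only the first and last points are compared in this simple sketch.
        dx = self.path[-1][0] - self.path[0][0]
        dy = self.path[-1][1] - self.path[0][1]
        # A mostly horizontal leftward stroke means "go back".
        if dx <= -MIN_STROKE and abs(dy) <= MAX_DRIFT:
            return "back"
        return None
```

Pressing at (200, 100), moving to (180, 102) and releasing at (150, 101) yields `"back"`; a rightward stroke, a stroke shorter than `MIN_STROKE`, or a release without a preceding press yields `None`.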

A pointing device is an input interface that allows a user to input spatial data to a computer. CAD systems and graphical user interfaces (GUIs) allow the user to control and provide data to the computer using physical gestures, such as moving a hand-held mouse or similar device across the surface of a physical desktop and activating switches on the mouse. Movements of the pointing device are echoed on the screen by movements of the pointer and other visual changes. Common gestures include point-and-click and drag-and-drop.
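Software has to decide whether a press-and-release was a simple click or the start of a drag-and-drop. A common approach is a small dead zone: if the pointer barely moved, treat it as a click. The sketch below simplifies this to comparing only the press and release positions, and the 4-pixel `DRAG_THRESHOLD` is a hypothetical value; platforms define their own thresholds and usually switch into drag mode as soon as the threshold is crossed during movement.

```python
from typing import Tuple

Point = Tuple[int, int]

# Hypothetical dead zone in pixels; platforms define their own drag threshold.
DRAG_THRESHOLD = 4


def classify_press(press: Point, release: Point) -> str:
    """Return "click" if the pointer stayed within the dead zone, else "drag-and-drop"."""
    dx = release[0] - press[0]
    dy = release[1] - press[1]
    # Compare squared distances to avoid computing a square root.
    if dx * dx + dy * dy <= DRAG_THRESHOLD * DRAG_THRESHOLD:
        return "click"
    return "drag-and-drop"
```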

A touchpad or trackpad is a pointing device featuring a tactile sensor, a specialized surface that translates the motion and position of a user's fingers into a relative position that the operating system outputs to the screen. Touchpads are a common feature of laptop computers and are also used as a substitute for a mouse where desk space is scarce. Because they come in a range of sizes, touchpads can also be found on personal digital assistants (PDAs) and some portable media players. Wireless touchpads are also available as standalone accessories.
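The relative positioning described above means the pad reports how far the finger moved, and the pointer moves by a scaled version of that displacement. A minimal sketch, assuming a hypothetical constant gain (real drivers usually apply a pointer-acceleration curve) and a pointer clamped to the screen edges; `Pointer` and `apply_touchpad_motion` are illustrative names.

```python
from dataclasses import dataclass

# Hypothetical constant gain; real drivers usually apply an acceleration curve.
GAIN = 2.0


@dataclass(frozen=True)
class Pointer:
    x: float
    y: float


def apply_touchpad_motion(p: Pointer, dx: float, dy: float,
                          screen_w: int, screen_h: int) -> Pointer:
    """Move the pointer by a scaled finger displacement, clamped to the screen."""
    # Relative mapping: the finger's motion, not its absolute position on the
    # pad, determines where the pointer goes.
    new_x = min(max(p.x + dx * GAIN, 0), screen_w - 1)
    new_y = min(max(p.y + dy * GAIN, 0), screen_h - 1)
    return Pointer(new_x, new_y)
```

On a 1920 by 1080 screen, a finger motion of (10, -5) moves a pointer at (100, 100) to (120.0, 90.0).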

In computer displays, filmmaking, television production, and other kinetic displays, scrolling is sliding text, images or video across a monitor or display, vertically or horizontally. Scrolling as such does not change the layout of the text or pictures but moves the user's view across what is apparently a larger image that is not wholly seen. A common television and movie special effect is to scroll credits while leaving the background stationary. Scrolling may take place completely without user intervention or, on an interactive device, be triggered by a touchscreen gesture or a keypress and continue without further intervention until another user action, or be controlled entirely by input devices.
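The point that scrolling moves the view rather than the content can be illustrated with a line-based text viewport: the list of lines is never modified, and only an offset into it changes. The `Viewport` class below is a hypothetical sketch, not the API of any particular toolkit.

```python
from typing import List


class Viewport:
    """A fixed-height window onto a longer list of text lines."""

    def __init__(self, lines: List[str], height: int) -> None:
        self.lines = lines    # the content itself is never rearranged
        self.height = height  # number of lines visible at once
        self.offset = 0       # index of the first visible line

    def scroll(self, delta: int) -> None:
        """Shift the view by delta lines, stopping at the top and bottom."""
        max_offset = max(len(self.lines) - self.height, 0)
        self.offset = min(max(self.offset + delta, 0), max_offset)

    def visible(self) -> List[str]:
        return self.lines[self.offset:self.offset + self.height]
```

With ten lines and a height of 3, `scroll(2)` shows lines 2 to 4, and a large positive scroll stops at the last full screen (offset 7).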

Neonode started out as a mobile phone manufacturer, founded in Sweden by Magnus Goertz and Thomas Eriksson. Neonode Inc., now the parent company, focuses solely on developing, licensing and selling the company's patented optical touch technology. The technology, named zForce, uses a web of light beams and is used in mobile phones, tablets, e-readers and other devices with touch screens. The company operates from Stockholm, Sweden, with offices in San Jose, Detroit, Seoul, Tokyo and Taipei.

A virtual keyboard is a software component that allows the input of characters without the need for physical keys. Users mostly interact with a virtual keyboard through a touchscreen, although virtual keyboards can also take other forms in virtual or augmented reality.
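At its core, a touchscreen virtual keyboard maps each touch coordinate to the key drawn at that location. The sketch assumes a hypothetical layout of three unstaggered QWERTY rows with equally sized keys; real keyboards stagger rows, vary key widths, and often use language models to correct near-misses.

```python
from typing import Optional

# Hypothetical layout: three unstaggered QWERTY rows of equally sized keys.
ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]
KEY_W = 40  # key width in pixels (assumed)
KEY_H = 60  # key height in pixels (assumed)


def key_at(x: float, y: float) -> Optional[str]:
    """Return the character under a touch at (x, y), or None if no key is hit."""
    row = int(y // KEY_H)
    col = int(x // KEY_W)
    if not 0 <= row < len(ROWS):
        return None
    keys = ROWS[row]
    if not 0 <= col < len(keys):
        return None
    return keys[col]
```

A touch at (85, 70) falls in the second row, third column, so `key_at(85, 70)` returns `"d"`.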

A touch user interface (TUI) is a computer-pointing technology based upon the sense of touch (haptics). Whereas a graphical user interface (GUI) relies upon the sense of sight, a TUI not only lets the sense of touch activate computer-based functions but also gives users, particularly those with visual impairments, an added level of interaction through tactile or Braille input.

Microsoft PixelSense is an interactive surface computing platform that allows one or more people to use and touch real-world objects and share digital content at the same time. The PixelSense platform consists of software and hardware products that combine vision-based multi-touch PC hardware, 360-degree multi-user application design, and Windows software to create a natural user interface (NUI).

Surface computing is the use of a specialized computer GUI in which traditional GUI elements are replaced by intuitive, everyday objects. Instead of a keyboard and mouse, the user interacts with a surface. Typically the surface is a touch-sensitive screen, though other surface types like non-flat three-dimensional objects have been implemented as well. It has been said that this more closely replicates the familiar hands-on experience of everyday object manipulation.

In electrical engineering, a resistive touchscreen is a touch-sensitive computer display composed of two flexible sheets coated with a resistive material and separated by an air gap or microdots.
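When the top sheet is pressed against the bottom one, the contact point divides the resistive coating like a voltage divider. Assuming the common 4-wire design, the controller applies a voltage across one sheet and reads the voltage at the contact point through the other, so the reading is proportional to position along that axis; swapping the roles of the sheets gives the other axis. The sketch converts such readings into pixel coordinates; the 12-bit ADC range and the calibration values in `read_touch` are hypothetical.

```python
def adc_to_pixel(raw: int, raw_min: int, raw_max: int, length_px: int) -> float:
    """Map a voltage-divider ADC reading onto a pixel coordinate along one axis.

    raw_min and raw_max are calibration readings taken at the two screen edges.
    """
    frac = (raw - raw_min) / (raw_max - raw_min)
    frac = min(max(frac, 0.0), 1.0)  # clamp readings outside the calibrated range
    return frac * (length_px - 1)


def read_touch(raw_x: int, raw_y: int, width: int, height: int) -> tuple:
    """Convert one X reading and one Y reading into an (x, y) pixel position."""
    # Hypothetical calibration for a 12-bit ADC (readings 0..4095).
    x = adc_to_pixel(raw_x, 200, 3900, width)
    y = adc_to_pixel(raw_y, 300, 3800, height)
    return x, y
```

A reading exactly halfway between the calibration limits maps to the middle pixel, so on an 801 by 801 display `read_touch(2050, 2050, 801, 801)` returns `(400.0, 400.0)`.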

In electrical engineering, capacitive sensing is a technology, based on capacitive coupling, that can detect and measure anything that is conductive or has a dielectric different from air.
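One way to detect the change in capacitance, used here purely as an illustration (the charge-time or RC method), is to charge the sensor electrode through a known resistor and time how long it takes to reach a threshold voltage. Solving $V(t) = V_{\text{supply}}(1 - e^{-t/RC})$ for $t$ gives the charge time; a nearby finger adds capacitance, so charging takes longer. The component values and the 20 percent margin are hypothetical.

```python
import math


def charge_time(r_ohms: float, c_farads: float,
                v_threshold: float, v_supply: float) -> float:
    """Time for an RC circuit charging from 0 V to reach v_threshold.

    From V(t) = v_supply * (1 - exp(-t / (R * C))), solved for t.
    """
    return -r_ohms * c_farads * math.log(1.0 - v_threshold / v_supply)


def is_touched(measured: float, baseline: float, margin: float = 0.2) -> bool:
    """Report a touch when charging takes noticeably longer than the untouched baseline."""
    return measured > baseline * (1.0 + margin)


# Hypothetical values: 1 MOhm resistor, 10 pF electrode, finger adds about 5 pF.
R = 1e6
BASELINE = charge_time(R, 10e-12, 1.65, 3.3)
TOUCHED = charge_time(R, 15e-12, 1.65, 3.3)
```

With a threshold at half the supply voltage, the untouched electrode charges in about 6.93 microseconds ($RC \ln 2$), and the extra 5 pF raises that by 50 percent, well past the margin.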

Microsoft Tablet PC is a term coined by Microsoft for pen-enabled tablet computers that conform to a set of hardware specifications Microsoft announced in 2001 and that run a licensed copy of the Windows XP Tablet PC Edition operating system or a derivative of it.

The Sony Ericsson Xperia X8 is a mid-range 3G Android smartphone developed by Sony Ericsson as part of the Xperia series and released in Q4 2010. It was sold in many countries worldwide, including in the United States on AT&T Mobility and on low-end pay-monthly contracts in the UK. It originally shipped running Android 1.6 but was upgraded to Android 2.1 in early 2011.

The HTC Evo Shift 4G is a smartphone developed by HTC Corporation and marketed as a concurrent sequel to Sprint's flagship Android smartphone. It runs on Sprint's 4G WiMAX network and launched on January 9, 2011.

The BlackBerry Z30 is a high-end 4G touchscreen smartphone developed by BlackBerry. Announced on September 18, 2013, it succeeds the Z10 as the second all-touchscreen device to run the BlackBerry 10 operating system. The Z30 includes a 5-inch 720p Super AMOLED display with "quad-core graphics", speakers and microphones with "Natural Sound" technology, six processor cores and a non-removable 2880 mAh battery. The BlackBerry Z30 also uses Paratek Antenna Technology, a set of proprietary advancements in antenna hardware and tuning technology aimed at improving performance, especially in regions with low signal.

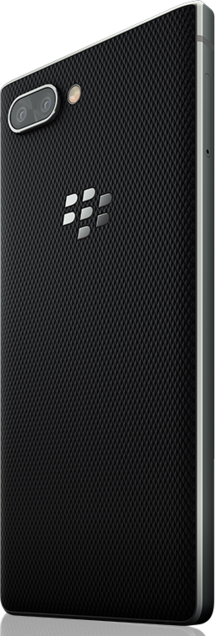

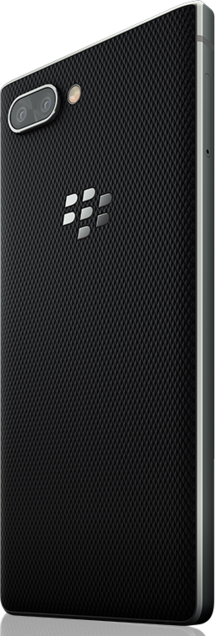

The BlackBerry Key2 is a touchscreen-based Android smartphone with a portrait-oriented, fixed integrated hardware keyboard that is manufactured by TCL Corporation under the brand name of BlackBerry Mobile. Originally known by its unofficial codename "Athena", the Key2 was officially announced in New York on June 7, 2018. The Key2 is the successor to the BlackBerry KeyOne, and the seventh BlackBerry smartphone to run the Android operating system.