A photograph is an image created by light falling on a photosensitive surface, usually photographic film or an electronic image sensor, such as a CCD or a CMOS chip. Most photographs are now created using a smartphone or camera, which uses a lens to focus the scene's visible wavelengths of light into a reproduction of what the human eye would see. The process and practice of creating such images is called photography.

Restoration is the act of restoring something to its original state. This may refer to:

Visualization or visualisation may refer to:

Photograph manipulation involves the transformation or alteration of a photograph. Some photograph manipulations are considered to be skillful artwork, while others are considered to be unethical practices, especially when used to deceive. Motives for manipulating photographs include political propaganda, altering the appearance of a subject, entertainment and humor.

Tele-snaps were off-screen photographs of British television broadcasts, taken and sold commercially by John Cura. From 1947 until 1968, Cura ran a business selling the 250,000-plus tele-snaps he took. Each photograph occupied half of a normal frame of 35 mm film and was taken at an exposure of 1/25 of a second. Generally around 70–80 tele-snaps were taken of each programme. Before videocassette recorders became prevalent, they were mostly purchased by actors and directors as records and examples of their work.

The Shroud of Turin, also known as the Holy Shroud, is a length of linen cloth that bears a faint image of the front and back of a man. It has been venerated for centuries, especially by members of the Catholic Church, as the actual burial shroud used to wrap the body of Jesus of Nazareth after his crucifixion, and upon which Jesus's bodily image is miraculously imprinted. The human image on the shroud can be discerned more clearly in a black and white photographic negative than in its natural sepia color, an effect discovered in 1898 by Secondo Pia, who produced the first photographs of the shroud. This negative image is associated with a popular Catholic devotion to the Holy Face of Jesus.

Geograph Britain and Ireland is a web-based project, begun in March 2005, to create a freely accessible archive of geographically located photographs of Great Britain and Ireland. Photographs in the Geograph collection are chosen to illustrate significant or typical features of each 1 km × 1 km (100 ha) grid square in the Ordnance Survey National Grid and the Irish national grid reference system. There are 332,216 such grid squares containing at least some land or permanent structure, of which 281,131 have Geographs.

Trinity and Beyond: The Atomic Bomb Movie is a 1995 American documentary film directed by Peter Kuran and narrated by William Shatner.

The following outline is provided as an overview of and topical guide to photography:

Lunch atop a Skyscraper is a black-and-white photograph taken on September 20, 1932, of eleven ironworkers sitting on a steel beam of the RCA Building, 850 feet above the ground during the construction of Rockefeller Center in Manhattan, New York City. It was arranged as a publicity stunt, part of a campaign promoting the skyscraper.

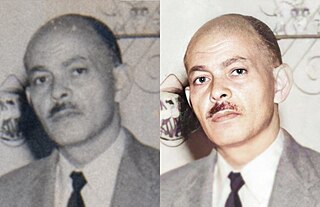

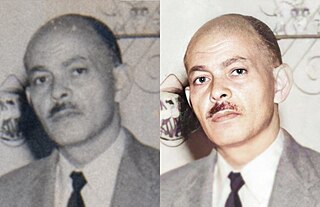

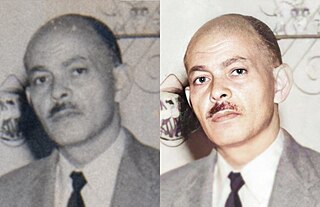

Digital photograph restoration is the practice of restoring the appearance of a digital copy of a physical photograph that has been damaged by natural, man-made, or environmental causes, or affected by age or neglect.

Inpainting is a conservation process where damaged, deteriorated, or missing parts of an artwork are filled in to present a complete image. This process is commonly used in image restoration. It can be applied to both physical and digital art mediums such as oil or acrylic paintings, chemical photographic prints, sculptures, or digital images and video.
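For digital images, the fill-in step can be illustrated with a very small sketch. The example below assumes a grayscale image stored as a list of rows and a hypothetical boolean `mask` marking the damaged pixels; it fills each damaged pixel by repeatedly averaging its four neighbours (a simple diffusion scheme). The function name `inpaint_diffusion` and the iteration count are illustrative assumptions, and practical inpainting tools use considerably more sophisticated methods.

```python
def inpaint_diffusion(image, mask, iterations=200):
    """Fill masked pixels by repeatedly averaging their 4-connected neighbours.

    image: list of rows of numbers (grayscale intensities).
    mask:  same shape as image; True marks a damaged pixel to be filled.
    """
    height, width = len(image), len(image[0])
    # Work on a copy. Damaged pixels start at 0.0 so their old values are ignored.
    result = [[0.0 if mask[y][x] else float(image[y][x]) for x in range(width)]
              for y in range(height)]
    for _ in range(iterations):
        updated = [row[:] for row in result]
        for y in range(height):
            for x in range(width):
                if not mask[y][x]:
                    continue  # intact pixels are never modified
                neighbours = [result[ny][nx]
                              for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                              if 0 <= ny < height and 0 <= nx < width]
                updated[y][x] = sum(neighbours) / len(neighbours)
        result = updated
    return result


# Hypothetical test image: a 5x5 horizontal gradient with a damaged 3x3 centre.
original = [[10.0 * x for x in range(5)] for _ in range(5)]
mask = [[1 <= y <= 3 and 1 <= x <= 3 for x in range(5)] for y in range(5)]
damaged = [[-1.0 if mask[y][x] else original[y][x] for x in range(5)] for y in range(5)]
restored = inpaint_diffusion(damaged, mask)
```

Averaging works well here because the surrounding image is a smooth gradient; it would blur any texture or edges that crossed the damaged region.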

Photo editing can refer to:

Image restoration is the operation of taking a corrupt or noisy image and estimating the clean, original image. Corruption may come in many forms, such as motion blur, noise, and camera misfocus. Restoration is performed by reversing the process that blurred the image: a point source is imaged, and the resulting point-source image, called the point spread function (PSF), is used to recover the image information lost to the blurring process.
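As a minimal sketch of reversing a known blur, the example below works on a one-dimensional signal rather than a full image. It assumes the blur is a circular convolution with a known PSF and that there is no noise; the helper names (`dft`, `circular_blur`, `restore`) and the small regularisation constant `eps` are illustrative assumptions, not part of any particular library. Real photographs contain noise, which calls for noise-aware methods rather than this plain inverse filter.

```python
import cmath


def dft(values, inverse=False):
    """Naive O(n^2) discrete Fourier transform, adequate for a tiny demo."""
    n = len(values)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        total = sum(values[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n)
                    for t in range(n))
        out.append(total / n if inverse else total)
    return out


def circular_blur(signal, psf):
    """Blur by circular convolution with the PSF (the assumed degradation model)."""
    n = len(signal)
    return [sum(signal[(i - j) % n] * psf[j] for j in range(n)) for i in range(n)]


def restore(blurred, psf, eps=1e-9):
    """Regularised inverse filter: undo the blur in the frequency domain."""
    blurred_spectrum = dft(blurred)
    psf_spectrum = dft(psf)
    # conj(H) / (|H|^2 + eps) is close to 1/H, but stays finite where H is near zero.
    estimate = [b * h.conjugate() / (abs(h) ** 2 + eps)
                for b, h in zip(blurred_spectrum, psf_spectrum)]
    return [v.real for v in dft(estimate, inverse=True)]


# Hypothetical data: a symmetric 3-tap PSF (wrapped around index 0) and a small signal.
psf = [0.6, 0.2, 0.0, 0.0, 0.0, 0.0, 0.0, 0.2]
original = [0.0, 0.0, 1.0, 3.0, 1.0, 0.0, 0.0, 0.0]

# Imaging a point source under this model returns the PSF itself.
point_source = [1.0] + [0.0] * 7
measured_psf = circular_blur(point_source, psf)

blurred = circular_blur(original, psf)
restored = restore(blurred, measured_psf)
```

The division is only well behaved because this PSF has no zeros in its spectrum; where the spectrum approaches zero, `eps` limits the amplification at the cost of an imperfect reconstruction.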

Conservation photography is the active use of the photographic process and its products, within the parameters of photojournalism, to advocate for conservation outcomes.

Carol McKinney Highsmith, known as America's documentarian, is a photographer and publisher who has photographed in all the states of the United States as well as the District of Columbia and Puerto Rico. She photographs the entire American vista in all fifty U.S. states as a visual record of the nation in the late 20th and early 21st centuries. For 45 years, Highsmith has traveled more than 2 million miles of American roads with her husband, writer-editor Ted Landphair, with whom she is now documenting their final state, their home state of Maryland, in partnership with Maryland Public Television.

During its history, the Shroud of Turin has been subjected to repairs and restoration, such as after the fire that damaged it in 1532. Since 1578 the Shroud has been kept in the Royal Chapel of Turin Cathedral. Currently it is stored under the laminated bulletproof glass of an airtight case filled with chemically neutral gases. The temperature- and humidity-controlled case is filled with argon (99.5%) and oxygen (0.5%) to prevent chemical changes. The Shroud itself is kept on an aluminum support sliding on runners and stored flat within the case.

A photograph conservator is a professional who examines, documents, researches, and treats photographs. The work includes documenting the structure and condition of artworks through written and photographic records and monitoring the conditions of works in storage, exhibition, and transit environments. The conservator also performs all aspects of the treatment of photographs and related artworks in adherence to the professional Code of Ethics.

The conservation and restoration of film is the physical care and treatment of film-based materials. These include photographic film and motion picture film stock.

Hair Like Mine is a 2009 photograph by Pete Souza of a five-year-old child, Jacob Philadelphia, touching the head of Barack Obama, then president of the United States. Obama invited Philadelphia to touch his hair after the boy asked whether Obama's hair was similar to his own afro-textured hair. Time called the image "iconic", and it was later described by First Lady Michelle Obama as symbolizing progress made in the African-American struggle for civil rights.