An operating system (OS) is system software that manages computer hardware and software resources, and provides common services for computer programs.

A real-time operating system (RTOS) is an operating system (OS) for real-time computing applications that processes data and events that have critically defined time constraints. An RTOS is distinct from a time-sharing operating system, such as Unix, which manages the sharing of system resources with a scheduler, data buffers, or fixed task prioritization in multitasking or multiprogramming environments. Processing time requirements need to be fully understood and bound rather than just kept as a minimum. All processing must occur within the defined constraints. Real-time operating systems are event-driven and preemptive, meaning the OS can monitor the relevant priority of competing tasks, and make changes to the task priority. Event-driven systems switch between tasks based on their priorities, while time-sharing systems switch the task based on clock interrupts.

In computing, a process is the instance of a computer program that is being executed by one or many threads. There are many different process models, some of which are light weight, but almost all processes are rooted in an operating system (OS) process which comprises the program code, assigned system resources, physical and logical access permissions, and data structures to initiate, control and coordinate execution activity. Depending on the OS, a process may be made up of multiple threads of execution that execute instructions concurrently.

In computing, scheduling is the action of assigning resources to perform tasks. The resources may be processors, network links or expansion cards. The tasks may be threads, processes or data flows.

Round-robin (RR) is one of the algorithms employed by process and network schedulers in computing. As the term is generally used, time slices are assigned to each process in equal portions and in circular order, handling all processes without priority. Round-robin scheduling is simple, easy to implement, and starvation-free. Round-robin scheduling can be applied to other scheduling problems, such as data packet scheduling in computer networks. It is an operating system concept.

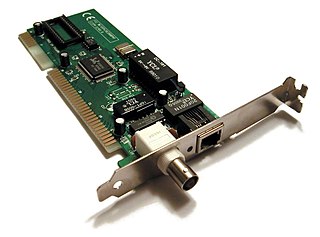

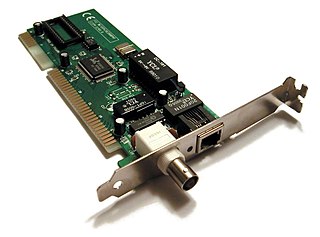

A network interface controller is a computer hardware component that connects a computer to a computer network.

In computer science, resource starvation is a problem encountered in concurrent computing where a process is perpetually denied necessary resources to process its work. Starvation may be caused by errors in a scheduling or mutual exclusion algorithm, but can also be caused by resource leaks, and can be intentionally caused via a denial-of-service attack such as a fork bomb.

Weighted round robin (WRR) is a network scheduler for data flows, but also used to schedule processes.

The MCP is the operating system of the Burroughs B5000/B5500/B5700 and the B6500 and successors, including the Unisys Clearpath/MCP systems.

Micro-Controller Operating Systems is a real-time operating system (RTOS) designed by Jean J. Labrosse in 1991. It is a priority-based preemptive real-time kernel for microprocessors, written mostly in the programming language C. It is intended for use in embedded systems.

In computer science, a multilevel feedback queue is a scheduling algorithm. Scheduling algorithms are designed to have some process running at all times to keep the central processing unit (CPU) busy. The multilevel feedback queue extends standard algorithms with the following design requirements:

- Separate processes into multiple ready queues based on their need for the processor.

- Give preference to processes with short CPU bursts.

- Give preference to processes with high I/O bursts.

In computer science, asynchronous I/O is a form of input/output processing that permits other processing to continue before the I/O operation has finished. A name used for asynchronous I/O in the Windows API is overlapped I/O.

A job scheduler is a computer application for controlling unattended background program execution of jobs. This is commonly called batch scheduling, as execution of non-interactive jobs is often called batch processing, though traditional job and batch are distinguished and contrasted; see that page for details. Other synonyms include batch system, distributed resource management system (DRMS), distributed resource manager (DRM), and, commonly today, workload automation (WLA). The data structure of jobs to run is known as the job queue.

Fair queuing is a family of scheduling algorithms used in some process and network schedulers. The algorithm is designed to achieve fairness when a limited resource is shared, for example to prevent flows with large packets or processes that generate small jobs from consuming more throughput or CPU time than other flows or processes.

Maximum throughput scheduling is a procedure for scheduling data packets in a packet-switched best-effort network, typically a wireless network, in view to maximize the total throughput of the network, or the system spectral efficiency in a wireless network. This is achieved by giving scheduling priority to the least "expensive" data flows in terms of consumed network resources per transferred amount of information.

The Completely Fair Scheduler (CFS) was a process scheduler that was merged into the 2.6.23 release of the Linux kernel. It was the default scheduler of the tasks of the SCHED_NORMAL class and handled CPU resource allocation for executing processes, aiming to maximize overall CPU utilization while also maximizing interactive performance.

A process is a program in execution, and an integral part of any modern-day operating system (OS). The OS must allocate resources to processes, enable processes to share and exchange information, protect the resources of each process from other processes and enable synchronization among processes. To meet these requirements, the OS must maintain a data structure for each process, which describes the state and resource ownership of that process, and which enables the OS to exert control over each process.

Nano-RK is a wireless sensor networking real-time operating system (RTOS) from Carnegie Mellon University, designed to run on microcontrollers for use in sensor networks. Nano-RK supports a fixed-priority fully preemptive scheduler with fine-grained timing primitives to support real-time task sets. "Nano" implies that the RTOS is small, using 2 KB of random-access memory (RAM) and using 18 KB of flash memory, while RK is short for resource kernel. A resource kernel provides reservations on how often system resources can be used. For example, a task might only be allowed to execute 10 ms every 150 ms, or a node might only be allowed to transmit 10 network packets per minute. These reservations form a virtual energy budget to ensure a node meets its designed battery lifetime and to prevent a failed node from generating excessive network traffic. Nano-RK is open-source software, is written in C and runs on the Atmel-based FireFly sensor networking platform, the MicaZ motes, and the MSP430 processor.

The Brain Fuck Scheduler (BFS) is a process scheduler designed for the Linux kernel in August 2009 based on earliest eligible virtual deadline first scheduling (EEVDF), as an alternative to the Completely Fair Scheduler (CFS) and the O(1) scheduler. BFS was created by Con Kolivas.

Processor sharing or egalitarian processor sharing is a service policy where the customers, clients or jobs are all served simultaneously, each receiving an equal fraction of the service capacity available. In such a system all jobs start service immediately.