Related Research Articles

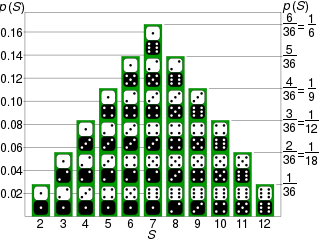

Probability is the branch of mathematics concerning numerical descriptions of how likely an event is to occur, or how likely it is that a proposition is true. The probability of an event is a number between 0 and 1, where, roughly speaking, 0 indicates impossibility of the event and 1 indicates certainty. The higher the probability of an event, the more likely it is that the event will occur. A simple example is the tossing of a fair (unbiased) coin. Since the coin is fair, the two outcomes are equally probable: the probability of "heads" equals the probability of "tails". Since no other outcomes are possible, each of "heads" and "tails" has probability 1/2.

Statistics is the discipline that concerns the collection, organization, analysis, interpretation and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments. See glossary of probability and statistics.

A statistical hypothesis is a hypothesis that is testable on the basis of observed data modelled as the realised values taken by a collection of random variables. A set of data is modelled as being realised values of a collection of random variables having a joint probability distribution in some set of possible joint distributions. The hypothesis being tested, the null hypothesis, is exactly that set of possible probability distributions. A statistical hypothesis test is a method of statistical inference. An alternative hypothesis is proposed for the probability distribution of the data, either explicitly or only informally. The comparison of the two models is deemed statistically significant if, according to a threshold probability (the significance level), the data would be very unlikely to have occurred under the null hypothesis. A hypothesis test specifies which outcomes of a study may lead to a rejection of the null hypothesis at a pre-specified level of significance, while using a pre-chosen measure of deviation from that hypothesis. The pre-chosen level of significance is the maximal allowed "false positive rate": one wants to control the risk of incorrectly rejecting a true null hypothesis.
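
As a rough illustration of the procedure described above, the following sketch tests the null hypothesis that a coin is fair. The exact binomial test is only one convenient choice of test; the function name, the observed counts (16 heads in 20 tosses) and the 0.05 significance level are assumptions made for this example.

```python
from math import comb

def binomial_two_sided_p_value(heads: int, tosses: int, p_null: float = 0.5) -> float:
    """Exact two-sided p-value under the null hypothesis P(heads) = p_null.

    Sums the null probabilities of every outcome that is no more likely
    than the observed one (one common convention for two-sided tests).
    """
    def pmf(k: int) -> float:
        return comb(tosses, k) * p_null**k * (1 - p_null) ** (tosses - k)

    observed = pmf(heads)
    # The small relative tolerance guards against floating-point ties.
    total = sum(pmf(k) for k in range(tosses + 1) if pmf(k) <= observed * (1 + 1e-9))
    return min(1.0, total)

ALPHA = 0.05  # pre-chosen significance level (assumed for illustration)
p_value = binomial_two_sided_p_value(heads=16, tosses=20)
reject_null = p_value < ALPHA
print(f"p-value = {p_value:.4f}, reject null: {reject_null}")
```

With these assumed counts the p-value is about 0.012, so the null hypothesis of a fair coin would be rejected at the 0.05 level but not at a stricter 0.01 level.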

The prisoner's dilemma is a standard example of a game analyzed in game theory that shows why two completely rational individuals might not cooperate, even if it appears that it is in their best interests to do so. It was originally framed by Merrill Flood and Melvin Dresher while working at RAND in 1950. Albert W. Tucker formalized the game with prison sentence rewards and named it "prisoner's dilemma", presenting it as follows:

Two members of a criminal gang are arrested and imprisoned. Each prisoner is in solitary confinement with no means of communicating with the other. The prosecutors lack sufficient evidence to convict the pair on the principal charge, but they have enough to convict both on a lesser charge. Simultaneously, the prosecutors offer each prisoner a bargain. Each prisoner is given the opportunity either to betray the other by testifying that the other committed the crime, or to cooperate with the other by remaining silent. The possible outcomes are:

Quantum suicide is a thought experiment in quantum mechanics and the philosophy of physics. Purportedly, it can falsify any interpretation of quantum mechanics other than the Everett many-worlds interpretation by means of a variation of the Schrödinger's cat thought experiment, from the cat's point of view. Quantum immortality refers to the subjective experience of surviving quantum suicide. This concept is sometimes conjectured to be applicable to real-world causes of death as well.

In statistics and statistical physics, the Metropolis–Hastings algorithm is a Markov chain Monte Carlo (MCMC) method for obtaining a sequence of random samples from a probability distribution from which direct sampling is difficult. This sequence can be used to approximate the distribution or to compute an integral. Metropolis–Hastings and other MCMC algorithms are generally used for sampling from multi-dimensional distributions, especially when the number of dimensions is high. For single-dimensional distributions, there are usually other methods that can directly return independent samples from the distribution, and these are free from the problem of autocorrelated samples that is inherent in MCMC methods.
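
A minimal sketch of the idea, assuming a symmetric Gaussian random-walk proposal (the special case usually called the Metropolis algorithm). The target here is a one-dimensional standard normal, chosen only so the result is easy to check; as noted above, direct samplers would normally be preferred in one dimension. All function names and parameter values are illustrative.

```python
import math
import random

def metropolis_hastings(log_density, start, n_samples, step=1.0, seed=0):
    """Random-walk Metropolis sampler for a 1-D unnormalized log-density."""
    rng = random.Random(seed)
    x = start
    log_p = log_density(x)
    samples = []
    for _ in range(n_samples):
        # Symmetric proposal, so the Hastings correction factor cancels.
        proposal = x + rng.gauss(0.0, step)
        log_p_new = log_density(proposal)
        # Accept with probability min(1, p(proposal) / p(x)).
        if math.log(1.0 - rng.random()) < log_p_new - log_p:
            x, log_p = proposal, log_p_new
        samples.append(x)  # a rejected proposal repeats the current state
    return samples

def standard_normal_log_density(x):
    # Log of exp(-x^2 / 2); the normalizing constant is not needed.
    return -0.5 * x * x

samples = metropolis_hastings(standard_normal_log_density, start=0.0, n_samples=20000)
mean = sum(samples) / len(samples)
variance = sum((s - mean) ** 2 for s in samples) / len(samples)
print(f"sample mean = {mean:.3f}, sample variance = {variance:.3f}")
```

Consecutive samples are correlated because each one is either a small move from the previous state or a repeat of it, which is the autocorrelation problem mentioned above.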

The method of least squares is a standard approach in regression analysis to approximate the solution of overdetermined systems by minimizing the sum of the squares of the residuals made in the results of every single equation.
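
For a concrete case, the sketch below fits a straight line y = a + b·x to four points, that is, four equations in two unknowns. It uses the closed-form solution for simple linear regression; the data and the function name are made up for illustration.

```python
def fit_line(xs, ys):
    """Return (intercept, slope) minimizing the sum of squared residuals."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 8.0]  # not exactly collinear, so no line fits all four
intercept, slope = fit_line(xs, ys)
residuals = [y - (intercept + slope * x) for x, y in zip(xs, ys)]
sum_of_squares = sum(r * r for r in residuals)
print(intercept, slope, sum_of_squares)  # approximately 0.8, 2.3, 0.3
```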

Sampling is the use of a subset of the population to represent the whole population or to inform about (social) processes that are meaningful beyond the particular cases, individuals or sites studied. Probability sampling, or random sampling, is a sampling technique in which the probability of getting any particular sample may be calculated. Nonprobability sampling does not meet this criterion. Nonprobability sampling techniques are not intended to be used to infer from the sample to the general population in statistical terms. Instead, for example, grounded theory can be produced through iterative nonprobability sampling until theoretical saturation is reached.

Bayesian statistics is a theory in the field of statistics based on the Bayesian interpretation of probability where probability expresses a degree of belief in an event. The degree of belief may be based on prior knowledge about the event, such as the results of previous experiments, or on personal beliefs about the event. This differs from a number of other interpretations of probability, such as the frequentist interpretation that views probability as the limit of the relative frequency of an event after many trials.
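
One simple way to see a degree of belief being revised by data is the Beta-Binomial model, sketched below. The uniform Beta(1, 1) prior and the counts (7 successes, 3 failures) are assumptions chosen for illustration, as are the function names.

```python
def update_beta(alpha, beta, successes, failures):
    """Posterior Beta parameters after observing Bernoulli outcomes."""
    return alpha + successes, beta + failures

def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)

prior = (1.0, 1.0)  # uniform prior belief about the success probability
posterior = update_beta(*prior, successes=7, failures=3)
print(beta_mean(*prior))      # 0.5
print(beta_mean(*posterior))  # 8 / 12, about 0.667
```

The posterior mean (about 0.667) sits between the prior mean (0.5) and the observed relative frequency (0.7), and moves toward the latter as more trials are observed.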

A randomized algorithm is an algorithm that employs a degree of randomness as part of its logic. The algorithm typically uses uniformly random bits as an auxiliary input to guide its behavior, in the hope of achieving good performance in the "average case" over all possible choices of random bits. Formally, the algorithm's performance will be a random variable determined by the random bits; thus the running time, the output, or both are random variables.
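
Randomized quickselect is a common textbook example, sketched here as an illustration. In this variant the output is always the same for a given input, while the running time depends on the random pivot choices; the function name and the `rng` parameter are choices made for this sketch.

```python
import random

def quickselect(values, k, rng=random):
    """Return the k-th smallest element (0-based) using uniformly random pivots."""
    if not 0 <= k < len(values):
        raise IndexError("k out of range")
    items = list(values)
    while True:
        pivot = rng.choice(items)  # the only source of randomness
        smaller = [v for v in items if v < pivot]
        equal = [v for v in items if v == pivot]
        larger = [v for v in items if v > pivot]
        if k < len(smaller):
            items = smaller
        elif k < len(smaller) + len(equal):
            return pivot
        else:
            # Discard everything up to and including the pivot's block.
            k -= len(smaller) + len(equal)
            items = larger

data = [7, 2, 9, 4, 4, 1]
print(quickselect(data, 0))  # 1 (the minimum)
print(quickselect(data, 3))  # 4
```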

In probability theory, the law of the iterated logarithm describes the magnitude of the fluctuations of a random walk. The original statement of the law of the iterated logarithm is due to A. Ya. Khinchin (1924). Another statement was given by A. N. Kolmogorov in 1929.
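
For reference, the law is commonly stated as follows. This is the standard modern formulation for independent, identically distributed steps, not necessarily the wording of the original 1924 statement, and the symbols $X_i$ and $S_n$ are introduced here for illustration. Let $X_1, X_2, \dots$ be independent, identically distributed random variables with mean 0 and variance 1, and let $S_n = X_1 + \dots + X_n$. Then

$$
\limsup_{n \to \infty} \frac{S_n}{\sqrt{2 n \log \log n}} = 1 \quad \text{almost surely.}
$$

Applying the same statement to $-X_i$ gives $\liminf_{n \to \infty} S_n / \sqrt{2 n \log \log n} = -1$ almost surely, so the walk keeps returning to the neighbourhood of both envelopes $\pm\sqrt{2 n \log \log n}$.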

Aleksandr Yakovlevich Khinchin was a Soviet mathematician and one of the most significant contributors to the Soviet school of probability theory.

The positive and negative predictive values are the proportions of positive and negative results in statistics and diagnostic tests that are true positive and true negative results, respectively. The PPV and NPV describe the performance of a diagnostic test or other statistical measure. A high result can be interpreted as indicating the accuracy of such a statistic. The PPV and NPV are not intrinsic to the test; they also depend on the prevalence. Both PPV and NPV can be derived using Bayes' theorem.
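
The dependence on prevalence can be sketched with Bayes' theorem as follows. Sensitivity and specificity (the test's true positive and true negative rates) are standard quantities not named above; the values 0.9 and the two prevalence levels are assumptions for illustration.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) from Bayes' theorem."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    true_neg = specificity * (1 - prevalence)
    false_neg = (1 - sensitivity) * prevalence
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv

# Same test, two populations: a rare condition and a common one.
ppv_rare, npv_rare = predictive_values(0.9, 0.9, prevalence=0.01)
ppv_common, npv_common = predictive_values(0.9, 0.9, prevalence=0.5)
print(f"prevalence 1%:  PPV = {ppv_rare:.3f}, NPV = {npv_rare:.3f}")
print(f"prevalence 50%: PPV = {ppv_common:.3f}, NPV = {npv_common:.3f}")
```

With these assumed numbers, the PPV is about 0.083 at 1% prevalence and 0.9 at 50% prevalence, although the test itself is unchanged.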

In statistics, isotonic regression or monotonic regression is the technique of fitting a free-form line to a sequence of observations such that the fitted line is non-decreasing everywhere, and lies as close to the observations as possible.
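
One standard way to compute such a fit is the pool-adjacent-violators algorithm, sketched below. The sketch assumes that "close" means least squares with equal weights; the function name and the sample data are illustrative.

```python
def isotonic_regression(ys):
    """Least-squares non-decreasing fit to ys via pool-adjacent-violators."""
    blocks = []  # each block stores [sum of values, number of values]
    for y in ys:
        blocks.append([y, 1])
        # Merge while the previous block's mean exceeds the last block's mean.
        while len(blocks) > 1 and (
            blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]
        ):
            total, count = blocks.pop()
            blocks[-1][0] += total
            blocks[-1][1] += count
    fitted = []
    for total, count in blocks:
        fitted.extend([total / count] * count)  # each block is fitted by its mean
    return fitted

fitted = isotonic_regression([1.0, 3.0, 2.0, 4.0, 3.0, 5.0])
print(fitted)  # [1.0, 2.5, 2.5, 3.5, 3.5, 5.0]
```

Each decreasing pair in the input is replaced by its average, which yields a non-decreasing sequence.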

In probability theory and statistical mechanics, a Gibbs state is an equilibrium probability distribution which remains invariant under future evolution of the system. For example, a stationary or steady-state distribution of a Markov chain, such as that achieved by running a Markov chain Monte Carlo iteration for a sufficiently long time, is a Gibbs state.
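
The invariance property can be checked numerically on a small Markov chain. The sketch below repeatedly applies an assumed two-state transition matrix to a starting distribution and then verifies that one further step leaves the result unchanged; the matrix entries and function names are made up for illustration.

```python
def step(dist, transition):
    """One step of the chain: new_dist[j] = sum_i dist[i] * transition[i][j]."""
    n = len(transition)
    return [sum(dist[i] * transition[i][j] for i in range(n)) for j in range(n)]

def stationary_distribution(transition, iterations=200):
    """Approximate the stationary distribution by iterating from uniform."""
    dist = [1.0 / len(transition)] * len(transition)
    for _ in range(iterations):
        dist = step(dist, transition)
    return dist

P = [[0.9, 0.1],
     [0.5, 0.5]]  # each row sums to 1
pi = stationary_distribution(P)
print(pi)           # approximately [0.8333, 0.1667]
print(step(pi, P))  # approximately the same vector: pi is invariant
```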

In statistics, Grubbs's test or the Grubbs test, also known as the maximum normalized residual test or extreme studentized deviate test, is a test used to detect outliers in a univariate data set assumed to come from a normally distributed population.
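
The test statistic is the largest absolute deviation from the sample mean, measured in units of the sample standard deviation. The sketch below computes only this statistic, on made-up data. Deciding whether the suspect point is an outlier additionally requires comparing the statistic with a critical value derived from Student's t distribution, which is omitted here.

```python
import statistics

def grubbs_statistic(data):
    """Return (G, suspect), where G = max |x - mean| / s and s is the sample SD."""
    mean = statistics.fmean(data)
    sd = statistics.stdev(data)  # uses the n - 1 denominator
    suspect = max(data, key=lambda x: abs(x - mean))
    return abs(suspect - mean) / sd, suspect

data = [9.8, 10.1, 10.0, 9.9, 10.2, 14.0]
g, suspect = grubbs_statistic(data)
print(f"G = {g:.3f} for suspect value {suspect}")
```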

In statistics, asymptotic theory, or large sample theory, is a framework for assessing properties of estimators and statistical tests. Within this framework, it is often assumed that the sample size n may grow indefinitely; the properties of estimators and tests are then evaluated in the limit n → ∞. In practice, a limit evaluation is considered to be approximately valid for large finite sample sizes too.

In computer science and information theory, Tunstall coding is a form of entropy coding used for lossless data compression.

