Related Research Articles

The Transmission Control Protocol (TCP) is one of the main protocols of the Internet protocol suite. It originated in the initial network implementation in which it complemented the Internet Protocol (IP). Therefore, the entire suite is commonly referred to as TCP/IP. TCP provides reliable, ordered, and error-checked delivery of a stream of octets (bytes) between applications running on hosts communicating via an IP network. Major internet applications such as the World Wide Web, email, remote administration, and file transfer rely on TCP, which is part of the Transport Layer of the TCP/IP suite. SSL/TLS often runs on top of TCP.

In computer networking, the User Datagram Protocol (UDP) is one of the core communication protocols of the Internet protocol suite used to send messages to other hosts on an Internet Protocol (IP) network. Within an IP network, UDP does not require prior communication to set up communication channels or data paths.

Automatic repeat request (ARQ), also known as automatic repeat query, is an error-control method for data transmission that uses acknowledgements and timeouts to achieve reliable data transmission over an unreliable communication channel. If the sender does not receive an acknowledgment before the timeout, it re-transmits the packet until it receives an acknowledgment or exceeds a predefined number of retransmissions.

Transmission Control Protocol (TCP) uses a network congestion-avoidance algorithm that includes various aspects of an additive increase/multiplicative decrease (AIMD) scheme, along with other schemes including slow start and congestion window (CWND), to achieve congestion avoidance. The TCP congestion-avoidance algorithm is the primary basis for congestion control in the Internet. Per the end-to-end principle, congestion control is largely a function of internet hosts, not the network itself. There are several variations and versions of the algorithm implemented in protocol stacks of operating systems of computers that connect to the Internet.

Nagle's algorithm is a means of improving the efficiency of TCP/IP networks by reducing the number of packets that need to be sent over the network. It was defined by John Nagle while working for Ford Aerospace. It was published in 1984 as a Request for Comments (RFC) with title Congestion Control in IP/TCP Internetworks in RFC 896.

TCP tuning techniques adjust the network congestion avoidance parameters of Transmission Control Protocol (TCP) connections over high-bandwidth, high-latency networks. Well-tuned networks can perform up to 10 times faster in some cases. However, blindly following instructions without understanding their real consequences can hurt performance as well.

Sorcerer's Apprentice Syndrome (SAS) is a network protocol flaw in the original versions of TFTP. It was named after Goethe's 1797 poem "Der Zauberlehrling", because the details of its operation closely resemble the disaster that befalls the sorcerer's apprentice: the problem resulted in an ever-growing replication of every packet in the transfer.

Retransmission, essentially identical with automatic repeat request (ARQ), is the resending of packets which have been either damaged or lost. Retransmission is one of the basic mechanisms used by protocols operating over a packet switched computer network to provide reliable communication.

Packet loss occurs when one or more packets of data travelling across a computer network fail to reach their destination. Packet loss is either caused by errors in data transmission, typically across wireless networks, or network congestion. Packet loss is measured as a percentage of packets lost with respect to packets sent.

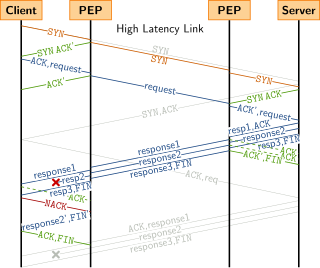

Performance-enhancing proxies (PEPs) are network agents designed to improve the end-to-end performance of some communication protocols. PEP standards are defined in RFC 3135 and RFC 3449.

In computer networks, goodput is the application-level throughput of a communication; i.e. the number of useful information bits delivered by the network to a certain destination per unit of time. The amount of data considered excludes protocol overhead bits as well as retransmitted data packets. This is related to the amount of time from the first bit of the first packet sent until the last bit of the last packet is delivered.

The internet layer is a group of internetworking methods, protocols, and specifications in the Internet protocol suite that are used to transport network packets from the originating host across network boundaries; if necessary, to the destination host specified by an IP address. The internet layer derives its name from its function facilitating internetworking, which is the concept of connecting multiple networks with each other through gateways.

A sliding window protocol is a feature of packet-based data transmission protocols. Sliding window protocols are used where reliable in-order delivery of packets is required, such as in the data link layer as well as in the Transmission Control Protocol (TCP). They are also used to improve efficiency when the channel may include high latency.

Extremely Opportunistic Routing (ExOR) is a combination of routing protocol and media access control for a wireless ad hoc network, invented by Sanjit Biswas and Robert Morris of the MIT Artificial Intelligence Laboratory, and described in a 2005 paper. A very similar opportunistic routing scheme was also independently proposed by Zhenzhen Ye and Yingbo Hua from University of California, Riverside and presented in a paper in 2005. Previously open source, ExOR was available in 2005 but is no longer obtainable. The broadcast and retransmission strategies used by the algorithm were already described in the literature. ExOR is valuable because it can operate available digital radios to use some previously impractical algorithmic optimizations.

WTCP is a proxy-based modification of TCP that preserves the end-to-end semantics of TCP. As its name suggests, it is used in wireless networks to improve the performance of TCP.

TCP delayed acknowledgment is a technique used by some implementations of the Transmission Control Protocol in an effort to improve network performance. In essence, several ACK responses may be combined into a single response, reducing protocol overhead. However, in some circumstances, the technique can reduce application performance.

A communication protocol is a system of rules that allows two or more entities of a communications system to transmit information via any kind of variation of a physical quantity. The protocol defines the rules, syntax, semantics and synchronization of communication and possible error recovery methods. Protocols may be implemented by hardware, software, or a combination of both.

Zeta-TCP refers to a set of proprietary Transmission Control Protocol (TCP) algorithms aiming at improving the end-to-end performance of TCP, regardless of whether the peer is Zeta-TCP or any other TCP protocol stack, in other words, to be compatible with the existing TCP algorithms. It was designed and implemented by AppEx Networks Corporation.

NACK-Oriented Reliable Multicast (NORM) is a transport layer Internet protocol designed to provide reliable transport in multicast groups in data networks. It is formally defined by the Internet Engineering Task Force (IETF) in Request for Comments (RFC) 5740, which was published in November 2009.

Dr. Craig Partridge is an American computer scientist, best known for his contributions to the technical development of the Internet.

References

- ↑ Computer Networks: A Systems Approach, The Morgan Kaufmann Series in Networking, Larry L. Peterson, Bruce S. Davie Edition 5, Elsevier, 2011 p.418

- ↑ Karn, Phil; Partridge, Craig (1987). Improving Round-Trip Time Estimates in Reliable Transport Protocols (PostScript). Proc. ACM SIGCOMM. pp. 2–7.

- ↑ Comer, Douglas (2006). Internetworking with TCP/IP (Fifth ed.). Prentice Hall.

- ↑ "What Is Karn's Algorithm?". Archived from the original on 2016-11-14. Retrieved 2016-09-07.