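In mathematical physics and mathematics, the Pauli matrices are a set of three 2 × 2 complex matrices which are Hermitian and unitary. Usually indicated by the Greek letter sigma, they are occasionally denoted by tau when used in connection with isospin symmetries. They are

$$\sigma_x = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}, \qquad \sigma_y = \begin{pmatrix} 0 & -i \\ i & 0 \end{pmatrix}, \qquad \sigma_z = \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix}.$$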
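A quick numerical check of the two stated properties can be sketched as follows. It assumes the standard forms of the three matrices; the names `SIGMA_X`, `SIGMA_Y`, `SIGMA_Z` and the helper functions are illustrative and not taken from the text.

```python
# Standard Pauli matrices as nested lists (assumed labels, not from the text).
SIGMA_X = [[0, 1], [1, 0]]
SIGMA_Y = [[0, -1j], [1j, 0]]
SIGMA_Z = [[1, 0], [0, -1]]
IDENTITY = [[1, 0], [0, 1]]


def dagger(m):
    """Conjugate transpose of a 2 x 2 matrix."""
    return [[complex(m[j][i]).conjugate() for j in range(2)] for i in range(2)]


def matmul(a, b):
    """Product of two 2 x 2 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def is_hermitian(m):
    """A matrix is Hermitian when it equals its conjugate transpose."""
    return dagger(m) == m


def is_unitary(m):
    """A matrix is unitary when its conjugate transpose is its inverse."""
    return matmul(dagger(m), m) == IDENTITY
```

Because the entries are small integers and pure imaginary units, the comparisons are exact here; a tolerance would be needed for general floating-point matrices.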

In probability theory and statistics, the exponential distribution is the probability distribution of the time between events in a Poisson point process, i.e., a process in which events occur continuously and independently at a constant average rate. It is a particular case of the gamma distribution. It is the continuous analogue of the geometric distribution, and it has the key property of being memoryless. In addition to being used for the analysis of Poisson point processes, it is found in various other contexts.
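The memoryless property can be illustrated with a short sketch. It assumes the usual survival function P(T > t) = exp(-λt) for rate λ; the function names and the parameter name `rate` are illustrative.

```python
import math


def survival(t, rate):
    """P(T > t) for an exponential waiting time with the given rate."""
    return math.exp(-rate * t)


def conditional_survival(s, t, rate):
    """P(T > s + t | T > s), computed from the survival function."""
    return survival(s + t, rate) / survival(s, rate)
```

Memorylessness means `conditional_survival(s, t, rate)` agrees with `survival(t, rate)` for any non-negative `s`: having already waited `s` units does not change the distribution of the remaining wait.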

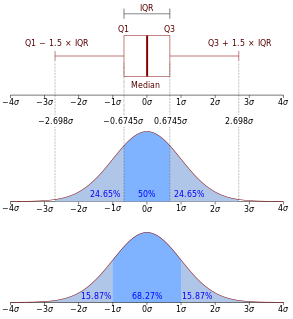

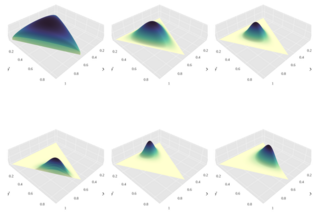

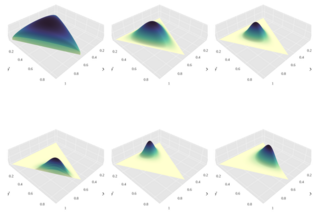

In probability theory and statistics, the multivariate normal distribution, multivariate Gaussian distribution, or joint normal distribution is a generalization of the one-dimensional (univariate) normal distribution to higher dimensions. One definition is that a random vector is said to be k-variate normally distributed if every linear combination of its k components has a univariate normal distribution. Its importance derives mainly from the multivariate central limit theorem. The multivariate normal distribution is often used to describe, at least approximately, any set of (possibly) correlated real-valued random variables each of which clusters around a mean value.
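The linear-combination definition can be explored numerically in the two-dimensional case. The sketch below assumes a positive-definite 2 × 2 covariance matrix and draws samples through its Cholesky factor; the function names are illustrative.

```python
import math
import random


def sample_bivariate_normal(mean, cov, rng):
    """Draw one sample from a 2-D normal via the Cholesky factor of cov."""
    l11 = math.sqrt(cov[0][0])
    l21 = cov[1][0] / l11
    l22 = math.sqrt(cov[1][1] - l21 ** 2)
    z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    return (mean[0] + l11 * z1, mean[1] + l21 * z1 + l22 * z2)


def combination_moments(weights, mean, cov):
    """Mean and variance of the linear combination weights . X."""
    m = sum(w * mu for w, mu in zip(weights, mean))
    v = sum(weights[i] * cov[i][j] * weights[j]
            for i in range(2) for j in range(2))
    return m, v
```

For a given weight vector, the sample mean and variance of the combined values should approach the pair returned by `combination_moments` as the number of samples grows.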

The angular displacement of a body is the angle, in radians, through which a point revolves around a centre, or through which a line has been rotated in a specified sense about a specified axis. When a body rotates about its axis, its motion cannot be analyzed simply as that of a particle, because in circular motion its velocity and acceleration change at every time t. When dealing with the rotation of a body, it is simpler to treat the body itself as rigid. A body is generally considered rigid when the separations between all of its particles remain constant throughout the motion, so that, for example, parts of its mass are not flying off. In reality every body is deformable to some degree, but this effect is usually small enough to neglect. The rotation of a rigid body about a fixed axis is therefore referred to as rotational motion.

In probability theory and statistics, the gamma distribution is a two-parameter family of continuous probability distributions. The exponential distribution, Erlang distribution, and chi-squared distribution are special cases of the gamma distribution. There are three different parametrizations in common use:

- With a shape parameter k and a scale parameter θ.

- With a shape parameter α = k and an inverse scale parameter β = 1/θ, called a rate parameter.

- With a shape parameter k and a mean parameter μ = kθ = α/β.
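The relations between the three parametrizations listed above can be written as small conversion helpers. This is a minimal sketch; the function names are hypothetical.

```python
def shape_scale_to_shape_rate(k, theta):
    """(k, theta) -> (alpha, beta) with alpha = k and beta = 1 / theta."""
    return k, 1.0 / theta


def shape_rate_to_shape_scale(alpha, beta):
    """(alpha, beta) -> (k, theta) with k = alpha and theta = 1 / beta."""
    return alpha, 1.0 / beta


def shape_scale_to_shape_mean(k, theta):
    """(k, theta) -> (k, mu) with mu = k * theta."""
    return k, k * theta


def shape_rate_to_shape_mean(alpha, beta):
    """(alpha, beta) -> (k, mu) with mu = alpha / beta."""
    return alpha, alpha / beta
```

Being explicit about the parametrization matters when calling library code: Python's `random.gammavariate(alpha, beta)`, for instance, treats its second argument as a scale rather than a rate.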

In linear algebra, an n-by-n square matrix A is called invertible if there exists an n-by-n square matrix B such that $AB = BA = I_n$, where $I_n$ denotes the n-by-n identity matrix.
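As a minimal sketch of this definition in the 2 × 2 case, the code below builds a candidate B with exact rational arithmetic and leaves it to the caller to check both products against the identity. It assumes the defining condition is the usual AB = BA = I; the function names are illustrative.

```python
from fractions import Fraction


def inverse_2x2(a):
    """Return B with AB = BA = I for a 2 x 2 matrix, or raise if none exists."""
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    if det == 0:
        raise ValueError("matrix is singular, so no inverse exists")
    d = Fraction(det)
    return [[a[1][1] / d, -a[0][1] / d],
            [-a[1][0] / d, a[0][0] / d]]


def matmul(a, b):
    """Product of two 2 x 2 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]
```

For integer or `Fraction` entries, `matmul(a, inverse_2x2(a))` and `matmul(inverse_2x2(a), a)` both compare equal to `[[1, 0], [0, 1]]` exactly, with no rounding tolerance needed.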

In statistics, the matrix normal distribution or matrix Gaussian distribution is a probability distribution that is a generalization of the multivariate normal distribution to matrix-valued random variables.

In physics, the S-matrix or scattering matrix relates the initial state and the final state of a physical system undergoing a scattering process. It is used in quantum mechanics, scattering theory and quantum field theory (QFT).

In geometry, Euler's rotation theorem states that, in three-dimensional space, any displacement of a rigid body in which a point on the rigid body remains fixed is equivalent to a single rotation about some axis that runs through the fixed point. It follows that the composition of two rotations is also a rotation. The set of rotations therefore has a group structure, known as a rotation group.
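The statement about composition can be checked numerically. The sketch below assumes rotations are represented as 3 × 3 matrices, tests whether a product is orthogonal with determinant 1, and recovers the axis from the antisymmetric part of the matrix. That axis formula is a standard identity rather than something stated in the text, and it breaks down when the rotation angle is 0 or π.

```python
import math


def rotation_x(angle):
    """Rotation by `angle` radians about the x axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rotation_z(angle):
    """Rotation by `angle` radians about the z axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a, b):
    """Product of two 3 x 3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def determinant(m):
    """Determinant of a 3 x 3 matrix by cofactor expansion."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def is_rotation(m, tol=1e-9):
    """True when m is orthogonal with determinant +1, within tol."""
    transpose = [list(col) for col in zip(*m)]
    gram = matmul(transpose, m)
    orthogonal = all(abs(gram[i][j] - (1.0 if i == j else 0.0)) < tol
                     for i in range(3) for j in range(3))
    return orthogonal and abs(determinant(m) - 1.0) < tol


def rotation_axis(m):
    """Unit vector along the fixed axis (angle must not be 0 or pi)."""
    axis = (m[2][1] - m[1][2], m[0][2] - m[2][0], m[1][0] - m[0][1])
    norm = math.sqrt(sum(x * x for x in axis))
    return tuple(x / norm for x in axis)
```

Composing `rotation_z(0.7)` with `rotation_x(1.1)` gives a matrix that passes `is_rotation`, and applying that matrix to the vector returned by `rotation_axis` leaves the vector unchanged up to rounding.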

In probability and statistics, the Dirichlet distribution, often denoted Dir(α), is a family of continuous multivariate probability distributions parameterized by a vector α of positive reals. It is a multivariate generalization of the beta distribution, hence its alternative name of multivariate beta distribution (MBD). Dirichlet distributions are commonly used as prior distributions in Bayesian statistics, and in fact the Dirichlet distribution is the conjugate prior of the categorical distribution and multinomial distribution.
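One common way to draw from a Dirichlet distribution is to normalize independent gamma variates. This construction is a standard result rather than something stated in the text; the sketch below uses only Python's `random` module, and the function name is illustrative.

```python
import random


def sample_dirichlet(alpha, rng):
    """Draw one point on the simplex from Dir(alpha).

    Each component is a Gamma(alpha_i, 1) draw divided by the total,
    so the result is positive and sums to one.
    """
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]
```

Averaged over many draws, component i approaches `alpha[i] / sum(alpha)`, which is the mean of the distribution.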

In natural language processing, latent Dirichlet allocation (LDA) is a generative statistical model that allows sets of observations to be explained by unobserved groups, which account for why some parts of the data are similar. For example, if observations are words collected into documents, it posits that each document is a mixture of a small number of topics and that each word's presence is attributable to one of the document's topics. LDA is an example of a topic model.
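The generative story for a single document can be sketched as follows. It assumes the usual formulation in which the document's topic proportions are drawn from a Dirichlet prior and the per-topic word distributions are already given. The names `topic_word`, `doc_topic_prior`, and `generate_document` are hypothetical, and the sketch covers generation only, not inference.

```python
import random


def sample_dirichlet(alpha, rng):
    """Draw topic proportions by normalizing independent gamma variates."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]


def generate_document(vocabulary, topic_word, doc_topic_prior, length, rng):
    """Generate one document as a list of words.

    topic_word[t] holds the word probabilities of topic t over `vocabulary`.
    Each word is produced by first picking a topic from the document's
    topic mixture, then picking a word from that topic.
    """
    theta = sample_dirichlet(doc_topic_prior, rng)
    topics = list(range(len(theta)))
    words = []
    for _ in range(length):
        topic = rng.choices(topics, weights=theta)[0]
        word = rng.choices(vocabulary, weights=topic_word[topic])[0]
        words.append(word)
    return theta, words
```

Small prior values such as `[0.1, 0.1]` tend to concentrate each document on few topics, which matches the "small number of topics" description.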

In statistics, the generalized Dirichlet distribution (GD) is a generalization of the Dirichlet distribution with a more general covariance structure and almost twice the number of parameters. Random variables with a GD distribution are not completely neutral.

In statistics, principal component regression (PCR) is a regression analysis technique that is based on principal component analysis (PCA). Typically, it considers regressing the outcome on a set of covariates based on a standard linear regression model, but uses PCA for estimating the unknown regression coefficients in the model.

In linear algebra, the computation of the permanent of a matrix is a problem that is thought to be more difficult than the computation of the determinant of a matrix despite the apparent similarity of the definitions.
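The similarity of the two definitions is easy to see in code. Both functions below expand over all permutations, and the determinant differs only by the sign factor. This naive expansion takes on the order of n! steps and is meant for illustration on small matrices; the function names are illustrative.

```python
from itertools import permutations


def permutation_sign(perm):
    """+1 for an even permutation, -1 for an odd one (by counting inversions)."""
    inversions = sum(1 for i in range(len(perm))
                     for j in range(i + 1, len(perm)) if perm[i] > perm[j])
    return -1 if inversions % 2 else 1


def permanent(m):
    """Sum over permutations of the product of the selected entries."""
    n = len(m)
    total = 0
    for perm in permutations(range(n)):
        term = 1
        for i in range(n):
            term *= m[i][perm[i]]
        total += term
    return total


def determinant(m):
    """Same expansion as the permanent, with each term weighted by its sign."""
    n = len(m)
    total = 0
    for perm in permutations(range(n)):
        term = permutation_sign(perm)
        for i in range(n):
            term *= m[i][perm[i]]
        total += term
    return total
```

The difference in difficulty comes from what the sign makes possible: the determinant can be computed in polynomial time by Gaussian elimination, whereas no comparable shortcut is known for the permanent.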

The multivariate stable distribution is a multivariate probability distribution that is a multivariate generalisation of the univariate stable distribution. The multivariate stable distribution defines linear relations between stable distribution marginals. In the same way as for the univariate case, the distribution is defined in terms of its characteristic function.

In mathematics, the Lindström–Gessel–Viennot lemma provides a way to count the number of tuples of non-intersecting lattice paths.
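A small instance of the lemma can be checked by brute force. The sketch below makes several assumptions not stated in the text: paths live on the integer grid with unit steps east or north, there are two sources and two sinks, and the points are arranged so that only the identity pairing admits non-intersecting paths. Under those assumptions the count equals a 2 × 2 determinant of path counts. All function names are illustrative.

```python
from itertools import product
from math import comb


def lattice_paths(start, end):
    """All monotone paths from start to end, each as a list of vertices."""
    if start == end:
        return [[start]]
    (x, y), (ex, ey) = start, end
    paths = []
    if x < ex:
        paths += [[start] + rest for rest in lattice_paths((x + 1, y), end)]
    if y < ey:
        paths += [[start] + rest for rest in lattice_paths((x, y + 1), end)]
    return paths


def count_paths(start, end):
    """Number of monotone paths, as a binomial coefficient."""
    dx, dy = end[0] - start[0], end[1] - start[1]
    return comb(dx + dy, dx) if dx >= 0 and dy >= 0 else 0


def lgv_count_pairs(sources, sinks):
    """Determinant of the 2 x 2 matrix of path counts."""
    (a1, a2), (b1, b2) = sources, sinks
    return (count_paths(a1, b1) * count_paths(a2, b2)
            - count_paths(a1, b2) * count_paths(a2, b1))


def brute_force_pairs(sources, sinks):
    """Count pairs of paths a1 -> b1, a2 -> b2 that share no vertex."""
    (a1, a2), (b1, b2) = sources, sinks
    return sum(1 for p, q in product(lattice_paths(a1, b1),
                                     lattice_paths(a2, b2))
               if not set(p) & set(q))
```

With sources `((0, 1), (1, 0))` and sinks `((1, 2), (2, 1))`, the determinant is 2·2 − 1·1 = 3, and the exhaustive count gives the same value.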

In statistics, the matrix t-distribution is the generalization of the multivariate t-distribution from vectors to matrices. The matrix t-distribution shares the same relationship with the multivariate t-distribution that the matrix normal distribution shares with the multivariate normal distribution. For example, the matrix t-distribution is the compound distribution that results from sampling from a matrix normal distribution having sampled the covariance matrix of the matrix normal from an inverse Wishart distribution.

In linear algebra, a branch of mathematics, a compound matrix is a matrix whose entries are all minors, of a given size, of another matrix. Compound matrices are closely related to exterior algebras.
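The definition translates directly into code: the k-th compound matrix collects the determinants of all k × k submatrices. The sketch below assumes rows and columns are indexed by k-element subsets in lexicographic order, which is one common convention; the function names are illustrative.

```python
from itertools import combinations


def determinant(m):
    """Determinant by Laplace expansion along the first row."""
    if len(m) == 1:
        return m[0][0]
    return sum((-1) ** j * m[0][j]
               * determinant([row[:j] + row[j + 1:] for row in m[1:]])
               for j in range(len(m)))


def compound(m, k):
    """k-th compound matrix: every k x k minor of m, in lexicographic order."""
    row_sets = list(combinations(range(len(m)), k))
    col_sets = list(combinations(range(len(m[0])), k))
    return [[determinant([[m[i][j] for j in cols] for i in rows])
             for cols in col_sets]
            for rows in row_sets]
```

For a square n × n input, `compound(m, 1)` reproduces `m` and `compound(m, n)` is the 1 × 1 matrix holding the determinant of `m`.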

In statistics, the inverse Dirichlet distribution is a derivation of the matrix variate Dirichlet distribution. It is related to the inverse Wishart distribution.

A. K. Gupta and D. K. Nagar 1999. "Matrix variate distributions". Chapman and Hall.