In probability theory and statistics, the binomial distribution with parameters n and p is the discrete probability distribution of the number of successes in a sequence of n independent experiments, each asking a yes–no question, and each with its own Boolean-valued outcome: success or failure. A single success/failure experiment is also called a Bernoulli trial or Bernoulli experiment, and a sequence of outcomes is called a Bernoulli process; for a single trial, i.e., n = 1, the binomial distribution is a Bernoulli distribution. The binomial distribution is the basis for the binomial test of statistical significance.
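As an illustration of the definition above, the following minimal sketch evaluates the standard binomial probability mass function, P(X = k) = C(n, k) p^k (1 − p)^(n − k), and checks that it sums to 1 and has mean np. The function name `binomial_pmf` and the values n = 10, p = 0.3 are assumptions chosen for the example, not part of the text above.

```python
from math import comb


def binomial_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k successes in n independent trials,
    each succeeding with probability p."""
    if not 0 <= k <= n:
        return 0.0
    return comb(n, k) * p**k * (1 - p) ** (n - k)


# Illustrative parameters (assumed for this example).
n, p = 10, 0.3
pmf = [binomial_pmf(k, n, p) for k in range(n + 1)]

total = sum(pmf)                               # should be 1 up to rounding
mean = sum(k * q for k, q in enumerate(pmf))   # should equal n * p up to rounding

print(f"sum of pmf = {total:.12f}, mean = {mean:.12f}")
```

With n = 1 the same function reduces to the Bernoulli distribution: `binomial_pmf(1, 1, p)` is p and `binomial_pmf(0, 1, p)` is 1 − p.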

In complex analysis, an entire function, also called an integral function, is a complex-valued function that is holomorphic on the whole complex plane. Typical examples of entire functions are polynomials and the exponential function, and any finite sums, products and compositions of these, such as the trigonometric functions sine and cosine and their hyperbolic counterparts sinh and cosh, as well as derivatives and integrals of entire functions such as the error function. If an entire function $f(z)$ has a root at $w$, then $f(z)/(z-w)$, taking the limit value at $w$, is an entire function. On the other hand, the natural logarithm, the reciprocal function, and the square root are all not entire functions, nor can they be continued analytically to an entire function.

In mathematics, the prime number theorem (PNT) describes the asymptotic distribution of the prime numbers among the positive integers. It formalizes the intuitive idea that primes become less common as they become larger by precisely quantifying the rate at which this occurs. The theorem was proved independently by Jacques Hadamard and Charles Jean de la Vallée Poussin in 1896 using ideas introduced by Bernhard Riemann.
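The usual statement of the theorem is that the prime-counting function π(x) is asymptotic to x / ln x. The sketch below, which assumes a simple sieve of Eratosthenes and the hypothetical helper name `prime_count`, compares the two quantities for a few values of x. It only illustrates the slow drift of the ratio toward 1 and proves nothing.

```python
from math import isqrt, log


def prime_count(x: int) -> int:
    """Count the primes <= x with a sieve of Eratosthenes."""
    if x < 2:
        return 0
    sieve = bytearray([1]) * (x + 1)
    sieve[0] = sieve[1] = 0
    for i in range(2, isqrt(x) + 1):
        if sieve[i]:
            # Cross out every multiple of i starting at i*i.
            sieve[i * i :: i] = bytearray(len(range(i * i, x + 1, i)))
    return sum(sieve)


xs = [10**3, 10**4, 10**5]
counts = [prime_count(x) for x in xs]
ratios = [c / (x / log(x)) for c, x in zip(counts, xs)]

for x, c, r in zip(xs, counts, ratios):
    print(f"x = {x:>6}  pi(x) = {c:>5}  pi(x) / (x / ln x) = {r:.4f}")
```

The ratio stays above 1 in this range and decreases as x grows, consistent with the asymptotic statement.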

The Cauchy–Schwarz inequality is an upper bound on the inner product between two vectors in an inner product space in terms of the product of the vector norms. It is considered one of the most important and widely used inequalities in mathematics.

In probability theory, Chebyshev's inequality provides an upper bound on the probability of deviation of a random variable from its mean. More specifically, the probability that a random variable deviates from its mean by more than $k\sigma$ is at most $1/k^2$, where $k$ is any positive constant and $\sigma$ is the standard deviation.
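To make the bound concrete, the sketch below checks P(|X − μ| ≥ kσ) ≤ 1/k² exactly for a fair six-sided die. The die, the helper name `tail_probability`, and the chosen values of k are assumptions made for illustration.

```python
from math import sqrt


def tail_probability(pmf: dict, k: float) -> float:
    """Exact P(|X - mu| >= k * sigma) for a finite distribution given as {value: prob}."""
    mu = sum(x * p for x, p in pmf.items())
    sigma = sqrt(sum((x - mu) ** 2 * p for x, p in pmf.items()))
    return sum(p for x, p in pmf.items() if abs(x - mu) >= k * sigma)


# Fair six-sided die (assumed example distribution).
die = {face: 1 / 6 for face in range(1, 7)}

for k in (1.0, 1.2, 1.5, 2.0):
    exact = tail_probability(die, k)
    bound = 1 / k**2
    print(f"k = {k}: exact tail = {exact:.4f}, Chebyshev bound = {bound:.4f}")
```

The bound holds for every k but is often loose, as here: it uses only the mean and variance, not the shape of the distribution.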

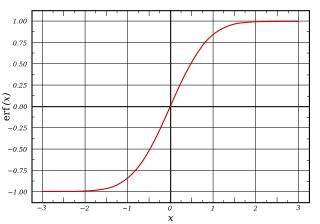

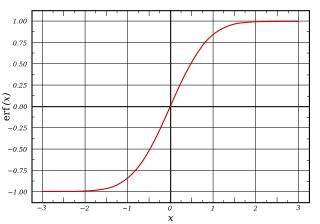

In mathematics, the error function, often denoted by erf, is a function $\operatorname{erf}\colon \mathbb{C} \to \mathbb{C}$ defined as:

$$\operatorname{erf}(z) = \frac{2}{\sqrt{\pi}} \int_0^z e^{-t^2}\,dt.$$

In mathematics, Jensen's inequality, named after the Danish mathematician Johan Jensen, relates the value of a convex function of an integral to the integral of the convex function. It was proved by Jensen in 1906, building on an earlier proof of the same inequality for doubly-differentiable functions by Otto Hölder in 1889. Given its generality, the inequality appears in many forms depending on the context, some of which are presented below. In its simplest form the inequality states that the convex transformation of a mean is less than or equal to the mean applied after convex transformation.
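In probabilistic notation the simplest form reads φ(E[X]) ≤ E[φ(X)] for a convex function φ. The sketch below checks this for a small discrete distribution with φ = exp. The values, weights, and the helper name `expectation` are assumptions chosen for the example.

```python
from math import exp


def expectation(f, values, weights):
    """E[f(X)] for a discrete X taking values[i] with probability weights[i]."""
    return sum(w * f(v) for v, w in zip(values, weights))


# Illustrative distribution (assumed): three points with probabilities summing to 1.
values = [-1.0, 0.0, 2.0]
weights = [0.2, 0.5, 0.3]

mean = expectation(lambda x: x, values, weights)
convex_of_mean = exp(mean)                          # phi(E[X])
mean_of_convex = expectation(exp, values, weights)  # E[phi(X)]

print(f"exp(E[X]) = {convex_of_mean:.4f} <= E[exp(X)] = {mean_of_convex:.4f}")
```

Replacing `exp` with a concave function such as a logarithm (on positive values) reverses the inequality.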

In probability theory, the Azuma–Hoeffding inequality gives a concentration result for the values of martingales that have bounded differences.

In probability theory, a Chernoff bound is an exponentially decreasing upper bound on the tail of a random variable based on its moment generating function. The minimum of all such exponential bounds forms the Chernoff or Chernoff-Cramér bound, which may decay faster than exponential. It is especially useful for sums of independent random variables, such as sums of Bernoulli random variables.

In probability theory, Hoeffding's inequality provides an upper bound on the probability that the sum of bounded independent random variables deviates from its expected value by more than a certain amount. Hoeffding's inequality was proven by Wassily Hoeffding in 1963.
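For independent variables X_i taking values in [a_i, b_i] with sum S, the one-sided form of the inequality is P(S − E[S] ≥ t) ≤ exp(−2t² / Σ(b_i − a_i)²). The sketch below compares that bound with the exact tail for 100 fair coin flips. The helper names and the parameters n = 100, t = 10 are assumptions made for illustration.

```python
from math import comb, exp


def hoeffding_bound(t: float, ranges) -> float:
    """Upper bound on P(S - E[S] >= t) for independent X_i in [a_i, b_i]."""
    denom = sum((b - a) ** 2 for a, b in ranges)
    return exp(-2.0 * t * t / denom)


def binomial_upper_tail(n: int, p: float, k: int) -> float:
    """Exact P(S >= k) when S is a sum of n independent Bernoulli(p) variables."""
    return sum(comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(k, n + 1))


# 100 fair coin flips, each in [0, 1]; E[S] = 50 (assumed example).
n, t = 100, 10
bound = hoeffding_bound(t, [(0.0, 1.0)] * n)
exact = binomial_upper_tail(n, 0.5, n // 2 + t)

print(f"exact P(S >= 60) = {exact:.4f}, Hoeffding bound = {bound:.4f}")
```

The bound depends only on the ranges of the summands, which is why it applies even when their exact distributions are unknown.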

In mathematics, the Fredholm determinant is a complex-valued function which generalizes the determinant of a finite dimensional linear operator. It is defined for bounded operators on a Hilbert space which differ from the identity operator by a trace-class operator. The function is named after the mathematician Erik Ivar Fredholm.

In mathematics, Doob's martingale inequality, also known as Kolmogorov's submartingale inequality, is a result in the study of stochastic processes. It gives a bound on the probability that a submartingale exceeds any given value over a given interval of time. As the name suggests, the result is usually given in the case that the process is a martingale, but the result is also valid for submartingales.

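In probability theory, Bernstein inequalities give bounds on the probability that the sum of random variables deviates from its mean. In the simplest case, let $X_1, \ldots, X_n$ be independent Bernoulli random variables taking values +1 and −1 with probability 1/2; then for every positive $\varepsilon$,

$$\mathbb{P}\left(\left|\frac{1}{n}\sum_{i=1}^{n} X_i\right| > \varepsilon\right) \leq 2\exp\left(-\frac{n\varepsilon^2}{2\left(1 + \frac{\varepsilon}{3}\right)}\right).$$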

In probability theory and statistics, the Poisson distribution is a discrete probability distribution that expresses the probability of a given number of events occurring in a fixed interval of time if these events occur with a known constant mean rate and independently of the time since the last event. It can also be used for the number of events in other types of intervals than time, and in dimension greater than 1.

In probability theory, Bennett's inequality provides an upper bound on the probability that the sum of independent random variables deviates from its expected value by more than any specified amount. Bennett's inequality was proved by George Bennett of the University of New South Wales in 1962.

In probability theory, concentration inequalities provide mathematical bounds on the probability of a random variable deviating from some value. The deviation or other function of the random variable can be thought of as a secondary random variable. The simplest example of the concentration of such a secondary random variable is the CDF of the first random variable which concentrates the probability to unity. If an analytic form of the CDF is available this provides a concentration equality that provides the exact probability of concentration. It is precisely when the CDF is difficult to calculate or even the exact form of the first random variable is unknown that the applicable concentration inequalities provide useful insight.

For certain applications in linear algebra, it is useful to know properties of the probability distribution of the largest eigenvalue of a finite sum of random matrices. Suppose $\{\mathbf{X}_k\}$ is a finite sequence of random matrices. Analogous to the well-known Chernoff bound for sums of scalars, a bound on the following is sought for a given parameter $t$:

$$\Pr\left\{\lambda_{\max}\left(\sum_k \mathbf{X}_k\right) \geq t\right\}$$

In probability theory, a subgaussian distribution, the distribution of a subgaussian random variable, is a probability distribution with strong tail decay. More specifically, the tails of a subgaussian distribution are dominated by the tails of a Gaussian. This property gives subgaussian distributions their name.

In mathematics and probability, the Borell–TIS inequality is a result bounding the probability of a deviation of the uniform norm of a centered Gaussian stochastic process above its expected value. The result is named for Christer Borell and its independent discoverers Boris Tsirelson, Ildar Ibragimov, and Vladimir Sudakov. The inequality has been described as "the single most important tool in the study of Gaussian processes."

In information theory, the Bretagnolle–Huber inequality bounds the total variation distance between two probability distributions $P$ and $Q$ by a concave and bounded function of the Kullback–Leibler divergence $D_{\mathrm{KL}}(P \parallel Q)$. The bound can be viewed as an alternative to the well-known Pinsker's inequality: when $D_{\mathrm{KL}}(P \parallel Q)$ is large, Pinsker's inequality is vacuous, while Bretagnolle–Huber remains bounded and hence non-vacuous. It is used in statistics and machine learning to prove information-theoretic lower bounds relying on hypothesis testing. (The Bretagnolle–Huber–Carol inequality is a variation of a concentration inequality for multinomially distributed random variables which bounds the total variation distance.)
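The comparison with Pinsker's inequality can be seen numerically. In their usual forms, Pinsker's inequality bounds the total variation distance by sqrt(KL / 2) and the Bretagnolle–Huber inequality bounds it by sqrt(1 − exp(−KL)), where KL is the Kullback–Leibler divergence between the two distributions. The sketch below evaluates both for two Bernoulli distributions. The parameters 0.99 and 0.01 and all function names are assumptions chosen for the example.

```python
from math import exp, log, sqrt


def kl_bernoulli(p: float, q: float) -> float:
    """Kullback-Leibler divergence KL(Bernoulli(p) || Bernoulli(q)), for 0 < p, q < 1."""
    return p * log(p / q) + (1 - p) * log((1 - p) / (1 - q))


def tv_bernoulli(p: float, q: float) -> float:
    """Total variation distance between Bernoulli(p) and Bernoulli(q)."""
    return abs(p - q)


def pinsker_bound(kl: float) -> float:
    return sqrt(kl / 2)


def bretagnolle_huber_bound(kl: float) -> float:
    return sqrt(1 - exp(-kl))


# Two well-separated distributions (assumed example parameters).
p, q = 0.99, 0.01
kl = kl_bernoulli(p, q)
tv = tv_bernoulli(p, q)
pinsker = pinsker_bound(kl)
bh = bretagnolle_huber_bound(kl)

print(f"TV = {tv:.4f}, Bretagnolle-Huber = {bh:.4f}, Pinsker = {pinsker:.4f}")
```

Since total variation distance never exceeds 1, a Pinsker bound above 1 carries no information, while the Bretagnolle–Huber bound stays below 1 for any finite divergence.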