Syntax

| | This section is empty. You can help by adding to it. (July 2010) |

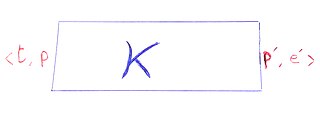

Nominal terms are a metalanguage for embedding object languages with binding constructs into. Intuitively, they may be seen as an extension of first-order terms with support for name binding.[ clarification needed ] Consequently, the native notion of equality between two nominal terms is alpha-equivalence (equivalence up to a permutative renaming of bound names). Nominal terms came out of a programme of research into nominal sets, and have a concrete semantics in those sets.

Where the regular unification found in Prolog is linear in the size of terms compared, the extension to faithfully capture equivalence of nominal terms, called nominal unification in the literature, is quadratic (Calvès 2013). Based on an earlier PTIME algorithm for nominal unification, alphaProlog is a Prolog-like logic programming language with facilities for binding names in terms, which was intended to be useful for programs acting on program syntax (Cheney 2004).

Nominal term embeddings may be seen as alternatives to de Bruijn encodings and higher-order abstract syntax, where the latter uses the simply typed lambda calculus as a metalanguage.

Many interesting calculi, logics and programming languages that are commonly seen in computer science feature name binding constructs. For instance, the universal quantifier from first-order logic, the lambda-binder from the lambda-calculus, and the pi-binder from the pi-calculus are all examples of name-binding constructs.

Computer scientists often need to manipulate abstract syntax trees. For instance, compiler writers perform many manipulations of abstract syntax trees during the various optimisation and elaboration phases of compiler execution. In particular, when working with abstract syntax trees with name binding constructs, we often want to work on alpha-equivalence classes, implement capture-avoiding substitutions, and make it easy to generate fresh names. How best to do this, in a bug free and reliable manner, motivates a large amount of research.

Prior attempts at solving this problem include 'nameless approaches' such as de Bruijn indices and levels, and higher-order approaches such as higher-order abstract syntax. Nominal terms are another, relatively new, approach that retain explicit names for bound variables like higher-order abstract syntax, whilst retaining the first-order flavour (and first-order computational properties) of de Bruijn encodings.

| | This section is empty. You can help by adding to it. (July 2010) |

| | This section is empty. You can help by adding to it. (July 2010) |

| | This section is empty. You can help by adding to it. (July 2010) |

Higher-order unification is known to be undecidable. This motivates the search for subsets of lambda-terms that enjoy a computationally well-behaved unification procedure. Higher-order patterns, proposed by Miller, are one such set.

Higher-order patterns are lambda-terms where the arguments of a free variable are all distinct bound variables. They possess an efficiently decidable unification procedure, and as a result, have been widely implemented, notably in the logic programming language lambdaProlog.

A recent body of work has investigated the connections between nominal terms and higher-order patterns, and consequently between nominal unification and higher-order pattern unification. Cheney proposed an extension of nominal terms called nominal patterns. He then provided a translation between nominal patterns and higher-order patterns which preserved unifiers. Later, Levy and Villaret demonstrated a translation between nominal terms and higher-order patterns that preserves the notion of unifiability. That is, if two nominal terms are unifiable, then their translated pattern counterparts are also unifiable.

Dowek and Gabbay later sharpened Levy and Villaret's translation, proving that in some sense their translation is the best that there can be, and proved that the improved translation preserves unifiers. That is, if two nominal terms are unifiable by some substitution, then the corresponding higher-order pattern unification problem under the translation is solved by the translated substitution. For their proof, Dowek and Gabbay used a variation of nominal terms called permissive nominal terms. However, a translation from permissive nominal terms and back again also exists, completing the translation between nominal terms and higher-order patterns.

Lambda calculus is a formal system in mathematical logic for expressing computation based on function abstraction and application using variable binding and substitution. Untyped lambda calculus, the topic of this article, is a universal model of computation that can be used to simulate any Turing machine. It was introduced by the mathematician Alonzo Church in the 1930s as part of his research into the foundations of mathematics. In 1936, Church found a formulation which was logically consistent, and documented it in 1940.

Prolog is a logic programming language that has its origins in artificial intelligence, automated theorem proving and computational linguistics.

In logic and computer science, specifically automated reasoning, unification is an algorithmic process of solving equations between symbolic expressions, each of the form Left-hand side = Right-hand side. For example, using x,y,z as variables, and taking f to be an uninterpreted function, the singleton equation set { f(1,y) = f(x,2) } is a syntactic first-order unification problem that has the substitution { x ↦ 1, y ↦ 2 } as its only solution.

In programming language theory and proof theory, the Curry–Howard correspondence is the direct relationship between computer programs and mathematical proofs.

In mathematics and logic, a higher-order logic is a form of logic that is distinguished from first-order logic by additional quantifiers and, sometimes, stronger semantics. Higher-order logics with their standard semantics are more expressive, but their model-theoretic properties are less well-behaved than those of first-order logic.

In logic, a logical framework provides a means to define a logic as a signature in a higher-order type theory in such a way that provability of a formula in the original logic reduces to a type inhabitation problem in the framework type theory. This approach has been used successfully for (interactive) automated theorem proving. The first logical framework was Automath; however, the name of the idea comes from the more widely known Edinburgh Logical Framework, LF. Several more recent proof tools like Isabelle are based on this idea. Unlike a direct embedding, the logical framework approach allows many logics to be embedded in the same type system.

Categorical logic is the branch of mathematics in which tools and concepts from category theory are applied to the study of mathematical logic. It is also notable for its connections to theoretical computer science. In broad terms, categorical logic represents both syntax and semantics by a category, and an interpretation by a functor. The categorical framework provides a rich conceptual background for logical and type-theoretic constructions. The subject has been recognisable in these terms since around 1970.

The simply typed lambda calculus, a form of type theory, is a typed interpretation of the lambda calculus with only one type constructor that builds function types. It is the canonical and simplest example of a typed lambda calculus. The simply typed lambda calculus was originally introduced by Alonzo Church in 1940 as an attempt to avoid paradoxical use of the untyped lambda calculus.

In computer science, higher-order abstract syntax is a technique for the representation of abstract syntax trees for languages with variable binders.

In computer science, Programming Computable Functions (PCF) is a typed functional language introduced by Gordon Plotkin in 1977, based on previous unpublished material by Dana Scott. It can be considered to be an extended version of the typed lambda calculus or a simplified version of modern typed functional languages such as ML or Haskell.

In computing, a meta-circular evaluator (MCE) or meta-circular interpreter (MCI) is an interpreter which defines each feature of the interpreted language using a similar facility of the interpreter's host language. For example, interpreting a lambda application may be implemented using function application. Meta-circular evaluation is most prominent in the context of Lisp. A self-interpreter is a meta-circular interpreter where the interpreted language is nearly identical to the host language; the two terms are often used synonymously.

λProlog, also written lambda Prolog, is a logic programming language featuring polymorphic typing, modular programming, and higher-order programming. These extensions to Prolog are derived from the higher-order hereditary Harrop formulas used to justify the foundations of λProlog. Higher-order quantification, simply typed λ-terms, and higher-order unification gives λProlog the basic supports needed to capture the λ-tree syntax approach to higher-order abstract syntax, an approach to representing syntax that maps object-level bindings to programming language bindings. Programmers in λProlog need not deal with bound variable names: instead various declarative devices are available to deal with binder scopes and their instantiations.

Logic is the formal science of using reason and is considered a branch of both philosophy and mathematics and to a lesser extent computer science. Logic investigates and classifies the structure of statements and arguments, both through the study of formal systems of inference and the study of arguments in natural language. The scope of logic can therefore be very large, ranging from core topics such as the study of fallacies and paradoxes, to specialized analyses of reasoning such as probability, correct reasoning, and arguments involving causality. One of the aims of logic is to identify the correct and incorrect inferences. Logicians study the criteria for the evaluation of arguments.

The ACM–IEEE Symposium on Logic in Computer Science (LICS) is an annual academic conference on the theory and practice of computer science in relation to mathematical logic. Extended versions of selected papers of each year's conference appear in renowned international journals such as Logical Methods in Computer Science and ACM Transactions on Computational Logic.

In mathematical logic, the de Bruijn index is a tool invented by the Dutch mathematician Nicolaas Govert de Bruijn for representing terms of lambda calculus without naming the bound variables. Terms written using these indices are invariant with respect to α-conversion, so the check for α-equivalence is the same as that for syntactic equality. Each de Bruijn index is a natural number that represents an occurrence of a variable in a λ-term, and denotes the number of binders that are in scope between that occurrence and its corresponding binder. The following are some examples:

In mathematical logic, the De Bruijn notation is a syntax for terms in the λ calculus invented by the Dutch mathematician Nicolaas Govert de Bruijn. It can be seen as a reversal of the usual syntax for the λ calculus where the argument in an application is placed next to its corresponding binder in the function instead of after the latter's body.

Nominal techniques in computer science are a range of techniques, based on nominal sets, for handling names and binding, e.g. in abstract syntax. Research into nominal sets gave rise to nominal terms, a metalanguage for embedding object languages with name binding constructs.

Anti-unification is the process of constructing a generalization common to two given symbolic expressions. As in unification, several frameworks are distinguished depending on which expressions are allowed, and which expressions are considered equal. If variables representing functions are allowed in an expression, the process is called "higher-order anti-unification", otherwise "first-order anti-unification". If the generalization is required to have an instance literally equal to each input expression, the process is called "syntactical anti-unification", otherwise "E-anti-unification", or "anti-unification modulo theory".

In theoretical computer science, the Krivine machine is an abstract machine. As an abstract machine, it shares features with Turing machines and the SECD machine. The Krivine machine explains how to compute a recursive function. More specifically it aims to define rigorously head normal form reduction of a lambda term using call-by-name reduction. Thanks to its formalism, it tells in details how a kind of reduction works and sets the theoretical foundation of the operational semantics of functional programming languages. On the other hand, Krivine machine implements call-by-name because it evaluates the body of a β-redex before it applies the body to its parameter. In other words, in an expression u it evaluates first λx. t before applying it to u. In functional programming, this would mean that in order to evaluate a function applied to a parameter, it evaluates first the function before applying it to the parameter.

Dale Miller is an American computer scientist and author. He is a Director of Research at Inria Saclay and one of the designers of the λProlog programming language and the Abella interactive theorem prover.