A central processing unit (CPU), also called a central processor, main processor, or just processor, is the most important processor in a given computer. Its electronic circuitry executes instructions of a computer program, such as arithmetic, logic, controlling, and input/output (I/O) operations. This role contrasts with that of external components, such as main memory and I/O circuitry, and specialized coprocessors such as graphics processing units (GPUs).

Digital Equipment Corporation (DEC)'s PDP-10, later marketed as the DECsystem-10, is a mainframe computer family manufactured beginning in 1966 and discontinued in 1983. 1970s models and beyond were marketed under the DECsystem-10 name, especially as the TOPS-10 operating system became widely used.

In computing, virtual memory, or virtual storage, is a memory management technique that provides an "idealized abstraction of the storage resources that are actually available on a given machine" which "creates the illusion to users of a very large (main) memory".

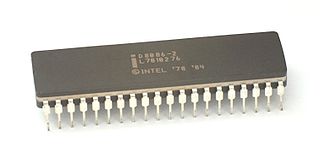

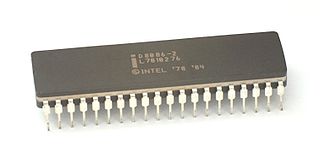

x86 is a family of complex instruction set computer (CISC) instruction set architectures initially developed by Intel based on the 8086 microprocessor and its variant, the 8088. The 8086 was introduced in 1978 as a fully 16-bit extension of 8-bit Intel's 8080 microprocessor, with memory segmentation as a solution for addressing more memory than can be covered by a plain 16-bit address. The term "x86" came into being because the names of several successors to Intel's 8086 processor end in "86", including the 80186, 80286, 80386 and 80486. Colloquially, their names were "186", "286", "386" and "486".

In computer architecture, 64-bit integers, memory addresses, or other data units are those that are 64 bits wide. Also, 64-bit central processing units (CPU) and arithmetic logic units (ALU) are those that are based on processor registers, address buses, or data buses of that size. A computer that uses such a processor is a 64-bit computer.

A memory management unit (MMU), sometimes called paged memory management unit (PMMU), is a computer hardware unit that examines all memory references on the memory bus, translating these requests, known as virtual memory addresses, into physical addresses in main memory.

x86-64 is a 64-bit version of the x86 instruction set, first announced in 1999. It introduced two new modes of operation, 64-bit mode and compatibility mode, along with a new 4-level paging mode.

In computer operating systems, memory paging is a memory management scheme by which a computer stores and retrieves data from secondary storage for use in main memory. In this scheme, the operating system retrieves data from secondary storage in same-size blocks called pages. Paging is an important part of virtual memory implementations in modern operating systems, using secondary storage to let programs exceed the size of available physical memory.

Copy-on-write (COW), sometimes referred to as implicit sharing or shadowing, is a resource-management technique used in computer programming to efficiently implement a "duplicate" or "copy" operation on modifiable resources.

In computing, Physical Address Extension (PAE), sometimes referred to as Page Address Extension, is a memory management feature for the x86 architecture. PAE was first introduced by Intel in the Pentium Pro, and later by AMD in the Athlon processor. It defines a page table hierarchy of three levels (instead of two), with table entries of 64 bits each instead of 32, allowing these CPUs to directly access a physical address space larger than 4 gigabytes (232 bytes).

Memory protection is a way to control memory access rights on a computer, and is a part of most modern instruction set architectures and operating systems. The main purpose of memory protection is to prevent a process from accessing memory that has not been allocated to it. This prevents a bug or malware within a process from affecting other processes, or the operating system itself. Protection may encompass all accesses to a specified area of memory, write accesses, or attempts to execute the contents of the area. An attempt to access unauthorized memory results in a hardware fault, e.g., a segmentation fault, storage violation exception, generally causing abnormal termination of the offending process. Memory protection for computer security includes additional techniques such as address space layout randomization and executable-space protection.

A translation lookaside buffer (TLB) is a memory cache that stores the recent translations of virtual memory to physical memory. It is used to reduce the time taken to access a user memory location. It can be called an address-translation cache. It is a part of the chip's memory-management unit (MMU). A TLB may reside between the CPU and the CPU cache, between CPU cache and the main memory or between the different levels of the multi-level cache. The majority of desktop, laptop, and server processors include one or more TLBs in the memory-management hardware, and it is nearly always present in any processor that utilizes paged or segmented virtual memory.

In computing, a memory address is a reference to a specific memory location used at various levels by software and hardware. Memory addresses are fixed-length sequences of digits conventionally displayed and manipulated as unsigned integers. Such numerical semantic bases itself upon features of CPU, as well upon use of the memory like an array endorsed by various programming languages.

In computer science, thrashing occurs in a system with virtual memory when a computer's real storage resources are overcommitted, leading to a constant state of paging and page faults, slowing most application-level processing. This causes the performance of the computer to degrade or collapse. The situation can continue indefinitely until the user closes some running applications or the active processes free up additional virtual memory resources.

A page table is a data structure used by a virtual memory system in a computer to store mappings between virtual addresses and physical addresses. Virtual addresses are used by the program executed by the accessing process, while physical addresses are used by the hardware, or more specifically, by the random-access memory (RAM) subsystem. The page table is a key component of virtual address translation that is necessary to access data in memory. The page table is set up by the computer's operating system, and may be read and written during the virtual address translation process by the memory management unit or by low-level system software or firmware.

A CPU cache is a hardware cache used by the central processing unit (CPU) of a computer to reduce the average cost to access data from the main memory. A cache is a smaller, faster memory, located closer to a processor core, which stores copies of the data from frequently used main memory locations. Most CPUs have a hierarchy of multiple cache levels, with different instruction-specific and data-specific caches at level 1. The cache memory is typically implemented with static random-access memory (SRAM), in modern CPUs by far the largest part of them by chip area, but SRAM is not always used for all levels, or even any level, sometimes some latter or all levels are implemented with eDRAM.

Memory segmentation is an operating system memory management technique of dividing a computer's primary memory into segments or sections. In a computer system using segmentation, a reference to a memory location includes a value that identifies a segment and an offset within that segment. Segments or sections are also used in object files of compiled programs when they are linked together into a program image and when the image is loaded into memory.

Out of memory (OOM) is an often undesired state of computer operation where no additional memory can be allocated for use by programs or the operating system. Such a system will be unable to load any additional programs, and since many programs may load additional data into memory during execution, these will cease to function correctly. This usually occurs because all available memory, including disk swap space, has been allocated.

Performance Monitor is a system monitoring program introduced in Windows NT 3.1. It monitors various activities on a computer such as CPU or memory usage. This type of application may be used to determine the cause of problems on a local or remote computer by measuring performance of hardware, software services, and applications. The program can define thresholds for alerts and automatic actions, generate reports, and view past performance data.

In operating systems, memory management is the function responsible for managing the computer's primary memory.