In mathematics and computer programming, exponentiating by squaring is a general method for fast computation of large positive integer powers of a number, or more generally of an element of a semigroup, like a polynomial or a square matrix. Some variants are commonly referred to as square-and-multiply algorithms or binary exponentiation. These can be of quite general use, for example in modular arithmetic or powering of matrices. For semigroups for which additive notation is commonly used, like elliptic curves used in cryptography, this method is also referred to as double-and-add.

In mathematics, the factorial of a non-negative integer , denoted by , is the product of all positive integers less than or equal to . The factorial of also equals the product of with the next smaller factorial:

In computer science, a heap is a specialized tree-based data structure that satisfies the heap property: In a max heap, for any given node C, if P is a parent node of C, then the key of P is greater than or equal to the key of C. In a min heap, the key of P is less than or equal to the key of C. The node at the "top" of the heap is called the root node.

In mathematics, the logarithm is the inverse function to exponentiation. That means that the logarithm of a number x to the base b is the exponent to which b must be raised to produce x. For example, since 1000 = 103, the logarithm base 10 of 1000 is 3, or log10 (1000) = 3. The logarithm of x to base b is denoted as logb (x), or without parentheses, logb x, or even without the explicit base, log x, when no confusion is possible, or when the base does not matter such as in big O notation.

In mathematics, the trigonometric functions are real functions which relate an angle of a right-angled triangle to ratios of two side lengths. They are widely used in all sciences that are related to geometry, such as navigation, solid mechanics, celestial mechanics, geodesy, and many others. They are among the simplest periodic functions, and as such are also widely used for studying periodic phenomena through Fourier analysis.

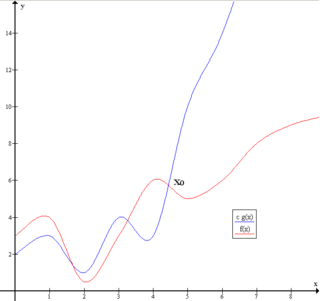

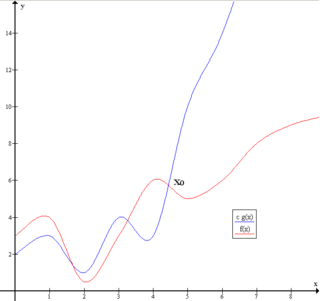

Big O notation is a mathematical notation that describes the limiting behavior of a function when the argument tends towards a particular value or infinity. Big O is a member of a family of notations invented by Paul Bachmann, Edmund Landau, and others, collectively called Bachmann–Landau notation or asymptotic notation. The letter O was chosen by Bachmann to stand for Ordnung, meaning the order of approximation.

In mathematics, exponentiation is an operation involving two numbers, the base and the exponent or power. Exponentiation is written as bn, where b is the base and n is the power; this is pronounced as "b (raised) to the n". When n is a positive integer, exponentiation corresponds to repeated multiplication of the base: that is, bn is the product of multiplying n bases:

In mathematics, function composition is an operation ∘ that takes two functions f and g, and produces a function h = g ∘ f such that h(x) = g(f(x)). In this operation, the function g is applied to the result of applying the function f to x. That is, the functions f : X → Y and g : Y → Z are composed to yield a function that maps x in domain X to g(f(x)) in codomain Z. Intuitively, if z is a function of y, and y is a function of x, then z is a function of x. The resulting composite function is denoted g ∘ f : X → Z, defined by (g ∘ f )(x) = g(f(x)) for all x in X.

In mathematics, the binary logarithm is the power to which the number 2 must be raised to obtain the value n. That is, for any real number x,

In mathematics, an identity is an equality relating one mathematical expression A to another mathematical expression B, such that A and B produce the same value for all values of the variables within a certain range of validity. In other words, A = B is an identity if A and B define the same functions, and an identity is an equality between functions that are differently defined. For example, and are identities. Identities are sometimes indicated by the triple bar symbol ≡ instead of =, the equals sign. Formally, an identity is a universally quantified equality.

In computer science, the time complexity is the computational complexity that describes the amount of computer time it takes to run an algorithm. Time complexity is commonly estimated by counting the number of elementary operations performed by the algorithm, supposing that each elementary operation takes a fixed amount of time to perform. Thus, the amount of time taken and the number of elementary operations performed by the algorithm are taken to be related by a constant factor.

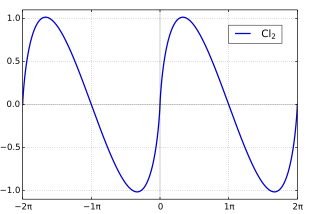

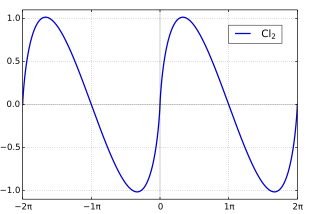

In mathematics, the Clausen function, introduced by Thomas Clausen (1832), is a transcendental, special function of a single variable. It can variously be expressed in the form of a definite integral, a trigonometric series, and various other forms. It is intimately connected with the polylogarithm, inverse tangent integral, polygamma function, Riemann zeta function, Dirichlet eta function, and Dirichlet beta function.

In statistics, an expectation–maximization (EM) algorithm is an iterative method to find (local) maximum likelihood or maximum a posteriori (MAP) estimates of parameters in statistical models, where the model depends on unobserved latent variables. The EM iteration alternates between performing an expectation (E) step, which creates a function for the expectation of the log-likelihood evaluated using the current estimate for the parameters, and a maximization (M) step, which computes parameters maximizing the expected log-likelihood found on the E step. These parameter-estimates are then used to determine the distribution of the latent variables in the next E step.

In the analysis of algorithms, the master theorem for divide-and-conquer recurrences provides an asymptotic analysis for recurrence relations of types that occur in the analysis of many divide and conquer algorithms. The approach was first presented by Jon Bentley, Dorothea Blostein, and James B. Saxe in 1980, where it was described as a "unifying method" for solving such recurrences. The name "master theorem" was popularized by the widely-used algorithms textbook Introduction to Algorithms by Cormen, Leiserson, Rivest, and Stein.

The Schönhage–Strassen algorithm is an asymptotically fast multiplication algorithm for large integers, published by Arnold Schönhage and Volker Strassen in 1971. It works by recursively applying fast Fourier transform (FFT) over the integers modulo 2n+1. The run-time bit complexity to multiply two n-digit numbers using the algorithm is in big O notation.

In mathematics, the Chebyshev function is either a scalarising function (Tchebycheff function) or one of two related functions. The first Chebyshev functionϑ (x) or θ (x) is given by

Clenshaw–Curtis quadrature and Fejér quadrature are methods for numerical integration, or "quadrature", that are based on an expansion of the integrand in terms of Chebyshev polynomials. Equivalently, they employ a change of variables and use a discrete cosine transform (DCT) approximation for the cosine series. Besides having fast-converging accuracy comparable to Gaussian quadrature rules, Clenshaw–Curtis quadrature naturally leads to nested quadrature rules, which is important for both adaptive quadrature and multidimensional quadrature (cubature).

In mathematics, the axis–angle representation parameterizes a rotation in a three-dimensional Euclidean space by two quantities: a unit vector e indicating the direction (geometry) of an axis of rotation, and an angle of rotation θ describing the magnitude and sense of the rotation about the axis. Only two numbers, not three, are needed to define the direction of a unit vector e rooted at the origin because the magnitude of e is constrained. For example, the elevation and azimuth angles of e suffice to locate it in any particular Cartesian coordinate frame.

The Bailey–Borwein–Plouffe formula is a formula for π. It was discovered in 1995 by Simon Plouffe and is named after the authors of the article in which it was published, David H. Bailey, Peter Borwein, and Plouffe. Before that, it had been published by Plouffe on his own site. The formula is

In quantum computing, the quantum phase estimation algorithm is a quantum algorithm to estimate the phase corresponding to an eigenvalue of a given unitary operator. Because the eigenvalues of a unitary operator always have unit modulus, they are characterized by their phase, and therefore the algorithm can be equivalently described as retrieving either the phase or the eigenvalue itself. The algorithm was initially introduced by Alexei Kitaev in 1995.