In computer science, a search algorithm is any algorithm which solves the search problem, namely, to retrieve information stored within some data structure, or calculated in the search space of a problem domain, either with discrete or continuous values. Specific applications of search algorithms include:

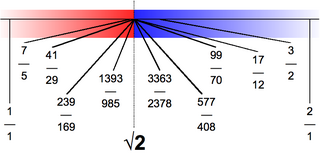

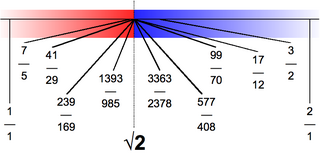

In mathematics, Dedekind cuts, named after German mathematician Richard Dedekind but previously considered by Joseph Bertrand, are a method of construction of the real numbers from the rational numbers. A Dedekind cut is a partition of the rational numbers into two non-empty sets A and B, such that all elements of A are less than all elements of B, and A contains no greatest element. The set B may or may not have a smallest element among the rationals. If B has a smallest element among the rationals, the cut corresponds to that rational. Otherwise, that cut defines a unique irrational number which, loosely speaking, fills the "gap" between A and B. In other words, A contains every rational number less than the cut, and B contains every rational number greater than or equal to the cut. An irrational cut is equated to an irrational number which is in neither set. Every real number, rational or not, is equated to one and only one cut of rationals.
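As an illustration of the definition, a cut can be sketched in Python by representing the lower set A as a membership predicate on rationals. The function names (`sqrt2_lower`, `rational_lower`, `approximate`) are hypothetical and chosen for this example only; the bisection helper is just one assumed way to read off a numerical approximation from a cut.

```python
from fractions import Fraction

def sqrt2_lower(q: Fraction) -> bool:
    """Membership test for the lower set A of the cut defining sqrt(2).

    A holds every negative rational and every rational whose square is below 2.
    No rational squares to exactly 2, so B has no smallest element and the cut
    is irrational.
    """
    return q < 0 or q * q < 2

def rational_lower(r: Fraction):
    """Lower-set predicate for the cut corresponding to the rational r.

    A = {q : q < r}, so r itself is the smallest element of B.
    """
    return lambda q: q < r

def approximate(in_lower, lo: Fraction, hi: Fraction, steps: int = 40) -> Fraction:
    """Bisect between lo (in A) and hi (in B) to close in on the cut."""
    assert in_lower(lo) and not in_lower(hi)
    for _ in range(steps):
        mid = (lo + hi) / 2
        if in_lower(mid):
            lo = mid   # mid is still in A, so move the lower bound up
        else:
            hi = mid   # mid is in B, so move the upper bound down
    return lo

if __name__ == "__main__":
    print(float(approximate(sqrt2_lower, Fraction(1), Fraction(2))))
```

Every value the bisection visits is an exact rational, which reflects the point of the construction: the irrational number is pinned down purely by which rationals fall on each side.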

Subtraction is an arithmetic operation that represents the operation of removing objects from a collection. The result of a subtraction is called a difference. Subtraction is signified by the minus sign (−). For example, in the adjacent picture, there are 5 − 2 apples—meaning 5 apples with 2 taken away, which is a total of 3 apples. Therefore, the difference of 5 and 2 is 3, that is, 5 − 2 = 3. Subtraction represents removing or decreasing physical and abstract quantities using different kinds of objects including negative numbers, fractions, irrational numbers, vectors, decimals, functions, and matrices.

Electrical discharge machining (EDM), also known as spark machining, spark eroding, burning, die sinking, wire burning or wire erosion, is a manufacturing process whereby a desired shape is obtained by using electrical discharges (sparks). Material is removed from the work piece by a series of rapidly recurring current discharges between two electrodes, separated by a dielectric liquid and subject to an electric voltage. One of the electrodes is called the tool-electrode, or simply the "tool" or "electrode," while the other is called the workpiece-electrode, or "work piece." The process depends upon the tool and work piece not making actual contact.

In elementary geometry, the property of being perpendicular (perpendicularity) is the relationship between two lines which meet at a right angle. The property extends to other related geometric objects.

The third on Hilbert's list of mathematical problems, presented in 1900, was the first to be solved. The problem is related to the following question: given any two polyhedra of equal volume, is it always possible to cut the first into finitely many polyhedral pieces which can be reassembled to yield the second? Based on earlier writings by Gauss, Hilbert conjectured that this is not always possible. This was confirmed within the year by his student Max Dehn, who proved that the answer in general is "no" by producing a counterexample.

A randomized algorithm is an algorithm that employs a degree of randomness as part of its logic. The algorithm typically uses uniformly random bits as an auxiliary input to guide its behavior, in the hope of achieving good performance in the "average case" over all possible choices of random bits. Formally, the algorithm's performance will be a random variable determined by the random bits; thus the running time, the output, or both are random variables.
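One well-known example of the case where the running time is random but the output is not is quickselect with a randomly chosen pivot. The sketch below is a minimal illustration, not a tuned implementation; the function name `quickselect` and the optional `rng` parameter are choices made for this example.

```python
import random

def quickselect(items, k, rng=None):
    """Return the k-th smallest element (0-indexed) of items.

    The pivot is drawn at random, so the number of passes varies from run
    to run, but the returned value is always the same.
    """
    rng = rng or random.Random()
    items = list(items)
    if not 0 <= k < len(items):
        raise IndexError("k out of range")
    while True:
        pivot = rng.choice(items)                  # the random bits enter here
        smaller = [x for x in items if x < pivot]
        equal = [x for x in items if x == pivot]
        if k < len(smaller):
            items = smaller                        # answer lies below the pivot
        elif k < len(smaller) + len(equal):
            return pivot                           # the pivot is the answer
        else:
            k -= len(smaller) + len(equal)         # skip everything <= pivot
            items = [x for x in items if x > pivot]

if __name__ == "__main__":
    print(quickselect([9, 1, 8, 2, 7, 3], 3))
```

Passing a seeded `random.Random` instance as `rng` makes a run reproducible, which is convenient when testing.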

Stroke play, also known as medal play, is a scoring system in the sport of golf in which the total number of strokes is counted over one or more rounds of 18 holes, as opposed to match play, in which the player or team earns a point for each hole in which they have bested their opponents. In stroke play the winner is the player who has taken the fewest strokes over the course of the round or rounds.
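The difference between the two scoring systems can be sketched in a few lines of Python. The function names and the three-hole scorecards are hypothetical, made up for illustration; ties, handicaps, and tournament rules are not modelled.

```python
def stroke_play_winners(cards):
    """Return (winners, totals) where the fewest total strokes wins.

    cards maps each player name to a list of per-hole stroke counts.
    """
    totals = {player: sum(strokes) for player, strokes in cards.items()}
    best = min(totals.values())
    winners = [player for player, total in totals.items() if total == best]
    return winners, totals

def match_play_points(a, b):
    """Return (points_a, points_b): one point per hole won, none for a halved hole."""
    points_a = sum(1 for x, y in zip(a, b) if x < y)
    points_b = sum(1 for x, y in zip(a, b) if y < x)
    return points_a, points_b

if __name__ == "__main__":
    cards = {"A": [4, 4, 8], "B": [5, 5, 4]}   # made-up three-hole cards
    print(stroke_play_winners(cards))
    print(match_play_points(cards["A"], cards["B"]))
```

With these made-up cards, player B takes fewer strokes in total, while player A wins more individual holes, so the two systems can name different winners for the same round.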

Plasma cutting is a process that cuts through electrically conductive materials by means of an accelerated jet of hot plasma. Typical materials cut with a plasma torch include steel, stainless steel, aluminum, brass and copper, although other conductive metals may be cut as well. Plasma cutting is often used in fabrication shops, automotive repair and restoration, industrial construction, and salvage and scrapping operations. Due to the high speed and precision cuts combined with low cost, plasma cutting sees widespread use from large-scale industrial CNC applications down to small hobbyist shops.

In proving results in combinatorics, several useful combinatorial rules or combinatorial principles are commonly recognized and used.

In mathematics and computer science, connectivity is one of the basic concepts of graph theory: it asks for the minimum number of elements that need to be removed to separate the remaining nodes into isolated subgraphs. It is closely related to the theory of network flow problems. The connectivity of a graph is an important measure of its resilience as a network.
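For small graphs, the vertex version of this quantity can be computed by brute force: try removing ever larger sets of vertices until the remainder falls apart. The sketch below assumes an undirected graph given as an adjacency dictionary and uses the usual convention that a complete graph on n vertices has connectivity n − 1. The function names are illustrative, and the exhaustive search is exponential; practical implementations typically rely on network-flow techniques instead.

```python
from itertools import combinations

def is_connected(adj, removed=frozenset()):
    """Check whether the graph stays connected after deleting `removed` vertices."""
    nodes = [v for v in adj if v not in removed]
    if not nodes:
        return True
    seen = {nodes[0]}
    stack = [nodes[0]]
    while stack:                       # depth-first search from an arbitrary vertex
        v = stack.pop()
        for w in adj[v]:
            if w not in removed and w not in seen:
                seen.add(w)
                stack.append(w)
    return len(seen) == len(nodes)

def vertex_connectivity(adj):
    """Smallest number of vertices whose removal disconnects the graph.

    Returns n - 1 when no vertex set disconnects it (a complete graph).
    """
    n = len(adj)
    for size in range(max(n - 1, 0)):
        for cut in combinations(adj, size):
            if not is_connected(adj, frozenset(cut)):
                return size
    return max(n - 1, 0)

if __name__ == "__main__":
    cycle4 = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
    print(vertex_connectivity(cycle4))
```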

Lapping is a machining process in which two surfaces are rubbed together with an abrasive between them, by hand movement or using a machine.

The Atterberg limits are a basic measure of the critical water contents of a fine-grained soil: its shrinkage limit, plastic limit, and liquid limit.

In duplicate bridge, a sacrifice is a deliberate bid of a contract that is unlikely to make in the hope that the penalty points will be less than the points likely to be gained by the opponents in making their contract. In rubber bridge, a sacrifice is an attempt to prevent the opponents scoring a game or rubber on the expectation that positive scores on subsequent deals will offset the negative score.

A fillet or filet is a cut or slice of boneless meat or fish. The fillet is often a prime ingredient in many cuisines, and many dishes call for a specific type of fillet as one of the ingredients.

Oxy-fuel welding and oxy-fuel cutting are processes that use fuel gases and oxygen to weld or cut metals. French engineers Edmond Fouché and Charles Picard became the first to develop oxygen-acetylene welding in 1903. Pure oxygen, instead of air, is used to increase the flame temperature to allow localized melting of the workpiece material in a room environment. A common propane/air flame burns at about 2,250 K, a propane/oxygen flame burns at about 2,526 K, an oxyhydrogen flame burns at 3,073 K and an acetylene/oxygen flame burns at about 3,773 K.

The term machinability refers to the ease with which a metal can be cut (machined) permitting the removal of the material with a satisfactory finish at low cost. Materials with good machinability require little power to cut, can be cut quickly, easily obtain a good finish, and do not wear the tooling much; such materials are said to be free machining. The factors that typically improve a material's performance often degrade its machinability. Therefore, to manufacture components economically, engineers are challenged to find ways to improve machinability without harming performance.

The Newman–Keuls or Student–Newman–Keuls (SNK) method is a stepwise multiple comparisons procedure used to identify sample means that are significantly different from each other. It was named after Student (1927), D. Newman, and M. Keuls. This procedure is often used as a post-hoc test whenever a significant difference between three or more sample means has been revealed by an analysis of variance (ANOVA). The Newman–Keuls method is similar to Tukey's range test as both procedures use studentized range statistics. Unlike Tukey's range test, the Newman–Keuls method uses different critical values for different pairs of mean comparisons. Thus, the procedure is more likely to reveal significant differences between group means and to commit type I errors by incorrectly rejecting a null hypothesis when it is true. In other words, the Newman–Keuls procedure is more powerful but less conservative than Tukey's range test.

Rodger's method is a statistical procedure for examining research data post hoc following an 'omnibus' analysis. The various components of this methodology were fully worked out by R. S. Rodger in the 1960s and 70s, and seven of his articles about it were published in the British Journal of Mathematical and Statistical Psychology between 1967 and 1978.

In private equity investing, a distribution waterfall is a method by which the capital gained by the fund is allocated between the limited partners (LPs) and the general partner (GP).