In calculus, an antiderivative, inverse derivative, primitive function, primitive integral or indefinite integral of a function f is a differentiable function F whose derivative is equal to the original function f. This can be stated symbolically as F' = f. The process of solving for antiderivatives is called antidifferentiation, and its opposite operation is called differentiation, which is the process of finding a derivative. Antiderivatives are often denoted by capital Roman letters such as F and G.

In complex analysis, an entire function, also called an integral function, is a complex-valued function that is holomorphic on the whole complex plane. Typical examples of entire functions are polynomials and the exponential function, and any finite sums, products and compositions of these, such as the trigonometric functions sine and cosine and their hyperbolic counterparts sinh and cosh, as well as derivatives and integrals of entire functions such as the error function. If an entire function $f(z)$ has a root at $w$, then $f(z)/(z-w)$, taking the limit value at $w$, is an entire function. On the other hand, the natural logarithm, the reciprocal function, and the square root are all not entire functions, nor can they be continued analytically to an entire function.

In topology, the Tietze extension theorem states that any real-valued, continuous function on a closed subset of a normal topological space can be extended to the entire space, preserving boundedness if necessary.

In calculus, Taylor's theorem gives an approximation of a $k$-times differentiable function around a given point by a polynomial of degree $k$, called the $k$-th-order Taylor polynomial. For a smooth function, the Taylor polynomial is the truncation at the order $k$ of the Taylor series of the function. The first-order Taylor polynomial is the linear approximation of the function, and the second-order Taylor polynomial is often referred to as the quadratic approximation. There are several versions of Taylor's theorem, some giving explicit estimates of the approximation error of the function by its Taylor polynomial.
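
As an illustration, the following minimal sketch compares the Taylor polynomials of the exponential function around 0 with the function itself and checks the error against the Lagrange form of the remainder. The choice of the exponential function, the evaluation point 0.5, and the helper names `taylor_exp` and `lagrange_bound` are assumptions made for this example only.

```python
import math


def taylor_exp(x: float, k: int) -> float:
    """Evaluate the k-th-order Taylor polynomial of exp around 0 at x."""
    return sum(x**n / math.factorial(n) for n in range(k + 1))


def lagrange_bound(x: float, k: int) -> float:
    """Upper bound on |exp(x) - taylor_exp(x, k)| from the Lagrange remainder.

    The remainder is exp(xi) * x^(k+1) / (k+1)! for some xi between 0 and x,
    and exp(xi) <= exp(|x|).
    """
    return math.exp(abs(x)) * abs(x) ** (k + 1) / math.factorial(k + 1)


x = 0.5
orders = range(1, 6)
errors = [abs(math.exp(x) - taylor_exp(x, k)) for k in orders]
bounds = [lagrange_bound(x, k) for k in orders]

for k, err, bound in zip(orders, errors, bounds):
    print(f"order {k}: error {err:.2e}, Lagrange bound {bound:.2e}")
```

In this example each increase in order shrinks the error, and every observed error stays below its bound, which is the kind of explicit estimate the theorem provides.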

In complex analysis, a branch of mathematics, analytic continuation is a technique to extend the domain of definition of a given analytic function. Analytic continuation often succeeds in defining further values of a function, for example in a new region where the infinite series representation which initially defined the function becomes divergent.

In the mathematical field of real analysis, the monotone convergence theorem is any of a number of related theorems proving the convergence of monotonic sequences that are also bounded. Informally, the theorems state that if a sequence is increasing and bounded above, then it converges to its supremum; in the same way, if a sequence is decreasing and bounded below, it converges to its infimum.
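
A small numerical sketch can make the statement concrete. The recursively defined sequence below, with first term 0 and each later term equal to the square root of 2 plus the previous term, is an example chosen here (it is not taken from the text): it is increasing, bounded above by 2, and its supremum is 2. The function name `sqrt_sequence` is hypothetical.

```python
import math


def sqrt_sequence(n_terms: int) -> list[float]:
    """Return the first n_terms of a_0 = 0, a_{n+1} = sqrt(2 + a_n)."""
    terms = [0.0]
    for _ in range(n_terms - 1):
        terms.append(math.sqrt(2.0 + terms[-1]))
    return terms


terms = sqrt_sequence(30)

# The sequence never decreases and never exceeds the upper bound 2.
is_increasing = all(a <= b for a, b in zip(terms, terms[1:]))
is_bounded = all(t <= 2.0 for t in terms)

print(is_increasing, is_bounded, terms[-1])
```

The printed last term is numerically indistinguishable from 2, the supremum of the sequence.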

In calculus, Rolle's theorem or Rolle's lemma essentially states that any real-valued differentiable function that attains equal values at two distinct points must have at least one stationary point somewhere between them—that is, a point where the first derivative is zero. The theorem is named after Michel Rolle.

In vector calculus, Green's theorem relates a line integral around a simple closed curve C to a double integral over the plane region D bounded by C. It is the two-dimensional special case of Stokes' theorem.
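
The identity can be checked numerically on a simple case. The sketch below assumes the unit circle as C, the unit disk as D, and the hypothetical vector field (L, M) = (-x²y, xy²), for which ∂M/∂x - ∂L/∂y = x² + y² (computed by hand). Both sides should then equal π/2.

```python
import math


def L(x: float, y: float) -> float:
    return -x * x * y


def M(x: float, y: float) -> float:
    return x * y * y


def line_integral(n: int = 2000) -> float:
    """Midpoint-rule approximation of the counterclockwise integral of L dx + M dy over the unit circle."""
    dt = 2.0 * math.pi / n
    total = 0.0
    for i in range(n):
        t = (i + 0.5) * dt
        x, y = math.cos(t), math.sin(t)
        dx, dy = -math.sin(t) * dt, math.cos(t) * dt
        total += L(x, y) * dx + M(x, y) * dy
    return total


def double_integral(n_r: int = 400, n_theta: int = 400) -> float:
    """Midpoint-rule approximation of the integral of (dM/dx - dL/dy) over the unit disk, in polar coordinates."""
    dr = 1.0 / n_r
    dtheta = 2.0 * math.pi / n_theta
    total = 0.0
    for i in range(n_r):
        r = (i + 0.5) * dr
        for j in range(n_theta):
            theta = (j + 0.5) * dtheta
            x, y = r * math.cos(theta), r * math.sin(theta)
            curl = x * x + y * y  # dM/dx - dL/dy for this particular field
            total += curl * r * dr * dtheta  # r dr dtheta is the area element
    return total


boundary_side = line_integral()
region_side = double_integral()
print(boundary_side, region_side, math.pi / 2)
```

The two approximations agree with each other and with π/2 up to discretization error.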

In calculus, the squeeze theorem is a theorem regarding the limit of a function that is bounded between two other functions.

In mathematics, the Gauss–Kuzmin–Wirsing operator is the transfer operator of the Gauss map that takes a positive number to the fractional part of its reciprocal. It is named after Carl Gauss, Rodion Kuzmin, and Eduard Wirsing. It occurs in the study of continued fractions; it is also related to the Riemann zeta function.
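
The Gauss map itself (not the transfer operator) is easy to sketch, and iterating it produces the continued fraction digits of a number. The example below uses exact rational arithmetic; the function names `gauss_map` and `continued_fraction_digits` and the input 7/16 are assumptions made for illustration.

```python
import math
from fractions import Fraction


def gauss_map(x: Fraction) -> Fraction:
    """Map a positive number x to the fractional part of its reciprocal."""
    reciprocal = 1 / x
    return reciprocal - math.floor(reciprocal)


def continued_fraction_digits(x: Fraction) -> list[int]:
    """Collect the integer parts of 1/x while iterating the Gauss map.

    For a rational x in (0, 1) the iteration reaches 0 after finitely many steps.
    """
    digits = []
    while x != 0:
        digits.append(math.floor(1 / x))
        x = gauss_map(x)
    return digits


digits = continued_fraction_digits(Fraction(7, 16))
print(digits)  # [2, 3, 2], since 7/16 = 1/(2 + 1/(3 + 1/2))
```

Each application of the map strips one digit from the continued fraction expansion, which is why the map and its transfer operator appear in the study of continued fractions.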

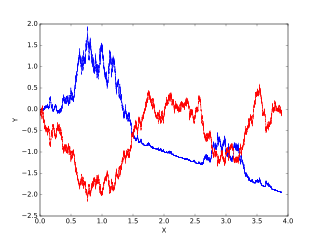

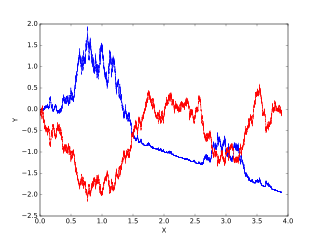

Itô calculus, named after Kiyosi Itô, extends the methods of calculus to stochastic processes such as Brownian motion. It has important applications in mathematical finance and stochastic differential equations.

Renewal theory is the branch of probability theory that generalizes the Poisson process for arbitrary holding times. Instead of exponentially distributed holding times, a renewal process may have any independent and identically distributed (IID) holding times that have finite mean. A renewal-reward process additionally has a random sequence of rewards incurred at each holding time, which are IID but need not be independent of the holding times.
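
A short simulation can illustrate a renewal process with non-exponential holding times. The sketch below assumes holding times drawn uniformly from [0, 2] (mean 1), a fixed random seed, and a hypothetical helper `count_renewals`. It relies on the standard fact that the long-run number of renewals per unit time approaches the reciprocal of the mean holding time.

```python
import random
from typing import Callable


def count_renewals(horizon: float, draw_holding_time: Callable[[], float]) -> int:
    """Count the renewals that occur up to and including time `horizon`."""
    elapsed = 0.0
    count = 0
    while True:
        elapsed += draw_holding_time()
        if elapsed > horizon:
            return count
        count += 1


rng = random.Random(0)  # fixed seed so the run is reproducible
horizon = 10_000.0
mean_holding_time = 1.0  # mean of the uniform distribution on [0, 2]

renewals = count_renewals(horizon, lambda: rng.uniform(0.0, 2.0))
rate = renewals / horizon
print(f"observed rate {rate:.4f}, expected about {1.0 / mean_holding_time:.4f}")
```

Swapping the lambda for `lambda: rng.expovariate(1.0)` would recover the Poisson process as the special case with exponentially distributed holding times.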

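In mathematics, the Artin–Hasse exponential, introduced by Artin and Hasse, is the power series given by $E_p(x) = \exp\left(x + \frac{x^p}{p} + \frac{x^{p^2}}{p^2} + \frac{x^{p^3}}{p^3} + \cdots\right)$, where $p$ is a prime number.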

In the mathematical discipline of functional analysis, the concept of a compact operator on Hilbert space is an extension of the concept of a matrix acting on a finite-dimensional vector space; in Hilbert space, compact operators are precisely the closure of finite-rank operators in the topology induced by the operator norm. As such, results from matrix theory can sometimes be extended to compact operators using similar arguments. By contrast, the study of general operators on infinite-dimensional spaces often requires a genuinely different approach.

In mathematics, auxiliary functions are an important construction in transcendental number theory. They are functions that appear in most proofs in this area of mathematics and that have specific, desirable properties, such as taking the value zero for many arguments, or having a zero of high order at some point.

The fundamental theorem of calculus is a theorem that links the concept of differentiating a function with the concept of integrating a function. The two operations are inverses of each other apart from a constant value which depends on where one starts to compute area.
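
Both directions of the theorem can be checked numerically. The sketch below assumes f = cos with antiderivative F = sin, an arbitrary interval [0.3, 2.0], and a hypothetical midpoint-rule helper `midpoint_integral`. It first compares the computed area with F(b) - F(a), then differentiates the area function and compares the result with f.

```python
import math
from typing import Callable


def midpoint_integral(f: Callable[[float], float], a: float, b: float, n: int = 10_000) -> float:
    """Approximate the integral of f over [a, b] with the midpoint rule."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))


a, b = 0.3, 2.0

# Integrating f gives the change in its antiderivative.
area = midpoint_integral(math.cos, a, b)
antiderivative_change = math.sin(b) - math.sin(a)

# Differentiating the area function A(x) = integral of f from a to x gives f(x).
# A(x + h) - A(x - h) is the integral of f over [x - h, x + h].
x, h = 1.2, 1e-4
area_slope = midpoint_integral(math.cos, x - h, x + h) / (2.0 * h)

print(area, antiderivative_change)
print(area_slope, math.cos(x))
```

Changing the starting point `a` shifts the area function by a constant but leaves its slope unchanged, which is the constant referred to above.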

The exponential mechanism is a technique for designing differentially private algorithms. It was developed by Frank McSherry and Kunal Talwar in 2007. Their work was recognized as a co-winner of the 2009 PET Award for Outstanding Research in Privacy Enhancing Technologies.
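
A minimal sketch of the mechanism follows, assuming its commonly stated form in which a candidate r is selected with probability proportional to exp(ε·u(r) / (2Δ)), where u is a utility function and Δ its sensitivity. The function names, the toy counts, and the parameter values are all hypothetical and chosen only for illustration.

```python
import math
import random
from typing import Callable, Sequence


def selection_probabilities(
    candidates: Sequence[str],
    utility: Callable[[str], float],
    epsilon: float,
    sensitivity: float,
) -> list[float]:
    """Return probabilities proportional to exp(epsilon * utility / (2 * sensitivity))."""
    scores = [epsilon * utility(c) / (2.0 * sensitivity) for c in candidates]
    top = max(scores)  # subtract the maximum before exponentiating to avoid overflow
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [w / total for w in weights]


def exponential_mechanism(
    candidates: Sequence[str],
    utility: Callable[[str], float],
    epsilon: float,
    sensitivity: float,
    rng: random.Random,
) -> str:
    """Sample one candidate according to the selection probabilities."""
    probs = selection_probabilities(candidates, utility, epsilon, sensitivity)
    return rng.choices(candidates, weights=probs, k=1)[0]


# Toy example: privately pick the most frequent item; the utility is the item's count.
counts = {"a": 10, "b": 7, "c": 1}
candidates = list(counts)
probs = selection_probabilities(candidates, counts.__getitem__, epsilon=1.0, sensitivity=1.0)
choice = exponential_mechanism(candidates, counts.__getitem__, 1.0, 1.0, random.Random(0))
print(probs, choice)
```

Higher-utility candidates are exponentially more likely to be chosen, while a smaller ε flattens the distribution and so reveals less about the underlying data.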

In graph theory, the graph removal lemma states that when a graph contains few copies of a given subgraph, then all of the copies can be eliminated by removing a small number of edges. The special case in which the subgraph is a triangle is known as the triangle removal lemma.

Roth's theorem on arithmetic progressions is a result in additive combinatorics concerning the existence of arithmetic progressions in subsets of the natural numbers. It was first proven by Klaus Roth in 1953. Roth's theorem is a special case of Szemerédi's theorem for the case $k = 3$.

In mathematics, calculus on Euclidean space is a generalization of the calculus of functions in one or several variables to the calculus of functions on Euclidean space as well as on a finite-dimensional real vector space. This calculus is also known as advanced calculus, especially in the United States. It is similar to multivariable calculus but is somewhat more sophisticated in that it uses linear algebra more extensively and covers some concepts from differential geometry, such as differential forms and Stokes' formula in terms of differential forms. This extensive use of linear algebra also allows a natural generalization of multivariable calculus to calculus on Banach spaces or topological vector spaces.