Two-level scheduling is a computer science term for a method of performing process scheduling more efficiently when some of the processes are swapped out of memory.

Computer science is the study of processes that interact with data and that can be represented as data in the form of programs. It enables the use of algorithms to manipulate, store, and communicate digital information. A computer scientist studies the theory of computation and the practice of designing software systems.

In computing, scheduling is the method by which work is assigned to resources that complete the work. The work may be virtual computation elements such as threads, processes or data flows, which are in turn scheduled onto hardware resources such as processors, network links or expansion cards.

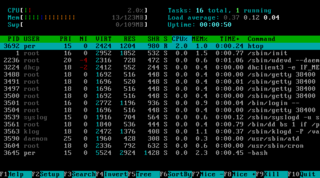

In computing, a process is the instance of a computer program that is being executed. It contains the program code and its activity. Depending on the operating system (OS), a process may be made up of multiple threads of execution that execute instructions concurrently.

Consider this problem: a system contains 50 running processes, all with equal priority. However, the system's memory can hold only 10 processes at a time, so 40 processes are always swapped out to virtual memory on the hard disk. Swapping a process out takes 50 ms, and swapping one in takes another 50 ms.

In computing, virtual memory is a memory management technique that provides an "idealized abstraction of the storage resources that are actually available on a given machine" which "creates the illusion to users of a very large (main) memory."

With straightforward round-robin scheduling, every context switch would require a process to be swapped in, because only the 10 most recently run processes are in memory and round-robin always picks the process that has waited longest. Choosing randomly among the processes would reduce that probability to 80% (40/50). Whenever a swap-in is needed, a process also needs to be swapped out to make room. Swapping in and out is costly, and the scheduler would waste much of its time on unnecessary swaps.
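The two figures above can be checked with a small simulation. This is a minimal sketch, assuming memory starts empty and that the process evicted is always the one that ran longest ago; the function name `count_swap_ins` and the constants are illustrative and not part of any real scheduler.

```python
import random
from collections import OrderedDict


def count_swap_ins(order, memory_slots):
    """Count how many entries in `order` require a swap-in.

    Assumption: when memory is full, the least recently run process is evicted.
    """
    memory = OrderedDict()  # pid -> None, least recently run first
    swap_ins = 0
    for pid in order:
        if pid in memory:
            memory.move_to_end(pid)  # already resident: just mark as most recent
        else:
            swap_ins += 1
            if len(memory) >= memory_slots:
                memory.popitem(last=False)  # swap out the least recently run
            memory[pid] = None
    return swap_ins


N_PROCESSES = 50
MEMORY_SLOTS = 10
ROUNDS = 20

# Round-robin: cycle through all 50 processes in a fixed circular order.
round_robin_order = [pid for _ in range(ROUNDS) for pid in range(N_PROCESSES)]
rr_swap_ins = count_swap_ins(round_robin_order, MEMORY_SLOTS)

# Random choice: pick any of the 50 processes with equal probability.
rng = random.Random(0)
random_order = [rng.randrange(N_PROCESSES) for _ in range(len(round_robin_order))]
random_swap_ins = count_swap_ins(random_order, MEMORY_SLOTS)

print(rr_swap_ins / len(round_robin_order))
print(random_swap_ins / len(random_order))
```

Under these assumptions, every round-robin context switch triggers a swap-in, while the random order needs one on roughly four switches out of five.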

Round-robin (RR) is one of the algorithms employed by process and network schedulers in computing. As the term is generally used, time slices are assigned to each process in equal portions and in circular order, handling all processes without priority. Round-robin scheduling is simple, easy to implement, and starvation-free. Round-robin scheduling can also be applied to other scheduling problems, such as data packet scheduling in computer networks. It is an operating system concept.

In computing, a context switch is the process of storing the state of a process or of a thread, so that it can be restored and execution resumed from the same point later. This allows multiple processes to share a single CPU, and is an essential feature of a multitasking operating system.

That is where two-level scheduling enters the picture. It uses two different schedulers. The lower-level scheduler can only select among the processes in memory to run; it could be a round-robin scheduler. The higher-level scheduler's only concern is swapping processes in and out of memory. It does its scheduling much less often than the lower-level scheduler, since swapping takes so much time.
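The division of labour between the two schedulers can be sketched as follows. This is an illustrative toy, not a real operating system interface: the class `TwoLevelScheduler`, the `swap_interval` parameter, and the policy of evicting the longest-resident process are all assumptions made for the example.

```python
from collections import deque


class TwoLevelScheduler:
    """Toy two-level scheduler: round-robin in memory, periodic swapping."""

    def __init__(self, pids, memory_slots, swap_interval):
        pids = list(pids)
        self.ready = deque(pids[:memory_slots])    # in memory, round-robin order
        self.on_disk = deque(pids[memory_slots:])  # swapped out, longest wait first
        self.resident_since = {pid: 0 for pid in self.ready}
        self.swap_interval = swap_interval         # quanta between swaps (assumed)
        self.tick = 0
        self.swaps = 0

    def _higher_level(self):
        """Swap out the longest-resident process and swap in the longest-waiting one."""
        if not self.on_disk:
            return
        victim = min(self.ready, key=lambda pid: self.resident_since[pid])
        self.ready.remove(victim)
        del self.resident_since[victim]
        self.on_disk.append(victim)
        incoming = self.on_disk.popleft()
        self.ready.append(incoming)
        self.resident_since[incoming] = self.tick
        self.swaps += 1

    def run_one_quantum(self):
        """Lower level: pick the next in-memory process in circular order."""
        self.tick += 1
        if self.tick % self.swap_interval == 0:
            self._higher_level()
        pid = self.ready.popleft()
        self.ready.append(pid)
        return pid


sched = TwoLevelScheduler(range(50), memory_slots=10, swap_interval=20)
trace = [sched.run_one_quantum() for _ in range(2000)]
print(sched.swaps, len(set(trace)))
```

With these made-up parameters, 2000 time slices cost 100 swaps rather than one swap per context switch, and all 50 processes still get to run.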

Thus, the higher-level scheduler selects among those processes in memory that have run for a long time and swaps them out. They are replaced with processes on disk that have not run for a long time. Exactly how it selects processes is up to the implementation of the higher-level scheduler. A compromise has to be made involving the following variables:

- Response time: A process should not be swapped out for too long, or some other process (or the user) will have to wait needlessly long. If this variable is not considered, resource starvation may occur and a process may never complete.

- Size of the process: Larger processes should be swapped less often than smaller ones because they take longer to swap. Because they are larger, fewer other processes can share memory with them.

- Priority: The higher the priority of the process, the longer it should stay in memory so that it completes faster.
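One way to picture the compromise between these three variables is a scoring function. The sketch below is purely hypothetical: the `ProcessInfo` fields, the formulas, and the convention that a larger `priority` number means a more important process are assumptions chosen for illustration, and a real higher-level scheduler may weigh the variables quite differently.

```python
from dataclasses import dataclass


@dataclass
class ProcessInfo:
    pid: int
    size_mb: float      # memory footprint
    priority: int       # assumption: larger number = more important
    ms_in_state: float  # time in memory (if resident) or on disk (if swapped out)


def swap_out_score(p):
    """Higher score = better candidate to swap out.

    Long residence raises the score; large size and high priority lower it.
    """
    return p.ms_in_state / (p.size_mb * (1 + p.priority))


def swap_in_score(p):
    """Higher score = better candidate to swap in.

    Long waits and high priority raise the score; large size lowers it.
    """
    return p.ms_in_state * (1 + p.priority) / p.size_mb


def choose_swap(in_memory, on_disk):
    """Return the (victim, incoming) pair with the highest scores."""
    victim = max(in_memory, key=swap_out_score)
    incoming = max(on_disk, key=swap_in_score)
    return victim, incoming


in_memory = [
    ProcessInfo(pid=1, size_mb=10, priority=0, ms_in_state=400),
    ProcessInfo(pid=2, size_mb=40, priority=0, ms_in_state=400),
    ProcessInfo(pid=3, size_mb=10, priority=2, ms_in_state=400),
]
on_disk = [
    ProcessInfo(pid=4, size_mb=10, priority=0, ms_in_state=300),
    ProcessInfo(pid=5, size_mb=10, priority=1, ms_in_state=300),
    ProcessInfo(pid=6, size_mb=20, priority=0, ms_in_state=2000),
]

victim, incoming = choose_swap(in_memory, on_disk)
print(victim.pid, incoming.pid)
```

In this example the small, low-priority process 1 is swapped out, and process 6 is swapped in despite its size and low priority because it has waited far longer than the others, which is the response-time concern at work.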

In technology, response time is the time a system or functional unit takes to react to a given input.