Computer vision tasks include methods for acquiring, processing, analyzing, and understanding digital images, and for extracting high-dimensional data from the real world in order to produce numerical or symbolic information, e.g. in the form of decisions. Understanding in this context means the transformation of visual images into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory.

In digital imaging, a pixel, pel, or picture element is the smallest addressable element in a raster image, or the smallest addressable element in a dot matrix display device. In most digital display devices, pixels are the smallest element that can be manipulated through software.

A camera is an instrument used to capture and store images and videos, either digitally via an electronic image sensor, or chemically via a light-sensitive material such as photographic film. As a pivotal technology in the fields of photography and videography, cameras have played a significant role in the progression of visual arts, media, entertainment, surveillance, and scientific research. The invention of the camera dates back to the 19th century and has since evolved with advancements in technology, leading to a vast array of types and models in the 21st century.

A digital camera, also called a digicam, is a camera that captures photographs in digital memory. Most cameras produced today are digital, largely replacing those that capture images on photographic film or film stock. Digital cameras are now widely incorporated into mobile devices like smartphones, with capabilities and features that match or exceed those of dedicated cameras. High-end, high-definition dedicated cameras are still commonly used by professionals and those who desire to take higher-quality photographs.

Digital image processing is the use of a digital computer to process digital images through an algorithm. As a subcategory or field of digital signal processing, digital image processing has many advantages over analog image processing. It allows a much wider range of algorithms to be applied to the input data and can avoid problems such as the build-up of noise and distortion during processing. Since images are defined over two dimensions, digital image processing may be modeled in the form of multidimensional systems. The generation and development of digital image processing are mainly affected by three factors: first, the development of computers; second, the development of mathematics; third, the increased demand for a wide range of applications in environment, agriculture, military, industry, and medical science.

In photography and videography, multi-exposure HDR capture is a technique that creates high dynamic range (HDR) images by capturing the same subject matter at several different exposure levels and combining the results. Combining multiple images in this way results in an image with a greater dynamic range than would be possible with a single image. The technique can also be used to capture video by taking and combining multiple exposures for each frame of the video. The term "HDR" is used frequently to refer to the process of creating HDR images from multiple exposures. Many smartphones have an automated HDR feature that relies on computational imaging techniques to capture and combine multiple exposures.
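The combining step can be illustrated with a minimal sketch. It assumes a linear sensor response, pixel values normalized to [0, 1], and a simple "hat" weighting that trusts mid-range pixels most. The function names `hat_weight` and `merge_exposures` are hypothetical, and this is not a description of how any particular camera or smartphone performs the merge.

```python
from typing import List


def hat_weight(value: float) -> float:
    """Weight a pixel by how far it is from under- or over-exposure.

    Returns 1.0 at mid-gray (0.5) and falls linearly to 0.0 at 0.0 and 1.0.
    """
    return 1.0 - abs(2.0 * value - 1.0)


def merge_exposures(exposures: List[List[float]], times: List[float]) -> List[float]:
    """Estimate relative scene radiance from several exposures.

    exposures: one flat list of linear pixel values in [0, 1] per exposure
    times:     exposure time in seconds for each exposure
    Assumes a linear sensor, so radiance is proportional to value / time.
    """
    n_pixels = len(exposures[0])
    radiance = []
    for i in range(n_pixels):
        weighted_sum = 0.0
        weight_total = 0.0
        for image, t in zip(exposures, times):
            w = hat_weight(image[i])
            weighted_sum += w * image[i] / t
            weight_total += w
        if weight_total > 0.0:
            radiance.append(weighted_sum / weight_total)
        else:
            # Every exposure is clipped at this pixel: fall back to a plain average.
            radiance.append(sum(img[i] / t for img, t in zip(exposures, times)) / len(times))
    return radiance
```

In this sketch, a pixel that is saturated in the long exposure receives zero weight there, so its radiance estimate comes from the short exposure alone. That behaviour is what extends the dynamic range beyond a single image.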

In photography, angle of view (AOV) describes the angular extent of a given scene that is imaged by a camera. It is used interchangeably with the more general term field of view.
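For a rough sense of the numbers, the angle of view can be computed from the sensor size and the focal length. The sketch below assumes a rectilinear lens focused at infinity, for which the angle is 2·arctan(d / 2f), where d is the sensor dimension and f is the focal length. The function name is hypothetical.

```python
import math


def angle_of_view_deg(sensor_dim_mm: float, focal_length_mm: float) -> float:
    """Angle of view in degrees along one sensor dimension.

    Assumes a rectilinear lens focused at infinity.
    """
    if sensor_dim_mm <= 0 or focal_length_mm <= 0:
        raise ValueError("sensor dimension and focal length must be positive")
    return math.degrees(2.0 * math.atan(sensor_dim_mm / (2.0 * focal_length_mm)))


# Horizontal angle of view for a 36 mm wide sensor behind a 50 mm lens.
horizontal_aov = angle_of_view_deg(36.0, 50.0)
```

Passing the sensor width, height, or diagonal gives the horizontal, vertical, or diagonal angle of view respectively.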

Infrared thermography (IRT), also called thermal video or thermal imaging, is a process in which a thermal camera captures and creates an image of an object using the infrared radiation emitted by that object. It is an example of infrared imaging science. Thermographic cameras usually detect radiation in the long-infrared range of the electromagnetic spectrum and produce images of that radiation, called thermograms. Since infrared radiation is emitted by all objects with a temperature above absolute zero according to the black body radiation law, thermography makes it possible to see one's environment with or without visible illumination. The amount of radiation emitted by an object increases with temperature; therefore, thermography allows one to see variations in temperature. When viewed through a thermal imaging camera, warm objects stand out well against cooler backgrounds; humans and other warm-blooded animals become easily visible against the environment, day or night. As a result, thermography is particularly useful to the military and other users of surveillance cameras.
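The dependence of emitted radiation on temperature can be made concrete with the Stefan-Boltzmann law, M = εσT⁴. The sketch below is an idealization: it gives the total power radiated per unit area over all wavelengths, whereas a real thermal camera responds only to a limited infrared band. The function name and the example temperatures are illustrative assumptions.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W m^-2 K^-4


def radiant_exitance(temperature_k: float, emissivity: float = 1.0) -> float:
    """Total radiated power per unit area (W/m^2) for a gray body.

    emissivity = 1.0 corresponds to an ideal black body.
    """
    if temperature_k < 0.0:
        raise ValueError("temperature must be in kelvin and non-negative")
    if not 0.0 <= emissivity <= 1.0:
        raise ValueError("emissivity must lie in [0, 1]")
    return emissivity * STEFAN_BOLTZMANN * temperature_k ** 4


# Illustrative comparison: a warm surface (310 K) against a cooler one (293 K).
warm = radiant_exitance(310.0)
cool = radiant_exitance(293.0)
contrast_ratio = warm / cool
```

Because the emitted power grows with the fourth power of temperature, even a modest temperature difference produces a measurable contrast in radiated power.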

Motion capture is the process of recording the movement of objects or people. It is used in military, entertainment, sports, medical applications, and for validation of computer vision and robots. In filmmaking and video game development, it refers to recording actions of human actors and using that information to animate digital character models in 2D or 3D computer animation. When it includes face and fingers or captures subtle expressions, it is often referred to as performance capture. In many fields, motion capture is sometimes called motion tracking, but in filmmaking and games, motion tracking usually refers more to match moving.

A camera phone is a mobile phone that can capture photographs and often record video using one or more built-in digital cameras. It can also send the resulting image wirelessly and conveniently. The first commercial phone with a color camera was the Kyocera Visual Phone VP-210, released in Japan in May 1999.

Motion detection is the process of detecting a change in the position of an object relative to its surroundings or a change in the surroundings relative to an object. It can be achieved by either mechanical or electronic methods. When it is done by natural organisms, it is called motion perception.
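One common electronic approach is frame differencing, which compares consecutive camera frames pixel by pixel. The sketch below is a minimal illustration under stated assumptions: frames are grayscale images stored as lists of rows, and the two thresholds are arbitrary example values, not recommended settings. The function name is hypothetical.

```python
from typing import List

Frame = List[List[int]]  # frame[y][x] is a grayscale value, e.g. 0..255


def motion_detected(
    prev_frame: Frame,
    curr_frame: Frame,
    pixel_threshold: int = 25,
    min_changed_fraction: float = 0.01,
) -> bool:
    """Report motion when enough pixels changed by more than pixel_threshold."""
    changed = 0
    total = 0
    for prev_row, curr_row in zip(prev_frame, curr_frame):
        for prev_px, curr_px in zip(prev_row, curr_row):
            total += 1
            if abs(curr_px - prev_px) > pixel_threshold:
                changed += 1
    return total > 0 and changed / total >= min_changed_fraction
```

The per-pixel threshold suppresses small fluctuations such as sensor noise, while the fraction threshold avoids triggering on a handful of isolated pixels.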

A digital single-lens reflex camera is a digital camera that combines the optics and mechanisms of a single-lens reflex camera with a solid-state image sensor and digitally records the images from the sensor.

Image noise is random variation of brightness or color information in images, and is usually an aspect of electronic noise. It can be produced by the image sensor and circuitry of a scanner or digital camera. Image noise can also originate in film grain and in the unavoidable shot noise of an ideal photon detector. Image noise is an undesirable by-product of image capture that obscures the desired information. Typically the term “image noise” is used to refer to noise in 2D images, not 3D images.
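Shot noise is commonly modeled with Poisson statistics, where the standard deviation of the photon count equals the square root of its mean. The sketch below simulates that model with the standard library only, using Knuth's multiplication method as the sampler. The function names, the mean of 100 photons, and the sample count are illustrative assumptions.

```python
import math
import random
import statistics
from typing import List


def sample_poisson(mean: float, rng: random.Random) -> int:
    """Draw one Poisson-distributed count using Knuth's multiplication method."""
    limit = math.exp(-mean)
    count = 0
    product = rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count


def simulate_shot_noise(mean_photons: float, n_samples: int, seed: int = 0) -> List[int]:
    """Simulate photon counts for one pixel under constant illumination."""
    rng = random.Random(seed)
    return [sample_poisson(mean_photons, rng) for _ in range(n_samples)]


counts = simulate_shot_noise(mean_photons=100.0, n_samples=5000)
measured_mean = statistics.mean(counts)
measured_std = statistics.pstdev(counts)      # expected to be close to sqrt(100)
signal_to_noise = measured_mean / measured_std
```

Under this model the signal-to-noise ratio grows only as the square root of the collected light, which is why shot noise cannot be avoided even with an ideal detector.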

An image sensor or imager is a sensor that detects and conveys information used to form an image. It does so by converting the variable attenuation of light waves into signals, small bursts of current that convey the information. The waves can be light or other electromagnetic radiation. Image sensors are used in electronic imaging devices of both analog and digital types, which include digital cameras, camera modules, camera phones, optical mouse devices, medical imaging equipment, night vision equipment such as thermal imaging devices, radar, sonar, and others. As technology changes, electronic and digital imaging tends to replace chemical and analog imaging.

The following are common definitions related to the machine vision field.

Image stabilization (IS) is a family of techniques that reduce blurring associated with the motion of a camera or other imaging device during exposure.

A time-of-flight camera, also known as time-of-flight sensor, is a range imaging camera system for measuring distances between the camera and the subject for each point of the image based on time-of-flight, the round trip time of an artificial light signal, as provided by a laser or an LED. Laser-based time-of-flight cameras are part of a broader class of scannerless LIDAR, in which the entire scene is captured with each laser pulse, as opposed to point-by-point with a laser beam such as in scanning LIDAR systems. Time-of-flight camera products for civil applications began to emerge around 2000, as the semiconductor processes allowed the production of components fast enough for such devices. The systems cover ranges of a few centimeters up to several kilometers.
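The underlying distance calculation is simple: the light travels to the subject and back, so the distance is half the round-trip time multiplied by the speed of light. The sketch below assumes a direct (pulsed) measurement in vacuum or air; the function name is hypothetical, and phase-based sensors use a different computation.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_s: float) -> float:
    """Distance in meters to the subject, given the round-trip time in seconds."""
    if round_trip_s < 0.0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


# A 20 ns round trip corresponds to a subject about 3 m away.
distance_m = distance_from_round_trip(20e-9)
```

The example shows why fast components are required: resolving centimeter-scale distances means resolving round-trip time differences on the order of tens of picoseconds.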

In computing, an input device is a piece of equipment used to provide data and control signals to an information processing system, such as a computer or information appliance. Examples of input devices include keyboards, computer mice, scanners, cameras, joysticks, and microphones.

The Nikon Expeed image/video processors are media processors for Nikon's digital cameras. They perform a large number of tasks: Bayer filtering, demosaicing, image sensor corrections/dark-frame subtraction, image noise reduction, image sharpening, image scaling, gamma correction, image enhancement/Active D-Lighting, colorspace conversion, chroma subsampling, framerate conversion, lens distortion/chromatic aberration correction, image compression/JPEG encoding, video compression, display/video interface driving, digital image editing, face detection, audio processing/compression/encoding and computer data storage/data transmission.

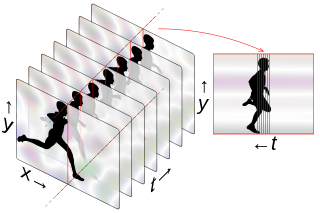

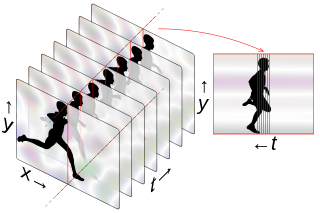

Strip photography, or slit photography, is a photographic technique of capturing a two-dimensional image as a sequence of one-dimensional images over time, in contrast to a normal photo, which is a single two-dimensional image at one point in time. A moving scene is recorded, over a period of time, using a camera that observes a narrow strip rather than the full field. If the subject is moving through this observed strip at constant speed, it will appear in the finished photo as a visible object. Stationary objects, like the background, will be the same the whole way across the photo and appear as stripes along the time axis.
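The construction can be emulated digitally by taking the same single column from each frame of a video and placing those columns side by side. The sketch below assumes grayscale frames stored as lists of rows; the function name and the `slit_x` parameter are hypothetical.

```python
from typing import List

Frame = List[List[int]]  # frame[y][x] is a grayscale pixel value


def strip_photo(frames: List[Frame], slit_x: int) -> Frame:
    """Build an image whose column t is the slit column of frame t.

    The horizontal axis of the result is time, not space.
    """
    if not frames:
        return []
    height = len(frames[0])
    return [[frame[y][slit_x] for frame in frames] for y in range(height)]
```

With a static scene every output column is identical, so each row is constant across the image. These are the stripes along the time axis described above.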