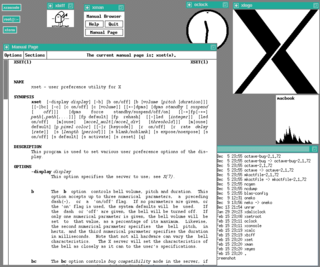

The graphical user interface (GUI) is a form of user interface that allows users to interact with electronic devices through graphical icons and visual indicators such as secondary notation, rather than through text-based user interfaces, typed command labels or text navigation. GUIs were introduced in reaction to the perceived steep learning curve of command-line interfaces (CLIs), which require commands to be typed on a computer keyboard.

In computing, a visual programming language (VPL) is any programming language that lets users create programs by manipulating program elements graphically rather than by specifying them textually. A VPL allows programming with visual expressions and spatial arrangements of text and graphic symbols, used either as elements of syntax or as secondary notation. For example, many VPLs are based on the idea of "boxes and arrows", where boxes or other screen objects are treated as entities, connected by arrows, lines or arcs which represent relations.
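The "boxes and arrows" idea can be sketched in ordinary code as a small dataflow graph. The example below is only an illustration under assumed names (`boxes`, `arrows`, `run` are hypothetical, not part of any particular VPL): each box is a function, each arrow says whose output feeds which box, and the boxes are evaluated in dependency order.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical "boxes": each box is a named function over its inputs.
boxes = {
    "a": lambda: 2,
    "b": lambda: 3,
    "sum": lambda x, y: x + y,
    "double": lambda x: 2 * x,
}

# "Arrows": for each box, the ordered list of boxes whose outputs feed it.
arrows = {
    "a": [],
    "b": [],
    "sum": ["a", "b"],
    "double": ["sum"],
}

def run(boxes, arrows):
    """Evaluate every box after all the boxes that point to it."""
    results = {}
    for name in TopologicalSorter(arrows).static_order():
        inputs = [results[src] for src in arrows[name]]
        results[name] = boxes[name](*inputs)
    return results

results = run(boxes, arrows)
print(results["double"])  # (2 + 3) * 2
```

A graphical editor would let the user draw the same graph on screen; the textual dictionaries here stand in for that drawing.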

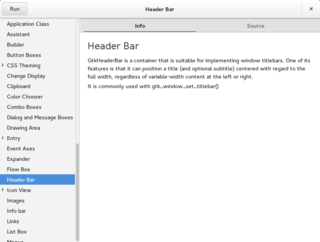

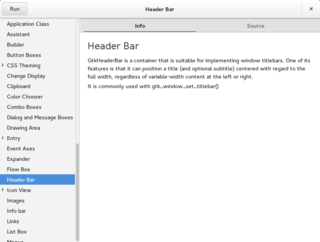

A control element in a graphical user interface is an element of interaction, such as a button or a scroll bar. Controls are software components that a computer user interacts with through direct manipulation to read or edit information about an application. User interface libraries such as Windows Presentation Foundation, GTK, and Cocoa contain a collection of controls and the logic to render them.

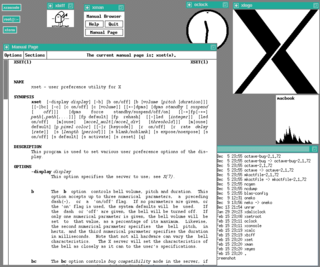

In computing, a shell is a user interface for access to an operating system's services. In general, operating system shells use either a command-line interface (CLI) or graphical user interface (GUI), depending on a computer's role and particular operation. It is named a shell because it is the outermost layer around the operating system kernel.
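As a rough illustration of the command-line variant, the sketch below interprets a single typed line and forwards it to operating system services through Python's `os` module. The function name `execute` and the three supported commands are assumptions chosen for the example; a real shell also handles process creation, pipes, environment variables and much more.

```python
import os
import shlex

def execute(line):
    """Interpret one command line and return its output as text."""
    args = shlex.split(line)  # split like a shell, honouring quotes
    if not args:
        return ""
    command, rest = args[0], args[1:]
    if command == "pwd":
        # Ask the operating system for the current working directory.
        return os.getcwd()
    if command == "ls":
        # List a directory (default: the current one), sorted by name.
        return "\n".join(sorted(os.listdir(rest[0] if rest else ".")))
    if command == "echo":
        return " ".join(rest)
    return f"{command}: command not found"

print(execute("echo hello from a tiny shell"))
```

Wrapping `execute` in a loop that reads lines with `input()` would give an interactive prompt.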

Orange is an open-source data visualization, machine learning and data mining toolkit. It features a visual programming front-end for explorative data analysis and interactive data visualization, and can also be used as a Python library.

ITK is a cross-platform, open-source application development framework widely used for the development of image segmentation and image registration programs. Segmentation is the process of identifying and classifying data found in a digitally sampled representation. Typically the sampled representation is an image acquired from such medical instrumentation as CT or MRI scanners. Registration is the task of aligning or developing correspondences between data. For example, in the medical environment, a CT scan may be aligned with an MRI scan in order to combine the information contained in both.
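The two tasks can be illustrated with a deliberately simple sketch in plain Python. This is not ITK's API: the functions `segment`, `shift` and `register` are hypothetical, the images are tiny made-up intensity grids, segmentation is reduced to thresholding, and registration is reduced to a brute-force search over horizontal shifts.

```python
def segment(image, threshold):
    """Label each pixel 1 (foreground) or 0 (background) by intensity."""
    return [[1 if px >= threshold else 0 for px in row] for row in image]

def shift(image, dx):
    """Shift every row dx pixels to the right, padding with zeros."""
    width = len(image[0])
    out = []
    for row in image:
        padded = [0] * width + list(row) + [0] * width
        start = width - dx
        out.append(padded[start:start + width])
    return out

def register(fixed, moving, max_shift):
    """Return the horizontal shift of `moving` that best matches `fixed`."""
    def mismatch(a, b):
        # Sum of squared intensity differences over all pixels.
        return sum((x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb))
    return min(range(-max_shift, max_shift + 1),
               key=lambda dx: mismatch(fixed, shift(moving, dx)))

# Made-up images: the same bright block, offset by one pixel.
fixed = [[0, 0, 9, 9, 0], [0, 0, 9, 9, 0]]
moving = [[0, 9, 9, 0, 0], [0, 9, 9, 0, 0]]

mask = segment(fixed, threshold=5)
best_dx = register(fixed, moving, max_shift=2)
print(mask, best_dx)
```

Production registration typically optimizes richer transforms (rotation, scaling, deformation) with a similarity metric suited to the modalities involved, which this sketch does not attempt.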

NeuroSolutions is a neural network development environment developed by NeuroDimension. It combines a modular, icon-based (component-based) network design interface with an implementation of advanced learning procedures, such as conjugate gradients, Levenberg-Marquardt and backpropagation through time. The software is used to design, train and deploy neural network models to perform a wide variety of tasks such as data mining, classification, function approximation, multivariate regression and time-series prediction.

In software engineering, graphical user interface testing is the process of testing a product's graphical user interface to ensure it meets its specifications. This is normally done through the use of a variety of test cases.
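A minimal sketch of such test cases is shown below, using Python's standard `unittest` module. The `CounterWindow` class is a hypothetical, headless stand-in for a real window with a label and two buttons; actual GUI tests would usually drive a real toolkit or an automation tool instead.

```python
import unittest

class CounterWindow:
    """Hypothetical stand-in for a window with a label and two buttons."""

    def __init__(self):
        self.count = 0

    @property
    def label_text(self):
        return f"Count: {self.count}"

    def click_increment(self):
        self.count += 1

    def click_reset(self):
        self.count = 0

class CounterWindowTest(unittest.TestCase):
    """Each test case checks one item of the (assumed) specification."""

    def setUp(self):
        self.window = CounterWindow()

    def test_initial_label(self):
        self.assertEqual(self.window.label_text, "Count: 0")

    def test_increment_updates_label(self):
        self.window.click_increment()
        self.window.click_increment()
        self.assertEqual(self.window.label_text, "Count: 2")

    def test_reset_returns_to_zero(self):
        self.window.click_increment()
        self.window.click_reset()
        self.assertEqual(self.window.label_text, "Count: 0")

# Run the test cases and collect the outcome.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(CounterWindowTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```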

Shogun is a free, open-source machine learning software library written in C++. It offers numerous algorithms and data structures for machine learning problems, along with interfaces for Octave, Python, R, Java, Lua, Ruby and C# generated using SWIG.

VisIt is an open-source interactive parallel visualization and graphical analysis tool for viewing scientific data. It can be used to visualize scalar and vector fields defined on 2D and 3D structured and unstructured meshes. VisIt was designed to handle very large data sets in the terascale range, yet it can also handle small data sets in the kilobyte range.

KNIME, the Konstanz Information Miner, is a free and open-source data analytics, reporting and integration platform. KNIME integrates various components for machine learning and data mining through its modular data pipelining concept. A graphical user interface and the use of JDBC allow the assembly of nodes that blend different data sources, including preprocessing, for modeling, data analysis and visualization with little or no programming.
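The modular pipelining idea can be approximated in a few lines of Python. The sketch below is not KNIME's API: the node functions (`concatenate`, `drop_missing`, `mean_value`), the `pipeline` helper and the two in-memory data sources are all made up for illustration. Each node takes the previous node's output, which is what connecting nodes in a graphical workflow editor expresses visually.

```python
from functools import reduce

# Two made-up data sources to be blended.
source_a = [{"id": 1, "value": 10.0}, {"id": 2, "value": None}]
source_b = [{"id": 3, "value": 30.0}]

def concatenate(sources):
    """Blending node: merge the rows of several sources into one table."""
    return [row for source in sources for row in source]

def drop_missing(rows):
    """Preprocessing node: discard rows that contain missing values."""
    return [row for row in rows if all(v is not None for v in row.values())]

def mean_value(rows):
    """Analysis node: average the 'value' column."""
    return sum(row["value"] for row in rows) / len(rows)

def pipeline(*nodes):
    """Chain nodes so each one receives the previous node's output."""
    return lambda data: reduce(lambda acc, node: node(acc), nodes, data)

workflow = pipeline(concatenate, drop_missing, mean_value)
result = workflow([source_a, source_b])
print(result)
```

Because every node has the same shape (one input, one output), nodes can be reordered or swapped without touching the others.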

Liquid XML Studio IDE is a Windows-based XML editor and XML data binding toolkit. It includes graphical editors for authoring XML documents, XML Schema, WSDL documents, XSLT documents and HTML documents. It also includes a user interface extension to Microsoft Visual Studio through the Visual Studio Industry Partner (VSIP) program.

Turi, originally known as GraphLab, is a graph-based, high-performance, distributed computation framework written in C++. The GraphLab project was started by Prof. Carlos Guestrin of Carnegie Mellon University in 2009. It is an open-source project released under an Apache License. Although GraphLab was originally developed for machine learning tasks, it has also been applied to a broad range of other data-mining tasks, where it has been reported to outperform other abstractions by orders of magnitude.

pSeven is a design space exploration software platform developed by DATADVANCE that extends design, simulation and analysis capabilities and is intended to support faster, better-informed design decisions. It integrates with third-party CAD and CAE software tools and provides multi-objective and robust optimization algorithms, data analysis, and uncertainty quantification tools.

Automated machine learning (AutoML) is the process of automating, end to end, the application of machine learning to real-world problems. In a typical machine learning application, practitioners have a dataset of input data points to train on. The raw data may not be in a form that every algorithm can use out of the box, so an expert may have to apply appropriate data pre-processing, feature engineering, feature extraction, and feature selection methods to make the dataset amenable to machine learning. Following those preprocessing steps, practitioners must then perform algorithm selection and hyperparameter optimization to maximize the predictive performance of their final model. Because many of these steps are often beyond the abilities of non-experts, AutoML was proposed as an artificial intelligence-based solution to the ever-growing challenge of applying machine learning. Automating the process end to end offers the advantages of simpler solutions, faster creation of those solutions, and models that often outperform hand-designed ones. However, AutoML is not a silver bullet: it can introduce additional parameters of its own, called hyperhyperparameters, which may themselves require some expertise to set. It does, nonetheless, make machine learning easier for non-experts to apply.
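The algorithm selection and hyperparameter optimization steps can be sketched as a random search over a small search space. Everything below is an assumption made for illustration: the toy one-dimensional dataset, the two candidate "algorithms" (a k-nearest-neighbour vote and a fixed cut-off, the latter having nothing to learn from the training data), and the names `search_space` and `random_search`. Real AutoML systems use far larger search spaces and smarter search strategies. Note that the number of trials and the random seed are themselves settings of the search, in the spirit of the hyperhyperparameters mentioned above.

```python
import random

# Toy 1-D dataset (made up): the label is 1 when x > 5.
train = [(x, int(x > 5)) for x in [0.5, 1.5, 2.5, 3.5, 4.5, 5.5, 6.5, 7.5, 8.5, 9.5]]
valid = [(x, int(x > 5)) for x in [1.0, 3.0, 4.8, 5.2, 7.0, 9.0]]

def knn_predict(x, k):
    """Majority vote among the k training points nearest to x."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    votes = sum(label for _, label in nearest)
    return int(2 * votes > k)

def threshold_predict(x, cut):
    """Predict 1 when x exceeds a fixed cut-off."""
    return int(x > cut)

# Search space: each algorithm paired with a sampler for its hyperparameter.
search_space = {
    "knn": (knn_predict, lambda rng: rng.choice([1, 3, 5, 7])),
    "threshold": (threshold_predict, lambda rng: rng.uniform(0.0, 10.0)),
}

def accuracy(predict, param):
    """Fraction of validation points classified correctly."""
    hits = sum(predict(x, param) == y for x, y in valid)
    return hits / len(valid)

def random_search(n_trials, seed=0):
    """Sample (algorithm, hyperparameter) pairs and keep the best scorer."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        name = rng.choice(sorted(search_space))
        predict, sample = search_space[name]
        param = sample(rng)
        trials.append((accuracy(predict, param), name, param))
    return max(trials, key=lambda t: t[0]), trials

best, trials = random_search(n_trials=20)
print(best)  # (validation accuracy, algorithm name, hyperparameter)
```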