In statistics, the non-central chi-squared distribution with zero degrees of freedom can be used in testing the null hypothesis that a sample is from a uniform distribution on the interval (0, 1). This distribution was introduced by Andrew F. Siegel in 1979. [1]




The chi-squared distribution with n degrees of freedom is the probability distribution of the sum

$$X_1^2 + \cdots + X_n^2$$

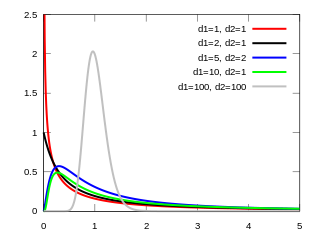



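where $X_1, \ldots, X_n$ are independent and identically distributed standard normal random variables, $X_i \sim N(0, 1)$.

However, if $X_i \sim N(\mu_i, 1)$ and $X_1, \ldots, X_n$ are independent, then the sum of squares above has a noncentral chi-squared distribution with n degrees of freedom and "noncentrality parameter"

$$\mu_1^2 + \cdots + \mu_n^2.$$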
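The sum-of-squares construction above can be checked by simulation. The following is a minimal sketch using only the Python standard library; the function names, the example means, and the seed are illustrative choices rather than anything from the source. It relies on the standard fact that a normal variable with mean m and unit variance has expected square 1 + m², so the sum has expected value n plus the noncentrality parameter.

```python
import random


def noncentrality(means):
    """Noncentrality parameter: the sum of the squared means."""
    return sum(m * m for m in means)


def noncentral_chi2_sample(means, rng):
    """One draw of X_1^2 + ... + X_n^2 with independent X_i ~ N(means[i], 1)."""
    return sum(rng.gauss(m, 1.0) ** 2 for m in means)


rng = random.Random(0)            # fixed seed, chosen arbitrarily for repeatability
means = [1.0, -2.0, 0.5]          # hypothetical means, so n = 3
lam = noncentrality(means)        # 1 + 4 + 0.25 = 5.25

draws = [noncentral_chi2_sample(means, rng) for _ in range(100_000)]
sample_mean = sum(draws) / len(draws)

# The sample mean should be close to n + lam = 8.25.
print(lam, len(means) + lam, sample_mean)
```

Setting every mean to zero recovers the central chi-squared case, where the noncentrality parameter vanishes.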

It is trivial that a "central" chi-squared distribution with zero degrees of freedom concentrates all probability at zero, since the sum of squares is then empty.

All of this leaves open the question of what happens with zero degrees of freedom when the noncentrality parameter is not zero.

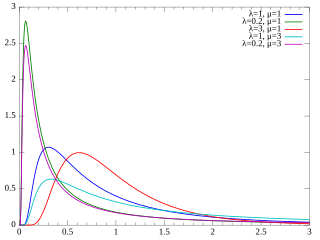

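The noncentral chi-squared distribution with zero degrees of freedom and with noncentrality parameter μ is the distribution of

$$X = \chi^2_{2K} \quad \text{with} \quad K \sim \operatorname{Poisson}\!\left(\tfrac{\mu}{2}\right),$$

that is, a chi-squared random variable whose number of degrees of freedom, $2K$, is itself random.

This concentrates probability $e^{-\mu/2}$ at zero, because $K = 0$ occurs with that probability and a chi-squared variable with zero degrees of freedom is identically zero; thus it is a mixture of discrete and continuous distributions.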
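The point mass at zero can be illustrated with a short simulation. This is a sketch under stated assumptions, not a reference implementation: it assumes the Poisson-mixture representation of the distribution (a chi-squared variable with 2K degrees of freedom, K Poisson with mean μ/2), it uses the standard fact that a chi-squared variable with 2k degrees of freedom is a sum of k independent exponential variables with mean 2, and the helper names, the value of μ, and the seed are hypothetical.

```python
import math
import random


def poisson_sample(rate, rng):
    """Draw from Poisson(rate) by multiplying uniforms (adequate for small rates)."""
    threshold = math.exp(-rate)
    k = 0
    prod = rng.random()
    while prod > threshold:
        k += 1
        prod *= rng.random()
    return k


def noncentral_chi2_zero_df(mu, rng):
    """One draw from the zero-degrees-of-freedom distribution with noncentrality mu."""
    k = poisson_sample(mu / 2.0, rng)
    # Chi-squared with 2k degrees of freedom: sum of k exponentials with mean 2.
    # When k == 0 the sum is empty, so the draw is exactly 0.
    return sum(rng.expovariate(0.5) for _ in range(k))


rng = random.Random(1)            # fixed seed, chosen arbitrarily for repeatability
mu = 3.0                          # hypothetical noncentrality parameter
draws = [noncentral_chi2_zero_df(mu, rng) for _ in range(100_000)]

frac_zero = sum(d == 0 for d in draws) / len(draws)

# The fraction of exact zeros should be close to exp(-mu / 2).
print(frac_zero, math.exp(-mu / 2.0))
```

The remaining draws are strictly positive and spread over a continuum, which is the continuous part of the mixture.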