Phonetics is a branch of linguistics that studies the sounds of human speech, or—in the case of sign languages—the equivalent aspects of sign. It is concerned with the physical properties of speech sounds or signs (phones): their physiological production, acoustic properties, auditory perception, and neurophysiological status. Phonology, on the other hand, is concerned with the abstract, grammatical characterization of systems of sounds or signs.

Theoretical linguistics, or general linguistics, is the branch of linguistics that inquires into the nature of language itself and seeks to answer fundamental questions such as what language is; how it works; how universal grammar (UG), as a domain-specific mental organ, operates, if it exists at all; what its unique properties are; and how language relates to other cognitive processes. Theoretical linguists are most concerned with constructing models of linguistic knowledge and, ultimately, with developing a linguistic theory.

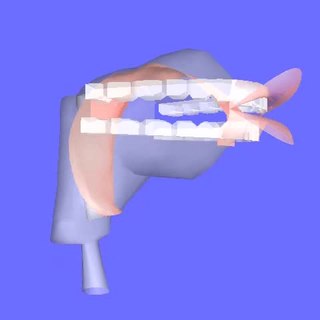

Articulatory phonetics is a subfield of phonetics. In studying articulation, phoneticians explain how humans produce speech sounds through the interaction of different physiological structures.

In linguistics, a segment is "any discrete unit that can be identified, either physically or auditorily, in the stream of speech". The term is most often used in phonetics and phonology to refer to the smallest elements in a language, and in this usage it can be synonymous with the term phone.

Coarticulation in its general sense refers to a situation in which a conceptually isolated speech sound is influenced by, and becomes more like, a preceding or following speech sound. There are two types of coarticulation: anticipatory coarticulation, when a feature or characteristic of a speech sound is anticipated (assumed) during the production of a preceding speech sound; and carryover or perseverative coarticulation, when the effects of a sound are seen during the production of sound(s) that follow. Many models have been developed to account for coarticulation. They include the look-ahead, articulatory syllable, time-locked, window, coproduction and articulatory phonology models.
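The difference between the two directions can be illustrated with a toy numeric sketch. It is not an implementation of any of the models listed above. It assumes that each segment has a single target value on one articulatory dimension (here a hypothetical velum-opening scale from 0.0, closed, to 1.0, open) and that a segment's realized value is pulled toward its neighbors' targets by two assumed weights, `anticipatory` and `carryover`; the function name is likewise hypothetical.

```python
from typing import List


def coarticulate(targets: List[float], anticipatory: float = 0.3,
                 carryover: float = 0.3) -> List[float]:
    """Toy model: pull each segment's target toward its neighbors' targets.

    targets: one hypothetical articulatory value per segment.
    anticipatory: weight of the *following* segment (its influence spreads backward).
    carryover: weight of the *preceding* segment (its influence spreads forward).
    """
    realized = []
    for i, own in enumerate(targets):
        value = own
        if i + 1 < len(targets):  # anticipatory coarticulation
            value += anticipatory * (targets[i + 1] - own)
        if i > 0:                 # carryover (perseverative) coarticulation
            value += carryover * (targets[i - 1] - own)
        realized.append(value)
    return realized
```

With `anticipatory=0.3` and `carryover=0.0`, the oral vowel in a /bæn/-like sequence (`[0.0, 0.0, 1.0]`) comes out at 0.3, partly nasalized in anticipation of the following nasal. Swapping the weights for an /n/-vowel-/b/ sequence (`[1.0, 0.0, 0.0]`) gives `[1.0, 0.3, 0.0]`, where the nasality instead carries over from the preceding consonant.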

The Multimodal Interaction Activity is a W3C initiative that aims to provide the means to support multimodal interaction scenarios on the Web.

Speech perception is the process by which the sounds of language are heard, interpreted and understood. The study of speech perception is closely linked to phonology and phonetics in linguistics, and to cognitive psychology and perception in psychology. Research in speech perception seeks to understand how human listeners recognize speech sounds and use this information to understand spoken language. Speech perception research has applications in building computer systems that can recognize speech, in improving speech recognition for hearing- and language-impaired listeners, and in foreign-language teaching.

Haskins Laboratories is an independent 501(c) non-profit corporation, founded in 1935 and located in New Haven, Connecticut, since 1970. It is a multidisciplinary, international community of researchers that conducts basic research on spoken and written language. A guiding perspective of its research is to view speech and language as biological processes. The Laboratories has a long history of technological and theoretical innovation, from creating the rules for speech synthesis and the first working prototype of a reading machine for the blind to developing the landmark concept of phonemic awareness as the critical preparation for learning to read.

Articulatory phonology is a linguistic theory originally proposed in 1986 by Catherine Browman of Haskins Laboratories and Louis M. Goldstein of Yale University and Haskins. The theory identifies theoretical discrepancies between phonetics and phonology and aims to unify the two by treating them as low- and high-dimensional descriptions of a single system.
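In articulatory phonology the basic units are articulatory gestures, which Browman and Goldstein model (following Saltzman's task dynamics) as critically damped mass-spring systems that drive a tract variable toward a target. The sketch below simulates a single such gesture. The unit mass, the stiffness value, the step size, and the function name `gesture_trajectory` are illustrative assumptions, and the integrator is a simple semi-implicit Euler scheme rather than the one used in any published model.

```python
import math
from typing import List


def gesture_trajectory(x_start: float, target: float, stiffness: float,
                       duration: float, dt: float = 0.001) -> List[float]:
    """Simulate one gesture as a critically damped mass-spring system.

    Assumes unit mass, so critical damping is b = 2 * sqrt(k):
        x'' = -k * (x - target) - b * x'
    The tract variable starts at rest and is integrated with
    semi-implicit Euler steps of size dt.
    """
    k = stiffness
    b = 2.0 * math.sqrt(k)   # critical damping for unit mass
    x, v = x_start, 0.0      # position and velocity of the tract variable
    trajectory = [x]
    for _ in range(int(round(duration / dt))):
        a = -k * (x - target) - b * v  # spring force plus damping
        v += a * dt                    # update velocity first...
        x += v * dt                    # ...then position with the new velocity
        trajectory.append(x)
    return trajectory
```

For example, `gesture_trajectory(0.0, 1.0, stiffness=400.0, duration=0.5)` rises monotonically toward 1.0 without overshooting it, which is the property that motivates choosing critical damping for gestures.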

Catherine P. Browman (1945–2008) was an American linguist and speech scientist. She was a research scientist at Bell Laboratories in New Jersey and at Haskins Laboratories in New Haven, Connecticut, from which she retired due to illness. While at Bell Laboratories, she was known for her work on speech synthesis using demisyllables. She was best known for developing, with Louis Goldstein, the theory of articulatory phonology, a gesture-based approach to phonological and phonetic structure. The theoretical approach is incorporated in a computational model that generates speech from a gesturally specified lexicon. She received her Ph.D. in linguistics from UCLA in 1978 and was a founding member of the Association for Laboratory Phonology.

Acoustic landmarks and distinctive features is a proposed model of speech perception by Kenneth N. Stevens and his colleagues at MIT.
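Landmarks in this model are points in the signal, such as the abrupt changes at consonant closures and releases, in whose vicinity acoustic cues to distinctive features are sought. The following toy detector only illustrates the idea of locating abrupt landmarks; it is not Stevens's detector. It assumes the signal has already been reduced to one energy value in dB per frame, and the 9 dB threshold and the function name are hypothetical.

```python
from typing import List, Tuple


def abrupt_landmarks(energy_db: List[float],
                     threshold_db: float = 9.0) -> List[Tuple[int, str]]:
    """Return (frame_index, kind) pairs where frame energy changes abruptly.

    kind is "rise" (a candidate release) or "fall" (a candidate closure).
    The default threshold is an illustrative assumption, not a published value.
    """
    landmarks = []
    for i in range(1, len(energy_db)):
        delta = energy_db[i] - energy_db[i - 1]  # frame-to-frame change in dB
        if delta >= threshold_db:
            landmarks.append((i, "rise"))
        elif delta <= -threshold_db:
            landmarks.append((i, "fall"))
    return landmarks
```

For the frame energies `[60, 61, 40, 40, 62, 63]` it reports a fall at frame 2 and a rise at frame 4, the pattern a stop closure and release between two vowels might produce.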

An acoustic model is used in automatic speech recognition to represent the relationship between an audio signal and the phonemes or other linguistic units that make up speech. The model is learned from a set of audio recordings of speech and their corresponding text transcriptions, from which software creates statistical representations of the sounds that make up each word.
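As a much-simplified sketch of what such statistical representations can look like, the code below fits one diagonal Gaussian per phone label and scores new frames against each. It assumes the recordings have already been converted into fixed-length feature vectors and aligned frame by frame with phone labels derived from the transcripts. Practical recognizers typically use hidden Markov models with Gaussian mixtures or neural networks instead, and the function names here are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Frame = Sequence[float]
Model = Dict[str, Tuple[List[float], List[float]]]  # phone -> (means, variances)


def train_gaussian_model(labelled: List[Tuple[str, Frame]],
                         var_floor: float = 1e-3) -> Model:
    """Fit one diagonal Gaussian per phone from (phone, feature frame) pairs."""
    by_phone: Dict[str, List[Frame]] = defaultdict(list)
    for phone, frame in labelled:
        by_phone[phone].append(frame)
    model: Model = {}
    for phone, frames in by_phone.items():
        n, dims = len(frames), len(frames[0])
        means = [sum(f[d] for f in frames) / n for d in range(dims)]
        # Variance floor avoids division by zero for constant dimensions.
        variances = [max(var_floor, sum((f[d] - means[d]) ** 2 for f in frames) / n)
                     for d in range(dims)]
        model[phone] = (means, variances)
    return model


def log_likelihood(frame: Frame, means: List[float], variances: List[float]) -> float:
    """Log density of a frame under a diagonal Gaussian."""
    return sum(-0.5 * (math.log(2 * math.pi * v) + (x - m) ** 2 / v)
               for x, m, v in zip(frame, means, variances))


def classify(frame: Frame, model: Model) -> str:
    """Return the phone whose Gaussian assigns the frame the highest likelihood."""
    return max(model, key=lambda phone: log_likelihood(frame, *model[phone]))
```

Training on a few labeled two-dimensional frames per phone and then calling `classify` on an unseen frame returns the label whose fitted distribution makes that frame most probable; a full recognizer would combine such frame scores over time with a pronunciation lexicon and a language model.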

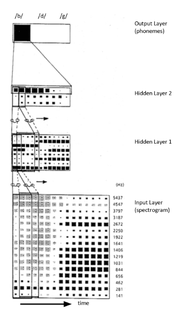

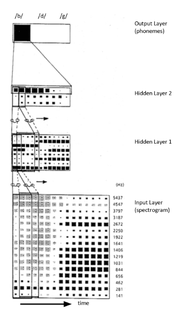

Time delay neural network (TDNN) is a multilayer artificial neural network architecture whose purpose is to 1) classify patterns with shift-invariance, and 2) model context at each layer of the network.
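A time-delay layer can be read as a one-dimensional convolution over time: each output frame is computed from a fixed window of consecutive input frames, and the same weights are reused at every time step, which is what makes the layer shift-invariant. The sketch below assumes plain Python lists, a window without padding, and a tanh nonlinearity; these choices, and the function name `tdnn_layer`, are illustrative rather than taken from the original TDNN.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]  # rows = output units, columns = input features


def tdnn_layer(frames: Sequence[Sequence[float]],
               weights: List[Matrix],   # one weight matrix per delay offset
               bias: Sequence[float]) -> List[List[float]]:
    """Apply one time-delay layer whose weights are shared across time.

    Output frame t sees input frames t, t+1, ..., t+len(weights)-1, so the
    output has len(frames) - len(weights) + 1 frames (no padding).
    """
    context = len(weights)
    out = []
    for t in range(len(frames) - context + 1):
        y = list(bias)
        for d, w in enumerate(weights):   # same w at every t: weight sharing
            x = frames[t + d]
            for j, row in enumerate(w):
                y[j] += sum(wi * xi for wi, xi in zip(row, x))
        out.append([math.tanh(v) for v in y])  # assumed tanh nonlinearity
    return out
```

Delaying the input sequence by one frame delays the layer's output by one frame without changing its values, so a pattern is detected in the same way wherever it occurs in time; stacking several such layers widens the context each higher unit sees.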

Deep learning is part of a broader family of machine learning methods based on learning data representations, as opposed to task-specific algorithms. Learning can be supervised, semi-supervised or unsupervised.

Neurocomputational speech processing is the computer simulation of speech production and speech perception with reference to the natural neuronal processes that underlie them in the human nervous system. The topic draws on neuroscience and computational neuroscience.

Georg Heike is a German phonetician and linguist.

Bernd J. Kröger is a German phonetician and professor at RWTH Aachen University. He is known for his contributions to the field of neurocomputational speech processing, in particular the ACT model.