Related Research Articles

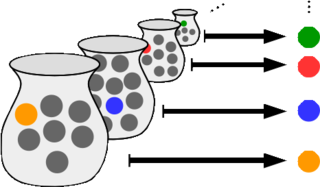

In mathematics, the axiom of choice, or AC, is an axiom of set theory equivalent to the statement that a Cartesian product of a collection of non-empty sets is non-empty. Informally put, the axiom of choice says that given any collection of bins, each containing at least one object, it is possible to construct a set by arbitrarily choosing one object from each bin, even if the collection is infinite. Formally, it states that for every indexed family of nonempty sets, there exists an indexed set such that for every . The axiom of choice was formulated in 1904 by Ernst Zermelo in order to formalize his proof of the well-ordering theorem.

In algorithmic information theory, the Kolmogorov complexity of an object, such as a piece of text, is the length of a shortest computer program that produces the object as output. It is a measure of the computational resources needed to specify the object, and is also known as algorithmic complexity, Solomonoff–Kolmogorov–Chaitin complexity, program-size complexity, descriptive complexity, or algorithmic entropy. It is named after Andrey Kolmogorov, who first published on the subject in 1963.

Automated theorem proving is a subfield of automated reasoning and mathematical logic dealing with proving mathematical theorems by computer programs. Automated reasoning over mathematical proof was a major impetus for the development of computer science.

The P versus NP problem is a major unsolved problem in computer science. It asks whether every problem whose solution can be quickly verified can also be solved quickly.

Computational complexity theory focuses on classifying computational problems according to their resource usage, and relating these classes to each other. A computational problem is a task solved by a computer. A computation problem is solvable by mechanical application of mathematical steps, such as an algorithm.

Gödel's completeness theorem is a fundamental theorem in mathematical logic that establishes a correspondence between semantic truth and syntactic provability in first-order logic.

In mathematics, a theorem is a statement that has been proved, or can be proved. The proof of a theorem is a logical argument that uses the inference rules of a deductive system to establish that the theorem is a logical consequence of the axioms and previously proved theorems.

Gödel's incompleteness theorems are two theorems of mathematical logic that are concerned with the limits of provability in formal axiomatic theories. These results, published by Kurt Gödel in 1931, are important both in mathematical logic and in the philosophy of mathematics. The theorems are widely, but not universally, interpreted as showing that Hilbert's program to find a complete and consistent set of axioms for all mathematics is impossible.

A mathematical proof is an inferential argument for a mathematical statement, showing that the stated assumptions logically guarantee the conclusion. The argument may use other previously established statements, such as theorems; but every proof can, in principle, be constructed using only certain basic or original assumptions known as axioms, along with the accepted rules of inference. Proofs are examples of exhaustive deductive reasoning which establish logical certainty, to be distinguished from empirical arguments or non-exhaustive inductive reasoning which establish "reasonable expectation". Presenting many cases in which the statement holds is not enough for a proof, which must demonstrate that the statement is true in all possible cases. A proposition that has not been proved but is believed to be true is known as a conjecture, or a hypothesis if frequently used as an assumption for further mathematical work.

Proof theory is a major branch of mathematical logic that represents proofs as formal mathematical objects, facilitating their analysis by mathematical techniques. Proofs are typically presented as inductively-defined data structures such as plain lists, boxed lists, or trees, which are constructed according to the axioms and rules of inference of the logical system. Consequently, proof theory is syntactic in nature, in contrast to model theory, which is semantic in nature.

In computer science, program optimization, code optimization, or software optimization, is the process of modifying a software system to make some aspect of it work more efficiently or use fewer resources. In general, a computer program may be optimized so that it executes more rapidly, or to make it capable of operating with less memory storage or other resources, or draw less power.

Reverse mathematics is a program in mathematical logic that seeks to determine which axioms are required to prove theorems of mathematics. Its defining method can briefly be described as "going backwards from the theorems to the axioms", in contrast to the ordinary mathematical practice of deriving theorems from axioms. It can be conceptualized as sculpting out necessary conditions from sufficient ones.

In mathematics, a proof by infinite descent, also known as Fermat's method of descent, is a particular kind of proof by contradiction used to show that a statement cannot possibly hold for any number, by showing that if the statement were to hold for a number, then the same would be true for a smaller number, leading to an infinite descent and ultimately a contradiction. It is a method which relies on the well-ordering principle, and is often used to show that a given equation, such as a Diophantine equation, has no solutions.

In computer science and operations research, approximation algorithms are efficient algorithms that find approximate solutions to optimization problems with provable guarantees on the distance of the returned solution to the optimal one. Approximation algorithms naturally arise in the field of theoretical computer science as a consequence of the widely believed P ≠ NP conjecture. Under this conjecture, a wide class of optimization problems cannot be solved exactly in polynomial time. The field of approximation algorithms, therefore, tries to understand how closely it is possible to approximate optimal solutions to such problems in polynomial time. In an overwhelming majority of the cases, the guarantee of such algorithms is a multiplicative one expressed as an approximation ratio or approximation factor i.e., the optimal solution is always guaranteed to be within a (predetermined) multiplicative factor of the returned solution. However, there are also many approximation algorithms that provide an additive guarantee on the quality of the returned solution. A notable example of an approximation algorithm that provides both is the classic approximation algorithm of Lenstra, Shmoys and Tardos for scheduling on unrelated parallel machines.

In computability theory, a machine that always halts, also called a decider or a total Turing machine, is a Turing machine that eventually halts for every input.

In computer science, an algorithm is said to be asymptotically optimal if, roughly speaking, for large inputs it performs at worst a constant factor worse than the best possible algorithm. It is a term commonly encountered in computer science research as a result of widespread use of big-O notation.

In mathematics, a proof of impossibility, also known as negative proof, proof of an impossibility theorem, or negative result is a proof demonstrating that a particular problem cannot be solved as described in the claim, or that a particular set of problems cannot be solved in general. Proofs of impossibility often put decades or centuries of work attempting to find a solution to rest. To prove that something is impossible is usually much harder than the opposite task, as it is often necessary to develop a theory. Impossibility theorems are usually expressible as negative existential propositions, or universal propositions in logic.

In computability theory and computational complexity theory, an undecidable problem is a decision problem for which it is proved to be impossible to construct an algorithm that always leads to a correct yes-or-no answer. The halting problem is an example: it can be proven that there is no algorithm that correctly determines whether arbitrary programs eventually halt when run.

In computational complexity theory, a problem is NP-complete when:

- it is a problem for which the correctness of each solution can be verified quickly and a brute-force search algorithm can find a solution by trying all possible solutions.

- the problem can be used to simulate every other problem for which we can verify quickly that a solution is correct. In this sense, NP-complete problems are the hardest of the problems to which solutions can be verified quickly. If we could find solutions of some NP-complete problem quickly, we could quickly find the solutions of every other problem to which a given solution can be easily verified.

References

- Solomonoff, Ray, "A Preliminary Report on a General Theory of Inductive Inference", Report V-131, Zator Co., Cambridge, Ma. Feb 4, 1960.

- p. 401, Modern Compiler Implementation in ML, Andrew W. Appel, Cambridge University Press, 1998. ISBN 0-521-58274-1.

- p. 27, Retargetable Compiler Technology for Embedded Systems: Tools and Applications, Rainer Leupers and Peter Marwedel, Springer-Verlag, 2001. ISBN 0-7923-7578-5.

- Notes from a course in Modern Programming Languages at the University of Pennsylvania See p. 8.